- Consume CDS Views from SAP S/4 HANA on-premise int...

Technology Blogs by SAP

09-21-2021

10:21 AM

Overview

The purpose of this blog post is to provide an overview of how to extract CDS views from SAP S/4HANA 2020 on-premise using the ABAP Pipeline Engine (APE) and how to consume them in SAP Data Warehouse Cloud (SAP DWC). We’ll use Cloud Connector to establish the connectivity.

As a first step, we will look at the configurations to be applied in the SAP S/4HANA 2020 system.

In a second step, we’ll describe the required configuration in Cloud Connector for setting up the connection including the enablement of functions necessary for the data extraction from the SAP S/4HANA on-premise source system.

Finally, we’ll create a connection to the SAP S/4HANA 2020 on-premise system in SAP Data Warehouse Cloud and a Data Flow that ingests the data of the CDS view into a deployed SAP Data Warehouse Cloud table.

Before going into further details I would like to thank my colleagues, especially daniel.ingenhaag and christian86 for their valuable inputs.

Prerequisites

General information related to the ABAP integration can be found in SAP Note 2890171, which describes the integration from various SAP systems such as SAP ECC/SLT, SAP S/4HANA and SAP BW. In our case we will focus on the integration of SAP S/4HANA on-premise systems.

The minimum prerequisites to consume CDS views from an SAP S/4HANA on-premise system are as follows:

- An SAP S/4HANA on-premise system on at least SAP S/4HANA 1909 FPS01 (plus the mandatory TCI note 2873666) or a higher SAP S/4HANA version. More information about the SAP S/4HANA 1909 integration, including relevant notes, can be found in SAP Note 2830276. The relevant note for our scenario, which is built on SAP S/4HANA 2020 on-premise, is SAP Note 2943599.

- Cloud Connector version 2.12.x or newer (Download link), installed on a dedicated machine in the on-premise network that has access to the internet, specifically to SAP Business Technology Platform (SAP BTP).

More information about the pre-requisites for ABAP sources can be found in the official Data Warehouse Cloud documentation on help.sap.com: https://help.sap.com/viewer/9f804b8efa8043539289f42f372c4862/cloud/en-US/a75c1aacf951449ba3b740c7e46...
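The minimum versions listed above can be checked with a few lines of code before you start the setup. The following Python sketch is only an illustration: the function names are hypothetical, and it assumes you have looked up the Cloud Connector version and the SAP S/4HANA release and FPS level yourself. It does not query any system.

```python
from typing import Tuple

# Minimum versions taken from the prerequisites above.
MIN_CLOUD_CONNECTOR: Tuple[int, int] = (2, 12)   # Cloud Connector 2.12.x
MIN_S4HANA: Tuple[int, int] = (1909, 1)          # SAP S/4HANA 1909 FPS01


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a dotted version such as '2.12.5' into (2, 12, 5)."""
    return tuple(int(part) for part in text.strip().split("."))


def cloud_connector_ok(version: str) -> bool:
    """True if major.minor of the given version is at least 2.12."""
    return parse_version(version)[:2] >= MIN_CLOUD_CONNECTOR


def s4hana_ok(release: int, fps: int) -> bool:
    """True if (release, FPS level) is at least 1909 FPS01."""
    return (release, fps) >= MIN_S4HANA


if __name__ == "__main__":
    print(cloud_connector_ok("2.12.5"))  # a 2.12.x installation
    print(s4hana_ok(2020, 0))            # the release used in this post
```

Comparing the versions as integer tuples rather than as strings matters here: as plain text, "2.9" would sort after "2.12".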

Configuration steps

SAP S/4HANA ON-PREMISE

The scenario built in this blog post is based on SAP S/4HANA 2020.

The general prerequisites for connecting SAP S/4HANA on-premise with SAP Data Warehouse Cloud can be found here. That page explains in detail the prerequisites, the supported connection types and their properties.

Another important step is the definition and annotation of the CDS views to make them available for consumption. With the annotations, you specify which views are exposed in replication scenarios and are suitable for data replication. You can also enable the generic delta extraction functionality by naming the element that should be used for filtering the data during delta loads.

My colleague martin.boeckling wrote a great blog post on CDS creation and annotation which you can refer to for more details.
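To make the annotation step more concrete, here is a minimal Python sketch that checks a CDS DDL source for the extraction annotations. Everything in the sample DDL is hypothetical (view name, SQL view name, table and field names). The annotation names `@Analytics.dataExtraction.enabled` and `@Analytics.dataExtraction.delta.byElement.name` are the ones I would expect for this purpose; please treat the blog post referenced above and the SAP documentation as the authoritative source. The delta annotation is included only for completeness.

```python
import re
from typing import Optional

# Hypothetical CDS view used purely for illustration.
SAMPLE_DDL = """\
@AbapCatalog.sqlViewName: 'ZSALESDEMO'
@Analytics.dataExtraction.enabled: true
@Analytics.dataExtraction.delta.byElement.name: 'LastChangedAt'
define view Z_Sales_Demo as select from zsales_demo {
  key sales_order     as SalesOrder,
      last_changed_at as LastChangedAt
}
"""

ENABLED = re.compile(r"@Analytics\.dataExtraction\.enabled\s*:\s*true\b")
DELTA_ELEMENT = re.compile(
    r"@Analytics\.dataExtraction\.delta\.byElement\.name\s*:\s*'([^']+)'"
)


def extraction_enabled(ddl: str) -> bool:
    """True if the DDL source marks the view as enabled for extraction."""
    return ENABLED.search(ddl) is not None


def delta_element(ddl: str) -> Optional[str]:
    """Return the element named for generic delta, or None if absent."""
    match = DELTA_ELEMENT.search(ddl)
    return match.group(1) if match else None


if __name__ == "__main__":
    print(extraction_enabled(SAMPLE_DDL))
    print(delta_element(SAMPLE_DDL))
```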

Note: The scenario described in this blog post, which extracts data from SAP S/4HANA via the ABAP Pipeline Engine (APE) into SAP Data Warehouse Cloud, does not currently support delta or real-time data extraction. For details about support for delta replication or real-time replication in SAP Data Warehouse Cloud please check here.

Cloud Connector

Cloud Connector serves as a link between applications in SAP Business Technology Platform and on-premise systems and lets you use existing on-premise assets without exposing the entire internal landscape. It acts as a reverse invoke proxy between the on-premise network and the SAP Business Technology Platform.

For our scenario we followed the detailed configuration steps described in this excellent blog post, which explains how to:

- create a subaccount in the SAP Business Technology Platform

- assign a Cloud_Connector_Administrator role to your administrator user in the SAP Business Technology Platform

- add this subaccount to the Cloud Connector and connect it to the SAP Business Technology Platform

Once you are done with the installation and configuration of Cloud Connector, you can then add a new on-premise system.

Since we want to connect to an SAP S/4HANA on-premise system, let’s select ABAP system.

Add system mapping

Define the protocol to be used (RFC in our case) and maintain the system information (like application server, instance number…)

Enter server application
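The system information collected in this step can be written down as a small data structure. The Python sketch below is an illustration only: the class name and host name are hypothetical, and the port calculation assumes the standard SAP convention in which the gateway of instance NN listens on port 33NN (plain RFC without SNC). It can help when checking that the Cloud Connector machine is able to reach the ABAP system through the firewall.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RfcSystemMapping:
    """Information entered for an ABAP system reached via RFC."""

    application_server: str   # internal host name of the ABAP server
    instance_number: str      # two-digit instance number, e.g. "00"
    protocol: str = "RFC"

    def __post_init__(self) -> None:
        # The instance number is always written with two digits.
        if len(self.instance_number) != 2 or not self.instance_number.isdigit():
            raise ValueError("instance_number must be two digits, e.g. '00'")

    @property
    def gateway_port(self) -> int:
        """Gateway port under the assumed 33NN convention."""
        return 3300 + int(self.instance_number)


if __name__ == "__main__":
    # Hypothetical host name, for illustration only.
    mapping = RfcSystemMapping("s4h.example.internal", "00")
    print(mapping.application_server, mapping.gateway_port)
```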

Click Next and allow the listing settings

Please note that the list of allowed functions depends on the source ABAP system you are using.

More details can be found in the following SAP Note: https://launchpad.support.sap.com/#/notes/2835207

Allow listing settings

Go back to your subaccount in the SAP Business Technology Platform cockpit and verify that the connection has been correctly established with the on-premise network

Connection Check in BTP

SAP Data Warehouse Cloud

In SAP Data Warehouse Cloud you need to create a connection to the SAP S/4HANA on-premise system.

Navigate to Space Management, click on Connections and then click on the + sign to create a new connection

Create Connection

Select a connection of type SAP ABAP

Select Connection Type

Define the connection and save it.

Connection Information

Select the connection and validate it

Validate the connection

Now switch to the Data Builder and create a new Data Flow

Create a new Dataflow

Define a business name and technical name for the newly created Data Flow, then go to Source and click on the pop-up icon next to your Data Source

Dataflow Properties

Search for your CDS View and click Next

Import Views

Select it and click Add Selection.

This will fetch the metadata of the views and then import it into the Data Flow.

Fetching Views details

Your CDS view has now been added to the Data Flow.

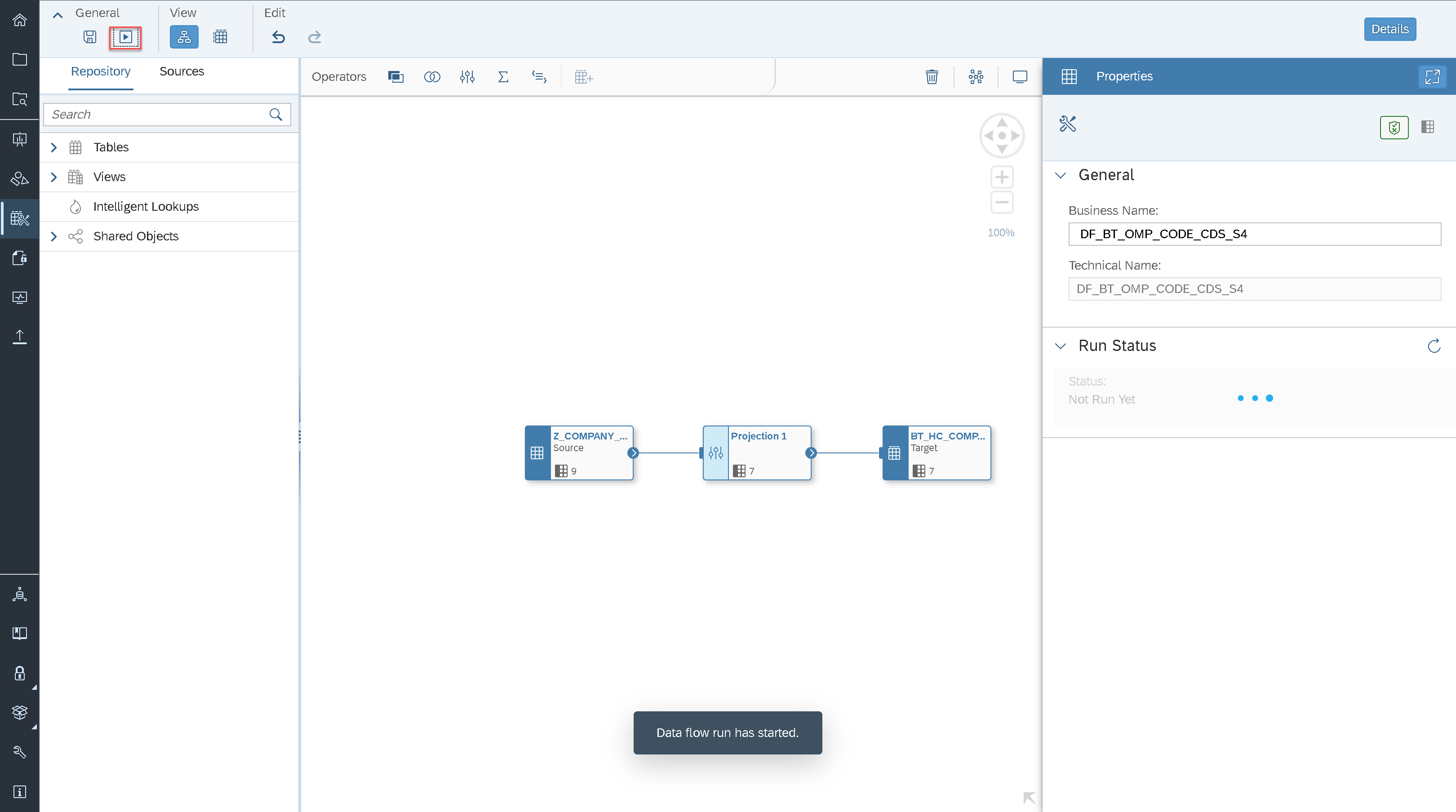

Add a projection operator to map the columns you want to replicate and, if needed, add a filter to the data

Using a projection operator in Dataflow
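Conceptually, the projection step keeps a subset of the source columns, optionally renames them, and drops the rows that do not pass the filter. The following Python sketch only illustrates that idea on in-memory rows. It is not how SAP Data Warehouse Cloud implements the operator, and all column names and the `project` function are hypothetical.

```python
from typing import Any, Callable, Dict, Iterable, Iterator

Row = Dict[str, Any]


def project(
    rows: Iterable[Row],
    mapping: Dict[str, str],
    keep: Callable[[Row], bool] = lambda row: True,
) -> Iterator[Row]:
    """Yield rows that pass `keep`, reduced to the mapped columns.

    `mapping` maps source column names to target column names;
    columns that are not listed are dropped.
    """
    for row in rows:
        if keep(row):
            yield {target: row[source] for source, target in mapping.items()}


if __name__ == "__main__":
    source_rows = [
        {"SalesOrder": "1", "NetAmount": 10, "Currency": "EUR"},
        {"SalesOrder": "2", "NetAmount": 0, "Currency": "USD"},
    ]
    result = project(
        source_rows,
        {"SalesOrder": "ORDER_ID", "NetAmount": "AMOUNT"},
        keep=lambda row: row["NetAmount"] > 0,
    )
    for row in result:
        print(row)
```

In this sketch the filter is evaluated on the source row, before the columns are renamed.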

Now add a target table and rename it.

Then click on Create and Deploy Table to generate it in the underlying SAP HANA Cloud database

Create target table

Confirm the creation and deployment of the table

Deploy table

Now save the Data Flow

Data Flow saved

As the next step, you can execute the saved Data Flow

Run the Dataflow

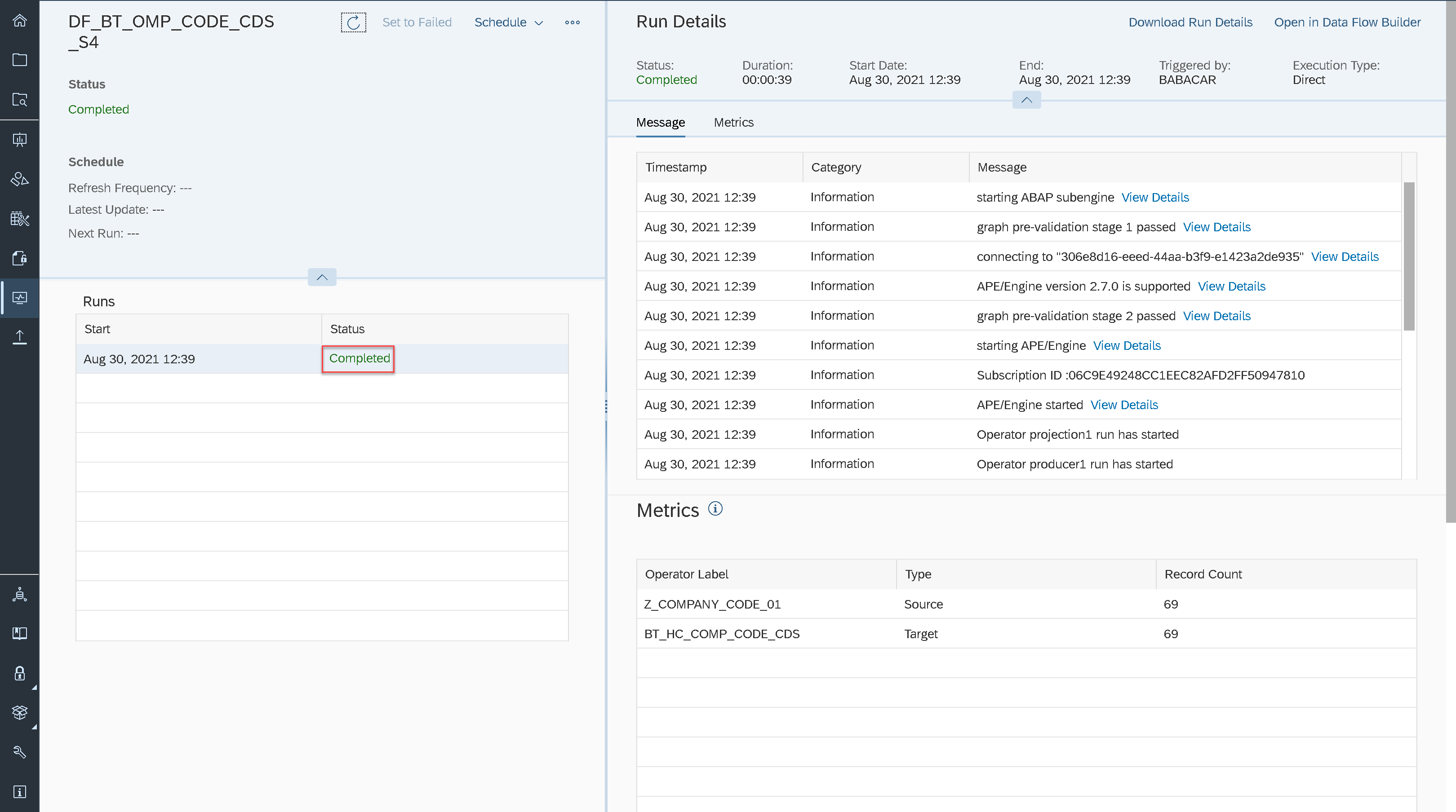

Look at the Run Details information.

Note that the status is changing every couple of seconds and that it might take some time until the Data Flow execution has been completed.

Run Details

Once the run has completed successfully, preview your loaded data in SAP Data Warehouse Cloud

Preview loaded data

I hope this was helpful.

If you have any questions please use this link.

If you need more information related to SAP Data Warehouse Cloud please check here.

References:

- SAP S/4HANA OP1909 FPS01 + TCI note: https://launchpad.support.sap.com/#/notes/2873666

- SAP S/4HANA OP2020: https://launchpad.support.sap.com/#/notes/2943599

- SAP ABAP in SAP Data Warehouse Cloud: https://help.sap.com/viewer/9f804b8efa8043539289f42f372c4862/cloud/en-US/a75c1aacf951449ba3b740c7e46...

- ABAP CDS replication in SAP Data Intelligence (Blog from Martin Boeckling): https://blogs.sap.com/2021/01/21/abap-cds-replication-in-data-intelligence/

- Replicate Data Changes in SAP Data Warehouse Cloud: https://help.sap.com/viewer/9f804b8efa8043539289f42f372c4862/cloud/en-US/441d327ead5c49d580d86003017...

- Configure Cloud Connector: https://help.sap.com/viewer/9f804b8efa8043539289f42f372c4862/cloud/en-US/1c7dc8c6acad44869ca9105d0b9...

- How to use SAP Data Intelligence with Cloud Connector (Blog from Dimitri Vorobiev): https://blogs.sap.com/2021/03/16/how-to-use-sap-data-intelligence-with-sap-cloud-connector/
