- SAP Community

- Products and Technology

- Technology

- Technology Blogs by SAP

- How NOT to size a SAP BW system for SAP HANA

Technology Blogs by SAP

Learn how to extend and personalize SAP applications. Follow the SAP technology blog for insights into SAP BTP, ABAP, SAP Analytics Cloud, SAP HANA, and more.

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

former_member93

Active Contributor

Options

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

08-28-2013

9:31 PM

Over the past couple years I have been involved in many sizing activities for BW on HANA. I have collected some valuable lessons learned. This should help you understand better what common mistakes to avoid and how the sizing works in more detail.

Note: This blog is specifically for sizing existing BW systems. For sizing brand new installations, we recommend using SAP QuickSizer (http://service.sap.com/quicksizer). Also if you are planning to run multiple applications on a single SAP HANA database (in addition to BW), you have to size these applications independently and added them together.

The following is based on an actual customer case. The customer says their BW system is 6.1 TB. Quick, takes a guess of how big the SAP HANA appliance needs to be.... Well, I hope you gave the proper "consulting answer" that you need more details. But let's see if you would have taken all of the following into consideration.

1) Do not count log and free space

In this case we were dealing with BW running on a Microsoft SQL server database. The 6.1 TB was actually the total size of the data and log files. Ha! Beginner mistake. Clearly only data files excluding the free space are relevant for HANA sizing (=allocated space). I'm sure everyone got that right.

2) Do not count indexes

Next we look at the allocated space. Much of it is taken up by database indexes and some database-specific administration data. We are really interested in the pure database tables only. In our case study, over half of the allocated space was indexes! HANA persists data differently, so we disregard all indexes.

3) Do not count aggregates

BW on HANA does not need aggregates. All related menu items have been removed and aggregate functionality is disabled since SAP HANA can aggregate billions of records on the fly.

4) Do not calculate with size of compressed data

We are down significantly from the original 6.1 TB but here's a surprise... The MS-SQL database was compressed already. We need the uncompressed data into consideration. However, we need to do it right. Don't rely on any compression factors provided by the database. It's hard to understand how these were calculated. They are irrelevant anyway since SAP HANA compresses differently.

Here's an example, you have a table with char(100) field and 1 million records. Some might say that's 100 million bytes of uncompressed data. But this again is not enough information to determine the size of the table in HANA. It matters what data is actually stored in the table! If a record contains "ABC", we need 3 bytes and not 100. Ergo, what you really need to do is look at all the data in the table.

Reading the complete database would be rather time consuming so we take some shortcuts: We sample only a small subset of the records and extrapolate the results. And we can exclude reading fields that have a fixed size like INT4. In the example, we select a sample of 100,000 records and determine an average field length of 10. This gives us an uncompressed, raw data size of 10 million bytes.

Your system is not on Unicode yet? Typically, there's not much textual information stored in BW (this will certainly change over the coming years) so you need to apply an uplift of about 5% (based on experience).

If we follow this process for all tables, we get about 3.2 TB of uncompressed data that will need to fit into our SAP HANA database. Grouped into the usual BW categories, it looks like this:

5) Do not apply the same compression factor to all tables

Sure, HANA does some incredible compression of data but it's not the same for each type of table. For example, InfoCube fact tables contain integer values only (pointers to dimensions or master data) and we usually find only few unique values per column. These will therefore compress very well. We assume factor 7 based on experience with other customer systems.

For master data tables, the opposite is true. Mostly all values in a column are different (not so much for attributes of master data of course). Overall, we will get little compression (1-2x).

6) Do not assume the complete table must be in memory

We also have to take into consideration that many tables are partitioned by default in BW on HANA and only the used partitions will be loaded into memory. Best example are PSA tables which are partitioned by request id. BW usually works only with the most recent requests and therefore much of the PSA table will not be loaded. This is the default behavior for PSA tables and write-optimized DataStore Objects.

Optionally, we can apply the "non-active" data logic to other InfoProviders as well (if you configure BW accordingly).

The result in our case study: We end up with approximately 925 GB of memory once the data is compressed inside HANA.

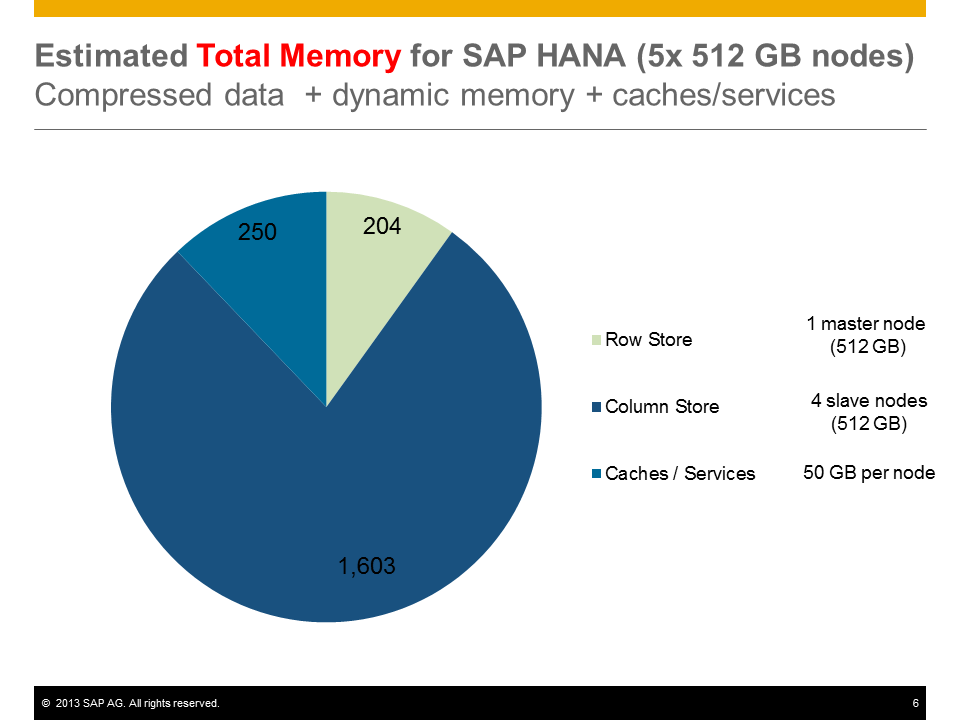

7) Do not forget room for server processes, caches, and processing of data

The database software needs to run in memory of course. For SAP HANA we assume about 50 GB of memory are required for these server processes and related caches (per node).

What's more essential is to leave amble room for dynamically memory allocation for processing your business data. There are two main cases: merging new data into a column store table (so called "delta merge") and processing end-user queries. We don't want to run short on memory for these.

In general we double the size of the business data to include enough room for processing. (Note: We don't double for tables marked as "non-active").

😎 Do not assume the total amount of memory is the same for different size of server nodes

Finally, we need to distribute the data to server nodes. Today we have nodes with 512 GB or 1 TB available. First, we need to take into consideration that only certain tables will be located on the master node. This includes all tables of the row store plus some other administration tables located in the column store (another mistake is thinking that the master node is only for the row store).

All business data however is distributed across the available slave nodes. Since the master node is not used for business data and we need some amount of memory for server processes and cache per slave node, you can end up with different total amount of required hardware.

In our case study, the result was either 5x 512 GB nodes or 3x 1 TB nodes.

9) Do not forget to size themaster node properly

As mentioned above, the master node will contain certain tables as a default configuration. Therefore you have to make sure that these tables will fit into the given size of the master node... including future growth of these tables. If you follow best practices for housekeeping in BW, this is not a problem at all. However, if you are a bit lax on housekeeping, you might run into the restriction of the master node.

For a one time clean up and frequent housekeeping for row store tables, please see SAP Note 706478.

The most common areas for BW customers are:

Additional details on sizing the master node, see SAP Note 1855041.

10) Do not ignore means for system size reduction

So far we assumed that the complete system is migrated as is. However, there are things to consider that will reduce the over size of your system significantly:

- Removal of complete data layers, for example reporting off DSO instead of an InfoCube with the same or just aggregated data

- More aggressive housekeeping of PSA, change log, and DTP error stacks

- Usage of near-line storage solution (NLS)

- Revisit data retention strategy and archiving

For more details see "Optimization of Data Retention".

Finally...

Now you know how to avoid these pitfalls. Did you get them all? Of course it would be extremely cumbersome and time consuming to calculate all of this by hand. But here's the best news: We have a program available that fully automates the complete sizing calculation!

For the one and only way to size a BW system properly for SAP HANA, please see SAP Note 2296290 and its attachments.

Best,

Marc Bernard

@marcfbe

- SAP Managed Tags:

- BW (SAP Business Warehouse),

- SAP BW/4HANA,

- SAP HANA

Labels:

24 Comments

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

Labels in this area

-

ABAP CDS Views - CDC (Change Data Capture)

2 -

AI

1 -

Analyze Workload Data

1 -

BTP

1 -

Business and IT Integration

2 -

Business application stu

1 -

Business Technology Platform

1 -

Business Trends

1,658 -

Business Trends

109 -

CAP

1 -

cf

1 -

Cloud Foundry

1 -

Confluent

1 -

Customer COE Basics and Fundamentals

1 -

Customer COE Latest and Greatest

3 -

Customer Data Browser app

1 -

Data Analysis Tool

1 -

data migration

1 -

data transfer

1 -

Datasphere

2 -

Event Information

1,400 -

Event Information

74 -

Expert

1 -

Expert Insights

177 -

Expert Insights

346 -

General

1 -

Google cloud

1 -

Google Next'24

1 -

GraphQL

1 -

Kafka

1 -

Life at SAP

780 -

Life at SAP

14 -

Migrate your Data App

1 -

MTA

1 -

Network Performance Analysis

1 -

NodeJS

1 -

PDF

1 -

POC

1 -

Product Updates

4,575 -

Product Updates

390 -

Replication Flow

1 -

REST API

1 -

RisewithSAP

1 -

SAP BTP

1 -

SAP BTP Cloud Foundry

1 -

SAP Cloud ALM

1 -

SAP Cloud Application Programming Model

1 -

SAP Datasphere

2 -

SAP S4HANA Cloud

1 -

SAP S4HANA Migration Cockpit

1 -

Technology Updates

6,871 -

Technology Updates

479 -

Workload Fluctuations

1

Related Content

- BODS migration in Technology Blogs by Members

- Watch the SAP BW Modernization Webinar Series in Technology Blogs by SAP

- Handling profile parameter values in SAP NetWeaver and SAP HANA in Technology Blogs by Members

- Z-UIBB: Button for changes disappears in Technology Q&A

- Configuration as code (CaC) with destinations. in Technology Blogs by SAP

Top kudoed authors

| User | Count |

|---|---|

| 17 | |

| 15 | |

| 11 | |

| 9 | |

| 9 | |

| 8 | |

| 8 | |

| 8 | |

| 7 | |

| 7 |