How to use Data Lake Files

What is Data Lake Files?

Data lake Files is a component of SAP HANA Cloud that provides secure, efficient storage for large amounts of structured, semi-structured, and unstructured data. Data lake Files is automatically enabled when you provision a data lake instance.

Provisioning creates the data lake Files container as a storage location for files. This file store lets you use data lake as a repository for big data. For more information on provisioning data lake Files with your data lake instance, see Creating SAP HANA Cloud Instances.

Configuring the File Container

I will walk through the steps using the REST API.

- Create an SAP HANA database on BTP with a data lake.

- For the storage service type, select SAP Native.

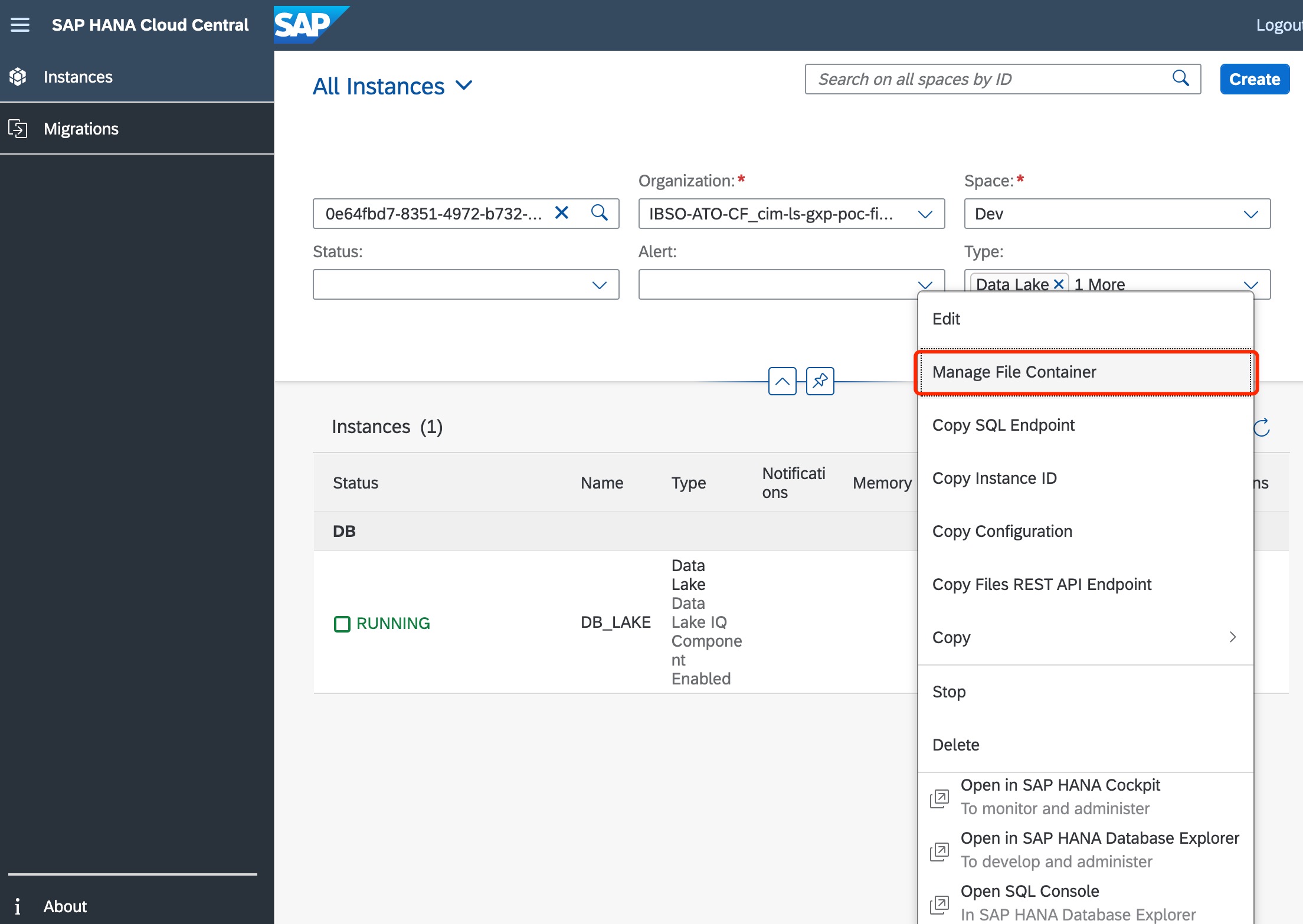

- Go to SAP HANA Cloud on BTP and, for the data lake instance, choose Actions -> Open SAP HANA Cloud Central.

- Next, configure the file container as described in Setting Up Initial Access to HANA Cloud data lake Files.

The data lake Files configuration is now complete.

Using the File Container

We can now fetch or upload files through the REST API.

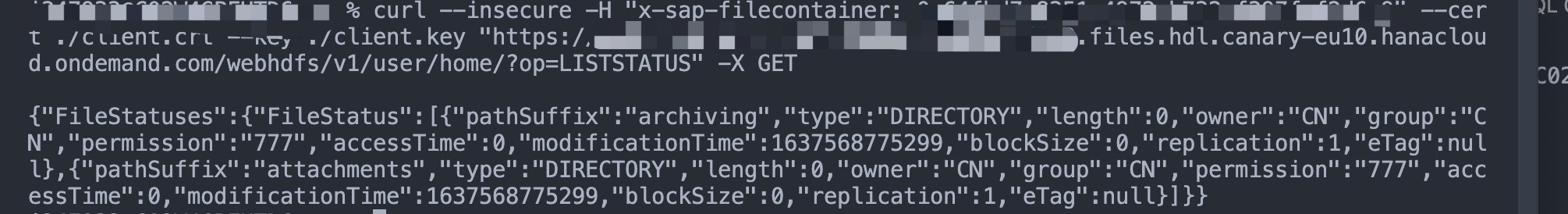

- Copy the instance ID and run the following commands locally, from the folder that contains the client certificate and key (client.crt and client.key).

Get the list status:

curl --insecure -H "x-sap-filecontainer: {{instance-id}}" --cert ./client.crt --key ./client.key "https://{{instance-id}}.files.hdl.canary-eu10.hanacloud.ondemand.com/webhdfs/v1/user/home/?op=LISTSTATUS" -X GET
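The list status call can be wrapped in a small shell function so that the instance ID and path are not typed twice. This is only a minimal sketch: the function names `hdlfs_url` and `hdlfs_list` are hypothetical helpers (not part of any SAP tooling), and the host suffix and certificate file names are simply copied from the command above, so adjust them to your landscape.

```shell
#!/bin/sh
# Hypothetical helpers. The host suffix and the client.crt/client.key names
# are assumptions taken from the curl command in this post.
HDLFS_HOST_SUFFIX="files.hdl.canary-eu10.hanacloud.ondemand.com"

# Build the REST URL for an instance ID, an absolute path, and an operation.
hdlfs_url() {
    instance_id=$1
    path=$2
    op=$3
    printf 'https://%s.%s/webhdfs/v1%s?op=%s\n' \
        "$instance_id" "$HDLFS_HOST_SUFFIX" "$path" "$op"
}

# List a directory (default: /user/home/).
# Expects client.crt and client.key in the current folder.
hdlfs_list() {
    instance_id=$1
    dir=${2:-/user/home/}
    curl --insecure \
        -H "x-sap-filecontainer: $instance_id" \
        --cert ./client.crt --key ./client.key \
        -X GET "$(hdlfs_url "$instance_id" "$dir" LISTSTATUS)"
}
```

After sourcing the file, `hdlfs_list "<your-instance-id>"` should issue the same request as the command above. The `--insecure` flag is kept only to mirror the original command; it turns off TLS certificate verification, so you may prefer to drop it once the server certificate is trusted locally.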

The response is a JSON listing of the files and directories under /user/home/.

- To upload a file, run the following command:

curl --location-trusted --insecure -H "Content-Type:application/octet-stream" -H "x-sap-filecontainer: {{instance-id}}" --cert ./client.crt --key ./client.key --data-binary "@Studies.csv" "https://{{instance-id}}.files.hdl.canary-eu10.hanacloud.ondemand.com/webhdfs/v1/user/home/Studies.csv?op=CREATE&data=true&overwrite=true" -X PUT

- Now get the list status again; the file you just uploaded appears in the listing.
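The upload and the follow-up check can be scripted in the same way. The sketch below is an illustration under assumptions: `hdlfs_upload_url`, `hdlfs_upload`, and `hdlfs_names` are hypothetical helper names, the headers and query parameters are copied from the upload command above, and `hdlfs_names` assumes the list status response follows the usual WebHDFS JSON layout, where each entry carries a `pathSuffix` field.

```shell
#!/bin/sh
# Hypothetical helpers. Host suffix, headers, and query parameters are
# assumptions copied from the upload command in this post.
HDLFS_HOST_SUFFIX="files.hdl.canary-eu10.hanacloud.ondemand.com"

# Build the upload URL for a local file, targeting /user/home/<file name>.
hdlfs_upload_url() {
    instance_id=$1
    file_name=$(basename "$2")
    printf 'https://%s.%s/webhdfs/v1/user/home/%s?op=CREATE&data=true&overwrite=true\n' \
        "$instance_id" "$HDLFS_HOST_SUFFIX" "$file_name"
}

# Upload a local file. Expects client.crt and client.key in the current folder.
hdlfs_upload() {
    instance_id=$1
    local_file=$2
    curl --location-trusted --insecure \
        -H "Content-Type:application/octet-stream" \
        -H "x-sap-filecontainer: $instance_id" \
        --cert ./client.crt --key ./client.key \
        --data-binary "@$local_file" \
        -X PUT "$(hdlfs_upload_url "$instance_id" "$local_file")"
}

# Read a list status response on stdin and print one pathSuffix per line.
# Assumes the WebHDFS layout: FileStatuses.FileStatus[].pathSuffix.
hdlfs_names() {
    grep -o '"pathSuffix"[[:space:]]*:[[:space:]]*"[^"]*"' |
        sed 's/.*:[[:space:]]*"\(.*\)"$/\1/'
}
```

For example, after `hdlfs_upload "<your-instance-id>" Studies.csv`, piping the output of the list status curl command into `hdlfs_names | grep -x Studies.csv` should succeed only if the file is listed. The text-based extraction is deliberately simple; a JSON-aware tool would be more robust if one is available.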

Read the contents of the file into the DB table

Go to the SAP HANA database explorer and open the account.

Note that in this step, you must ensure that the columns of the database table match the columns in the CSV file.

Here I load the data into an IQ table, using the following statement:
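One way to catch a column mismatch before loading is to compare the header row of the CSV file with the column list you intend to use. The following is a minimal sketch, assuming the file has a header row whose names are written exactly like the table columns; `csv_header_matches` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: compare the first line of a CSV file with an expected
# comma-separated column list. The comparison is exact (case- and
# order-sensitive); a trailing carriage return from Windows line endings
# is ignored. Returns non-zero and prints both lists on mismatch.
csv_header_matches() {
    csv_file=$1
    expected=$2
    header=$(head -n 1 "$csv_file" | tr -d '\r')
    if [ "$header" = "$expected" ]; then
        return 0
    fi
    printf 'Header mismatch:\n  file:     %s\n  expected: %s\n' \
        "$header" "$expected" >&2
    return 1
}
```

For the file used in this post, the call could look like `csv_header_matches Studies.csv "status_code,study_num,description,study_ID,protocol_ID,lastSubjectLastVisit,isLeanStudy,studyPhase,ID"`. This only checks names and order; it says nothing about data types.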

CALL SYSHDL_BUSINESS_CONTAINER.REMOTE_EXECUTE('
LOAD TABLE MANAGEMENT_STUDIES
(status_code,study_num,description,study_ID,protocol_ID,lastSubjectLastVisit,isLeanStudy,studyPhase,ID)
FROM ''hdlfs:///user/home/archiving/Studies.csv''
FORMAT CSV
SKIP 1
DELIMITED BY '',''
ESCAPES OFF');
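Because the inner statement is passed to REMOTE_EXECUTE as a string literal, every single quote inside it has to be doubled, which is easy to get wrong by hand. As a rough sketch, the wrapping can be generated from the plain statement; `wrap_remote_execute` is a hypothetical helper name, and the default container name is the one used in the statement above.

```shell
#!/bin/sh
# Hypothetical helper: read a data lake SQL statement on stdin and print it
# wrapped in a REMOTE_EXECUTE call, doubling every single quote so the
# statement stays intact inside the string literal.
wrap_remote_execute() {
    container=${1:-SYSHDL_BUSINESS_CONTAINER}
    printf "CALL %s.REMOTE_EXECUTE('\n" "$container"
    sed "s/'/''/g"
    printf "');\n"
}
```

You could save the plain LOAD TABLE statement, written with ordinary single quotes, in a file such as `load.sql` (an assumed name) and run `wrap_remote_execute < load.sql`, then paste the output into the database explorer.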

You can use data lake Files to store unstructured data or to keep archived files. It looks like a good new option alongside object stores, AWS, and so on.
