- SAP Community

- Products and Technology

- Technology

- Technology Blogs by SAP

- NSE: Systematically Measure Memory Savings

Technology Blogs by SAP

Learn how to extend and personalize SAP applications. Follow the SAP technology blog for insights into SAP BTP, ABAP, SAP Analytics Cloud, SAP HANA, and more.

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

danielsblog01

Participant

Options

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

09-07-2021

10:52 PM

This guide will show a methodical approach to finding data with a large memory footprint and calculating the memory storage savings after moving them to disk via Native Storage Extension. The TPC-H dataset is used in this guide.

Assumptions:

Observing the Memory Footprint

There are a few useful views for viewing the system’s memory footprint: M_CS_TABLES, M_CS_PARTITIONS, M_CS_ALL_COLUMNS.

First, we begin with observing which tables have the largest memory footprint. The following SQL will query the M_CS_TABLES view and converts the values in the size columns from bytes to megabytes.

Notice: There is quite a large table, LINEITEM. Let’s observe this tables footprint at the column level.

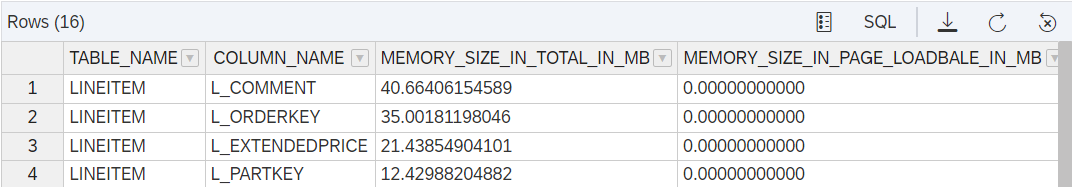

Notice: Columns L_ORDERKEY, L_COMMENT, L_EXTENDEDPRICE, and L_PARTKEY are quite large. Also, there seems to be multiple date columns that could be good candidates for partitions. Let’s observe these columns to get a sense of the data

Notice: L_COMMENT is long text data that doesn’t immediately appear useful for day-to-day analytics, it makes sense to offload this column to disk. Also, notice that the L_SHIPDATE range is from 2012-01-02 to 2018-12-01. For typical, year-over-year analysis we only need the latest two years of data. We can make a partition and place all data with a SHIPDATE before 2017 on disk as well.

Before beginning to move data it is useful to use the following views to observe the current Buffer Cache configuration. To view results in these views the user running the query requires adequate system permissions.

Right now, the Buffer Cache is configured for 1GB. Altering the Buffer Cache size can be done with the following SQL. This requires a user with INIFILE privilege.

Decide Which Data to Unload

Native Storage Extension supports the ability to move portions of a table to disk. This allows for more flexibility in the performance-cost trade off from storing data on disk.

From the simple analysis of the LINEITEM table, the L_COMMENT column can be offloaded and the L_SHIPDATE column can be used to partition the table.

To partition the table on a date column while a table has a primary key, a multilevel partition is required.

Now, the newly made partition and the L_COMMENT column can be offloaded from memory and the memory savings can be calculated.

First, observe the M_CS_TABLES view again to see the partition sizes that were made.

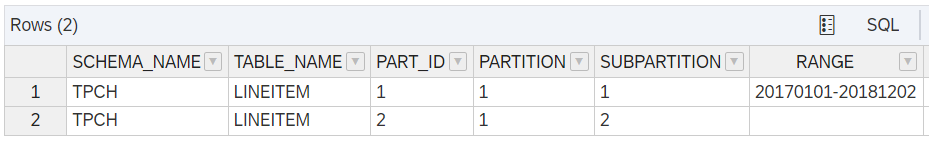

Notice: There is a larger and a much smaller partition, partitions 2 and 1 respectively. Now, set the partition with ID 2 and column L_COMMENT to have the PAGE LOADABLE load unit.

Setting the Load Unit

The SQL syntax for setting load units can be found here. In this case, the SQL was the following.

Verify the changes in the M_TABLE_PARTITIONS and M_CS_ALL_COLUMNS view where the LOAD_UNIT column can be observed. A value of PAGE indicates data stored on disk and COLUMN indicates data stored in memory.

Observe already, that the partition with PART_ID = 2 has gone from using 33.22 MB of main memory to only 0.8194 MB. (33.22-0.8194)/33.22 = 0.975, that's a 97.5% reduction in main memory usage for this partition. Unload the table completely from memory with the following SQL.

Load the data back into memory using the following SQL.

This unloads the LINEITEM table completely from memory and loads it back into memory for column loadable data and the buffer cache for page loadable.

Calculate the Total Memory Savings

To get a picture of what the table size was in memory, disk, and disk in page loadable format we can run this query.

We can observe that currently in main memory the table takes up 248.67 MB and like wise in DISK_SIZE if we were to unload it. Also note, since I've made the table completly COLUMN LOADABLE by reversing the load unit change I've made earlier, that currently there is 0 space taken up in the PAGE_LOADBALE disk storage. Now, I can compare this to the results after I've made my partition and L_COMMENT column PAGE LOADABLE.

The result shows that the table now only takes 83.54 MB of main memory and 170.35 MB in the PAGE LOADABLE disk storage. To compare main memory usage, (248.67 - 83.54) / 248.67 = 0.664. A 66.4% reduction in the main memory footprint for this table.

The same steps can be done on the column level as well. I will place it in a single query below.

Below are the results for the main memory footprint of the L_COMMENT column as column loadbale and page loadable respectively.

Summary

To calculate the main memory savings of NSE,

Following these steps, it is possible to calculate the main memory savings of NSE for any table, column or partition.

If you’ve found this blog post useful feel free to give it a like! Questions are welcome in the comment section below or on the community questions page.

Have another NSE topic you want to learn more about? Comment down below!

Assumptions:

- Reader has access to a provisioned SAP HANA instance SPS04+

- Reader has familiarity with Database Explorer, SAP HANA Cockpit, and HANA System Views

Observing the Memory Footprint

There are a few useful views for viewing the system’s memory footprint: M_CS_TABLES, M_CS_PARTITIONS, M_CS_ALL_COLUMNS.

First, we begin with observing which tables have the largest memory footprint. The following SQL will query the M_CS_TABLES view and converts the values in the size columns from bytes to megabytes.

SELECT

TABLE_NAME,

SCHEMA_NAME,

MEMORY_SIZE_IN_TOTAL / 1024 / 1024 AS MEMORY_TOTAL_IN_MB,

MEMORY_SIZE_IN_PAGE_LOADABLE_MAIN / 1024 / 1024 AS MEMORY_SIZE_IN_PAGE_LOADABLE_IN_MB

FROM M_CS_TABLES WHERE SCHEMA_NAME='TPCH' ORDER BY MEMORY_SIZE_IN_TOTAL DESC;

Notice: There is quite a large table, LINEITEM. Let’s observe this tables footprint at the column level.

SELECT

TABLE_NAME,

COLUMN_NAME,

MEMORY_SIZE_IN_TOTAL / 1024 / 1024 AS MEMORY_SIZE_IN_TOTAL_IN_MB,

MEMORY_SIZE_IN_PAGE_LOADABLE_MAIN / 1024 / 1024 AS MEMORY_SIZE_IN_PAGE_LOADBALE_IN_MB

FROM M_CS_ALL_COLUMNS WHERE TABLE_NAME='LINEITEM' ORDER BY MEMORY_SIZE_IN_TOTAL DESC;

Notice: Columns L_ORDERKEY, L_COMMENT, L_EXTENDEDPRICE, and L_PARTKEY are quite large. Also, there seems to be multiple date columns that could be good candidates for partitions. Let’s observe these columns to get a sense of the data

SELECT

L_ORDERKEY,

L_COMMENT,

L_EXTENDEDPRICE,

L_RECEIPTDATE,

L_SHIPDATE,

L_COMMITDATE

FROM LINEITEM ORDER BY L_SHIPDATE DESC;Notice: L_COMMENT is long text data that doesn’t immediately appear useful for day-to-day analytics, it makes sense to offload this column to disk. Also, notice that the L_SHIPDATE range is from 2012-01-02 to 2018-12-01. For typical, year-over-year analysis we only need the latest two years of data. We can make a partition and place all data with a SHIPDATE before 2017 on disk as well.

Before beginning to move data it is useful to use the following views to observe the current Buffer Cache configuration. To view results in these views the user running the query requires adequate system permissions.

SELECT * FROM M_BUFFER_CACHE_STATISTICS;

SELECT * FROM M_BUFFER_CACHE_POOL_STATISTICS;Right now, the Buffer Cache is configured for 1GB. Altering the Buffer Cache size can be done with the following SQL. This requires a user with INIFILE privilege.

ALTER SYSTEM ALTER CONFIGURATION ('indexserver.ini', 'system') SET ('buffer_cache_cs', 'max_size') = '<SIZE IN MB>' WITH RECONFIGURE;Decide Which Data to Unload

Native Storage Extension supports the ability to move portions of a table to disk. This allows for more flexibility in the performance-cost trade off from storing data on disk.

From the simple analysis of the LINEITEM table, the L_COMMENT column can be offloaded and the L_SHIPDATE column can be used to partition the table.

To partition the table on a date column while a table has a primary key, a multilevel partition is required.

ALTER TABLE LINEITEM PARTITION BY HASH (L_ORDERKEY) PARTITIONS 1, RANGE(L_SHIPDATE) (

PARTITION '1990-01-01' <= VALUES < '2017-12-02',

PARTITION OTHERS

);

Now, the newly made partition and the L_COMMENT column can be offloaded from memory and the memory savings can be calculated.

First, observe the M_CS_TABLES view again to see the partition sizes that were made.

SELECT

TABLE_NAME,

PART_ID,

MEMORY_SIZE_IN_TOTAL / 1024 / 1024 AS MEMORY_SIZE_IN_TOTAL_IN_MB,

MEMORY_SIZE_IN_PAGE_LOADABLE_MAIN / 1024 / 1024 AS MEMORY_SIZE_IN_PAGE_LOADABLE_IN_MB

FROM M_CS_TABLES WHERE TABLE_NAME='LINEITEM' ORDER BY MEMORY_SIZE_IN_TOTAL DESC;

Notice: There is a larger and a much smaller partition, partitions 2 and 1 respectively. Now, set the partition with ID 2 and column L_COMMENT to have the PAGE LOADABLE load unit.

Setting the Load Unit

The SQL syntax for setting load units can be found here. In this case, the SQL was the following.

ALTER TABLE LINEITEM ALTER ("L_COMMENT" NVARCHAR(44) PAGE LOADABLE);

ALTER TABLE LINEITEM ALTER PARTITION 2 PAGE LOADABLE;

Verify the changes in the M_TABLE_PARTITIONS and M_CS_ALL_COLUMNS view where the LOAD_UNIT column can be observed. A value of PAGE indicates data stored on disk and COLUMN indicates data stored in memory.

SELECT

TABLE_NAME,

PART_ID,

MEMORY_SIZE_IN_TOTAL / 1024 / 1024 AS MEMORY_SIZE_IN_TOTAL_IN_MB,

MEMORY_SIZE_IN_MAIN / 1024 / 1024 AS MEMORY_SIZE_IN_MAIN_IN_MB,

LOAD_UNIT

FROM M_CS_TABLES

WHERE TABLE_NAME='LINEITEM' ORDER BY MEMORY_SIZE_IN_TOTAL DESC;

SELECT

TABLE_NAME,

COLUMN_NAME,

MEMORY_SIZE_IN_TOTAL / 1024 / 1024 AS MEMORY_SIZE_IN_TOTAL_IN_MB,

MEMORY_SIZE_IN_MAIN / 1024 / 1024 AS MEMORY_SIZE_IN_MAIN_IN_MB,

LOAD_UNIT

FROM M_CS_ALL_COLUMNS

WHERE TABLE_NAME='LINEITEM' ORDER BY MEMORY_SIZE_IN_TOTAL DESC;

Observe already, that the partition with PART_ID = 2 has gone from using 33.22 MB of main memory to only 0.8194 MB. (33.22-0.8194)/33.22 = 0.975, that's a 97.5% reduction in main memory usage for this partition. Unload the table completely from memory with the following SQL.

UNLOAD LINEITEM;Load the data back into memory using the following SQL.

LOAD LINEITEM ALL;This unloads the LINEITEM table completely from memory and loads it back into memory for column loadable data and the buffer cache for page loadable.

Calculate the Total Memory Savings

To get a picture of what the table size was in memory, disk, and disk in page loadable format we can run this query.

ALTER TABLE TPCH.LINEITEM ALTER ("L_COMMENT" NVARCHAR(44) COLUMN LOADABLE);

ALTER TABLE TPCH.LINEITEM ALTER PARTITION 2 COLUMN LOADABLE;

UNLOAD TPCH.LINEITEM;

LOAD TPCH.LINEITEM ALL;

SELECT SUM(MEMORY_SIZE_IN_MAIN / 1024 / 1024), SUM(DISK_SIZE / 1024 / 1024 ), SUM(DISK_SIZE_IN_PAGE_LOADABLE / 1024 / 1024 ) FROM M_TABLE_PARTITIONS WHERE TABLE_NAME='LINEITEM';

We can observe that currently in main memory the table takes up 248.67 MB and like wise in DISK_SIZE if we were to unload it. Also note, since I've made the table completly COLUMN LOADABLE by reversing the load unit change I've made earlier, that currently there is 0 space taken up in the PAGE_LOADBALE disk storage. Now, I can compare this to the results after I've made my partition and L_COMMENT column PAGE LOADABLE.

ALTER TABLE TPCH.LINEITEM ALTER ("L_COMMENT" NVARCHAR(44) PAGE LOADABLE);

ALTER TABLE TPCH.LINEITEM ALTER PARTITION 2 PAGE LOADABLE;

UNLOAD TPCH.LINEITEM;

LOAD TPCH.LINEITEM ALL;

SELECT SUM(MEMORY_SIZE_IN_MAIN / 1024 / 1024), SUM(DISK_SIZE / 1024 / 1024 ), SUM(DISK_SIZE_IN_PAGE_LOADABLE / 1024 / 1024 ) FROM M_TABLE_PARTITIONS WHERE TABLE_NAME='LINEITEM';

The result shows that the table now only takes 83.54 MB of main memory and 170.35 MB in the PAGE LOADABLE disk storage. To compare main memory usage, (248.67 - 83.54) / 248.67 = 0.664. A 66.4% reduction in the main memory footprint for this table.

The same steps can be done on the column level as well. I will place it in a single query below.

ALTER TABLE TPCH.LINEITEM ALTER ("L_COMMENT" NVARCHAR(44) COLUMN LOADABLE);

UNLOAD TPCH.LINEITEM;

LOAD TPCH.LINEITEM ALL;

SELECT SUM(MEMORY_SIZE_IN_MAIN / 1024 / 1024) FROM M_CS_ALL_COLUMNS WHERE TABLE_NAME='LINEITEM' AND COLUMN_NAME='L_COMMENT';

ALTER TABLE TPCH.LINEITEM ALTER ("L_COMMENT" NVARCHAR(44) PAGE LOADABLE);

UNLOAD TPCH.LINEITEM;

LOAD TPCH.LINEITEM ALL;

SELECT SUM(MEMORY_SIZE_IN_MAIN / 1024 / 1024) FROM M_CS_ALL_COLUMNS WHERE TABLE_NAME='LINEITEM' AND COLUMN_NAME='L_COMMENT';

Below are the results for the main memory footprint of the L_COMMENT column as column loadbale and page loadable respectively.

Summary

To calculate the main memory savings of NSE,

- Note the MEMORY_SIZE_IN_MAIN value in M_CS_TABLES or M_CS_ALL_COLUMNS for a table or column.

- Set the load unit of a table, column, or partition to PAGE LOADABLE.

- UNLOAD the data from main memory. The easiest way to do this is to UNLOAD the entire table then LOAD it back.

- Use the new MEMORY_SIZE_IN_MAIN value in M_CS_TABLES or M_CS_ALL_COLUMNS for a table or column to calculate the memory savings. (OLD_MEM_VALUE - NEW_MEM_VALUE) / (OLD_MEM_VALUE)

Following these steps, it is possible to calculate the main memory savings of NSE for any table, column or partition.

If you’ve found this blog post useful feel free to give it a like! Questions are welcome in the comment section below or on the community questions page.

Have another NSE topic you want to learn more about? Comment down below!

- SAP Managed Tags:

- SAP HANA Cloud,

- SAP HANA

Labels:

13 Comments

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

Labels in this area

-

ABAP CDS Views - CDC (Change Data Capture)

2 -

AI

1 -

Analyze Workload Data

1 -

BTP

1 -

Business and IT Integration

2 -

Business application stu

1 -

Business Technology Platform

1 -

Business Trends

1,658 -

Business Trends

116 -

CAP

1 -

cf

1 -

Cloud Foundry

1 -

Confluent

1 -

Customer COE Basics and Fundamentals

1 -

Customer COE Latest and Greatest

3 -

Customer Data Browser app

1 -

Data Analysis Tool

1 -

data migration

1 -

data transfer

1 -

Datasphere

2 -

Event Information

1,400 -

Event Information

75 -

Expert

1 -

Expert Insights

177 -

Expert Insights

354 -

General

1 -

Google cloud

1 -

Google Next'24

1 -

GraphQL

1 -

Kafka

1 -

Life at SAP

780 -

Life at SAP

14 -

Migrate your Data App

1 -

MTA

1 -

Network Performance Analysis

1 -

NodeJS

1 -

PDF

1 -

POC

1 -

Product Updates

4,575 -

Product Updates

398 -

Replication Flow

1 -

REST API

1 -

RisewithSAP

1 -

SAP BTP

1 -

SAP BTP Cloud Foundry

1 -

SAP Cloud ALM

1 -

SAP Cloud Application Programming Model

1 -

SAP Datasphere

2 -

SAP S4HANA Cloud

1 -

SAP S4HANA Migration Cockpit

1 -

Technology Updates

6,871 -

Technology Updates

490 -

Workload Fluctuations

1

Related Content

- SAP Analytics Cloud - Performance statistics and zero records in Technology Blogs by SAP

- OUT NOW: SAP Signavio February 2024 release in Technology Blogs by SAP

- Boosting Business Performance: Measuring ROI in BPM in Technology Blogs by SAP

- Fast Track your AI Strategy with SAP Business AI in Technology Blogs by SAP

- An Introduction to SAP Build Process Automation in Technology Blogs by Members

Top kudoed authors

| User | Count |

|---|---|

| 20 | |

| 11 | |

| 8 | |

| 8 | |

| 7 | |

| 7 | |

| 7 | |

| 6 | |

| 6 | |

| 6 |