- SAP AI Core - Realtime inference with SAP HANA Mac...

08-16-2023

2:12 PM

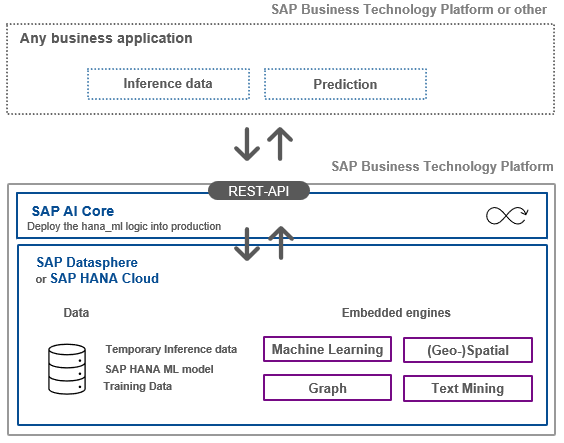

This hands-on tutorial shows how to provide realtime predictions from SAP HANA Machine Learning through SAP AI Core. You set up a REST-API that uses a previously trained model in SAP HANA Cloud (or SAP Datasphere) to return predictions.

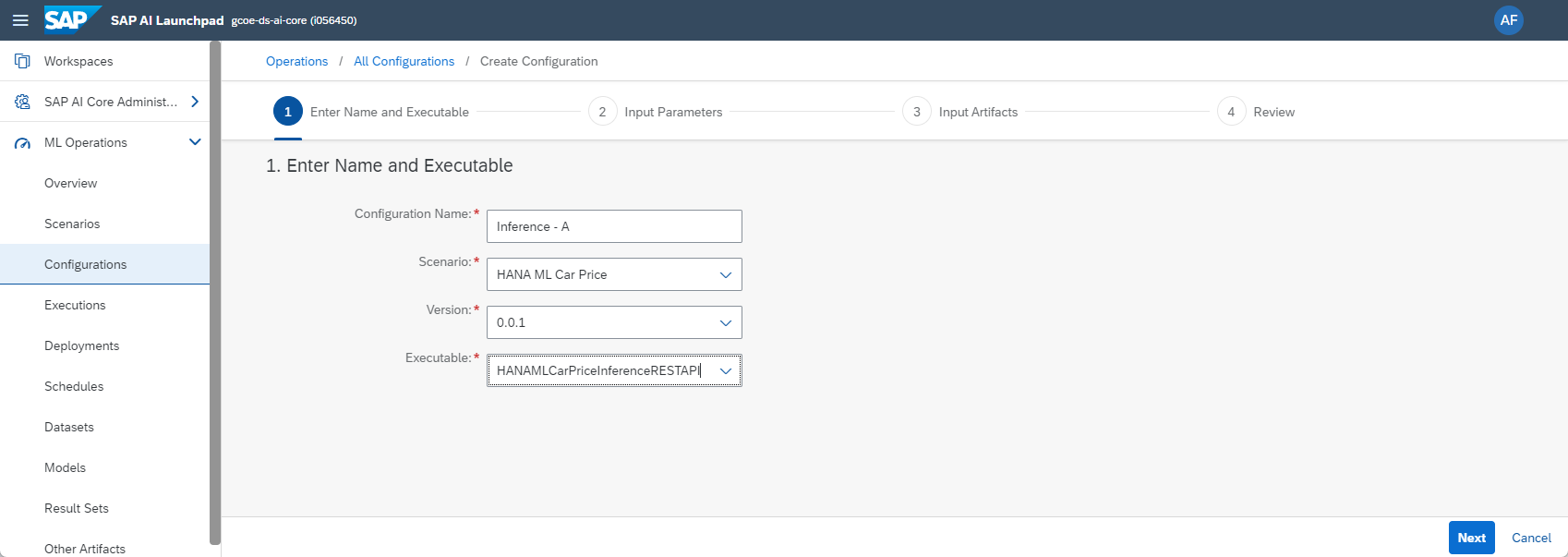

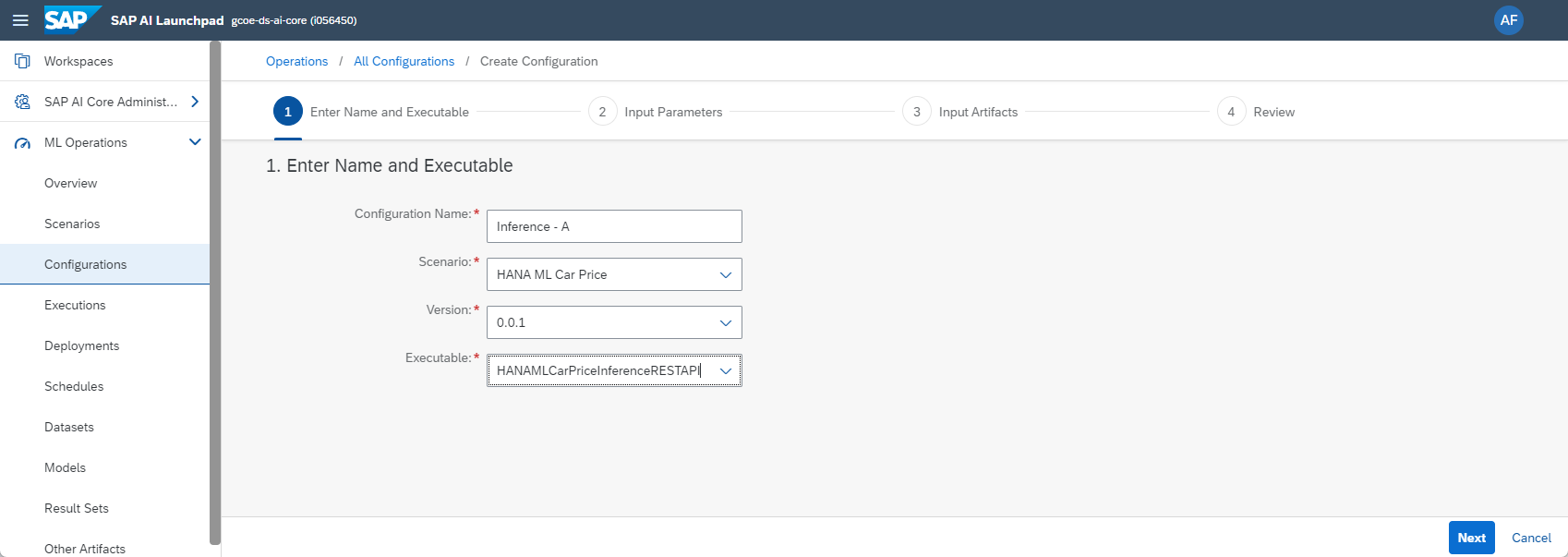

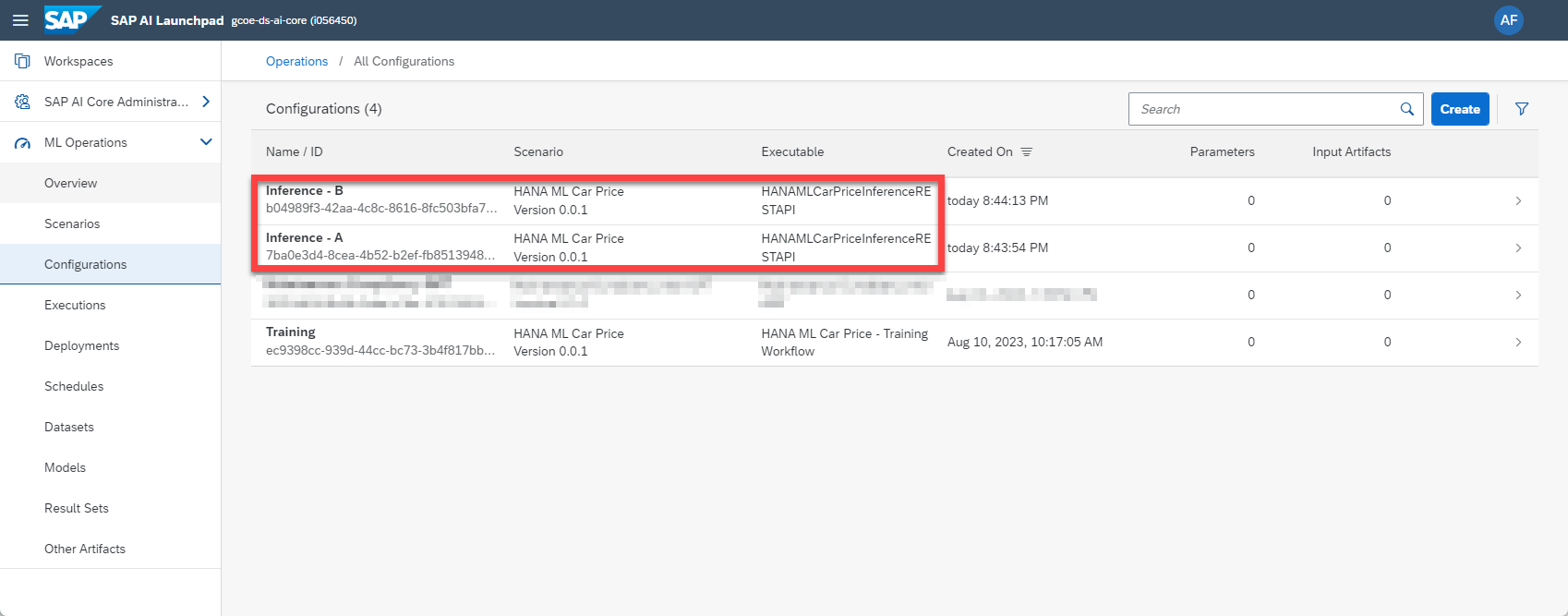

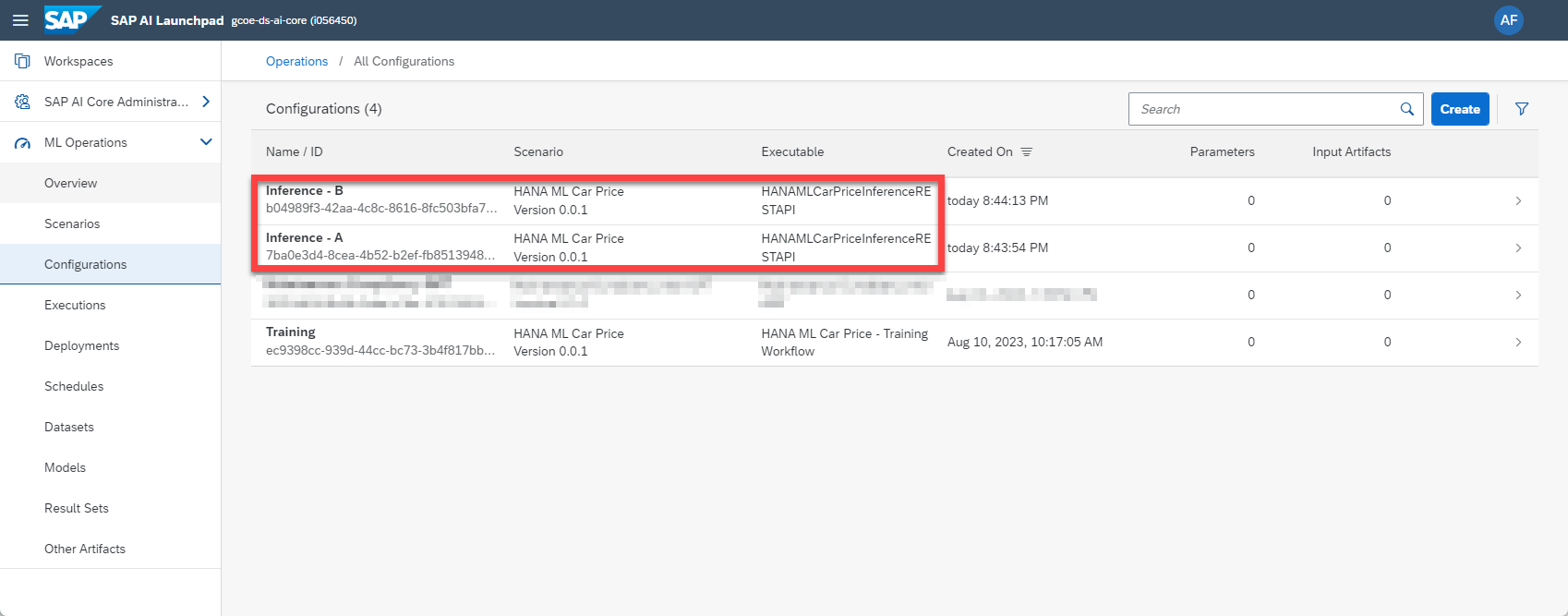

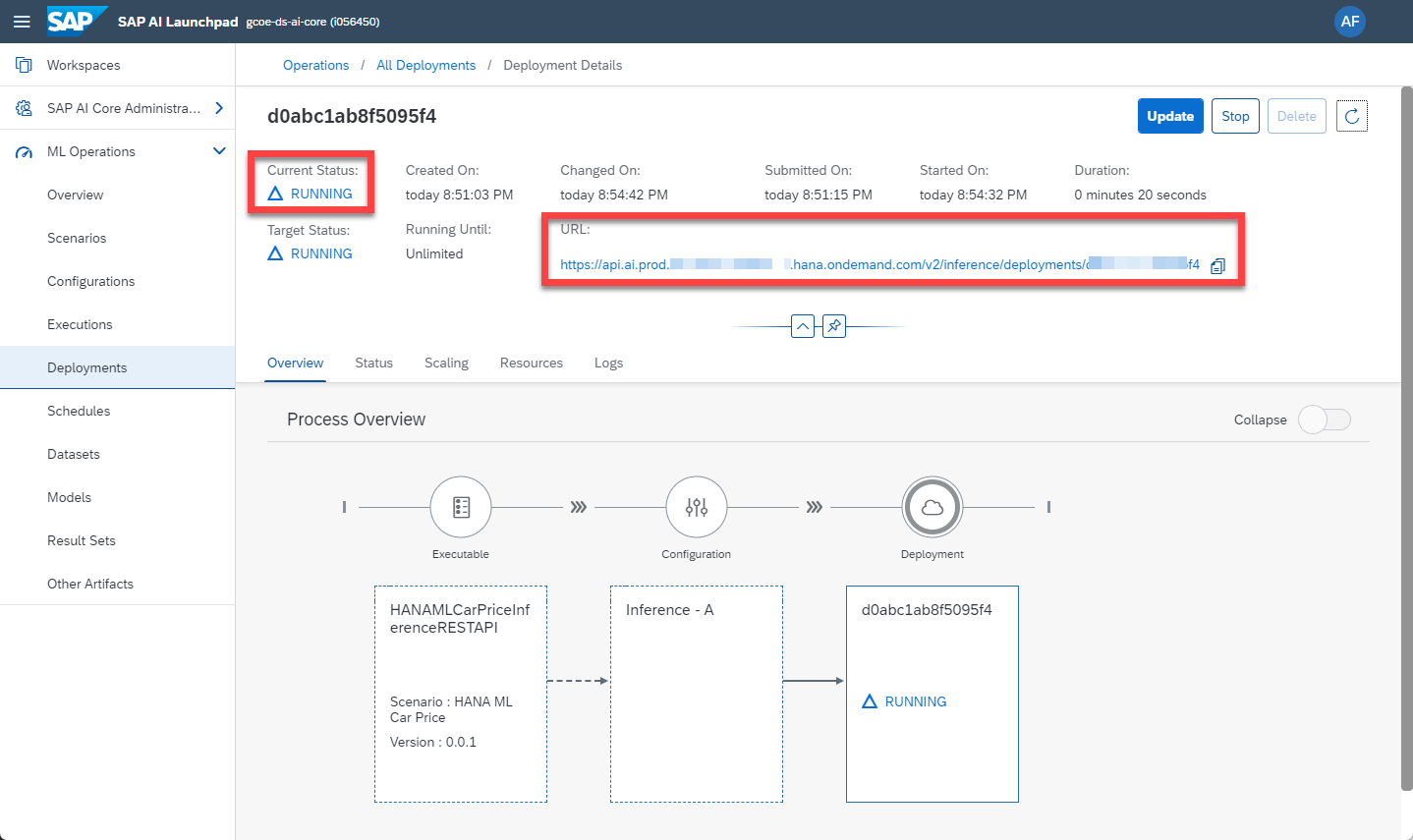

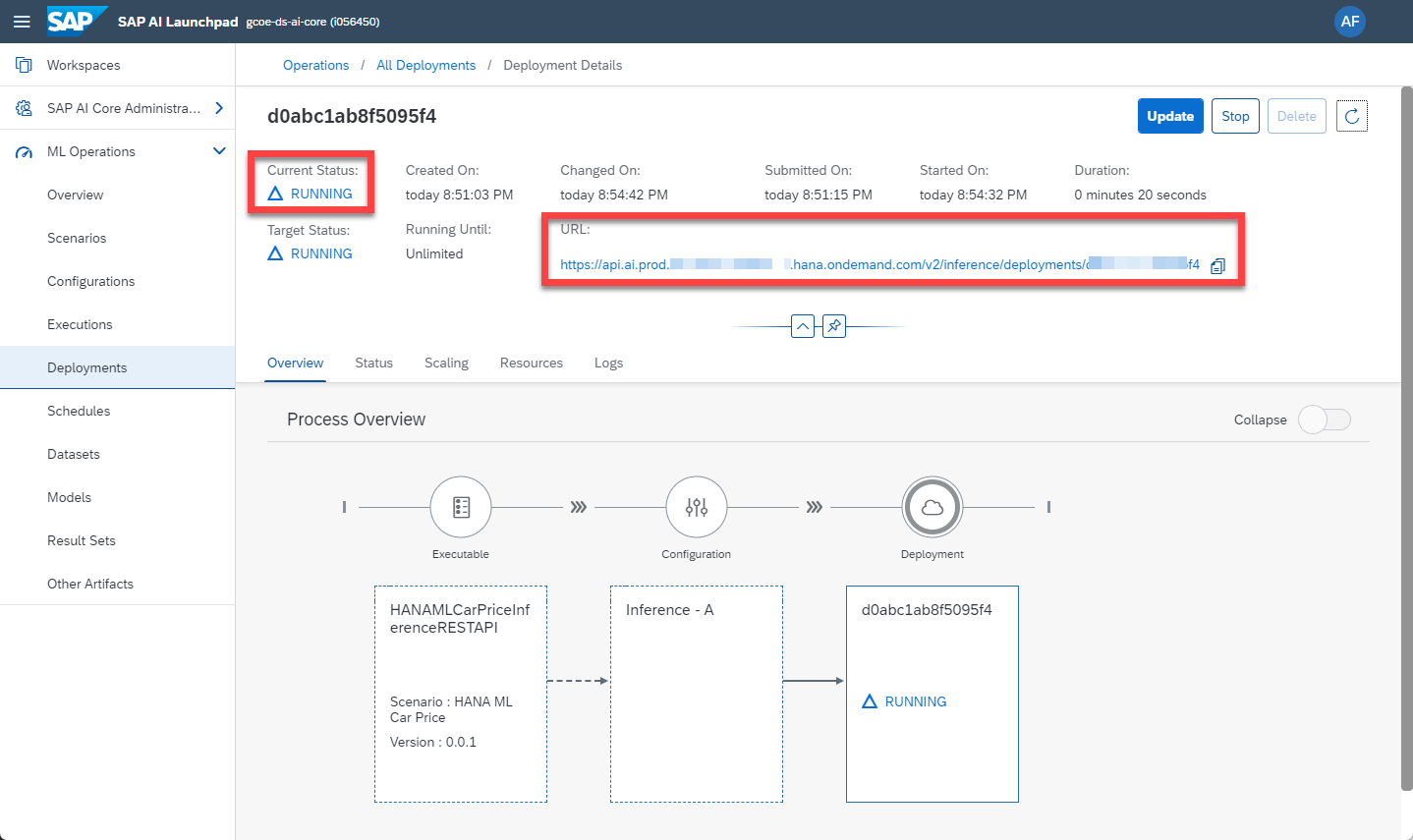

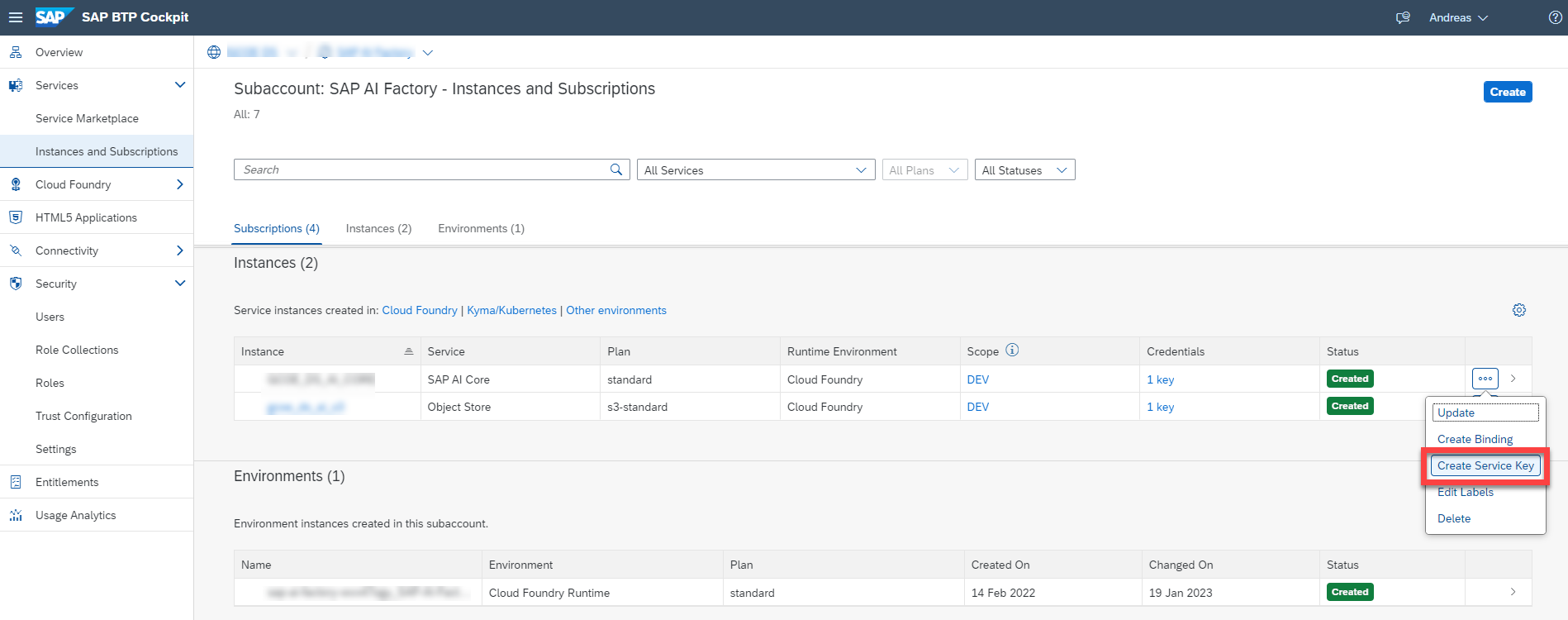

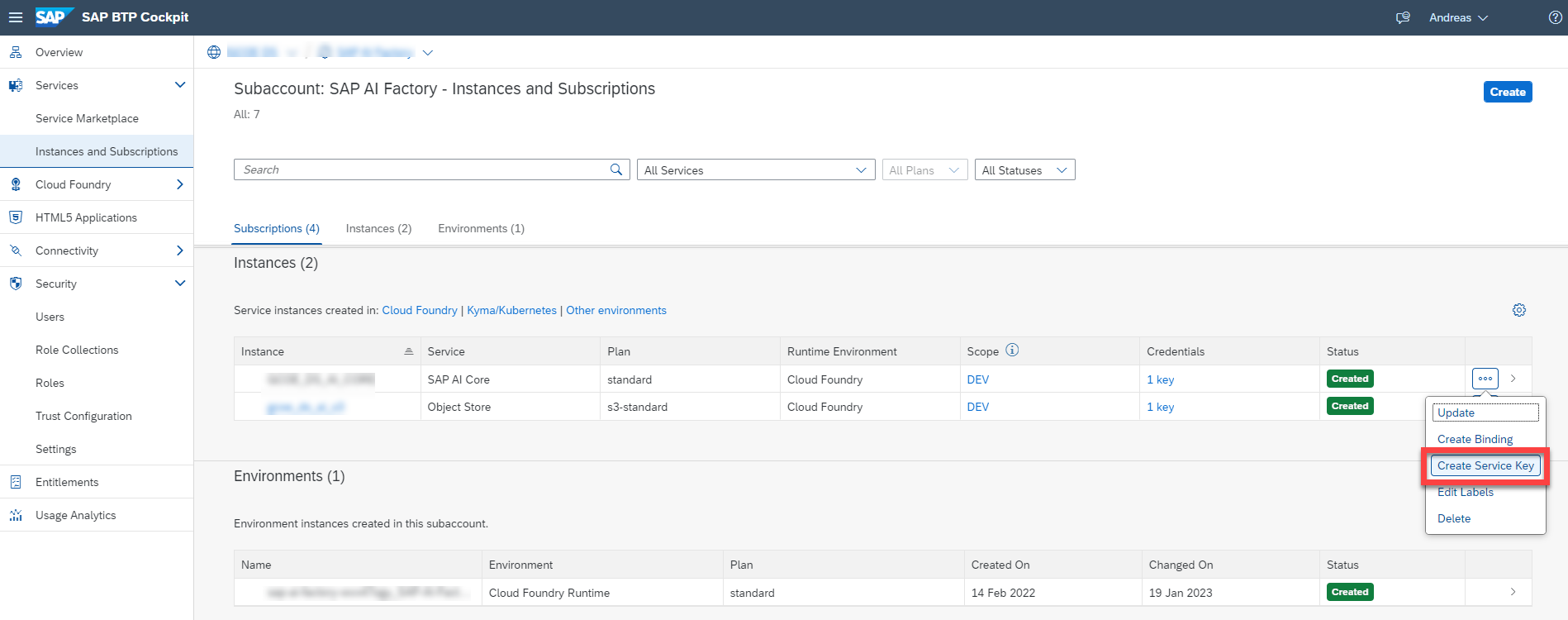

To follow the steps in this blog you need to be familiar with the earlier blog SAP AI Core – Scheduling SAP HANA Machine Learning, in which a Machine Learning model is trained to estimate the price of a used car.

In this blog that trained Machine Learning model is now used in SAP AI Core to create predictions in realtime.


Code and configuration

All code that is used in this blog is available in this repository, to help you implement the scenario yourself.

We pick up from the previous blog (which used SAP AI Core to train and save the Machine Learning model in SAP HANA Cloud) and jump right into the Python logic that creates the REST-API and returns the predictions. The code in that file (main.py) creates predictions by:

- using the Flask framework to create a REST-API with the endpoint /v1/predict

- retrieving the logon credentials for SAP HANA from a Generic Secret in SAP AI Core

- establishing a connection to SAP HANA Cloud on startup, and attempting to reuse the existing connection for any future incoming request

- uploading any incoming data as a temporary table to SAP HANA Cloud

- loading the saved Machine Learning model

- obtaining a prediction from the loaded Machine Learning model on the temporary table, which has just been created

- returning that prediction through the REST-API

```python
import os
import json
import pandas as pd
import hana_ml.dataframe as dataframe
from hana_ml.model_storage import ModelStorage
from flask import Flask
from flask import request as call_request
import uuid

# Print external IP address
from requests import get
ip = get('https://api.ipify.org').text
print(f'My public IP address is: {ip}')

# Variable for connection to SAP HANA
conn = None

# Function to establish connection to SAP HANA
def get_hanaconnection():
    global conn
    conn = dataframe.ConnectionContext(address=os.getenv('HANA_ADDRESS'),
                                       port=int(os.getenv('HANA_PORT')),
                                       user=os.getenv('HANA_USER'),
                                       password=os.getenv('HANA_PASSWORD'))
    print('Connection to SAP HANA established: ' + str(conn.connection.isconnected()))

# Creates Flask serving engine
app = Flask(__name__)

# You may customize the endpoint, but must have the prefix `/v<number>`
@app.route("/v1/predict", methods=["POST"])
def predict():
    print("start serving ...")

    # Convert incoming payload to Pandas DataFrame
    payload = call_request.get_json()
    payloadString = json.dumps(payload)
    parsedPayload = json.loads(payloadString)
    df_data = pd.json_normalize(parsedPayload)

    # Ensure that the connection to SAP HANA is active
    if not conn.connection.isconnected():
        get_hanaconnection()

    # Load the saved model
    model_storage = ModelStorage(connection_context=conn)
    ur_hgbt = model_storage.load_model('Car price regression')

    # Save received data as temporary table in SAP HANA
    temp_table_name = '#' + str(uuid.uuid1()).upper()
    df_remote_topredict = dataframe.create_dataframe_from_pandas(
        connection_context=conn,
        pandas_df=df_data,
        table_name=temp_table_name,
        force=True,
        drop_exist_tab=True,
        replace=False)

    # Create prediction based on the data in the temporary table
    df_predicted = ur_hgbt.predict(data=df_remote_topredict,
                                   key='CAR_ID').collect()
    prediction = df_predicted['SCORE'][0]

    # Delete the temporary table
    dbapi_cursor = conn.connection.cursor()
    dbapi_cursor.execute('DROP TABLE "' + temp_table_name + '"')

    # Send the prediction as response
    output = json.dumps({'price_estimate': round(prediction, 1)})
    return output

if __name__ == "__main__":
    # Establish initial connection to SAP HANA
    get_hanaconnection()

    print("Serving Started")
    app.run(host="0.0.0.0", debug=False, port=9001)
```

The Python dependencies for the Docker image are in the requirements.txt file. This file is similar to the one used in the previous blog, when the model was trained. However, note that the Flask package is now included to create the REST-API.

```text
Flask==2.0.1
hana_ml==2.17.23071400
shapely==1.8.0
jinja2==3.1.2
urllib3==1.26.16
requests==2.29.0
cryptography==39.0.1
IPython==8.14.0
```

The Dockerfile is identical to the one used for training the Machine Learning model. However, the Python code in main.py, which is copied onto the image, is of course very different.

```dockerfile
# Specify which base layers (default dependencies) to use
# You may find more base layers at https://hub.docker.com/
FROM python:3.9
#
# Creates directory within your Docker image
RUN mkdir -p /app/src/
#
# Copies file from your Local system TO path in Docker image
COPY main.py /app/src/
COPY requirements.txt /app/src/
#
# Installs dependencies within your Docker image
RUN pip3 install -r /app/src/requirements.txt
#
# Enable permission to execute anything inside the folder app
RUN chgrp -R 65534 /app && \
    chmod -R 777 /app
```

Build the Docker image.

```shell
docker build -t YOURUSERHERE/hana-ml-car-price-restapi-inference:01 .
```

Push the image to the Docker repository that is connected to SAP AI Core.

```shell
docker push YOURUSERHERE/hana-ml-car-price-restapi-inference:01
```

Now place this Argo ServingTemplate hana-ml-car-price-inference-rest-api.yaml into the Git repository that is connected to SAP AI Core. If the following values are identical to the ones used in the WorkflowTemplate (as used in the previous blog), then AI Core automatically places both templates into the same Scenario:

- scenarios.ai.sap.com/name

- scenarios.ai.sap.com/description

- scenarios.ai.sap.com/id

Note that the ServingTemplate's executables.ai.sap.com/name does not allow spaces in the name.

```yaml
apiVersion: ai.sap.com/v1alpha1
kind: ServingTemplate
metadata:
  name: i056450-hana-ml-car-price-inference-rest-api # Executable ID (max length 64 lowercase-hyphen-separated), please modify this to any value if you are not the only user of your SAP AI Core instance. Example: `first-pipeline-1234`
  annotations:
    scenarios.ai.sap.com/name: "HANA ML Car Price"
    scenarios.ai.sap.com/description: "Estimate the price of a use car with SAP HANA Machine Learning"
    executables.ai.sap.com/name: "HANAMLCarPriceInferenceRESTAPI" # No spaces allowed in the name here
    executables.ai.sap.com/description: "Create estimate"
  labels:
    scenarios.ai.sap.com/id: "hana-ml-car-price" # The scenario ID to which the serving template belongs
    ai.sap.com/version: "0.0.1"
spec:
  template:
    apiVersion: "serving.kserve.io/v1beta1"
    metadata:
      annotations: |
        autoscaling.knative.dev/metric: concurrency # condition when to scale
        autoscaling.knative.dev/target: 1
        autoscaling.knative.dev/targetBurstCapacity: 0
      labels: |
        ai.sap.com/resourcePlan: starter # computing power
    spec: |
      predictor:
        imagePullSecrets:
          - name: docker-registry-secret-XYZ # your docker registry secret
        minReplicas: 1
        maxReplicas: 2 # how much to scale
        containers:
        - name: kserve-container
          image: docker.io/YOURUSERHERE/hana-ml-car-price-restapi-inference:01 # Your docker image name
          ports:
            - containerPort: 9001 # customizable port
              protocol: TCP
          command: ["/bin/sh", "-c"]
          args:
            - "python /app/src/main.py"
          env:
            - name: HANA_ADDRESS # Name of the environment variable that will be available in the image
              valueFrom:
                secretKeyRef:
                  name: hanacred # Name of the generic secret created in SAP AI Core for the Resource Group
                  key: address # Name of the key from the JSON string that is saved as generic secret
            - name: HANA_PORT
              valueFrom:
                secretKeyRef:
                  name: hanacred
                  key: port
            - name: HANA_USER
              valueFrom:
                secretKeyRef:
                  name: hanacred
                  key: user
            - name: HANA_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: hanacred
                  key: password
```
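Before committing the template, it can help to check the two naming constraints mentioned in the template comments: the executable ID in metadata.name (at most 64 characters, lowercase and hyphen-separated) and the executables.ai.sap.com/name annotation (no spaces). The following is a minimal sketch of such a check. The function name `check_template_names` and the regular expression are assumptions made for illustration; SAP AI Core may enforce additional or slightly different rules.

```python
import re

# Assumed pattern for "lowercase-hyphen-separated" IDs. It only mirrors the
# comment in the template and is not taken from the SAP AI Core documentation.
_ID_PATTERN = re.compile(r"[a-z0-9]+(-[a-z0-9]+)*")


def check_template_names(executable_id: str, executable_name: str) -> list:
    """Return a list of problems found in the two names (empty if none)."""
    problems = []
    if len(executable_id) > 64:
        problems.append("metadata.name is longer than 64 characters")
    if not _ID_PATTERN.fullmatch(executable_id):
        problems.append("metadata.name is not lowercase-hyphen-separated")
    if any(ch.isspace() for ch in executable_name):
        problems.append("executables.ai.sap.com/name contains whitespace")
    return problems


# The values used in this blog pass the check and produce an empty list
print(check_template_names("i056450-hana-ml-car-price-inference-rest-api",
                           "HANAMLCarPriceInferenceRESTAPI"))
```

An empty list means that neither of the two constraints is violated. It does not guarantee that SAP AI Core accepts the template.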

SAP AI Core

With the above steps SAP AI Core now has everything that is required to create the REST-API. In SAP AI Core go into the application from the previous blog that trains the Machine Learning model. Hit "Sync" on that page so that the application picks up the new content. Then click the "Refresh" button and you should see the new ServingTemplate in addition to the existing WorkflowTemplate.

The scenario now shows both Executables.

Now create a configuration based on the Inference Executable.

Personally, I found it useful to create two configurations. Both have exactly the same settings, they just have different names. This will be useful later, when updating the REST-API. We will come to that.

Now create the actual REST-API that can be called to obtain predictions. Click into one of the two new Inference Configurations and hit "Create Deployment". Keep the Standard Duration, which means that the deployment is indefinite. It will keep running until you stop it.

Review the settings, then create the deployment. After a few minutes the status switches to "RUNNING" and it shows the URL of the REST-API. The code is deployed and ready to provide predictions.

Hint: If you want to update the code that is executed when the REST-API is called, you can follow the steps below. The deployment URL remains the same after the update. These steps are not required to follow this blog, but you might find them helpful whenever you want to change the code behind the REST-API.

- Update the code in the Python file (here main.py)

- Build a new Docker image, which includes that new Python file, and push the image to the Docker repository

- In SAP AI Core sync the application so that it becomes aware of the changes (after the Sync hit the "Refresh" button to update the display)

- Go into the Deployment that is still running and click "Update". You can then replace the Configuration of the current deployment with another Configuration, so that the deployment switches to the new code. A running deployment cannot be updated with the Configuration it is already using. Hence, earlier on in the blog, two identical configurations were created with different names. You can switch between them to update the Deployment every time you have a change in the code.
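The alternation between the two identical configurations can be sketched as a tiny helper that returns the configuration to switch to, given the one the deployment currently uses. This is only an illustration of the bookkeeping; the update itself is still done in the SAP AI Core user interface as described above. The function name and the two configuration names are hypothetical.

```python
def next_configuration(current: str, configurations: tuple) -> str:
    """Return the configuration of the pair that is not currently deployed."""
    first, second = configurations
    if current == first:
        return second
    if current == second:
        return first
    raise ValueError(f"Unknown configuration: {current}")


# Hypothetical names of the two identical configurations
pair = ("car-price-inference-a", "car-price-inference-b")

# If the deployment currently runs with the first one, update it to the second
print(next_configuration("car-price-inference-a", pair))
```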

Realtime inference

Any program that can call a REST-API can now obtain a prediction from the deployment. We use a Jupyter Notebook here to test this out.

When calling that REST-API you need to provide a Bearer token to authenticate. That token can be created by the Python program by using a few values from your SAP AI Core instance's Service Key. This key can be downloaded from the SAP BTP Cockpit. You might need to ask your Administrator to create that key for you.

In this example the Service Key is saved as the file AICoreServiceKey.json, which we load in the Python code to access the values that are needed to create the Bearer token.

```python
import json

service_key_location = "AICoreServiceKey.json"
with open(service_key_location, "r") as file_read:
    config = json.load(file_read)

uua_url = config['url']
clientid = config["clientid"]
clientsecret = config["clientsecret"]
```

Create the Bearer token.

```python
import requests

params = {"grant_type": "client_credentials"}
resp = requests.post(f"{uua_url}/oauth/token",
                     auth=(clientid, clientsecret),
                     params=params)
token = resp.json()["access_token"]
```

Specify the Deployment URL of the running Inference Workflow. That is the URL SAP AI Core showed when the deployment went into "RUNNING" status. Also append the endpoint /v1/predict as defined in the Python program.

```python
inference_url = 'YOURDEPLOYMENTURLHERE' + '/v1/predict'
```

Create the header for calling the REST-API, which passes the Bearer token. You also need to specify the name of the Resource Group in which the deployment is running on SAP AI Core. There is no need to create a new Resource Group. Just enter the name of the Resource Group in which you created the deployment.

```python
headers = {'Content-Type': 'application/json',
           'AI-Resource-Group': 'YOURRESOURCEGROUPHERE',
           'Authorization': f'Bearer {token}'}
```

Specify the details of the vehicle whose price you want to estimate.

```python
payload = json.dumps({
    "CAR_ID": 1,
    "BRAND": "audi",
    "MODEL": "a5",
    "VEHICLETYPE": "coupe",
    "YEAROFREGISTRATION": 2016,
    "HP": 120,
    "FUELTYPE": "benzin",
    "GEARBOX": "manuell",
    "KILOMETER": 100000
})
```

Finally, piece everything together and call the Deployment URL.

```python
response = requests.request("POST", inference_url, headers=headers, data=payload)
if response.status_code == 200:
    print(response.text)
else:
    print('Error. Status code: ' + str(response.status_code))
```

The REST-API should respond with a price estimate for the car that you have provided. This particular car is estimated to cost € 14.877,20, based on prices at the time the training data was collected.

You can experiment with different car specifications. If the car's mileage is only 50.000 kilometers, for example (instead of the 100.000 above), the price rises to € 16.447,40.
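To try several specifications without retyping the whole payload, the request body can be built from a base car plus a few overrides. This is a minimal sketch: the helper names `build_payload` and `parse_price` are hypothetical, and the response format `{"price_estimate": ...}` is the one returned by main.py in this blog.

```python
import json

# Base specification, taken from the example in this blog
BASE_CAR = {
    "CAR_ID": 1,
    "BRAND": "audi",
    "MODEL": "a5",
    "VEHICLETYPE": "coupe",
    "YEAROFREGISTRATION": 2016,
    "HP": 120,
    "FUELTYPE": "benzin",
    "GEARBOX": "manuell",
    "KILOMETER": 100000,
}


def build_payload(base: dict, **overrides) -> str:
    """Return the JSON request body for a car, with selected fields replaced."""
    car = {**base, **overrides}  # copy, so the base specification stays unchanged
    return json.dumps(car)


def parse_price(response_text: str) -> float:
    """Extract the estimate from the JSON text returned by the REST-API."""
    return float(json.loads(response_text)["price_estimate"])


# Same car, but with only 50.000 kilometers
payload_50k = build_payload(BASE_CAR, KILOMETER=50000)
print(payload_50k)
```

The resulting string can be passed as the data argument of the POST request, and the text of a successful response can be handed to `parse_price` to get the estimate as a number.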

This completes the scenario. You can now use SAP AI Core to:

- train a Machine Learning model in SAP HANA Cloud

- schedule that training process to keep the Machine Learning model up to date

- create a REST-API to integrate realtime-predictions into your business application

Happy predicting!
