
- SAP Data Intelligence | How to get headers of CDS ...


02-15-2021

3:07 PM

In my previous post I promised to show you how to combine the ABAP CDS Reader with a Python operator. Here we are. You can use the approach below for the SLT and ODP operators too, because the JSON output of these operators is the same. In this blog post we are going to extract a standard CDS view from SAP S/4HANA Cloud into the SAP Data Intelligence Data Lake. Most importantly, we want to see headers in the file. This topic is more complicated than the first one, but I'm sure you will get it 😊

Why did I pick this topic? Firstly, I know that some colleagues have run into this problem. Secondly, there isn't a standard solution for it at the moment. Moreover, this approach will help you select columns or do some data preparation if needed. So, let's go!

To extract the CDS view from SAP S/4HANA Cloud we are going to use the ABAP CDS Reader operator in DI. It is a standard operator used to read data from a CDS view. There is a standard template, "CDS View to File", used to extract data from a CDS view into a CSV file (located in the Data Lake in DI):

Problem description:

However, with this pipeline you will not see any headers in the file. It's bad…very bad ☹

There are three versions of the ABAP CDS Reader operator. The main difference between them is the output data type. Whichever version you use, the headers are still missing from the file. That is the problem we are going to solve in this post.

Solution:

Of these three versions, only ABAP CDS Reader V2 includes information about the fields in its output, so we are going to use this version. The output data type of this operator is message. A message has a body and attributes: the body carries the data, and the attributes carry information about the fields, the last batch, and so on (see image below). Because there is no standard solution for getting the headers, we must use a custom operator. In this post I'm going to use a custom Python operator. If you don't know what that is, check my previous blog post.

Below is an example of what the attributes look like (I_COSTCENTERTEXT view):
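In code terms, the attributes behave like a nested dictionary. The sketch below is only an illustration: `sample_attributes`, the helper `field_names`, and the three field names are placeholders I made up, not real operator output. The only part taken from this post is the path 'ABAP' -> 'Fields' -> 'Name'.

```python
# Hypothetical attributes of one message. Only the ABAP -> Fields -> Name
# path comes from the post; the field names are illustrative placeholders.
sample_attributes = {
    "ABAP": {
        "Fields": [
            {"Name": "COSTCENTER"},
            {"Name": "LANGUAGE"},
            {"Name": "COSTCENTERNAME"},
        ]
    }
}


def field_names(attributes):
    """Return the column names listed under ABAP -> Fields -> Name."""
    return [field["Name"] for field in attributes["ABAP"]["Fields"]]


print(field_names(sample_attributes))
```

The real attributes contain more keys than shown here, so treat this as a reading aid for the structure rather than a copy of it.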

I'm going to read the headers from 'ABAP' -> 'Fields' -> 'Name'. The pipeline looks like this:

I'm not going to go through the back-end setup; we will concentrate on Data Intelligence. Let's create our Python operator:

Step 1 – Create input and output ports for the Python operator

Input port: name – inData, type – message

Output port: name – outString, type – string

Step 2 – Add some Python magic to the "Script"

    from io import StringIO
    import json

    import pandas as pd


    def on_input(inData):
        # read the body (CSV records without a header row)
        data = StringIO(inData.body)

        # read the attributes
        var = json.dumps(inData.attributes)
        result = json.loads(var)

        # from here we start the JSON parsing
        ABAP = result['ABAP']
        Fields = ABAP['Fields']

        # create an empty list for the headers
        columns = []
        for item in Fields:
            columns.append(item['Name'])

        # map the data to the headers and load it into a pandas DataFrame
        df = pd.read_csv(data, index_col=False, names=columns)

        # here you can prepare your data,
        # e.g. column selection or record filtering
        df_csv = df.to_csv(index=False, header=True)
        api.send('outString', df_csv)


    # "api" is provided by the Data Intelligence Python operator runtime
    api.set_port_callback('inData', on_input)

Note that you should take care of data types when using pandas in Python; I don't handle them in the code. For example, pandas infers column types by default, so key fields with leading zeros can lose them unless you pass `dtype=str` to `pd.read_csv`. Also, don't forget about the last batch if your use case needs it.

Be aware that the `on_input` function is executed for each data package. This approach is therefore suitable for replication/delta mode: in the initial load, the JSON of the attributes looks different after the last package, so parsing no longer works and the pipeline fails after the last package. The code can be adapted for the initial load with only a couple of lines; take it as an exercise 😊 This approach also keeps working if the structure of the CDS view changes, because the headers are read from the attributes of each message. And, of course, this code can be optimized.
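One possible direction for the exercise, as a hedged sketch: check whether the field list is present before parsing, and skip the message if it is not. I don't show the exact shape of the attributes after the last package in this post, so the check below simply assumes that 'ABAP' -> 'Fields' is missing or empty in that case. The helper names `field_names_or_none` and `add_header` are made up for illustration. The sketch also swaps pandas for the standard `csv` module, which keeps every value as text and so sidesteps type inference.

```python
import csv
from io import StringIO


def field_names_or_none(attributes):
    """Return the header names, or None if no field list is present."""
    abap = (attributes or {}).get("ABAP") or {}
    fields = abap.get("Fields")
    if not fields:
        return None
    return [field["Name"] for field in fields]


def add_header(body, attributes):
    """Prepend a header row to a CSV body; return None if that is not possible."""
    columns = field_names_or_none(attributes)
    if columns is None or not body:
        # Assumption: a message without field metadata or data is skipped.
        return None
    rows = list(csv.reader(StringIO(body)))
    out = StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(columns)
    # Values are written back as the strings they were read as,
    # so a key such as "0001" keeps its leading zeros.
    writer.writerows(rows)
    return out.getvalue()
```

Inside the operator, `on_input` would call `add_header(inData.body, inData.attributes)` and call `api.send('outString', ...)` only when the result is not `None`.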

Step 3 – Upload a cool icon for your Python operator and save it.

Configure the "ABAP CDS Reader V2" and "Write to File" operators, then save and run your pipeline. You are almost done 😊

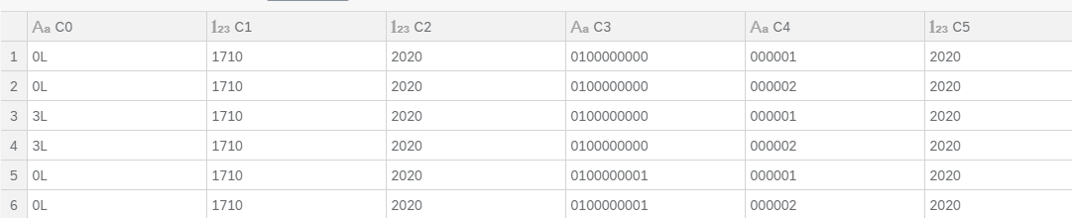

Your output with the headers:

Congratulations! From now on, you should not have any trouble with CDS view headers 😊 If you have any questions or ideas for the next blog post, feel free to let me know in the comments. Stay tuned!

How to connect SAP S/4HANA with SAP Data Intelligence:

Integrate SAP Data Intelligence and S/4HANA Cloud to utilise CDS Views – Part 1

How To Integrate SAP Data Intelligence and SAP S/4HANA AnyPremise
