Bring Open-Source LLMs into SAP AI Core

The open-source community surrounding Large Language Models (LLMs) is evolving rapidly, with new models (model architectures), backends (inference engines), libraries, and tooling constantly emerging. These developments make it possible to run LLMs locally or in self-hosted environments. SAP AI Core is a service in the SAP Business Technology Platform designed to handle the execution and operations of your AI assets in a standardized, scalable, and hyperscaler-agnostic way. In this blog post series, we will explore various options for running popular open-source LLMs such as Mistral, Mixtral, LLaVA, Gemma, and Llama 2 in SAP AI Core, which complements SAP Generative AI Hub with self-hosted open-source LLMs. We'll use widely adopted open-source LLM tools or backends such as Ollama, LocalAI, llama.cpp, and vLLM through the BYOM (Bring Your Own Model) approach. The source code of the AI Core sample app byom-oss-llm-ai-core used in this series is delivered as an SAP example under the Apache 2.0 license. It is not part of any SAP product.

Blog post series: Bring Open-Source LLMs into SAP AI Core

- Part 1 – Bring Open-Source LLMs into SAP AI Core: Overview (this blog post)

- Part 2 – Bring Open-Source LLMs into SAP AI Core with Ollama (to be published)

- Part 3 – Bring Open-Source LLMs into SAP AI Core with LocalAI (to be published)

- Part 4 – Bring Open-Source LLMs into SAP AI Core with llama.cpp (to be published)

- Part 5 – Bring Open-Source LLMs into SAP AI Core with vLLM (to be published)

Note: You can try out the AI Core sample app byom-oss-llm-ai-core by following its manual, which covers all the technical details. The following blog posts will just wrap up the technical details of each option.

We'll begin the series with an overview of bringing open-source LLMs into SAP AI Core.

Overview

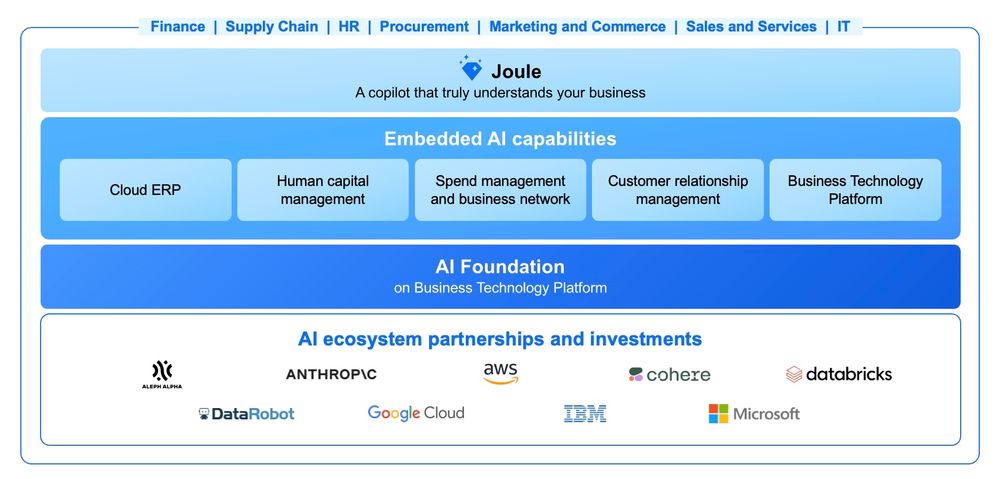

The SAP Business AI strategy aims to infuse SAP business applications with intelligence by integrating cutting-edge AI technologies. An essential component of this strategy is fostering partnerships and investments within the AI ecosystem. In addition to collaboration with proprietary AI vendors, as illustrated in this diagram, commercially viable open-source foundation models are also supported through the BYOM (Bring Your Own Model) approach, in which SAP AI Core serves as a state-of-the-art, enterprise-ready AI Infrastructure as a Service for deploying open-source LLMs and running inference on them. Notably, an open-source foundation model (falcon-40b) is already available in the SAP Generative AI Hub within SAP AI Core.

On the flip side, within the open-source LLM community, an increasing number of high-performing models such as Mistral, Mixtral, Gemma, LLaVA, and Llama 2 are emerging. These models have the potential to serve as drop-in replacements for GPT-3.5 in certain use cases.

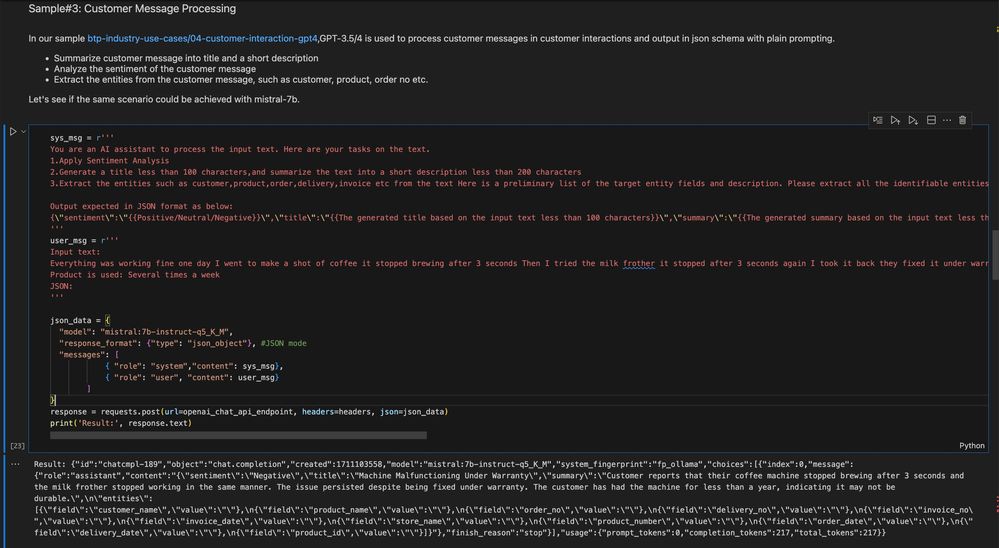

Sample#1: Customer Message Processing in Intelligent Customer Interaction use case

In our Intelligent Customer Interaction sample use case, we used GPT-3.5 to process the customer message. Details of this implementation can be found in our blog post Integrate with GPT Chat API for Advanced Text Processing. This task can now potentially be accomplished using Mistral-7b with comparable results.

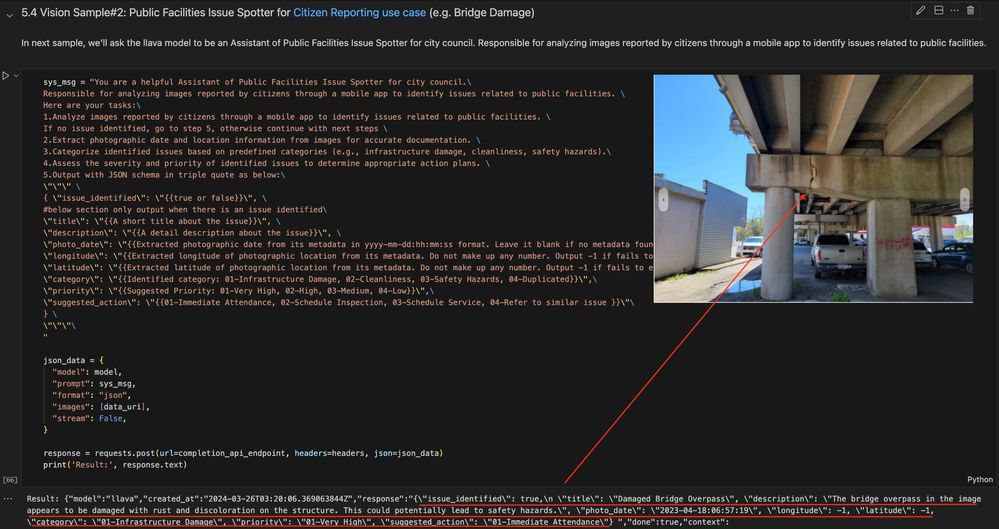

Sample#2: Public Facilities Issue Spotter for Citizen Reporting use case

We can enhance the citizen reporting use case with the LLaVA model's vision capability by automatically analyzing images and identifying public facility issues. This simplifies the user experience, allowing users to report an issue simply by taking and uploading a photo through the app. The same use case can also be achieved with GPT-4V once it is available in SAP Generative AI Hub.

These are just a few samples of what open-source LLMs can do in a business context. Next, let's look at the rationale for running open-source LLMs with SAP AI Core.

Why run open-source LLMs with SAP AI Core?

Data Protection & Privacy: Ensure compliance with stringent data protection and privacy regulations by leveraging SAP AI Core's robust security measures. This matters particularly where strict data protection and privacy policies and regulations prohibit the transmission of data to external proprietary LLMs. In such contexts, a self-hosted LLM in SAP AI Core complements SAP Generative AI Hub.

Security: Safeguard your data and applications with state-of-the-art security protocols and infrastructure provided by SAP AI Core.

Cost-Effectiveness: Benefit from cost-effective solutions without compromising on quality or security. SAP AI Core offers efficient resource allocation (GPU) and dynamic scalability.

Flexibility of Choices: Enjoy the flexibility to choose from a variety of open-source LLMs and LLM backends, tailored to your specific business needs and requirements.

Making Open-Sourced LLMs Enterprise Ready: Elevate open-source LLMs to enterprise-level standards by integrating them seamlessly with SAP AI Core, ensuring reliability, scalability, and performance.

How to bring open-source LLMs into SAP AI Core?

Now, let's go straight to the most interesting part: bringing open-source LLMs into SAP AI Core through the BYOM approach. Let's start by explaining some basic concepts around LLMs.

Basic concepts:

- LLM vs LMM

  - Large Language Model (LLM): A type of machine learning model designed to process and generate human-like text, for instance GPT-3.5 or Mistral.

  - Large Multi-modality Model (LMM): A more versatile type of machine learning model that can handle multiple modalities of data, such as text, images, audio, and video. These models are designed to process and generate content across different media formats, e.g. GPT-4V or LLaVA.

- Model Architecture, Model Family and Models

  - Model Architecture: Refers to the structural design and organization of the neural network used for natural language processing tasks.

  - Model Family: Refers to a group of related LLMs that share similar architectural principles, training methodologies, and objectives. For instance, Llama 2 is a model family.

  - Models: Refers to individual instances or versions within a model family, each with its own specific architecture, parameters, and trained weights. For example, the models Llama2-7b, Llama2-13b, and Llama2-70b belong to the model family Llama 2.

- Non-Quantization vs Quantization: A trade-off between efficiency and accuracy for model inference

  - Non-quantization, also referred to as full-precision or floating-point representation, stores the model parameters as floating-point numbers (e.g., 32-bit or 16-bit). This maintains the model's highest possible accuracy but requires much more compute and memory.

  - Quantization is the process of reducing the precision of the parameters/weights in AI models from floating-point numbers (typically 32-bit or 16-bit) to a lower bit precision, such as 8-bit integers or even lower. This reduces the memory footprint and computational requirements of the model at the cost of some accuracy. In this sample, we test quantized models, such as Q5_K_M and AWQ variants, which strike a balance between model accuracy and inference efficiency.

- LLM Backend or Inference Engine: Refers to the computational framework or infrastructure responsible for executing inference tasks with the trained LLM. For example, Hugging Face Transformers, llama.cpp, and vLLM each support inference for multiple models with different model architectures.

- Model Hub: Refers to a centralized repository or platform where pre-trained LLM models are stored, managed, and made accessible to users for model inference tasks. The Model Hub serves as a resource hub for developers, researchers, and practitioners interested in leveraging pre-trained LLM models for their applications. Hugging Face is a great example of Model Hub.
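To make the quantization trade-off concrete, here is a minimal sketch in plain Python. It uses simple symmetric 8-bit rounding, which is an illustrative stand-in and not the scheme used by Q5_K_M or AWQ (those are block-wise or activation-aware and more sophisticated). The sample weights and the round figure of 7e9 parameters are hypothetical values chosen for illustration.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to signed 8-bit integers."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate floating-point values."""
    return [q * scale for q in quantized]


def weight_footprint_gb(num_parameters, bits_per_weight):
    """Approximate size of the weights alone, in gigabytes (10**9 bytes)."""
    return num_parameters * bits_per_weight / 8 / 1e9


# Hypothetical weights, only to show the round trip.
weights = [0.42, -1.27, 0.03, 0.88, -0.51]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(quantized)
print(f"largest round-trip error: {max_error:.4f}")
for bits in (16, 8, 4):
    print(f"7e9 parameters at {bits}-bit: {weight_footprint_gb(7e9, bits):.1f} GB")
```

The round-trip error is bounded by half the scale, which is the accuracy you give up; the footprint shrinks in proportion to the bit width, which is the efficiency you gain. Real model files also carry metadata and per-block scales, so actual file sizes differ somewhat from this estimate.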

Solution Architecture:

In this sample app for bringing open-source LLMs into SAP AI Core, I have implemented four options (Ollama, LocalAI, llama.cpp, and vLLM) that let you bring your preferred LLMs into SAP AI Core through configuration and expose them via OpenAI-compatible APIs. Some options (Ollama and LocalAI) even enable you to download and switch models efficiently and dynamically at run-time.
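As a rough sketch of what calling such an OpenAI-compatible deployment could look like from Python, the snippet below assembles a chat completion request using only the standard library. Several things here are assumptions rather than details taken from the sample app: the `/v1/chat/completions` path (the usual OpenAI convention), the `Authorization` and `AI-Resource-Group` headers for SAP AI Core inference, the placeholder deployment URL, and the model name. Please check the manual of the sample app for the exact paths of each option.

```python
import json
import urllib.request


def build_chat_request(deployment_url, token, resource_group, model, user_message):
    """Assemble URL, headers and JSON body for an OpenAI-style chat completion."""
    url = deployment_url.rstrip("/") + "/v1/chat/completions"  # assumed path
    headers = {
        "Authorization": f"Bearer {token}",
        "AI-Resource-Group": resource_group,  # assumed AI Core header
        "Content-Type": "application/json",
    }
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return url, headers, body


def send_chat_request(url, headers, body, timeout=60):
    """POST the request and return the first choice's text (needs a live deployment)."""
    request = urllib.request.Request(
        url, data=json.dumps(body).encode("utf-8"), headers=headers, method="POST"
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


url, headers, body = build_chat_request(
    deployment_url="https://example.invalid/v2/inference/deployments/d123",  # placeholder
    token="<access-token>",  # placeholder
    resource_group="default",
    model="mistral",  # hypothetical model name
    user_message="Write a haiku about running Ollama in SAP AI Core.",
)
print(url)
print(json.dumps(body, indent=2))
```

Only `build_chat_request` runs here; `send_chat_request` is included to show the remaining step and requires a running deployment and a valid token.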

In principle, there are three essential parts for bringing an open-source LLM/LMM into SAP AI Core:

- Commercially viable open-source or open-weight models: e.g. Mistral, Mixtral, LLaVA.

- Publicly accessible model hub: For instance, the Ollama Model Library tailored for Ollama, or Hugging Face as a general-purpose model repository.

- Custom LLM inference server in SAP AI Core: You can bring your own code to implement an inference server. For example, the blog post Running Language Models – Deploy Llama2 7b on AI Core by felixbartler shows a great sample of a custom inference server built with Hugging Face Transformers. Alternatively, there are open-source, ready-to-use LLM inference servers that can be reused in SAP AI Core, such as Ollama, LocalAI, llama.cpp, and vLLM. They need only minimal custom code: a custom Dockerfile and a configurable serving template adapted for SAP AI Core. Ollama is recommended for its simplicity and efficiency.

Why leverage Ollama, LocalAI, llama.cpp and vLLM as LLM inference servers within SAP AI Core?

Ollama, LocalAI, llama.cpp and vLLM offer a comprehensive solution for running Large Language Models (LLMs) locally or in self-hosted environments. Their full stack capabilities include:

- Model Management: Dynamically pull or download LLMs from a model repository through an API at run-time (exclusive to Ollama and LocalAI; vLLM provides seamless integration with Hugging Face models).

- GPU Acceleration: Run LLMs efficiently with GPU acceleration in SAP AI Core using open-source backends such as llama.cpp, vLLM, Hugging Face Transformers, and exllama.

- Serving: Built-in OpenAI-compatible chat completion and embedding APIs, exactly the same APIs as in SAP Generative AI Hub.

- Easy deployment and setup: Minimal custom code is needed in SAP AI Core, just a custom Dockerfile and a configurable serving template adapted for SAP AI Core.

- Commercial viability: They are all released under the MIT or Apache 2.0 license.

Let's take a close look at each option with respect to the aspects listed above.

Ollama vs LocalAI in context of SAP AI Core

| | Ollama | LocalAI |
| --- | --- | --- |
| Description | "Ollama: Get up and running with Llama 2, Mistral, Gemma, and other large language models." | "LocalAI is the free, Open Source OpenAI alternative. LocalAI act as a drop-in replacement REST API that’s compatible with OpenAI API specifications for local inferencing..." |
| Recommendation | Recommended if you only need LLM/LMM inference in SAP AI Core. See its AI capabilities below for details. | Recommended if speech recognition, speech generation, and image generation are also required in addition to LLMs/LMMs. |
| AI Capabilities | Text generation, vision, text embedding | Text generation, vision, text embedding, speech to text, text to speech, image generation |
| Installation & Setup | Easy installation and setup | Make sure to use the corresponding Docker image, or build with the right variables, for GPU acceleration. |
| GPU Acceleration | Automatically detects and applies GPU acceleration | Supported. Requires additional configuration per model, alongside a GPU-enabled Docker image. |
| Model Management | Built-in model management through CLI commands or APIs | Experimental model gallery. May require additional configuration for GPU acceleration per model. |
| Supported Backends | llama.cpp | Multi-backend and backend-agnostic. The default backend is llama.cpp; extra backends such as vLLM, rwkv, Hugging Face Transformers, bert, and whisper.cpp are also supported. Please check its model compatibility table for details. |
| Supported Models | Built-in Model Library | Experimental Model Gallery |
| Model Switching | Seamless model switching with automatic memory management | Supported |
| APIs | Model management API, OpenAI-compatible chat/completion API, embedding API | Model management API, OpenAI-compatible chat/completion API, embedding API |
| Model Customization | Supported | Supported |
| License | MIT | MIT |
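To illustrate what run-time model management and model switching mean in practice for Ollama, here is a small sketch that only builds the request payloads. It assumes Ollama's native `POST /api/pull` endpoint with a `name` field, and it assumes that a model is selected per request through the `model` field of the chat body. The base URL is a placeholder, and the path under which the sample app exposes these endpoints in SAP AI Core may differ.

```python
import json


def build_pull_request(base_url, model_name):
    """Request asking an Ollama server to download a model from its library."""
    return {
        "method": "POST",
        "url": base_url.rstrip("/") + "/api/pull",  # assumed Ollama endpoint
        "body": {"name": model_name, "stream": False},
    }


def build_chat_body(model_name, prompt):
    """Chat body; the `model` field decides which model serves this request."""
    return {
        "model": model_name,
        "messages": [{"role": "user", "content": prompt}],
    }


base_url = "https://example.invalid/deployment"  # placeholder

# Pull a second model at run-time, then address either model per request.
pull = build_pull_request(base_url, "llava:7b-v1.6")
text_chat = build_chat_body("mistral:7b-instruct-q5_K_M", "Summarize this customer message.")
vision_chat = build_chat_body("llava:7b-v1.6", "Describe the uploaded photo.")

print(json.dumps(pull, indent=2))
print(text_chat["model"], "->", vision_chat["model"])
```

The point of the sketch is that no redeployment is involved: one deployment can serve several models, and the server decides which ones to keep in memory.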

llama.cpp vs vLLM in context of SAP AI Core

| | llama.cpp | vLLM |
| --- | --- | --- |
| Description | "The main goal of llama.cpp is to enable LLM inference with minimal setup and state-of-the-art performance on a wide variety of hardware - locally and in the cloud." | "A high-throughput and memory-efficient inference and serving engine for LLMs" |
| AI Capabilities | Text generation, vision, text embedding | Text generation, vision, text embedding |
| Deployment & Setup | Easy deployment via Docker. Many arguments to explore when starting the llama.cpp server. | Easy deployment via Docker. Many engine arguments when starting vllm.entrypoints.openai.api_server. |
| GPU Acceleration | Supported | Supported |
| Model Management | Not supported. An external tool (wget etc.) is needed to download models from Hugging Face. | Seamless integration with popular Hugging Face models |
| Supported Quantization | 1.5-bit, 2-bit, 3-bit, 4-bit, 5-bit, 6-bit, and 8-bit integer quantization | GPTQ, AWQ, SqueezeLLM, FP8 KV Cache |
| Supported Models | https://github.com/ggerganov/llama.cpp > Supported models | vLLM documentation > Supported Models |
| Model Switching | Not supported. One deployment for one model. | Not supported. One deployment for one model. |
| APIs | OpenAI-compatible chat/completion API, embedding API | OpenAI-compatible chat/completion API, embedding API |
| License | MIT | Apache-2.0 |

In this sample, I chose the llama.cpp and vLLM servers for their popularity. The same approach is also applicable to llama-cpp-python's OpenAI-compatible web server, LLaVA's API server, and similar servers.

Test Summary

I have conducted some tests on three open-source models (mistral-7b, mixtral, llava-7b) within SAP AI Core, covering:

- chain of thought,

- general Q&A,

- writing a haiku about running Ollama etc. in AI Core,

- customer message processing in intelligent customer interaction (JSON mode),

- public facility issue spotter in citizen reporting (LLaVA vision with JSON mode).
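For the last test case, a request combining vision input with JSON mode could be sketched as follows. This follows the OpenAI chat format (an `image_url` content part carrying a base64 data URI, plus `response_format` set to `json_object`). Whether a given backend honors both fields is an assumption you should verify for your option; the instruction text and the sample reply are hypothetical.

```python
import base64
import json


def build_vision_json_body(model, instruction, image_bytes, mime_type="image/jpeg"):
    """OpenAI-style chat body with one text part, one image part, and JSON mode."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "response_format": {"type": "json_object"},  # assumed to be supported
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": instruction},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                    },
                ],
            }
        ],
    }


def parse_json_reply(reply_text):
    """Return the parsed object, or None when the reply is not a JSON object."""
    try:
        parsed = json.loads(reply_text)
    except json.JSONDecodeError:
        return None
    return parsed if isinstance(parsed, dict) else None


body = build_vision_json_body(
    model="llava:7b-v1.6",
    instruction="Identify the public facility issue in this photo. Answer in JSON.",
    image_bytes=b"\xff\xd8\xff",  # stand-in bytes, not a real photo
)
print(body["messages"][0]["content"][1]["image_url"]["url"])
print(parse_json_reply('{"issue": "broken street light"}'))  # hypothetical reply
print(parse_json_reply("Sorry, I cannot see the image."))
```

Even with JSON mode enabled, it is worth parsing defensively as in `parse_json_reply`, since smaller models occasionally return plain text.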

Overall, the quality of text generation was good enough in my test cases.

Let's look at the generation speed, measured as tokens generated per second, in my test cases. Since Ollama, LocalAI, and llama.cpp all use llama.cpp as their backend, their results are almost identical.
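The metric itself is simple arithmetic: generated tokens divided by wall-clock seconds. A minimal sketch is shown below; in practice the token count could come from the `usage.completion_tokens` field of an OpenAI-compatible response, assuming the backend populates it. The numbers in the example are hypothetical, chosen only to fall within the ranges reported in this post.

```python
def tokens_per_second(completion_tokens, elapsed_seconds):
    """Generation speed as generated tokens divided by wall-clock seconds."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return completion_tokens / elapsed_seconds


def estimated_seconds(expected_tokens, rate):
    """Rough generation time for a reply of `expected_tokens` at a given rate."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    return expected_tokens / rate


# Hypothetical measurement: 350 tokens generated in 10 seconds.
rate = tokens_per_second(350, 10.0)
print(f"{rate:.1f} tokens/s")

# Time for a hypothetical 300-token reply at 30 and 40 tokens per second.
for r in (30, 40):
    print(f"{r} tokens/s -> {estimated_seconds(300, r):.1f} s")
```

Note that this ignores prompt processing time and network latency, so end-to-end response times are somewhat longer than the pure generation estimate.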

Important note: Due to the limited number of my test cases, the test results may not be accurate or objective, and other use cases may yield different results. When choosing an open-source LLM and its backend, you should conduct tests based on your own use cases.

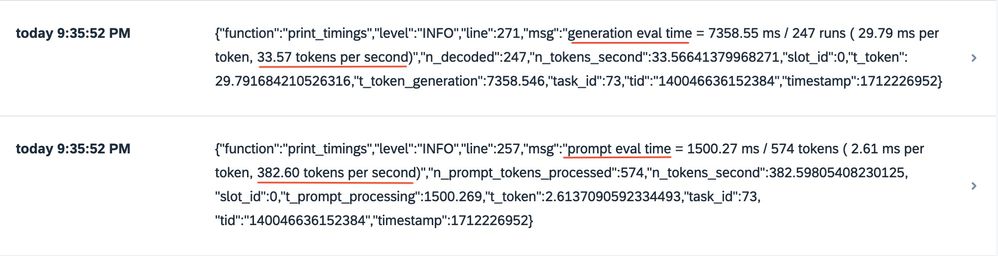

Mistral-7b on Ollama, LocalAI and llama.cpp

| Model: mistral:7b-instruct-q5_K_M (5.1 G) | Resource Plan of SAP AI Core: infer.s |
| --- | --- |
| Ollama, LocalAI and llama.cpp | Avg token generation per second: 30~40. Response time less than 10 s in all test cases. |

Here is a sample log screenshot from SAP AI Core showing tokens per second for Mistral on Ollama, LocalAI and llama.cpp.

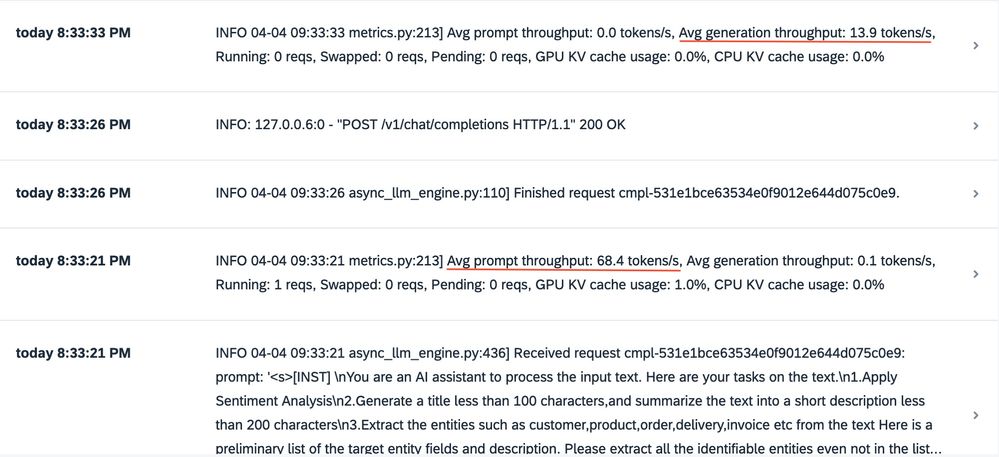

Mistral-7b on vLLM

| Model: Mistral-7B-Instruct-v0.2-AWQ (4.15 G) | Resource Plan of SAP AI Core: infer.s |
| --- | --- |
| vLLM with the following engine arguments: --dtype half --gpu-memory-utilization 0.95 --enforce-eager --max-model-len 2048 --max-num-batched-tokens 2048 --max-num-seqs 2048 --quantization awq | Avg token generation per second: 40~50. Encountered the error "cuda out of memory" with max-model-len etc. set to 4096 or beyond. |
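For reference, the engine arguments above can be assembled into a launch command as sketched below. The flags are the ones listed in the table and the entrypoint module is vllm.entrypoints.openai.api_server as mentioned earlier; the `--model` value is a placeholder based on the model name in this test, so replace it with the full model identifier or path you actually deploy. The sketch only builds and prints the command and does not start vLLM.

```python
import shlex


def build_vllm_command(model, max_len=2048, quantization="awq"):
    """Argument list for starting vLLM's OpenAI-compatible API server."""
    return [
        "python", "-m", "vllm.entrypoints.openai.api_server",
        "--model", model,
        "--dtype", "half",
        "--gpu-memory-utilization", "0.95",
        "--enforce-eager",
        "--max-model-len", str(max_len),
        "--max-num-batched-tokens", str(max_len),
        "--max-num-seqs", str(max_len),
        "--quantization", quantization,
    ]


# Placeholder model name; use the full repository id or a local path.
command = build_vllm_command("Mistral-7B-Instruct-v0.2-AWQ")
print(shlex.join(command))
```

Keeping the context length in one parameter makes it easy to lower it (for example to 512) when the GPU runs out of memory, as attempted in the Mixtral test further below.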

Here is a sample log screenshot from SAP AI Core showing tokens per second for Mistral on vLLM. The average generation throughput reported by vLLM in the screenshot below appears to be calculated differently from that of the llama.cpp backend. In fact, vLLM's response time was slightly faster than Ollama's for the same task in my test cases. The response time may also be attributable to its quantization method.

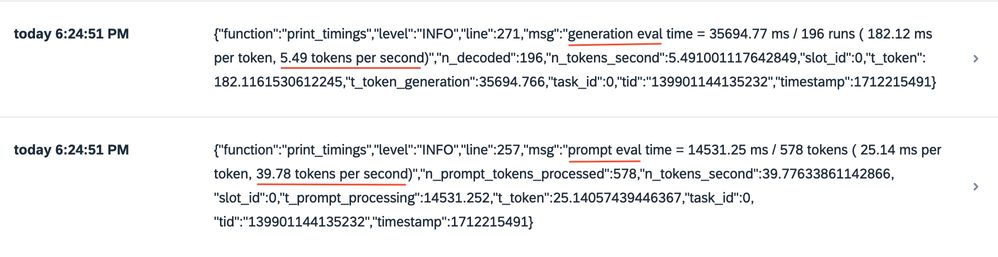

Mixtral on Ollama, LocalAI and llama.cpp

| Model: mixtral:8x7b-instruct-v0.1-q4_0 (26 G) | Resource Plan of SAP AI Core: infer.l |
| --- | --- |
| Ollama, LocalAI and llama.cpp | Avg token generation per second: 4~6. Response time takes up to 60 s for the customer message processing use case. This seems to be due to insufficient GPU VRAM: resource plan infer.l has only 1 T4 GPU (16 G VRAM), though it has 58 G RAM. Only 17 of the 33 layers in Mixtral are offloaded to the GPU. |
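A back-of-the-envelope estimate helps explain why only part of the model lands on the GPU. The sketch below assumes that all layers are roughly the same size and that some VRAM is reserved for the KV cache, CUDA context, and buffers; the 2 G reservation is my own assumption, not a measured value, and the actual offload decision is made by the backend.

```python
import math


def layers_that_fit(model_size_gb, total_layers, vram_gb, reserved_gb):
    """Estimate how many equally sized layers fit into the usable GPU memory."""
    per_layer_gb = model_size_gb / total_layers
    usable_gb = max(0.0, vram_gb - reserved_gb)
    return min(total_layers, math.floor(usable_gb / per_layer_gb))


# Figures from the test above: 26 G model, 33 layers, one T4 with 16 G VRAM.
# The 2 G reservation is an assumption made for this estimate.
mixtral_layers = layers_that_fit(model_size_gb=26, total_layers=33, vram_gb=16, reserved_gb=2.0)
mistral_layers = layers_that_fit(model_size_gb=5.1, total_layers=33, vram_gb=16, reserved_gb=2.0)

print(f"Mixtral layers on GPU: {mixtral_layers} of 33")
print(f"5.1 G model layers on GPU: {mistral_layers} of 33")
```

Under these assumptions the estimate for Mixtral comes out at 17 layers, consistent with what the log reported, while a 5.1 G model fits entirely. The remaining layers run on the CPU, which is the likely reason for the much lower token rate.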

Here is a sample log screenshot from SAP AI Core showing tokens per second for Mixtral on Ollama, LocalAI and llama.cpp.

Mixtral on vLLM

| Model: Mixtral-8x7B-Instruct-v0.1-AWQ (24.65 G) | Resource Plan of SAP AI Core: infer.l |
| --- | --- |
| vLLM with the following engine arguments: --dtype half --gpu-memory-utilization 0.95 --enforce-eager --max-model-len 512 --max-num-batched-tokens 512 --max-num-seqs 512 --quantization awq | Error "CUDA out of memory" even with max-model-len set to 512. This is likely a vLLM bug, according to an issue reported against vLLM. |

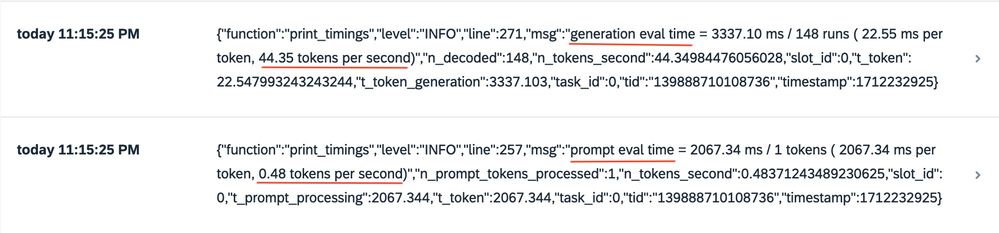

Llava on Ollama

| Model: llava:7b-v1.6 (4.7 G) | Resource Plan of SAP AI Core: infer.s |
| --- | --- |
| Ollama | Avg token generation per second: 40~50. Response time less than 10 s for the public facility issue use case with JSON output. |

Try it out

Please refer to the manual of the sample app byom-oss-llm-ai-core to try it out. The source code of this sample is released under the Apache 2.0 license. You are accountable for your own choice of commercially viable open-source LLMs/LMMs.

Explore further resources on generative AI at SAP

- Augmenting SAP BTP Use Cases with AI Foundation: A Deep Dive into the Generative AI Hub

- OpenSAP Course | Generative AI with SAP

- Generative AI Hub in SAP AI Core

- Generative AI Hub in SAP AI Launchpad

- Generative AI Roadmap

- Discovery Center | SAP AI Core and Generative AI Hub

Summary

Self-hosted open-source LLMs in SAP AI Core with BYOM complement SAP Generative AI Hub by combining the efficiency and innovation of the open-source LLM community with the enterprise-grade security and scalability of SAP AI Core, which makes open-source LLMs ready for the enterprise. This also opens the door for SAP partners and customers to adopt open-source LLMs in their business.

Disclaimer: SAP notes that posts about potential uses of generative AI and large language models are merely the individual poster's ideas and opinions, and do not represent SAP's official position or future development roadmap. SAP has no legal obligation or other commitment to pursue any course of business, or develop or release any functionality, mentioned in any post or related content on this website.
