- ECC-DB2 Migration from On-Prem to Cloud - Parallel...

srikanth_sandha

11-03-2022

2:25 PM

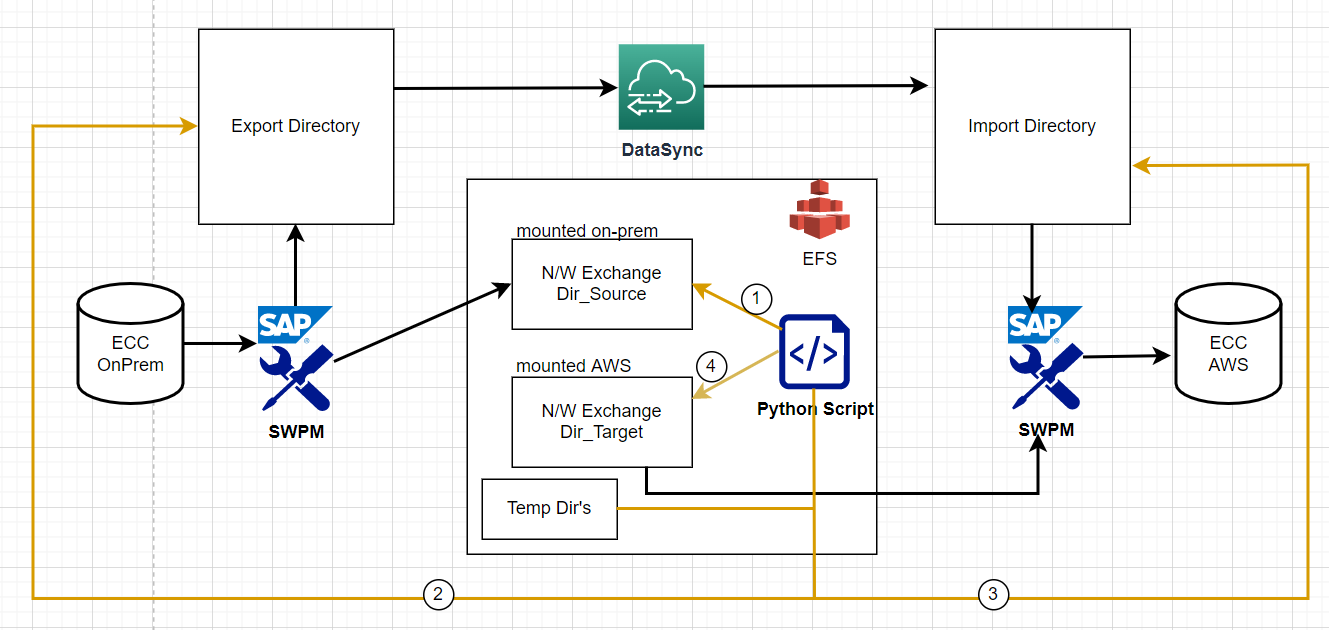

Scenario: If you need to move ECC on DB2 on AIX (on-premises) to ECC on DB2 on Linux (AWS), or perform any other heterogeneous DB2 migration to the cloud, this blog addresses the delays in transferring the export data dump to the cloud and shows how to better manage the cloud migration with a parallel export/import process, reducing downtime.

Caveat: This method helps if you cannot use the Distribution Monitor, or if you need to reduce the migration time of a DB2 database larger than 10 TB.

Challenge: During a parallel export/import, the data (flat files) generated by the export process must be available on the target cloud side in step with the signal files that the SWPM export process generates (one for every package whose export completes). Signal files are small and are copied to the cloud in a short span of time, while export dump files are large and take longer to sync with the cloud directory. Because of this latency, there will be many situations where a signal file is already available but the corresponding DB flat files are not. As soon as a signal file is available, the import process tries to import the package and fails, which costs time in restarts or requires continuous manual monitoring. Mounting NFS on either side leads to the same issues.

Solution: To reduce downtime and get a flawless import, the Python program below was used together with the AWS cloud tools DataSync and EFS to address the challenge above. This reduced downtime by 35% of the total time a sequential export/import took (specific to cloud migration).

High-level solution representation:

Step 1 - The Python script checks for new SIG files in 'N/W Exchange Dir_Source'.

Step 2 - If a new SIG file has been created, it fetches the names of all files matching the SIG file from the source 'Export DATA Directory', along with their sizes, into a file (created in Temp Dir_OnPrem).

Step 3 - Same as step 2, but run against the 'Import DATA Directory': the names and sizes are collected into a file (created in Temp Dir_AWS).

Step 4 - If both the names and the sizes match, it copies the SIG file from 'N/W Exchange Dir_Source' to 'N/W Exchange Dir_Target', where it is then picked up by the import SWPM channel.
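The size check in steps 2 to 4 can also be sketched in pure Python, without `ls`, `grep` and `awk`. This is a minimal sketch and not the script used in the migration: `list_sizes` and `package_in_sync` are hypothetical helper names, the file-name matching only approximates the script's grep patterns, and it assumes both data directories are readable from the host running it (the actual script collects the source listing over `ssh`).

```python
import os


def list_sizes(data_dir, package):
    """Map file name -> size in bytes for the dump files of one package.

    Approximates the script's grep patterns '<package>.0' and '<package>.TOC'
    with a prefix check and an exact-name check.
    """
    sizes = {}
    with os.scandir(data_dir) as entries:
        for entry in entries:
            if not entry.is_file():
                continue
            if entry.name.startswith(package + ".0") or entry.name == package + ".TOC":
                sizes[entry.name] = entry.stat().st_size
    return sizes


def package_in_sync(export_dir, import_dir, package):
    """True only if the source has dump files and the target has the same names and sizes."""
    source = list_sizes(export_dir, package)
    target = list_sizes(import_dir, package)
    # An empty source listing is treated as "not in sync" so that two empty
    # listings never release a signal file by accident.
    return bool(source) and source == target
```

A signal file would be released to the import side only when `package_in_sync(...)` returns `True` for its package. Comparing sizes is a cheap check; it does not prove the contents are identical.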

Required file systems (change them accordingly in the script):

<Export/Import DATA Path> - This should be a local file system on-premises (for faster export speeds) and EFS on AWS. The two sides can use the same naming convention or different ones.

/<EFS>/<Script location>/ - Path for the Python script.

/<EFS>/<Script location>/OnPrem/ - Directory for storing the export temp files.

/<EFS>/<Script location>/AWS/ - Directory for storing the import temp files.

/<EFS>/<SID>_Network_OnPrem - Network exchange directory for the export.

/<EFS>/<SID>_Network_AWS - Network exchange directory for the import.
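Before starting the export, it may help to confirm that these directories exist on the host that will run the script. The following is a small sketch under that assumption; `missing_directories` is a hypothetical helper, and the paths are the placeholders from the list above, which must be replaced with real values.

```python
import os


def missing_directories(paths):
    """Return the paths that are not existing directories, in input order."""
    return [p for p in paths if not os.path.isdir(p)]


if __name__ == "__main__":
    # Placeholder values taken from the list above; replace before running.
    required = [
        "/<EFS>/<Script location>/OnPrem",
        "/<EFS>/<Script location>/AWS",
        "/<EFS>/<SID>_Network_OnPrem",
        "/<EFS>/<SID>_Network_AWS",
    ]
    for path in missing_directories(required):
        print("Missing directory:", path)
```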

Required setup:

- Set up passwordless SSH from the AWS host to the on-premises host (the script runs its source-side commands through `ssh`), and execute the script from AWS, that is, from the cloud host.

- Update the source IP or hostname in the script.

- Run it with python3.

- DataSync has a default periodicity of 1 hour. Set up a schedule with a periodicity of 5 or 10 minutes for better results.

- The log file is handy for checking errors. It is generated in the script location.

- Run the program in a screen session so that it does not get terminated.

- A 15-minute delay is built into the program to ensure that DataSync finishes its verification after the copy.

Python Program:

    import datetime
    import filecmp
    import os
    import subprocess
    import time
    from filecmp import dircmp

    # Placeholders: replace <EFS>, <SID>, <Script location>, the data paths and the SSH target.
    SRC_NET = "/<EFS>/<SID>_Network_OnPrem"
    TGT_NET = "/<EFS>/<SID>_Network_AWS"
    SCRIPT_DIR = "/<EFS>/<Script location>"
    LOGFILE = SCRIPT_DIR + "/NetworkExchangelog.txt"
    AWKCMD = "awk '{print $5,$9}'"  # file size and file name from the ls -l output

    while True:
        FileLIST = []
        # Files present in the source exchange directory but not yet in the target one
        DIRCMP = dircmp(SRC_NET, TGT_NET, ignore=None, hide=None)
        f = open(LOGFILE, "a")
        now = datetime.datetime.now()
        print(now.strftime("%Y-%m-%d %H:%M:%S"), file=f)
        print(DIRCMP.left_only, file=f)
        f.flush()
        for name in DIRCMP.left_only:
            if name == "export_statistics.properties":
                # Copy the statistics file only if its third line reports no errors
                with open(SRC_NET + "/export_statistics.properties", "r") as exportfile:
                    IS_Error = exportfile.readlines()[2]
                if IS_Error[0:7] == 'error=0':
                    AWSCOPY1 = subprocess.Popen('cp -Rpf ' + SRC_NET + '/' + name + ' ' + TGT_NET + '/', shell=True, stdout=f)
                    AWSCOPY1.communicate()
            else:
                SPLITFile = name.split(".")[0]  # package name without the extension
                OnPremFile = SCRIPT_DIR + "/OnPrem/" + SPLITFile + ".log"
                AWSFile = SCRIPT_DIR + "/AWS/" + SPLITFile + ".log"
                # List sizes and names of the package's dump files on the source host.
                # The redirect runs on the source host, so the /<EFS> path must be reachable there.
                OnPremRun = subprocess.Popen(
                    ['ssh', '<userID@SOURCE_IP/HOSTNAME>',
                     'ls -ltr <Export DATA Path> | grep -e ' + SPLITFile + '.0 -e ' + SPLITFile + '.TOC |'
                     + AWKCMD + ' > ' + OnPremFile],
                    stdout=f)
                OnPremRun.communicate()
                time.sleep(5)
                # Same listing for the import data directory on AWS
                AWSRun = subprocess.Popen(
                    'ls -ltr <Import DATA Path> | grep -e ' + SPLITFile + '.0 -e ' + SPLITFile + '.TOC |'
                    + AWKCMD + ' > ' + AWSFile,
                    shell=True, stdout=f)
                AWSRun.communicate()
                time.sleep(2)
                # Compare contents (shallow=False) and never treat two empty listings as a match
                if os.path.getsize(OnPremFile) > 0 and filecmp.cmp(OnPremFile, AWSFile, shallow=False):
                    FileLIST.append(name)
                else:
                    print("File {} not Yet SYNC in AWS".format(name), file=f)
        print(datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S"), file=f)
        print("Entering In to Loop-15 min", file=f)
        f.flush()
        # Sleep time required for DataSync verification
        time.sleep(900)
        for filename in FileLIST:
            AWSCOPY = subprocess.Popen('cp -Rpf ' + SRC_NET + '/' + filename + ' ' + TGT_NET + '/', shell=True, stdout=f)
            AWSCOPY.communicate()
            print("File {} is in SYNC and moved to Target Directory".format(filename), file=f)
        f.close()
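The program reads the third line of `export_statistics.properties` and checks whether it starts with `error=0`. A slightly more tolerant variant could look the key up by name instead of by line position. This is only a sketch: `export_error_count` is a hypothetical helper, and it assumes the file contains a line of the form `error=<n>`, which is all that the check in the program implies about its format.

```python
def export_error_count(lines):
    """Return the integer value of the first 'error=<n>' line, or None if absent or malformed."""
    for line in lines:
        key, sep, value = line.strip().partition("=")
        if sep and key.strip() == "error":
            try:
                return int(value.strip())
            except ValueError:
                return None
    return None
```

With this helper the statistics file would be copied only when `export_error_count(exportfile.readlines()) == 0`, which also avoids an `IndexError` when the file has fewer than three lines.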

There may well be better solutions than this program; it would be interesting to hear about any you have come across.
