Building a Side-by-side Extension with Kyma and Mi...

christianlechne

Active Contributor

01-12-2021

7:20 AM

Idea and Scenario

Side-by-side extensions have been a topic in the SAP ecosystem for quite a while, but the playing field has changed over the last few years: new players have entered the field (e.g. a managed Kyma offering), and the combination (or should I say the embrace) of the SAP stack and non-SAP platforms (like Microsoft Azure) has also gained attention. In this post I would like to dig into one possible scenario for such an extension, focusing on the “new” players, and see where the pitfalls or issues are, be it because of combining SAP and Microsoft or due to problems in one platform or the other.

The storyline from a business perspective is the following: let us assume a customer order was placed and the article was in stock at that point in time. During the processing of the order, it becomes apparent that the article cannot be delivered in time. Of course, we could now plainly inform the customer about the delay and leave it at that, but maybe the customer was already subject to several delays in prior orders and complained about them. So it may make sense to check the order history and, if there are delayed orders, also check whether there have been complaints. Based on this analysis we can decide how to proceed and maybe give an additional discount when informing the customer about the delay.

Disclaimer: No idea if this makes perfect sense from a business perspective, but for the further implementation, let us just assume that it makes sense 😊

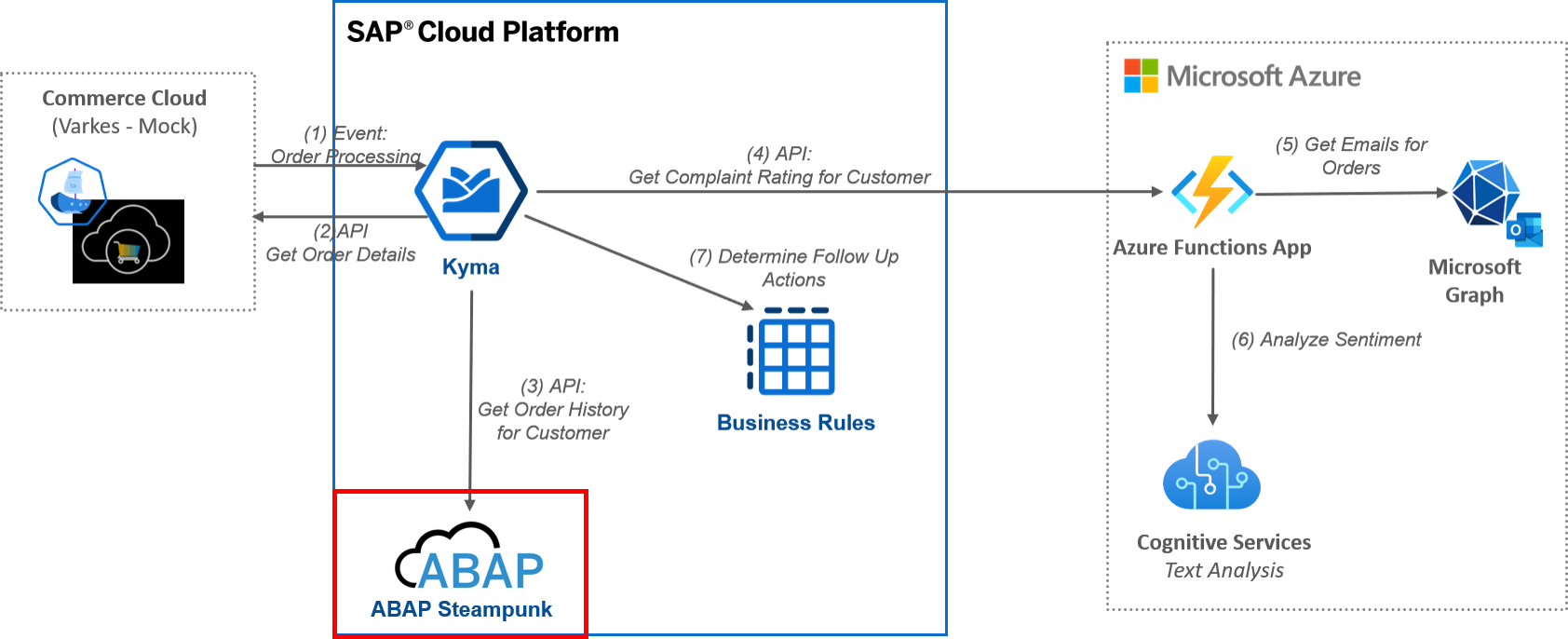

What would that look like from a system perspective?

- An SAP Commerce Cloud system raises a “processing” event stating that an order is delayed.

- This event is received by a Kyma function that then orchestrates several steps:

  - First, it calls back to Commerce Cloud to get further information about the order, i.e. the ID of the business partner, which is needed to fetch the order history.

  - Then it calls an ABAP system (e.g. Steampunk) to get the order history of the business partner.

  - If the order history contains delayed orders, the function triggers a check whether complaints connected to these orders exist. For that it calls an API that analyzes if there are complaints for the orders and returns the sentiment of the complaints. We assume that this information is stored in a Microsoft Outlook account. To keep things self-contained it makes sense to develop this building block in Azure and provide the API via an Azure Function, more precisely a Durable Function.

  - Based on the analysis, the Kyma function can call the Business Rule Service to decide upon the next steps.
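To make the sequence of steps a bit more tangible, here is a minimal control-flow sketch in JavaScript. It is not the code from the repositories: the step functions are injected, and names such as `orderCode`, `status`, `"DELAYED"` and `analyzeComplaints` are assumptions chosen for illustration. The steps are plain synchronous callbacks to keep the flow visible; the real calls would be asynchronous HTTP requests.

```javascript
// Control-flow sketch of the scenario. All step functions are injected, so the
// names and payload shapes used here are assumptions, not the real APIs.
function handleDelayedOrder(event, steps) {
  // 1. Call back to Commerce Cloud for the business partner behind the order.
  const businessPartnerId = steps.getBusinessPartnerId(event.orderCode);

  // 2. Fetch the order history of that business partner from the ABAP system.
  const history = steps.getOrderHistory(businessPartnerId);

  // 3. Only delayed orders are relevant for the complaint check.
  const delayedOrderIds = history
    .filter((order) => order.status === "DELAYED")
    .map((order) => order.orderId);

  if (delayedOrderIds.length === 0) {
    // No delays in the past, so there is nothing to analyze.
    return { businessPartnerId, delayedOrderIds, sentiment: null };
  }

  // 4. Ask the Azure side for the sentiment of complaints about those orders.
  const sentiment = steps.analyzeComplaints(delayedOrderIds);

  // 5. This result would be handed to the Business Rules service.
  return { businessPartnerId, delayedOrderIds, sentiment };
}
```

Injecting the steps keeps the orchestration independent of the concrete systems, which fits a setup where several of them are mocked.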

The overview of the solution looks like this:

In the following sections we walk through the implementation of the scenario. But first a few words to manage expectations:

- The code for the sample is available on GitHub (find the reference in each section) but it is not production ready. There are some rough edges that need to be smoothed out, but I consider it a good starting point.

- I do not have access to “real” systems like an SAP S/4HANA system or an SAP Commerce Cloud, and I assume the same is true for some readers. So, I focused on what is possible within SAP Cloud Platform Trial and for the rest the motto is “fake it until you make it”. As we are mostly dealing with APIs this restriction does not really matter. The story is different for Azure as there are only “productive” accounts with a free tier.

- The emails for the complaints are stored in my test mail account … as mentioned: fake it until you make it.

Let us start the journey … this is the way.

Step 0: Setup Kyma on SAP Cloud Platform

As Kyma is the central hub of our scenario, make sure that you have set up Kyma on your SCP trial. You find some information about that in this blog post https://blogs.sap.com/2020/10/09/kyma-runtime-available-in-trial-and-now-we-are-complete/ or in this video: https://youtu.be/uhkbbH7oS5g

Step 1: Event from the Commerce Cloud and Callback (Mockup)

Components: Varkes, Kyma

Where to find the code: https://github.com/lechnerc77/ExtensionSample-CommerceMock

The initial trigger is the SAP Commerce Cloud event. How can we achieve that without an SAP Commerce Cloud system at hand? The Kyma ecosystem helps us a lot here, as it has Varkes (https://github.com/kyma-incubator/varkes) in its portfolio. Varkes is a framework for mocking applications and providing the necessary tooling for binding the mocked applications to Kyma. Even better, SAP provides a prepopulated implementation for some SAP CX systems, including SAP Commerce Cloud, which is available on GitHub (https://github.com/SAP-samples/xf-application-mocks).

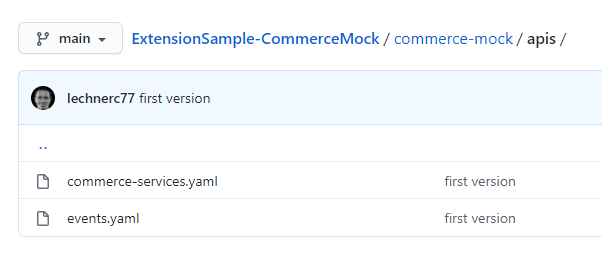

This repository is the baseline for our mockup. We make some adaptations as we do not need the full-fledged mock: we remove some files from the API folder, reducing the APIs to the commerce-services and events:

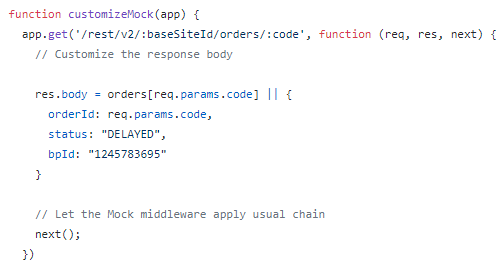

So far, we are fine for the event that triggers the scenario. Next, we must tell the mock what to return when the Kyma function calls back to get more information about the delayed order, i.e. the ID of the business partner. To achieve this, we adjust the app.js file. If you look into that file you will see a customizeMock function that allows us to define what data is returned when a specific route is matched. There we add code that returns a business partner ID.
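As a rough idea of what such a customization can look like, here is a sketch of an Express-style route handler. It is not the handler from the repository: the response fields and the ID value are made up, and the registration shown in the comment assumes that customizeMock receives the Express app.

```javascript
// Express-style handler (req, res); response fields and IDs are invented.
function orderHandler(req, res) {
  const orderCode = req.params.orderCode;
  res.status(200).json({
    code: orderCode,
    // Hypothetical field carrying the business partner ID the Kyma function needs.
    businessPartnerId: "10001234",
  });
}

// Assumption: inside customizeMock(app) the handler could be registered like
//   app.get("/<base path of the mocked API>/orders/:orderCode", orderHandler);
```

Whatever the concrete route looks like, the point is that the mock answers the callback with a fixed business partner ID, which is all the Kyma function needs for the next step.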

Now we need to bring that code into Kyma. First, we must build a Docker image. The repository contains the Dockerfile as well as a Makefile. I assume you use Docker Hub as image repository, so you must replace the Docker ID in the Makefile and then run

make push-image

After that we create a deployment in Kyma that will serve as our Commerce Cloud Mock system. The corresponding yaml files are available in the deployment folder of the repository:

- The k8s.yaml file contains the definition of the deployment (here again, replace the Docker ID in the container image with yours) as well as the definition of the service

- The apirule.yaml file contains the definition of the API rule

We deploy the app in a dedicated namespace via

kubectl -n <your namespace> apply -f k8s.yaml

and the API rules via

kubectl -n <your namespace> apply -f apirule.yaml

The deployment takes a while, so in the meantime we set up the prerequisites to allow other Kyma apps to consume the events and services of the Commerce Cloud Mock. You find the detailed procedure either in the blog post https://blogs.sap.com/2020/06/17/sap-cloud-platform-extension-factory-kyma-runtime-mock-applications... or in this walk-through video: https://youtu.be/r9mlTXHfnNM

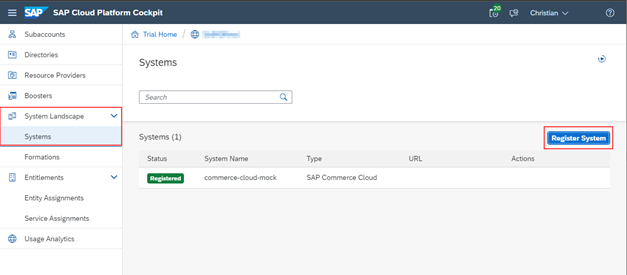

As a rough guide you must register a Commerce Cloud system in the SAP Cloud Platform:

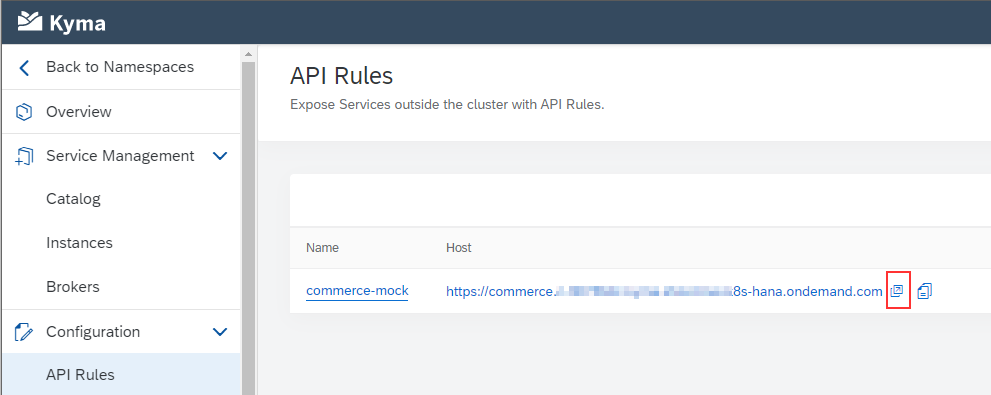

In this process you get a token. Switch back to the deployed Commerce Cloud Mock app in Kyma and access the connection overview via the link in the API rule:

Here you find a connect button in the upper right corner where you must paste your token. After that, the mock system is connected to SAP Cloud Platform.

The next step is to create a formation in the SAP Cloud Platform Cockpit:

This way we link the system together with the subaccount.

Finally, we expose the APIs and events from our mock system via the “Register All” button in the Commerce Cloud Mock dashboard:

Result: the foundation for the first two steps handled by the Kyma function is in place.

In the next step we retrieve the order history from the ABAP system.

Step 2: Retrieve Order History from Backend

Components: ABAP Steampunk (at least I tried), Kyma

Where to find the code (you will see later why there are two repos):

- https://github.com/lechnerc77/ExtensionSample-ABAPSteampunk

- https://github.com/lechnerc77/ExtensionSample-ABAPMock

We expose the order history via an OData service made available by the ABAP environment on SAP Cloud Platform aka Steampunk (at least that was my plan).

Setting up the ABAP environment on SAP Cloud Platform is easy as a so-called booster is available for that:

Triggering the booster will execute the setup and after the execution you get the service key needed for connecting to your project in Eclipse. For details on the setup see: https://developers.sap.com/tutorials/abap-environment-trial-onboarding.html

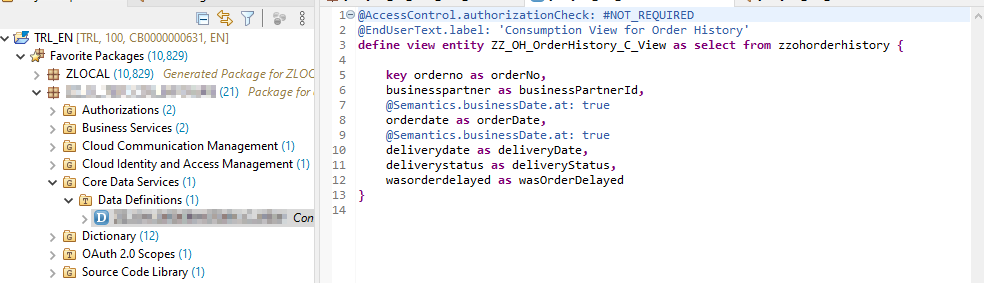

We create a simple OData service that is just needed to read the data, so no fancy behavior definition needed. Basically, we create a database table with the information, the CDS view, the corresponding service definition, and the service binding as well as the communication scenario.

This is straightforward and we end up with a simple view:

filled with some data via the interface if_oo_adt_classrun

The OData service delivers the expected results:

Next, we create the communication arrangement. For those of you who (like me) do not work too much with ABAP Steampunk on SAP Cloud Platform Trial, I have a little surprise: the creation of a communication arrangement (as described here https://developers.sap.com/tutorials/abap-environment-communication-arrangement.html) is not possible on trial. The corresponding Fiori apps are not available. This means that we cannot call our service from the outside, as we cannot fetch a token for the call in the way it is intended. (Certainly, there might be a way to somehow retrieve the token, but that is a heck of a workaround that I did not want to explore.) This is the moment where we take a deep breath (it is okay to swear – be assured I did), calm down and fake it until SAP makes it.

What do we need? An API that returns the data of an order history. What do we have? Kyma. So, we create another mock but this time for the ABAP backend. I did so by building a simple Go application that returns the data as the ABAP service did. You find the code as mentioned in the beginning on GitHub https://github.com/lechnerc77/ExtensionSample-ABAPMock

It is a very basic Go program that contains the three entries in hard-coded form and exposes an API with an endpoint called orderhistory. Super simple, just to make things work and get the ABAP impediment out of the way. The next steps are the same as for the Commerce Cloud Mock deployment:

- Build the Docker image (Dockerfile)

- Push the image to Docker Hub (Makefile)

- Create a namespace for the mock and deploy it using the deployment.yaml

- Deploy the API rule to access the API using the apirule.yaml
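The core of such a mock is tiny. The repository implements it in Go; the same idea is sketched below in JavaScript to stay in one language with the other examples in this post. The field names and values of the three entries are invented, and the handler is kept as a pure function so that it can be tried without starting a server.

```javascript
// Three hard-coded entries, mirroring the idea of the mock. Field names and
// values are invented for this sketch.
const ORDER_HISTORY = [
  { orderId: "100001", businessPartnerId: "10001234", status: "DELIVERED" },
  { orderId: "100002", businessPartnerId: "10001234", status: "DELAYED" },
  { orderId: "100003", businessPartnerId: "10001234", status: "DELAYED" },
];

// Pure request handler: maps method and path to a status code and a JSON body.
function handleRequest(method, path) {
  if (method === "GET" && path === "/orderhistory") {
    return { status: 200, body: JSON.stringify(ORDER_HISTORY) };
  }
  return { status: 404, body: JSON.stringify({ error: "not found" }) };
}
```

Wiring this into an HTTP server is left out on purpose; for the scenario it only matters that a GET on orderhistory returns the fixed list.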

In contrast to Steampunk we can put in place an OAuth flow to get the token to access the secured endpoint:

For that we set up an OAuth client in Kyma that provides the Client ID and the Client secret.
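With client ID and client secret at hand, fetching a token is a standard OAuth 2.0 client credentials request. The sketch below only builds the request (method, URL, headers, body), so it works with any HTTP client. The token URL and the scope are placeholders that you would take from your own cluster and OAuth client, and the sketch assumes that ID and secret contain no characters that need extra escaping.

```javascript
// Builds an OAuth 2.0 client-credentials token request (RFC 6749, section 4.4)
// with the client authenticating via HTTP Basic. Nothing is sent here.
function buildTokenRequest(tokenUrl, clientId, clientSecret, scope) {
  const basic = Buffer.from(`${clientId}:${clientSecret}`).toString("base64");
  const body = new URLSearchParams({ grant_type: "client_credentials" });
  if (scope) {
    body.set("scope", scope); // optional, depends on how the client was set up
  }
  return {
    method: "POST",
    url: tokenUrl,
    headers: {
      Authorization: `Basic ${basic}`,
      "Content-Type": "application/x-www-form-urlencoded",
    },
    body: body.toString(),
  };
}
```

The access token from the response is then sent as a Bearer token in the Authorization header when calling the orderhistory endpoint.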

Achievement for fetching the order history unlocked, although not in the way that was planned. As we now have the order history, i.e. the prior orders of the business partner including their status, we can tackle the next step: checking whether there are any emails connected to the orders and executing a sentiment analysis on them.

Step 3: Get Emails with potential complaints and do sentiment analysis

Components: Azure Durable Functions, Microsoft Graph, Cognitive Services

Where to find the code: https://github.com/lechnerc77/ExtensionSample-DurableFunction

We assume that the correspondence with respect to orders is collected in a central Outlook mailbox. We must search the emails that are related to an order and then trigger the sentiment analysis for them. For the first part we use Microsoft Graph (https://docs.microsoft.com/en-Us/graph/overview), which offers us a central and unified entry point to interact with the Microsoft 365 universe. For the latter we use the Text Analytics service that is part of the Cognitive Services on Azure (https://azure.microsoft.com/en-us/services/cognitive-services/text-analytics/#features). Cognitive Services are pretrained ML services covering several areas such as image recognition or, as in our case, text analytics.

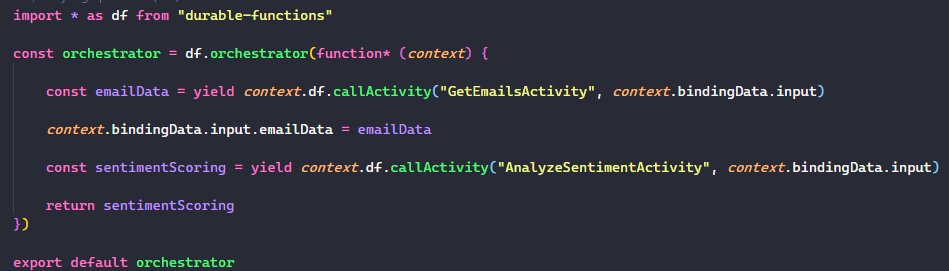

We want to offer an HTTP endpoint for the caller to hand over the order history, orchestrate the steps from above in sequence, and care as little as possible about servers, so we make use of Microsoft's Function as a Service (FaaS) offering, aka Azure Functions (https://docs.microsoft.com/en-us/azure/azure-functions/). To be more specific, we use the Durable Functions extension, which enables us to model the step sequence in code and still benefit from serverless paradigms. In other words, the extension allows us to bring some kind of stateful, workflow-like behavior to FaaS. If you want to dig deeper into that area, this blog https://blogs.sap.com/2020/02/17/a-serverless-extension-story-ii-bringing-state-to-the-stateless/ and this video https://youtu.be/pRhta19FNIg might be helpful.

Central Part: Azure Durable Function

The central part is a durable function that is triggered by an HTTP request. We secure the starter function via a function access key. This is not the best way, but okay for our proof of concept. You find further details on this topic here: https://docs.microsoft.com/en-us/azure/azure-functions/security-concepts.
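From the caller's perspective, a function access key is just an additional header (or query parameter) on the request. The following sketch builds such a request for the HTTP starter. The route `/api/orchestrators/{name}` is the default of the Durable Functions HTTP starter template and is an assumption here, as are the orchestrator name and the payload shape.

```javascript
// Builds the request that starts the orchestration; nothing is sent here.
function buildStarterRequest(baseUrl, orchestratorName, functionKey, orderIds) {
  return {
    method: "POST",
    url: `${baseUrl}/api/orchestrators/${encodeURIComponent(orchestratorName)}`,
    headers: {
      "Content-Type": "application/json",
      // Azure Functions accepts the access key in this header (or as ?code=...).
      "x-functions-key": functionKey,
    },
    // Hypothetical payload: the IDs of the delayed orders to check.
    body: JSON.stringify({ orderIds }),
  };
}
```

Sending the key as a header rather than as a query parameter keeps it out of URLs that might end up in logs.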

The durable orchestrator handles two activity functions representing the two steps that we want to execute: the first one searches the emails and the second one calls the Cognitive Services API. The only additional logic in the orchestrator is that it makes the result of the first activity function available to the second one and returns the result of the second one to the caller:

The business logic is exclusively located in the activities.
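In the JavaScript flavor of Durable Functions an orchestrator is written as a generator function that yields activity calls. The sketch below imitates that pattern in plain JavaScript without the durable-functions library: a generator describes the two steps, and a tiny driver stands in for the runtime. The activity names are hypothetical; in the real library the yielded values would come from `context.df.callActivity`.

```javascript
// Orchestrator as a generator: each `yield` describes an activity call and
// receives that activity's result when the generator is resumed.
function* orchestrator(orderIds) {
  const emails = yield { activity: "FetchEmails", input: orderIds };
  const sentiment = yield { activity: "AnalyzeSentiment", input: emails };
  return sentiment;
}

// Minimal driver standing in for the Durable Functions runtime: it executes
// the requested activity and feeds the result back into the generator.
function runOrchestration(generatorFn, input, activities) {
  const iterator = generatorFn(input);
  let step = iterator.next();
  while (!step.done) {
    const { activity, input: activityInput } = step.value;
    const result = activities[activity](activityInput);
    step = iterator.next(result);
  }
  return step.value;
}
```

The real runtime additionally persists every step so that the orchestration survives restarts; the driver above only shows how the result of the first activity flows into the second one.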

Fetching Emails via Microsoft Graph

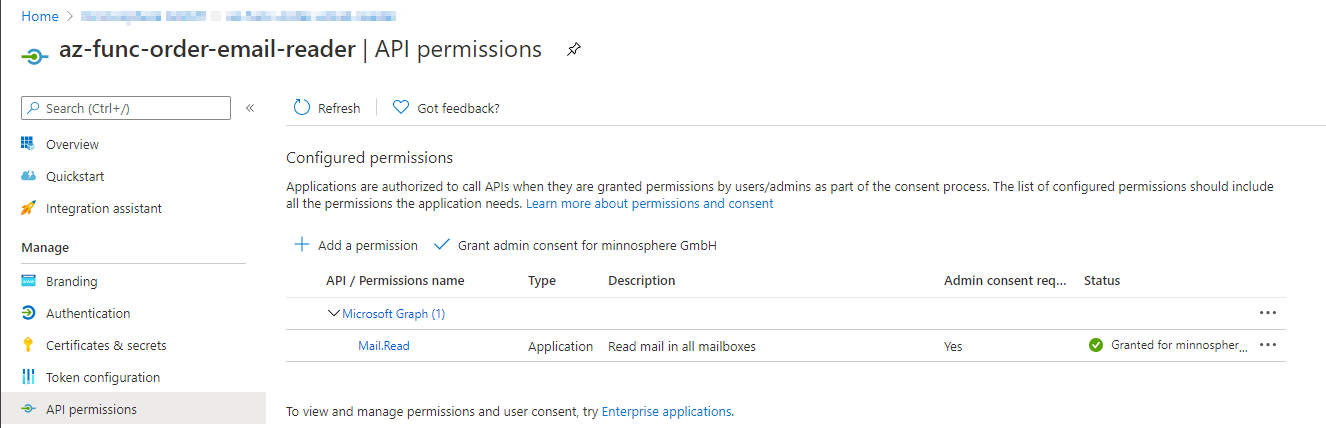

The first activity function takes the order numbers and calls the Microsoft Graph API to get all emails that contain the order numbers. To make this call possible we must allow the function to access the emails. This is done via an App registration in the Azure Active Directory that contains the permissions:

Be aware that we have no user interaction, so we need an application permission and not a delegated permission. An admin must grant consent to activate it. The app registration provides us with the necessary secrets to make the call.

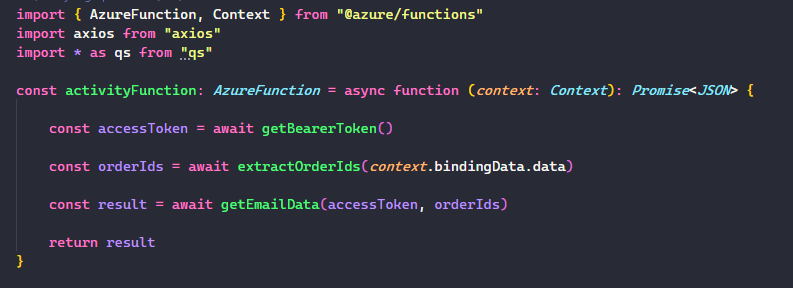

The activity then uses the OAuth Client Credentials flow to get the Bearer token from the OAuth client and read the emails.

One remark: you will see that the fetching of the Bearer token as well as the call of Microsoft Graph is done manually via Axios (https://www.npmjs.com/package/axios). There are libraries that you can use for the authentication stuff (msal.js - https://github.com/AzureAD/microsoft-authentication-library-for-js) as well as for the Microsoft Graph API (Microsoft Graph SDK - https://github.com/microsoftgraph/msgraph-sdk-javascript), but neither of them (yet) supports the silent approach via client credentials that we use. Not a big deal, but more work on our side.
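Doing the calls manually boils down to two requests: one against the token endpoint of the Microsoft identity platform and one against the Microsoft Graph messages endpoint. The sketch below only builds these requests, so any HTTP client (Axios included) can send them. Tenant ID, mailbox address and the idea of searching for the plain order number are assumptions for illustration.

```javascript
// Token request for the Microsoft identity platform (v2.0 endpoint) using the
// client-credentials grant with the Microsoft Graph default scope.
function buildGraphTokenRequest(tenantId, clientId, clientSecret) {
  const body = new URLSearchParams({
    client_id: clientId,
    client_secret: clientSecret,
    scope: "https://graph.microsoft.com/.default",
    grant_type: "client_credentials",
  });
  return {
    method: "POST",
    url: `https://login.microsoftonline.com/${tenantId}/oauth2/v2.0/token`,
    headers: { "Content-Type": "application/x-www-form-urlencoded" },
    body: body.toString(),
  };
}

// Mail search for one order number. With application permissions there is no
// signed-in user, so the mailbox has to be addressed explicitly.
function buildMailSearchRequest(accessToken, mailbox, orderId) {
  const search = encodeURIComponent(`"${orderId}"`);
  return {
    method: "GET",
    url: `https://graph.microsoft.com/v1.0/users/${encodeURIComponent(mailbox)}/messages?$search=${search}`,
    headers: { Authorization: `Bearer ${accessToken}` },
  };
}
```

The activity would run the search once per order number and collect the message bodies for the sentiment analysis.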

Sentiment Analysis via Cognitive Services

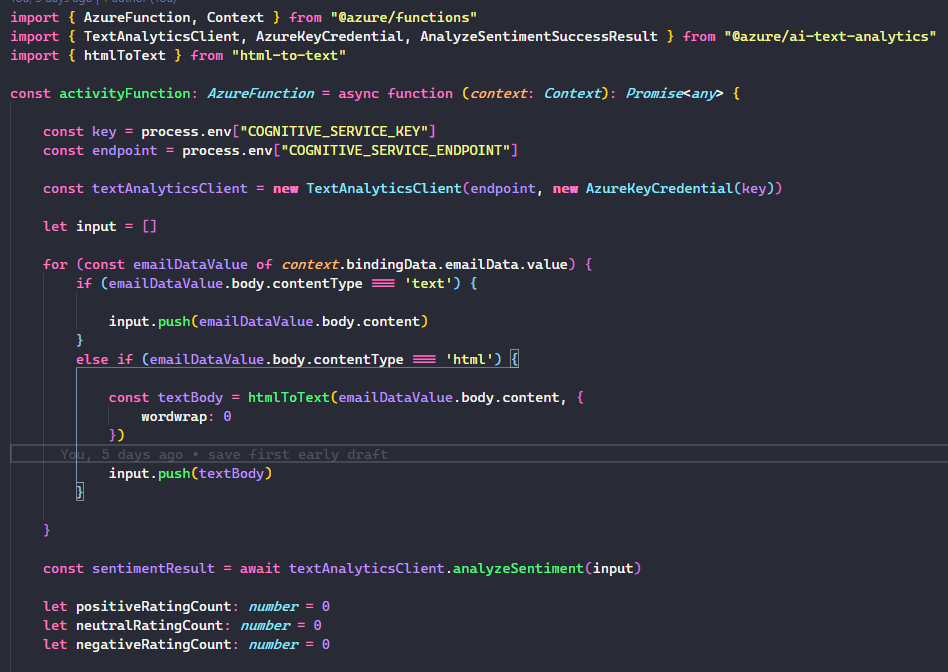

The second activity takes the emails and calls the Text Analytics service to do the sentiment analysis. For that we create a Cognitive Services resource in the Azure Portal, which also provides us with the keys for accessing the HTTP endpoints.

The activity itself basically calls the endpoint and hands over the email. We then extract the number of positive, neutral, and negative sentiments from the result and hand it back to the orchestrator. This time we are luckier than in the first activity, as we can make use of the Text Analytics client provided by Microsoft. The code is part of the repository linked above.
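The counting step can be sketched independently of the service call. The function below is not the code from the repository; it assumes that each result carries a document-level `sentiment` label ("positive", "neutral", "negative" or "mixed") or an `error` entry, and it simply ignores anything that is not one of the three labels we are interested in.

```javascript
// Counts document-level sentiment labels of analyzed emails.
function countSentiments(results) {
  const counts = { positive: 0, neutral: 0, negative: 0 };
  for (const result of results) {
    if (result.error) {
      continue; // skip documents the service could not analyze
    }
    if (Object.prototype.hasOwnProperty.call(counts, result.sentiment)) {
      counts[result.sentiment] += 1;
    }
  }
  return counts;
}
```

How "mixed" results should be treated is a design decision; here they are left out of the counts.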

Last, we deploy the Durable Function to Azure using the Azure Functions Extension for VS Code (https://marketplace.visualstudio.com/items?itemName=ms-azuretools.vscode-azurefunctions). That was it, no drama, no unexpected impediments on the Microsoft Azure side of the house.

Now time to glue things together in a Kyma function.

Step 4: Gluing the things together in Kyma

Components: Kyma Function

Where to find the code: https://github.com/lechnerc77/ExtensionSample-KymaFunction

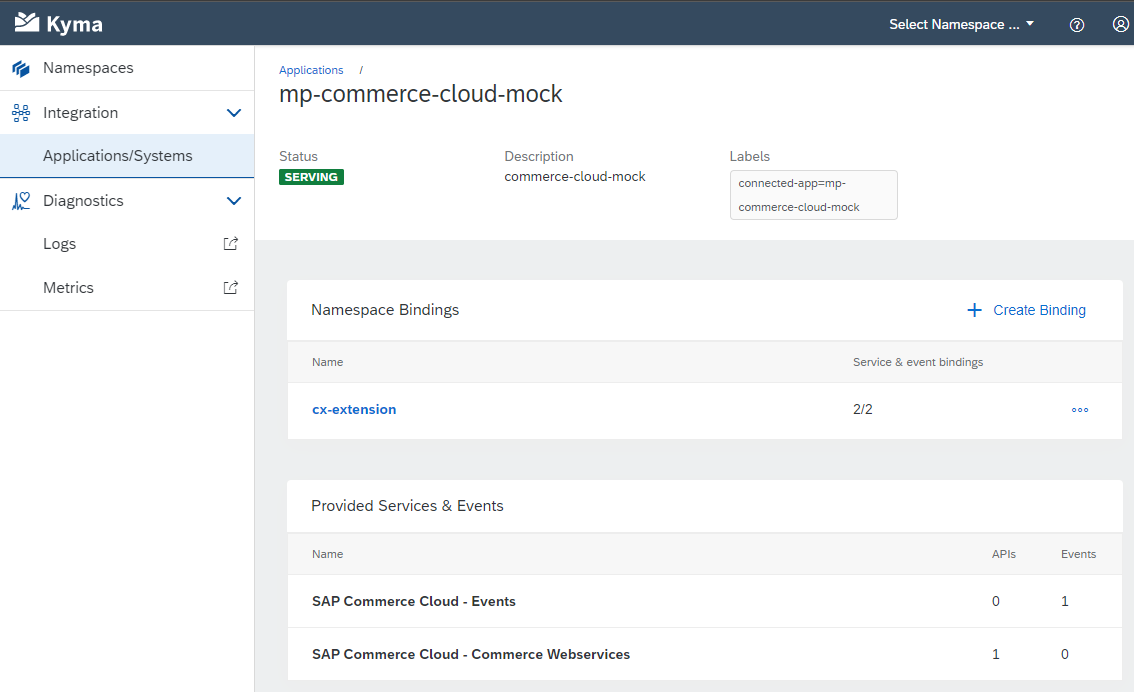

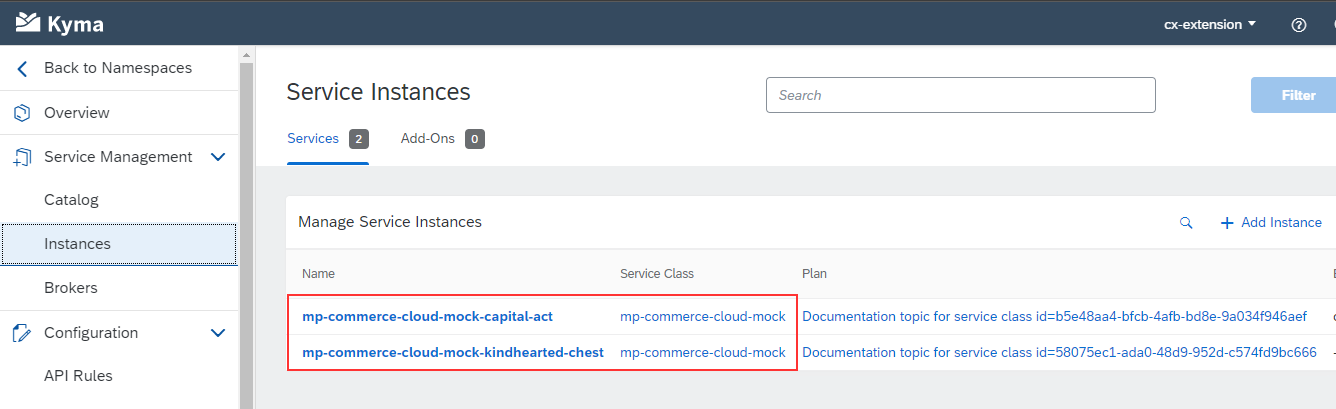

We create a dedicated namespace for this function, e.g. cx-extension. To get a connection to the Commerce Cloud Mock, we create a namespace binding to our namespace in the integration section of the Kyma dashboard:

Due to this binding, we can create service instances of the Commerce Cloud events as well as for the Commerce Cloud APIs in our namespace that we later consume in the Kyma function:

You find a decent description of the procedure in this blog post https://blogs.sap.com/2020/06/17/sap-cloud-platform-extension-factory-kyma-runtime-commerce-mock-eve... or a walk-through in this video https://youtu.be/r9mlTXHfnNM

Now we can create the function … or can we? Let us recap what the function should do:

- It is triggered by an event – this is a configuration in the function UI – check

- It calls the Commerce Cloud Mock API via the service instance that we bind to the function to get the business partner ID – that is a configuration in the function UI – check

- It calls the ABAP backend (mock) to get the order history – this is an external API and we need to get the OAuth token first. We must store the corresponding HTTP endpoints in the environment variables in the function UI and we must store the client ID and client secret there too … no we do not want to do that, that is what Secrets in Kubernetes are for. But we cannot reference them in the UI – investigation needed

- It calls the Azure Function to get the sentiment analysis results. Same story here, we need the API key and we want to store that safely – investigation needed

The good news at this point is: we can use secrets and config maps in Kyma Functions. The bad news is: we cannot do that via the UI.

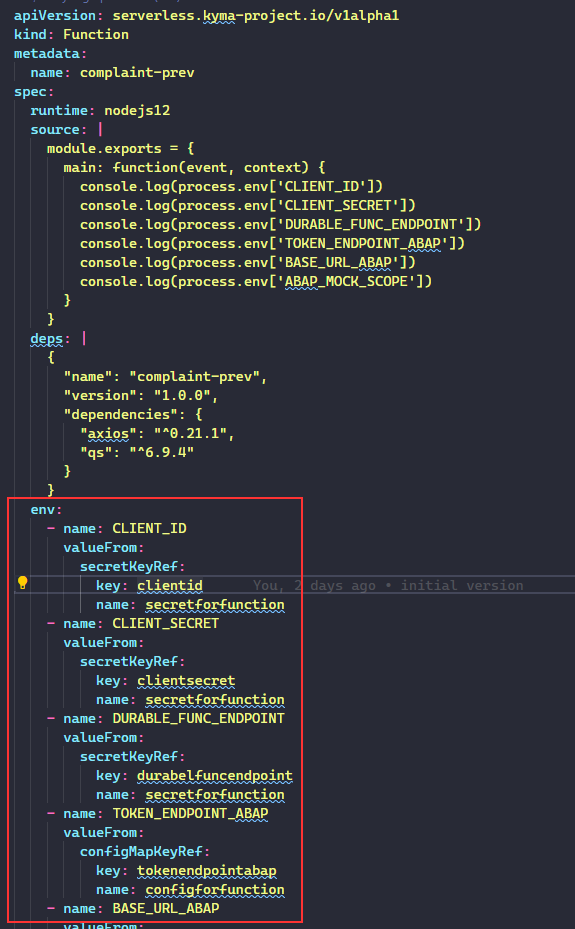

We create a config map containing all the relevant configuration information and a secret for all the confidential information and deploy it into the namespace of the function. Now we need to reference these variables in the function. How can we do that without the UI? The answer is via a deployment.yaml file for the function. We can reference the environment variables there as for a “regular” deployment:

The only downside is that we will not see them in the function UI after deployment. Using kubectl describe reveals which environment variables are really part of your function.
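Since the variables are invisible in the UI, it helps if the function itself complains loudly when something is missing. The following sketch reads the configuration from an environment object and fails fast. The variable names are placeholders; use whatever keys your config map and secret actually define.

```javascript
// Placeholder names; the real keys come from the config map and the secret.
const REQUIRED_VARIABLES = [
  "ORDER_HISTORY_URL", // from the config map
  "TOKEN_URL", // from the config map
  "CLIENT_ID", // from the secret
  "CLIENT_SECRET", // from the secret
  "AZURE_FUNCTION_KEY", // from the secret
];

// Returns an object with all required settings or throws if any is missing.
function loadConfig(env) {
  const missing = REQUIRED_VARIABLES.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return Object.fromEntries(REQUIRED_VARIABLES.map((name) => [name, env[name]]));
}
```

Inside the function this would be called once with `process.env`, so a misconfigured deployment shows up in the logs right away instead of failing somewhere in the middle of the flow.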

You can also put the complete function code in the deployment file, but my plan was to deploy a basic skeleton and adapt the code in the Kyma UI afterwards. This plan changed, as there seems to be a bug in the building/deployment of the function (see https://github.com/kyma-project/kyma/issues/10303) which screws up the environment variables. So we must put the complete code into the yaml file. After successful deployment, the function should be in status “Running”:

Next, we wire up the function with the Commerce Cloud Mock event and the API using the service instances:

One further hint I stumbled across: there seems to be a limitation within the Kyma cluster on trial with respect to available resources (see https://answers.sap.com/questions/13222456/kyma-build-of-function-run-infinitely.html ). If your function gets stuck in the status “Build” you might have too many resources allocated in your Kyma trial. To resolve it, remove some resources.

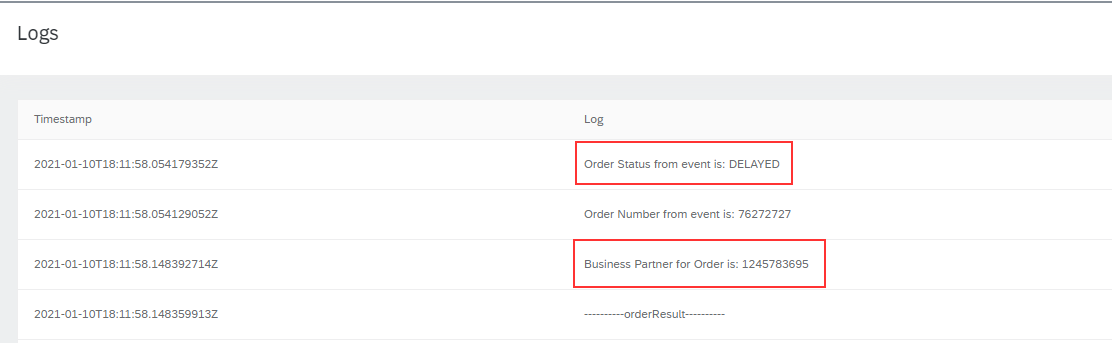

Now we should be done. Time to try things out. Let us trigger the function via an event from the Commerce Cloud Mock dashboard and see what the log tells us:

Our Kyma function gets triggered by the event:

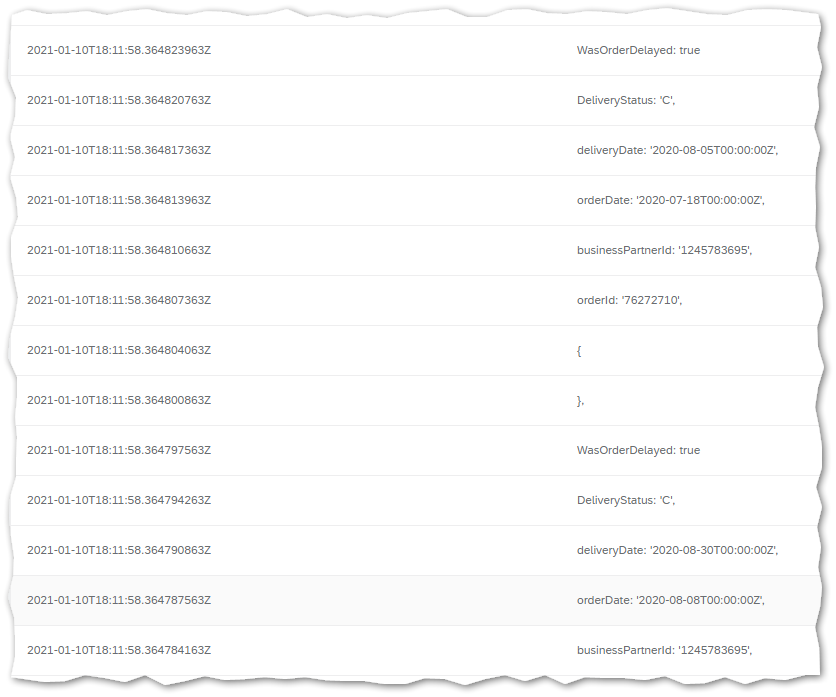

We get the order history from our mocked ABAP service:

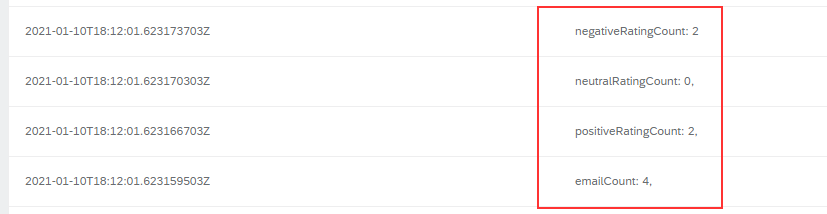

We get the result from our sentiment analysis:

So, things finally worked out!

But wait: we wanted to do something with the Business Rule Service on SAP Cloud Platform, right?

What about the decision service … well I tried

UPDATE: Thanks to archana.shukla and team (see comments) the issues with the booster could be sorted out on my account, delivering the expected experience from the ABAP Steampunk booster. In addition the Business Rule Service setup changed with respect to the app router (see comment below and updated help.sap.com section).

So expect some more to come in that area, am I right martin-pankraz 🙂

Ideally, the follow-up actions after the sentiment analysis are determined via a decision service or business rules. SAP Cloud Platform offers such a service, namely the Business Rules service. Even better, there is a booster available for setting things up, comprising the Workflow and the Business Rules services:

As the booster for ABAP went through quite smoothly, I gave it a go and what came back is:

Next stop: developers.sap.com to see if there is some tutorial to follow along and yes there is (https://developers.sap.com/tutorials/cp-starter-ibpm-employeeonboarding-1-setup.html) but this tutorial also uses the booster ☹.

Next try: help.sap.com (https://help.sap.com/viewer/0e4dd38c4e204f47b1ffd09e5684537b/Cloud/en-US/c045b537db3c4743a5e7d21d798...) ☹

Attention: these sections might be misleading as they are relevant for customers with a Portal service only (kind of legacy, so to say). So when setting up your Business Rules as of now in the Cloud Foundry area, just follow the description in the "Initial Setup" section of help.sap.com, ignoring the Portal-related topics.

So for the moment my journey with respect to business rules stopped at this point, but it will be continued.

Summary

Summarizing the journey of a side-by-side extension with Kyma and Microsoft Azure, I would say that building side-by-side extensions with these building blocks is feasible. From the SAP side of the house there is quite some value in Kyma. Nevertheless, you cannot achieve everything in Kyma, so taking the best of different worlds, especially when bringing in Microsoft Azure (I am biased, okay 😉), can give rise to really cool new extensions and add a lot of value to the business processes for internal and external customers.

Looking more closely at the technical platforms, my take-aways are:

- Kyma is the most promising environment on SAP Cloud Platform when it comes to building extensions (I leave the commercials aside). The integration with the SAP ecosystem is smooth, but still a bit limited. In addition, there are some pitfalls that I did not expect. They show that the maturity of the platform needs to be further improved, but from my perspective the foundation to do so is there. Another important highlight is the documentation on https://kyma-project.io/ and the samples available on GitHub, which support development a lot.

- Microsoft Azure complements the development with numerous beneficial offerings and a very pleasant development experience. Everything worked as expected. Some convenience features would be nice though. Overall, mixing Microsoft Azure into side-by-side extensions for SAP is a very valid option that you should consider when designing extensions. Documentation … let us put it this way: compared to SAP there are many, many more developers using the platform, so the sources of information are great and so is the official help.

- The SAP Cloud Platform trial has limitations that you need to keep in mind. It depends on the use case whether the offering is sufficient to build real-world proofs of concept or just to get your hands dirty with some services (including a low entry barrier).

One thing at the very end: special thanks to

- the Kyma team, especially marco.dorn, jamie.cawley and gabbi, who supported me in the SAP Community and behind the scenes. Highly appreciated!

- martin.pankraz for triggering this project and giving feedback on the story


7 Comments

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

Labels in this area

-

"automatische backups"

1 -

"regelmäßige sicherung"

1 -

"TypeScript" "Development" "FeedBack"

1 -

505 Technology Updates 53

1 -

ABAP

18 -

ABAP API

1 -

ABAP CDS Views

4 -

ABAP CDS Views - BW Extraction

1 -

ABAP CDS Views - CDC (Change Data Capture)

1 -

ABAP class

2 -

ABAP Cloud

3 -

ABAP DDIC CDS view

1 -

ABAP Development

5 -

ABAP in Eclipse

3 -

ABAP Platform Trial

1 -

ABAP Programming

2 -

abap technical

1 -

abapGit

1 -

absl

2 -

access data from SAP Datasphere directly from Snowflake

1 -

Access data from SAP datasphere to Qliksense

1 -

Accrual

1 -

action

1 -

adapter modules

1 -

Addon

1 -

Adobe Document Services

1 -

ADS

1 -

ADS Config

1 -

ADS with ABAP

1 -

ADS with Java

1 -

ADT

2 -

Advance Shipping and Receiving

1 -

Advanced Event Mesh

3 -

Advanced formula

1 -

AEM

1 -

AI

8 -

AI Launchpad

1 -

AI Projects

1 -

AIML

10 -

Alert in Sap analytical cloud

1 -

Amazon S3

1 -

Analytic Models

1 -

Analytical Dataset

1 -

Analytical Model

1 -

Analytics

1 -

Analyze Workload Data

1 -

annotations

1 -

API

1 -

API and Integration

4 -

API Call

2 -

API security

1 -

Application Architecture

1 -

Application Development

5 -

Application Development for SAP HANA Cloud

3 -

Applications and Business Processes (AP)

1 -

Artificial Intelligence

1 -

Artificial Intelligence (AI)

5 -

Artificial Intelligence (AI) 1 Business Trends 363 Business Trends 8 Digital Transformation with Cloud ERP (DT) 1 Event Information 462 Event Information 15 Expert Insights 114 Expert Insights 76 Life at SAP 418 Life at SAP 1 Product Updates 4

1 -

Artificial Intelligence (AI) blockchain Data & Analytics

1 -

Artificial Intelligence (AI) blockchain Data & Analytics Intelligent Enterprise

1 -

Artificial Intelligence (AI) blockchain Data & Analytics Intelligent Enterprise Oil Gas IoT Exploration Production

1 -

Artificial Intelligence (AI) blockchain Data & Analytics Intelligent Enterprise sustainability responsibility esg social compliance cybersecurity risk

1 -

AS Java

1 -

ASE

1 -

ASR

2 -

Associations in CDS Views

1 -

ASUG

1 -

Attachments

1 -

Authentication

1 -

Authorisations

1 -

Automating Processes

1 -

Automation

2 -

aws

2 -

Azure

2 -

Azure AI Studio

1 -

Azure API Center

1 -

Azure API Management

1 -

B2B Integration

1 -

Background job

1 -

Backorder Processing

1 -

Backpropagation

1 -

Backup

1 -

Backup and Recovery

1 -

Backup schedule

1 -

BADI_MATERIAL_CHECK error message

1 -

Bank

1 -

Bank Communication Management

1 -

BAS

1 -

basis

2 -

Basis Monitoring & Tcodes with Key notes

2 -

Batch Management

1 -

BDC

1 -

Best Practice

1 -

BI

1 -

bitcoin

1 -

Blockchain

3 -

bodl

1 -

BOP in aATP

1 -

BOP Segments

1 -

BOP Strategies

1 -

BOP Variant

1 -

BPC

1 -

BPC LIVE

1 -

BTP

14 -

BTP AI Launchpad

1 -

BTP Destination

2 -

Business AI

1 -

Business and IT Integration

1 -

Business application stu

1 -

Business Application Studio

1 -

Business Architecture

1 -

Business Communication Services

1 -

Business Continuity

2 -

Business Data Fabric

3 -

Business Fabric

1 -

Business Partner

13 -

Business Partner Master Data

11 -

Business Technology Platform

2 -

Business Trends

4 -

BW4HANA

1 -

CA

1 -

calculation view

1 -

CAP

4 -

Capgemini

1 -

CAPM

1 -

Catalyst for Efficiency: Revolutionizing SAP Integration Suite with Artificial Intelligence (AI) and

1 -

CCMS

2 -

CDQ

13 -

CDS

2 -

CDS Views

1 -

Cental Finance

1 -

Certificates

1 -

CFL

1 -

Change Management

1 -

chatbot

1 -

chatgpt

3 -

CICD

1 -

CL_SALV_TABLE

2 -

Class Runner

1 -

Classrunner

1 -

Cloud ALM Monitoring

1 -

Cloud ALM Operations

1 -

cloud connector

1 -

Cloud Extensibility

1 -

Cloud Foundry

4 -

Cloud Integration

6 -

Cloud Platform Integration

2 -

cloudalm

1 -

communication

1 -

Compensation Information Management

1 -

Compensation Management

1 -

Compliance

1 -

Compound Employee API

1 -

Configuration

1 -

Connectors

1 -

Consolidation

1 -

Consolidation Extension for SAP Analytics Cloud

3 -

Control Indicators.

1 -

Controller-Service-Repository pattern

1 -

Conversion

1 -

Cosine similarity

1 -

CPI

1 -

cryptocurrency

1 -

CSI

1 -

ctms

1 -

Custom chatbot

3 -

Custom Destination Service

1 -

custom fields

1 -

Custom Headers

1 -

Customer Experience

1 -

Customer Journey

1 -

Customizing

1 -

cyber security

4 -

cybersecurity

1 -

Data

1 -

Data & Analytics

1 -

Data Aging

1 -

Data Analytics

2 -

Data and Analytics (DA)

1 -

Data Archiving

1 -

Data Back-up

1 -

Data Flow

1 -

Data Governance

5 -

Data Integration

2 -

Data Quality

13 -

Data Quality Management

13 -

Data Synchronization

1 -

data transfer

1 -

Data Unleashed

1 -

Data Value

9 -

Database and Data Management

1 -

database tables

1 -

Databricks

1 -

Dataframe

1 -

Datasphere

3 -

datenbanksicherung

1 -

dba cockpit

1 -

dbacockpit

1 -

Debugging

2 -

Defender

1 -

Delimiting Pay Components

1 -

Delta Integrations

1 -

Destination

3 -

Destination Service

1 -

Developer extensibility

1 -

Developing with SAP Integration Suite

1 -

Devops

1 -

digital transformation

1 -

Disaster Recovery

1 -

Documentation

1 -



