- Enhanced Data Analysis of Fitness Data using HANA ...

This post extends my earlier work with fitness-related datasets, using some capabilities that arrived in 2024. I will not go into detail on how I load the data into Datasphere, as I covered that previously in a Travel Sustainability blog.

Digging further into the data with some newer technologies and datasets, I want to focus on three areas:

- Finding Similar Activities using the HANA Vector Engine

- Using External Datasets to enrich data, and in my case find areas of concern using HANA Spatial Engine (not new but always good to use newer datasets).

- Restricting data between users using SAP Data Access Controls.

Additional SAP Analytics Cloud examples will also be demoed in a webinar that will be linked to this blog.

In a bit more detail:

1. Finding Similar Activities using the HANA Vector Engine

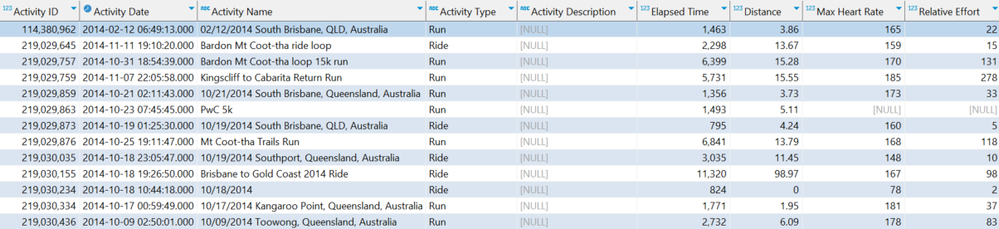

Using a dataset of about 2000 different activities, the goal was to find similar efforts, irrespective of location. Here is a sample of a few records to get an idea of the dataset:

Using the columns Elapsed Time, Distance, Relative Effort and Elevation Gain, I created vectors in HANA Cloud based on these values:

    ALTER TABLE LOCAL_ACTIVITIES ADD (EMBEDDING REAL_VECTOR);

    -- Build a four-dimensional vector from the numeric activity columns.
    UPDATE LOCAL_ACTIVITIES
    SET EMBEDDING = TO_REAL_VECTOR('['
        || TO_VARCHAR("Elapsed Time") || ','
        || TO_VARCHAR("Distance") || ','
        || TO_VARCHAR("Relative Effort") || ','
        || TO_VARCHAR("Elevation Gain") || ']')
    -- Skip rows where any of the four inputs is missing.
    WHERE "Elapsed Time" IS NOT NULL
      AND "Distance" IS NOT NULL
      AND "Relative Effort" IS NOT NULL
      AND "Elevation Gain" IS NOT NULL;

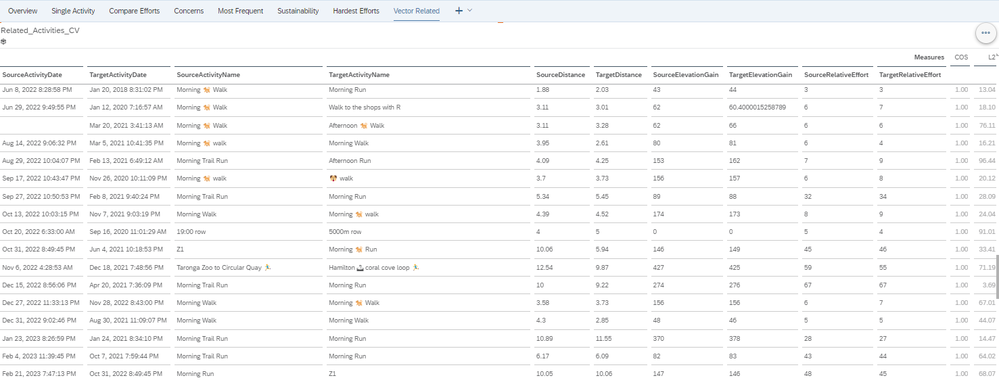
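To make the string handling in the UPDATE statement easier to follow, here is a minimal Python sketch of the same idea: the four numeric values are concatenated into a `[v1,v2,v3,v4]` text literal, which is the form the SQL above passes to `TO_REAL_VECTOR`. The function name `to_vector_literal` and the sample values are hypothetical and only for illustration; this is not how HANA builds the vector internally.

```python
from typing import Optional, Sequence


def to_vector_literal(values: Sequence[Optional[float]]) -> Optional[str]:
    """Return a '[v1,v2,...]' text literal, or None if any value is missing.

    Returning None for incomplete rows mirrors the IS NOT NULL filter
    in the SQL UPDATE: such rows simply get no embedding.
    """
    if any(v is None for v in values):
        return None
    return "[" + ",".join(str(v) for v in values) + "]"


# Hypothetical activity: elapsed time, distance, relative effort, elevation gain.
complete = to_vector_literal([3600, 10.2, 45, 120])
incomplete = to_vector_literal([3600, 10.2, None, 120])

print(complete)    # [3600,10.2,45,120]
print(incomplete)  # None
```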

I then created a view on top of this table, which can be consumed as a view or SQL query in Datasphere:

    CREATE VIEW RELATED_ACTIVITIES_VECTOR_V AS
    SELECT
        A."Activity Date"   AS "SourceActivityDate",
        B."Activity Date"   AS "TargetActivityDate",
        A."Activity Name"   AS "SourceActivityName",
        B."Activity Name"   AS "TargetActivityName",
        A."Distance"        AS "SourceDistance",
        B."Distance"        AS "TargetDistance",
        A."Relative Effort" AS "SourceRelativeEffort",
        B."Relative Effort" AS "TargetRelativeEffort",
        A."Elevation Gain"  AS "SourceElevationGain",
        B."Elevation Gain"  AS "TargetElevationGain",
        COSINE_SIMILARITY(A.EMBEDDING, B.EMBEDDING) AS COS,
        L2DISTANCE(A.EMBEDDING, B.EMBEDDING)        AS L2
    FROM LOCAL_ACTIVITIES A, LOCAL_ACTIVITIES B
    -- A > B compares each pair of activities once and skips self-matches.
    WHERE A."Activity ID" > B."Activity ID"
      AND COSINE_SIMILARITY(A.EMBEDDING, B.EMBEDDING) > .999999
      AND L2DISTANCE(A.EMBEDDING, B.EMBEDDING) < 100;

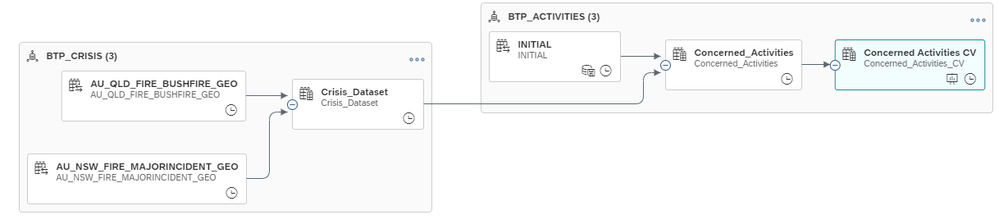
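The view filters on both cosine similarity and L2 distance. A small Python sketch may help show why the two measures complement each other: cosine similarity only compares direction, so an activity that is exactly twice as long, far and hard as another still scores close to 1, while the L2 distance between them is large. The sample vectors and the `is_similar` helper are hypothetical; the thresholds are the ones used in the view.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def l2_distance(a, b):
    """Euclidean (straight-line) distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def is_similar(a, b, min_cos=0.999999, max_l2=100.0):
    """Apply both thresholds, as the WHERE clause of the view does."""
    return cosine_similarity(a, b) > min_cos and l2_distance(a, b) < max_l2


# Hypothetical vectors: elapsed time, distance, relative effort, elevation gain.
short_run = [1800.0, 5.0, 20.0, 50.0]
long_run = [3600.0, 10.0, 40.0, 100.0]      # exactly double the short run
similar_run = [1810.0, 5.05, 20.0, 51.0]    # nearly the same as the short run

print(is_similar(short_run, long_run))      # same direction, but too far apart
print(is_similar(short_run, similar_run))   # close in both direction and size
```

With only the cosine filter, the short and long runs would be reported as a match; the L2 filter is what keeps the results to efforts of a similar size.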

I then made the query available in Datasphere and presented the results in SAP Analytics Cloud:

2. Using External Datasets to enrich data, and in my case find areas of concern using HANA Spatial Engine.

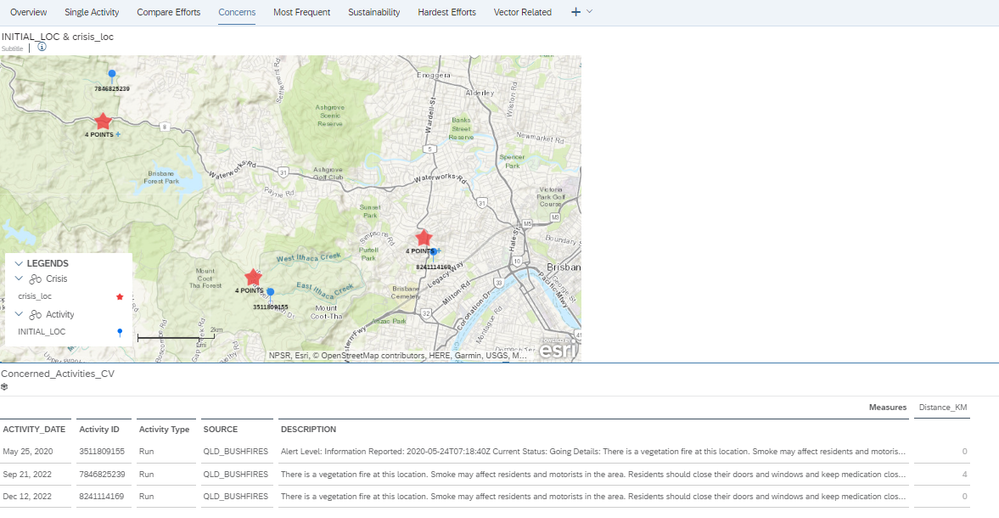

In a separate Datasphere space, we have a range of Global Crisis Datasets. For my fitness data, I thought the simplest use would be to determine whether I ran near any fires during a run. Yes, this is hindsight, but either way it is an interesting use case. We could easily build a BTP Build App that warns the user ahead of time, but I'll leave that for someone else in my team to build.

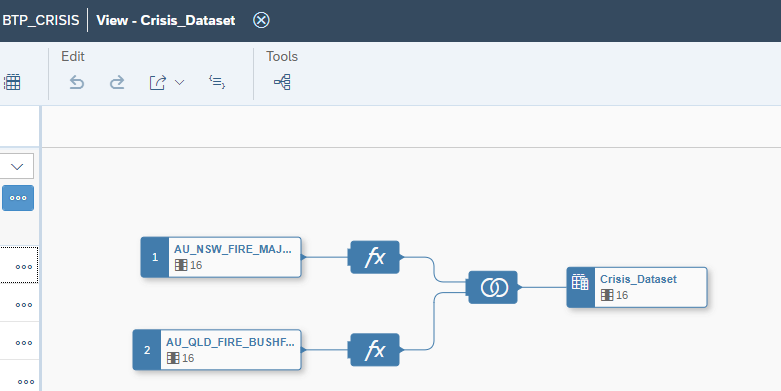

So I picked the Queensland and New South Wales fire data and combined them:

With a relatively simple query, I can perform geospatial analysis based on location and time:

    SELECT DISTINCT
        "L_ROW", "Activity ID", "ACTIVITY_DATE", "Activity Type", "SOURCE", "DESCRIPTION",
        TO_INTEGER("GEO".ST_TRANSFORM(3857).ST_Distance("ST_POINT_3857", 'kilometer')) AS "Distance_KM",
        "A"."LATITUDE"  AS "ACTIVITY_LATITUDE",
        "A"."LONGITUDE" AS "ACTIVITY_LONGITUDE",
        "C"."LATITUDE"  AS "CRISIS_LATITUDE",
        "C"."LONGITUDE" AS "CRISIS_LONGITUDE"
    FROM "INITIAL" "A", "BTP_CRISIS.Crisis_Dataset" "C"
    WHERE "GEO".ST_TRANSFORM(3857).ST_Distance("ST_POINT_3857", 'kilometer') < 2
      AND "ACTIVITY_DATE" BETWEEN ADD_DAYS("PUBLISHED", -1) AND ADD_DAYS("PUBLISHED", 1)

This query finds all fire warnings that were published within one day of the activity and within 2 km of the start point of my run. I could also have made these values parameters, so that the user can choose the time window and the distance dynamically, or based the query on any point along the run rather than just the start. Maybe in a V2.
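As a rough illustration of the parameterised version mentioned above, here is a minimal Python sketch of the same filter with the distance and time window as arguments. Note that it swaps in the haversine great-circle formula for the distance, whereas the SQL query uses `ST_Distance` on geometries transformed to SRID 3857, so the two will not give identical numbers. The dictionary keys, the function names and the sample coordinates and dates are all hypothetical.

```python
import math
from datetime import datetime, timedelta

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, an approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two lat/lon points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_near_crisis(activity, crisis, max_km=2.0, max_days=1):
    """True if the crisis was published within max_days of the activity
    and lies within max_km of the activity's start point."""
    distance = haversine_km(activity["lat"], activity["lon"], crisis["lat"], crisis["lon"])
    window = timedelta(days=max_days)
    in_window = crisis["published"] - window <= activity["date"] <= crisis["published"] + window
    return distance < max_km and in_window


# Hypothetical sample records.
run = {"lat": -27.47, "lon": 153.03, "date": datetime(2023, 10, 1, 8, 0)}
near_fire = {"lat": -27.48, "lon": 153.03, "published": datetime(2023, 10, 1, 15, 0)}
far_fire = {"lat": -27.57, "lon": 153.03, "published": datetime(2023, 10, 1, 15, 0)}

print(is_near_crisis(run, near_fire))             # roughly 1 km away, same day
print(is_near_crisis(run, far_fire))              # roughly 11 km away
print(is_near_crisis(run, far_fire, max_km=15))   # wider radius chosen by the user
```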

Once I enable this data for viewing in SAC, I can quickly get the output:

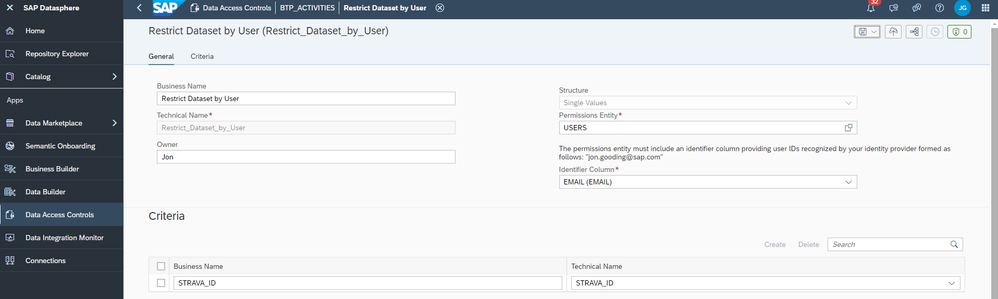

3. Restricting data between users using SAP Data Access Controls.

The data I am loading into the SAP Datasphere instance comes from multiple users, and there is a requirement to keep it isolated between them. So, to ensure data privacy, I use the Datasphere Data Access Controls.

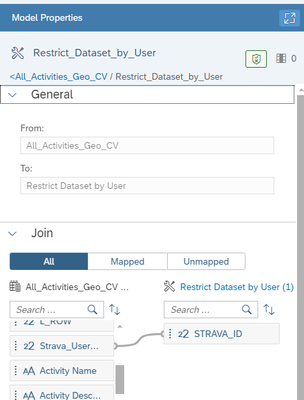

Each dataset is unique to a user's Strava Id, so we can simply apply Data Access Controls to limit users to their own data. For this, a reference table or view is needed, and we then create a Data Access Control over it based on the requirements:

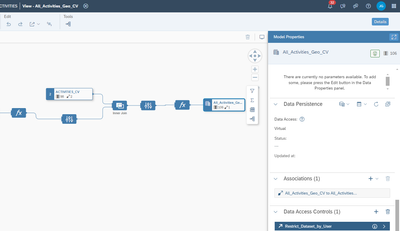
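Data Access Controls are configured in Datasphere rather than written as code, but the effect can be pictured as a row-level filter driven by the reference table. The Python sketch below is only a conceptual illustration under that assumption: the `visible_rows` function, the field names `user` and `strava_id`, and the sample records are all hypothetical.

```python
def visible_rows(rows, permissions, current_user):
    """Return only the rows whose Strava Id is mapped to current_user
    in the permissions (reference) table."""
    allowed_ids = {p["strava_id"] for p in permissions if p["user"] == current_user}
    return [row for row in rows if row["strava_id"] in allowed_ids]


# Hypothetical reference table: which user may see which Strava Id.
permissions = [
    {"user": "user_a@example.com", "strava_id": 1001},
    {"user": "user_b@example.com", "strava_id": 2002},
]

# Hypothetical activity rows from two different athletes.
activities = [
    {"strava_id": 1001, "name": "Morning Run"},
    {"strava_id": 2002, "name": "Evening Ride"},
    {"strava_id": 1001, "name": "Lunch Walk"},
]

for row in visible_rows(activities, permissions, "user_a@example.com"):
    print(row["name"])  # only activities with Strava Id 1001
```

A user with no entry in the reference table sees no rows at all in this sketch, which matches the intent of keeping each user's data isolated.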

Applying the Data Access Controls to the appropriate Views:

Now we have a platform that lets individuals perform rich data analysis on their own datasets, using the modern tools that suit them. Examples so far include Jupyter Notebooks, analysis in DBeaver, and using text input with the Vector Engine to match against the existing datasets. More to come in future blogs and webinars.

Personally, I think it is much more interesting to use your own datasets, in this case a personal fitness dataset or, in the business world, data from your own systems, which can easily be brought into this technology to gain valuable insights.

If you have any other scenarios, let me know and I'll see if I, or we, can cover them as part of our next workshop on this.

Cheers

Jon

