Hybrid Developments using SAP HANA Cloud and SAP D...

10-19-2022 3:59 AM

I was going through the blog by vishwanath.g on the CI/CD automation approach for hybrid developments, which focuses on HDI container-based developments in SAP HANA Cloud and space-level developments in SAP Datasphere. This blog is a prerequisite for that automation approach and provides hands-on, step-by-step tutorials for accessing SAP Datasphere space objects in your HDI container, as well as accessing HDI containers in your SAP DWC space. (SAP Data Warehouse Cloud, or DWC, is the former name of SAP Datasphere, so both names appear in this blog.) This bi-directional approach, using SAP DWC as SaaS while utilizing the underlying SAP HANA Cloud for advanced or complex developments, supports customers in the following scenarios:

Please note that there have been some updates in SAP Datasphere roadmap with availability of Choropleth layer for Spatial Analytics and generic OData based Public APIs to build custom solutions. Please refer the blog https://blogs.sap.com/2022/10/05/sap-data-warehouse-cloud-in-q3-news/ from klaus-peter.sauer4 for more details.

Now let’s discuss the Scenario 1 in detail “Accessing Datasphere Space Objects in the HDI container”

Here are the steps to be followed in sequence

Prerequisites:

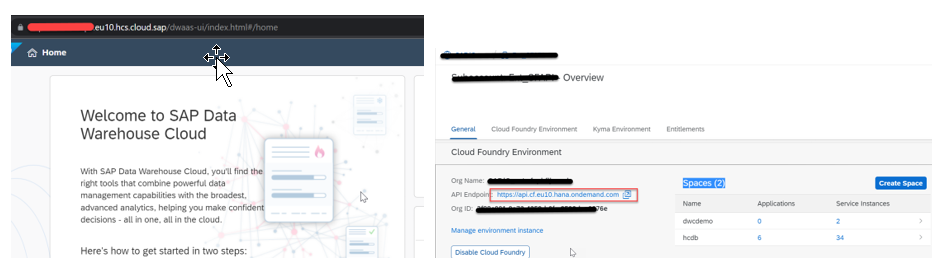

As you see in the below screenshots, my SAP Datasphere and subaccounts are in datacenter eu10.

And I also assume you have the necessary views in your Datasphere Space to be exposed for BAS based development. In my case, I already have a view "COVID_STATS", a federated data source from BigQuery which will be blended with other data sources.

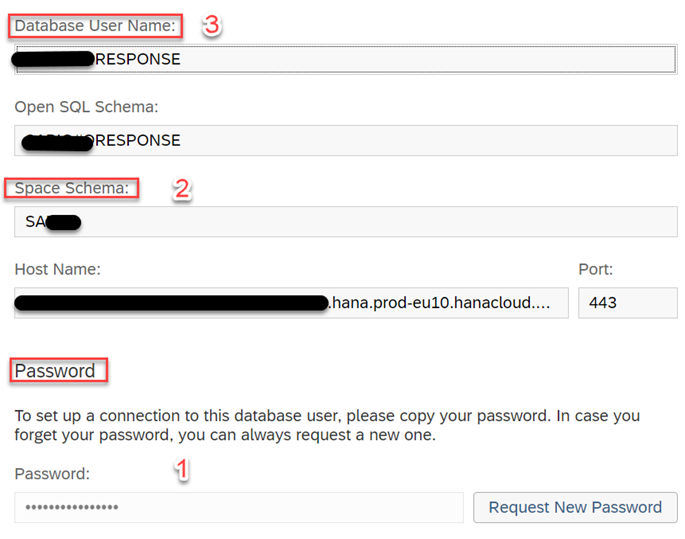

Finally, the Open SQL Schema/DB user within your Datasphere Space with access to read and write for HDI consumption. In my case, the DB User is QRESPONSE. When you select the info dialog [1], it will provide the credentials for User-Provided service [UPS] that we will use later for BAS developments.

SAP service Ticket for Space mapping:

In order to consume objects deployed in Datasphere Spaces into your own HDI container, you need access to the underlying SAP HANA Cloud tenant from your BTP space. In order to achieve this, you have raise a ticket to SAP component DWC-SM and request instance sharing (sharing the HANA Cloud tenant of SAP Datasphere to your BTP Space) . While creating the incident, please provide the following details and request for tenant mapping.

You can get the SAP Datasphere tenant ID & Database ID as seen below [System->About]

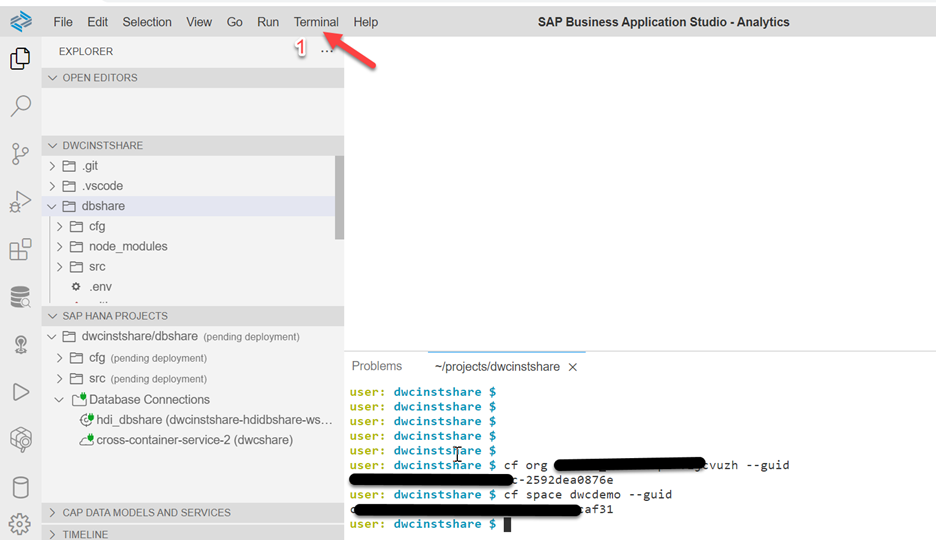

You can get the Org ID and Space ID using your Cloud Foundry CLI or from your Business Application studio Terminal[1] by executing the following commands

If you are assigned to multiple Orgs or Spaces , then execute "cf orgs" & "cf spaces" to get the Org name and Space name . And then execute the commands. Once you have these details, please raise a service ticket to DWC-SM requesting to map your BTP space to DWC Space.

Once support team completes the request, you will get a screenshot with confirmation of space mapping.

Now that all the necessary prerequisites and space mapping are taken care, let’s get into development.

HDI Development using Business Application Studio [BAS]

You can use the following git repository for reference and am going to explain the steps based on the same.

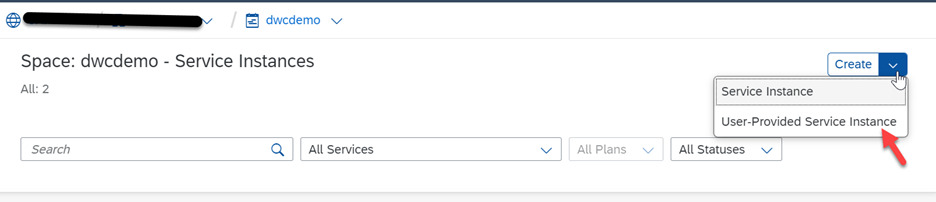

Create User-Provided Service [UPS] using BTP Cockpit

Since I already have my HANA Cloud instance and instance-based developments in the space [hcdb], I created a separate space for developments based on instance sharing [space dwcdemo]. If you want to create it in the existing space where you already have a HANA Cloud instance, then you need to add the Database ID in the yaml file before deployments.

As mentioned in the prerequisites [point 5], you should already have the Open SQL schema details from the DWC Space. Navigate to the newly created BTP space for developments and create a UPS with the details you have captured in point 5.

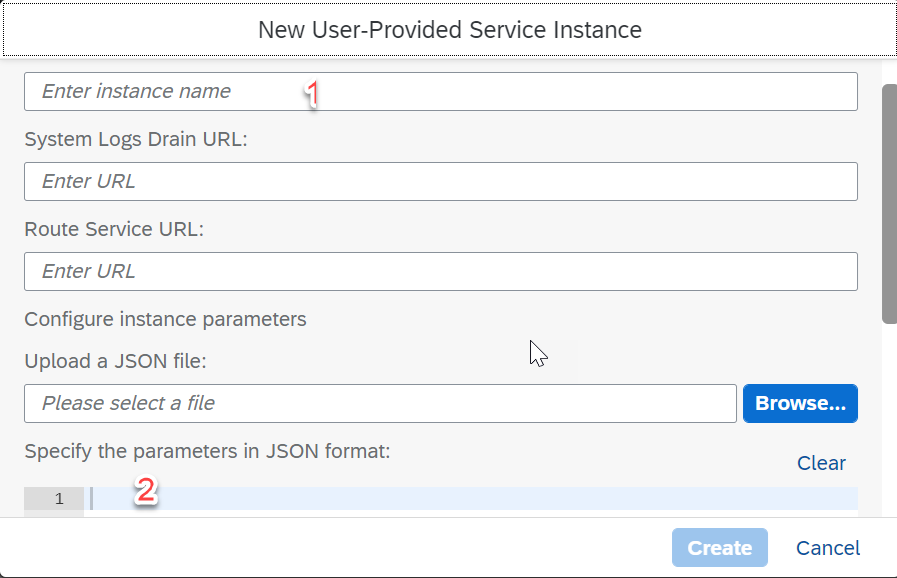

Provide the instance name[1] as “dwcshare” as the config files in shared GIT repo address the UPS with the same name. If you are providing a different name, then make sure you adjust the config files once you clone the repository. And in the section “Configure Instance parameters” section[2], provide the Open SQL Schema access details.

Use the below code snippet as reference for instance parameters.

And here is a screenshot for your reference for the Open SQL schema connection

Once completed, you should see the new UPS instance dwcshare created in your SAP BTP space.

Clone the repository and deploy with minimal adjustments

Navigate to Business Application Studio and create a new Space [or login to existing one] for SAP HANA Native Application

Select the newly created space[in my case “CrossAnalytics”] . Now login to BTP cloud foundry space before you clone the repository . This will be the space where you deploy the developments you are going to create based on SAP DWC artifacts.

To login to your BTP space, you can select the cloud foundry targets [1] or use Terminal[2].

Let’s use the cloud foundry targets approach to login and provide the credentials to connect to the BTP space.

Once you login, select the option “Clone from Git”

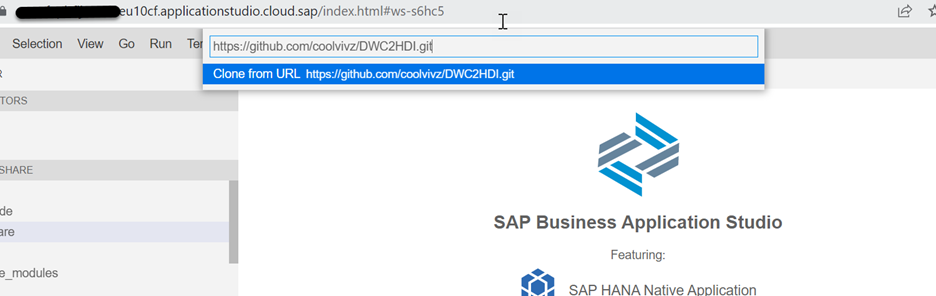

Provide the repo URL and click enter to Clone.

And select the option “Open” to view the repository in the BAS editor.

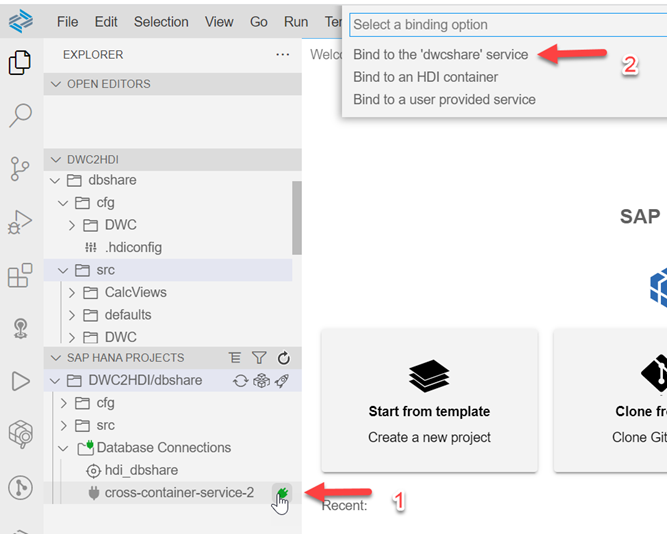

The repository is cloned now as you see in the highlighted part of the screen. And we need to map the UPS to container-service[1] and bind the service instance [2].

Let’s bind the UPS ‘dwcshare’ which was created previously. Select the bind option[1] and you will see the option “Bind to the dwcshare service”. Select the option and bind the UPS .

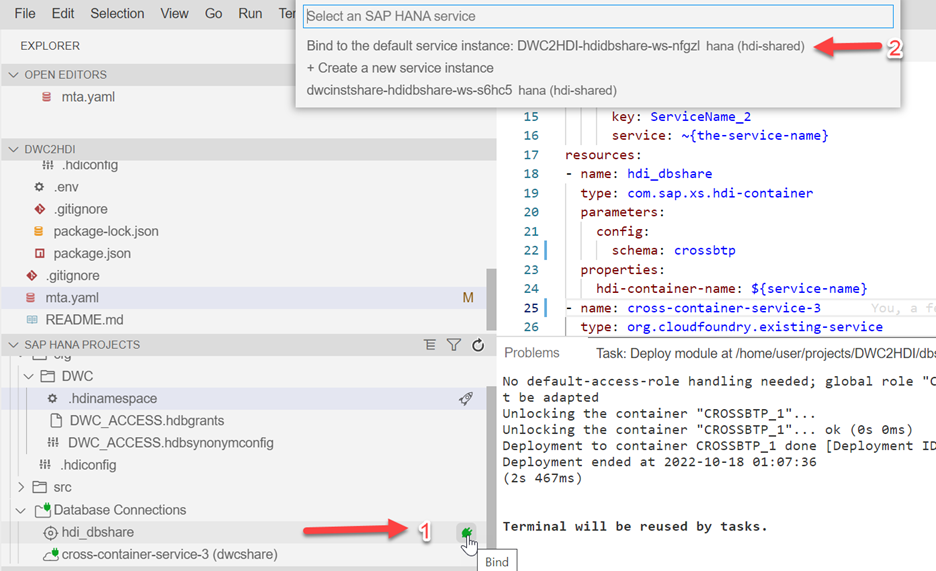

Now bind the hdi container [1] by selecting "Bind to the default service instance" [2]

When you bind the instance , you will see this message on how to deal with undeployment of files in case of differences between DB and project filesystem. Select “Enable”.

Once you bind the UPS and container, you will see the name of UPS and container as shown below.

Now let’s do a quick check on the artifacts before we deploy.

cfg folder has the hdbgrants and hdbsynonymconfig which will point to the objects from DWC Space.

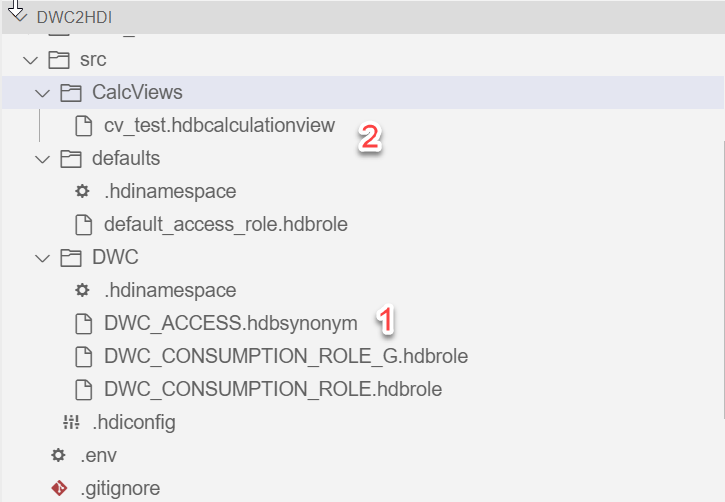

src folder will have the synonyms and the development artifacts such as calculation view based upon synonyms.

In the hdbsynonymconfig file, replace the upsname [1] if you have created a different UPS name[1] than used in repo and change the object name to your DWC Space object view or views[2].

Also update the same information in hdbgrants file with your UPS on line 2 and name of the Datasphere Space view on line 9

Finally in the source folder [src], update the hdbsynonym[1] with all your Datasphere space objects that you wanted to use for native HANA deployments. Please make sure the objects mentioned here are the same as mentioned in cfg folder. Also in my case I have created a calculation view[2] based on my Datasphere Space object COVID_STATS. So you need to replace the data source with your own view or can delete the calculation view as of now. Once you deploy the synonyms, you can create your own calculation view.

Once these adjustments are done, deploy the cfg[1]folder first followed by src[2] folder. Once the deployment is successful, the objects are deployed in the schema CROSSBTP_1. If you want to change the name of the schema, adjust the mta.yaml file (line 22).

Assuming you followed all these changes, the container should have deployed with no error. If you check the synonym used in my calculation view, it will be the same object from Datasphere Space.

Also, the deployed container CROSSBTP_1 can now be added back to SAP Datasphere space as a HDI container. And this HDI container could be used a source while using "Data Builder"

In order to consume the deployed container back in SAP Datasphere Space, please follow these steps.

1. Navigate to the Data builder within your space.

2. Create a graphical view

3. Under the sources tab, you will find the deployed container[CROSSBTP_1] and the view[cv_test] which could be utilized back in your SAP Datasphere space with other data sources.

I would like to thank axel.meier for his initial blog on this concept and helping with deployment issues. Hopefully this helps you in understanding how to access the HANA Cloud tenant of SAP Datasphere for HDI developments using Business Application studio. Please do share your feedback or reach out to me if you have issues in accessing the Git Repositories .

- Customers migrating from an SAP HANA Enterprise data mart to SAP Datasphere who depend on multi-model analytics capabilities

- Customers migrating from SAP BW to SAP Datasphere who depend on native SAP HANA developments

- Customers planning to expose SAP Datasphere space objects for external OData consumption or as web APIs

Please note that the SAP Datasphere roadmap has since been updated with the availability of a choropleth layer for spatial analytics and generic OData-based public APIs for building custom solutions. Please refer to the blog https://blogs.sap.com/2022/10/05/sap-data-warehouse-cloud-in-q3-news/ from klaus-peter.sauer4 for more details.

Now let’s discuss Scenario 1, “Accessing Datasphere Space Objects in the HDI container”, in detail.

Access Space Objects in HDI container

Follow these steps in sequence:

- Prerequisites

- Raise an SAP service ticket for space mapping [between your Datasphere space and your BTP space]

- HDI Development using Business Application Studio [BAS]

Prerequisites:

- You already have access to the SAP Datasphere tenant.

- You have a BTP account with an org and a space for HDI development.

- Your DWC tenant and your BTP account must be in the same data center (e.g. EU10 or US10).

- You already have a DWC space with the views that should be exposed for BAS-based development.

- You have created an Open SQL schema/database user within your SAP DWC space with read, write, and HDI consumption enabled.

As you see in the below screenshots, my SAP Datasphere and subaccounts are in datacenter eu10.

I also assume you have the necessary views in your Datasphere space to expose for BAS-based development. In my case, I already have a view "COVID_STATS", a federated data source from BigQuery, which will be blended with other data sources.

![DWC Analytical Dataset](image-2.png)

Finally, you need the Open SQL schema/database user within your Datasphere space, with read and write access and HDI consumption enabled. In my case, the database user is QRESPONSE. Selecting the info dialog [1] shows the credentials for the user-provided service [UPS] that we will use later for BAS development.

SAP service Ticket for Space mapping:

To consume objects deployed in Datasphere spaces in your own HDI container, you need access to the underlying SAP HANA Cloud tenant from your BTP space. To achieve this, you have to raise a ticket with SAP component DWC-SM and request instance sharing (sharing the HANA Cloud tenant of SAP Datasphere with your BTP space). When creating the incident, provide the following details and request tenant mapping:

- SAP Datasphere Tenant ID

- SAP Datasphere Database ID

- SAP BTP Org GUID

- SAP BTP Space GUID

You can find the SAP Datasphere tenant ID and Database ID under [System->About], as shown below.

![SAP DWC Tenant ID](image-3.png)

You can get the Org GUID and Space GUID using the Cloud Foundry CLI, or from the Business Application Studio terminal [1], by executing the following commands:

```shell
cf org <orgname> --guid
cf space <spacename> --guid
```

Business Application Studio

If you are assigned to multiple orgs or spaces, first execute "cf orgs" and "cf spaces" to get the org name and space name (note that "cf spaces" and "cf space" work on the currently targeted org; use "cf target -o <orgname>" to switch), and then execute the commands above. Once you have these details, raise a service ticket with DWC-SM requesting that your BTP space be mapped to your DWC space.
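As a convenience, the two GUID lookups above can be wrapped in a small shell function whose output can be pasted into the DWC-SM ticket. This is a minimal sketch, assuming you are already logged in with the Cloud Foundry CLI; the function name `print_ticket_guids` and the org/space names in the usage line are hypothetical. It targets the org first because `cf space` resolves the space name within the currently targeted org.

```shell
#!/bin/sh
# Hypothetical helper: print the SAP BTP Org GUID and Space GUID that the
# DWC-SM space-mapping ticket asks for. Assumes an active `cf login` session.
print_ticket_guids() {
  org_name="$1"
  space_name="$2"

  # `cf space` resolves the space within the targeted org, so target it first.
  cf target -o "$org_name" -s "$space_name" >/dev/null || return 1

  org_guid=$(cf org "$org_name" --guid) || return 1
  space_guid=$(cf space "$space_name" --guid) || return 1

  echo "SAP BTP Org GUID: $org_guid"
  echo "SAP BTP Space GUID: $space_guid"
}

# Usage (org and space names are placeholders):
#   print_ticket_guids my-org dwcdemo
```

The SAP Datasphere Tenant ID and Database ID still have to be copied from System -> About.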

Once the support team completes the request, you will receive a screenshot confirming the space mapping.

Space Mapping

Now that all the necessary prerequisites and the space mapping are taken care of, let’s get into development.

HDI Development using Business Application Studio [BAS]

- Create User-Provided Service [UPS] using BTP Cockpit

- Clone the repository and deploy with minimal adjustments

You can use the following git repository for reference and am going to explain the steps based on the same.

Create User-Provided Service [UPS] using BTP Cockpit

Since I already have my own HANA Cloud instance and instance-based developments in the space [hcdb], I created a separate space [dwcdemo] for developments based on instance sharing. If you want to work in an existing space that already contains a HANA Cloud instance, you need to add the Database ID to the yaml file (mta.yaml) before deploying, so that the HDI container is created on the shared SAP Datasphere database rather than on your own instance.
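In an MTA descriptor, the Database ID is typically set as the `database_id` key under the HDI container resource's `parameters` -> `config`. The same configuration parameter can also be passed when an HDI container is created directly from the Cloud Foundry CLI. The sketch below assumes that CLI route as an alternative; the function name and instance name are hypothetical, and `hana` with plan `hdi-shared` is the standard HDI container service offering on SAP BTP.

```shell
#!/bin/sh
# Hypothetical helper: create an HDI container (service "hana", plan
# "hdi-shared") on a specific SAP HANA Cloud database, identified by the
# Database ID shown in SAP Datasphere under System -> About.
create_hdi_container() {
  instance_name="$1"
  database_id="$2"
  cf create-service hana hdi-shared "$instance_name" \
    -c "{\"database_id\": \"$database_id\"}"
}

# Usage (both arguments are placeholders):
#   create_hdi_container my-hdi-container <Database ID>
```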

As mentioned in the prerequisites [point 5], you should already have the Open SQL schema details from the DWC Space. Navigate to the newly created BTP space for developments and create a UPS with the details you have captured in point 5.

Enter “dwcshare” as the instance name [1], because the config files in the shared Git repository reference the UPS by that name. If you use a different name, adjust the config files after you clone the repository. In the “Configure Instance Parameters” section [2], provide the Open SQL schema access details.

Use the code snippet below as a reference for the instance parameters, replacing each placeholder with your own value. Note that "schema" takes the space schema, NOT the Open SQL schema.

```json
{
  "password": "<password of the DWC space database user [1]>",
  "schema": "<space schema, NOT the Open SQL schema [2]>",
  "tags": [
    "hana"
  ],
  "user": "<Open SQL schema database username [3]>"
}
```

Here is a screenshot of the Open SQL schema connection for reference.

Once completed, you should see the new UPS instance dwcshare created in your SAP BTP space.
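If you prefer the CLI over the BTP cockpit, the same UPS can be created with `cf create-user-provided-service` and a credentials JSON using the same keys as above. This is a minimal sketch, assuming a POSIX shell with `sed`; the helper names `json_escape` and `ups_credentials` are hypothetical. The escaping step is there because a password may contain quotes or backslashes that would otherwise break the JSON.

```shell
#!/bin/sh
# Hypothetical helpers: build the credentials JSON for the "dwcshare" UPS
# with the same keys as the cockpit instance parameters.

# Escape backslashes first, then double quotes, so the value is a valid
# JSON string body.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Arguments: Open SQL database user, its password, space schema.
ups_credentials() {
  user=$(json_escape "$1")
  password=$(json_escape "$2")
  schema=$(json_escape "$3")
  printf '{"user": "%s", "password": "%s", "schema": "%s", "tags": ["hana"]}' \
    "$user" "$password" "$schema"
}

# Usage (placeholders; "schema" is the space schema, not the Open SQL schema):
#   cf create-user-provided-service dwcshare \
#     -p "$(ups_credentials '<DB user>' '<password>' '<SPACE_SCHEMA>')"
```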

Clone the repository and deploy with minimal adjustments

Navigate to Business Application Studio and create a new dev space (or open an existing one) of type SAP HANA Native Application.

Select the newly created dev space (in my case “CrossAnalytics”). Before you clone the repository, log in to your BTP Cloud Foundry space. This is the space where you will deploy the developments you create based on SAP DWC artifacts.

To log in to your BTP space, you can either select Cloud Foundry targets [1] or use the terminal [2].

Let’s use the Cloud Foundry targets approach: log in and provide the credentials to connect to the BTP space.
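For the terminal option [2], the same login can be done with `cf login`. A minimal sketch, assuming region eu10 as in this blog (the API endpoint differs per region); the function name and the org/space names in the usage line are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: log in from the BAS terminal and target the BTP
# org/space in one step. The endpoint below is for region eu10; replace it
# with the API endpoint of your own region.
CF_API="https://api.cf.eu10.hana.ondemand.com"

cf_login_to_space() {
  org_name="$1"
  space_name="$2"
  # cf login prompts for email and password, then targets the org and space.
  cf login -a "$CF_API" -o "$org_name" -s "$space_name"
}

# Usage (placeholders):
#   cf_login_to_space my-org dwcdemo
```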

Once you login, select the option “Clone from Git”

Provide the repository URL and press Enter to clone it.

And select the option “Open” to view the repository in the BAS editor.

The repository is now cloned, as you can see in the highlighted part of the screen. Next, we need to bind the UPS [1] and the HDI container service instance [2].

Let’s bind the UPS ‘dwcshare’ which was created previously. Select the bind option[1] and you will see the option “Bind to the dwcshare service”. Select the option and bind the UPS .

Now bind the HDI container [1] by selecting "Bind to the default service instance" [2].

When you bind the instance, you will see a message asking how to handle the undeployment of files when the database and the project file system differ. Select “Enable”.

Once both are bound, you will see the names of the UPS and the container as shown below.

Now let’s do a quick check on the artifacts before we deploy.

cfg folder has the hdbgrants and hdbsynonymconfig which will point to the objects from DWC Space.

The src folder contains the synonyms and the development artifacts, such as a calculation view based on the synonyms.

In the hdbsynonymconfig file, replace the UPS name [1] if you created the UPS under a different name than the one used in the repository, and change the object name to your DWC space view or views [2].
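For orientation, a minimal hdbsynonymconfig entry for a single view might look like the output of the helper below. This is a sketch, not the file from the repository: it assumes the synonym is named after the view (COVID_STATS), and the function name and target file name are hypothetical. The `schema.configure` key tells the HDI deployer to take the target schema from the `schema` credential of the bound UPS, which is why the UPS name appears in this file.

```shell
#!/bin/sh
# Hypothetical helper: print a .hdbsynonymconfig entry for one Datasphere
# space view. The target schema is resolved at deploy time from the
# "schema" credential of the UPS named in the first argument.
synonym_config() {
  ups_name="$1"
  view_name="$2"
  cat <<EOF
{
  "$view_name": {
    "target": {
      "object": "$view_name",
      "schema.configure": "$ups_name/schema"
    }
  }
}
EOF
}

# Usage (file name is a placeholder; adjust to the file in your repository):
#   synonym_config dwcshare COVID_STATS > cfg/dwcshare.hdbsynonymconfig
```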

Update the same information in the hdbgrants file: your UPS name on line 2 and the name of the Datasphere space view on line 9.
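Similarly, a minimal hdbgrants file for one view could be generated as follows. This is a sketch under assumptions: the schema argument must be your space schema, the function name is hypothetical, and the layout (and therefore the line numbers) may differ from the file in the repository. It grants SELECT with grant option to the container's object owner, so that views built on the synonym can be consumed further, and plain SELECT to the application user; both grants are issued through the UPS named at the top level.

```shell
#!/bin/sh
# Hypothetical helper: print a .hdbgrants file granting SELECT on one
# Datasphere space view via the given UPS.
# Arguments: UPS name, space schema, view name.
grants_file() {
  ups_name="$1"
  schema_name="$2"
  view_name="$3"
  cat <<EOF
{
  "$ups_name": {
    "object_owner": {
      "object_privileges": [
        {
          "schema": "$schema_name",
          "name": "$view_name",
          "privileges_with_grant_option": ["SELECT"]
        }
      ]
    },
    "application_user": {
      "object_privileges": [
        {
          "schema": "$schema_name",
          "name": "$view_name",
          "privileges": ["SELECT"]
        }
      ]
    }
  }
}
EOF
}

# Usage (placeholders):
#   grants_file dwcshare <SPACE_SCHEMA> COVID_STATS > cfg/dwcshare.hdbgrants
```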

Finally, in the source folder [src], update the hdbsynonym file [1] with all the Datasphere space objects you want to use for native HANA developments. Make sure the objects listed here are the same as those in the cfg folder. In my case, I also created a calculation view [2] based on my Datasphere space object COVID_STATS, so you need to either replace its data source with your own view or delete the calculation view for now. Once you have deployed the synonyms, you can create your own calculation view.

Once these adjustments are done, deploy the cfg folder [1] first, followed by the src folder [2]. Once the deployment succeeds, the objects are deployed in the schema CROSSBTP_1. If you want to change the schema name, adjust the mta.yaml file (line 22).

If you have made all these changes, the container should deploy without errors. If you check the synonym used in my calculation view, it points to the same object in the Datasphere space.

The deployed container CROSSBTP_1 can now also be added back to the SAP Datasphere space as an HDI container, and this HDI container can be used as a source in the Data Builder.

To consume the deployed container in your SAP Datasphere space, follow these steps:

1. Navigate to the Data Builder within your space.

2. Create a graphical view.

3. Under the Sources tab, you will find the deployed container [CROSSBTP_1] and the view [cv_test], which you can combine with other data sources in your SAP Datasphere space.

I would like to thank axel.meier for his initial blog on this concept and for helping with deployment issues. I hope this helps you understand how to access the HANA Cloud tenant of SAP Datasphere for HDI development using Business Application Studio. Please share your feedback, or reach out to me if you have issues accessing the Git repositories.
