Optimise your SAP BTP, Cloud Foundry runtime costs

02-11-2022

8:40 AM

Recent talks with customers motivated me to write this blog post on how you can optimize your consumption, and therefore your charges, for SAP BTP, Cloud Foundry runtime when using the consumption-based models of SAP BTP. The runtime is charged by “GB of memory”, but it wasn’t obvious which amount of memory is taken into consideration.

When you deploy an application either via the user interface or by defining its attributes via YAML, you set the amount of memory which is reserved for each instance (or a default of 1024 MB is set). In the UI, this is called “Memory Quota” and inside a YAML, this is the value

This value is reported in the respective Cloud Foundry App Usage event (further described here at “memory_in_mb_per_instance“) along with the number of app instances.

Each hour, this is metered for each application instance in each Space inside a Global Account. At the end of a month, the hourly values are summed-up, divided by 730 hours, and rounded-up to the next full GB which is then charged in case the Global Account is based on the consumption-based model (CPEA or Pay-as-You-Go). An elaborate example for this calculation is given in the SAP Help Portal.

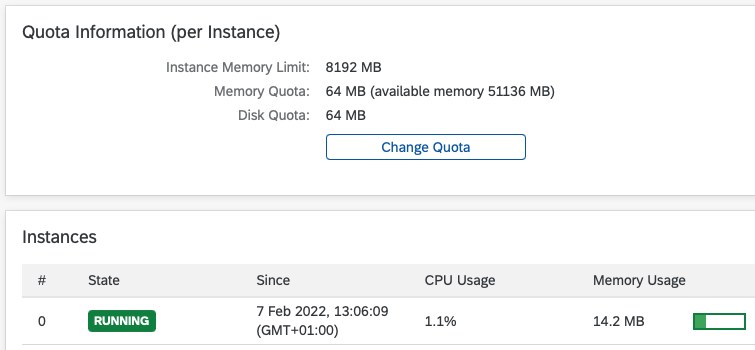

To start optimizing the above value, open your deployed application. You can see the defined value in the UI at “Quota Information > Memory Quota”. The maximum you can define for each instance is the “Instance Memory Limit“.

The memory available for the whole subaccount is shown in the brackets of “Memory Quota” called “available memory“ (see the second "Note" box here).

Further down below in the “Instances” section, you can see how much of the memory quota per instance is currently used. This is visualized with by the green bar of “Memory Usage”.

If you want to analyze the memory usage over time, you can use the “SAP Application Logging Service for SAP BTP“. It retains logs for 7 days and inside the Kibana dashboard of the service instance, you can open “Four Golden Signals > Saturation” and there see the “Memory load” over time. At the top right, you can change the time frame for the charts.

Furthermore, keep in mind that the assigned memory relates to the assigned CPUs. Each GB of memory relates to 1/4 CPU. Therefore, it is important to consider as well the “CPU load” over time which is displayd above the memory load chart.

After learning about the required memory+CPU and potential peaks, you can apply changes to the Memory Quota. Keep in mind that “if an instance exceeds this limit, Cloud Foundry restarts the instance. If an instance exceeds its memory limit repeatedly in a short period of time, Cloud Foundry delays restarting the instance.“ (source)

On top, keep in mind that apps based on certain technologies require a minimum of memory, like Java-based applications.

Once you know the limits you need to set per instance for the vertical scaling, you can start thinking about horizontal scaling to dynamically add instances upon load or time schedule. Have a look into the “Application Autoscaler” service offered inside the SAP BTP to learn more about this.

"Efficient Workload management on SAP Cloud Foundry using Application Autoscaler" elaborates on the topic, too.

When you deploy an application, either via the user interface or by defining its attributes via YAML, you set the amount of memory that is reserved for each instance (or a default of 1024 MB is set). In the UI, this is called “Memory Quota”; inside a YAML, this is the value modules.parameters.memory.

This value is reported in the respective Cloud Foundry App Usage event (further described here under “memory_in_mb_per_instance”), along with the number of app instances.

Each hour, this is metered for each application instance in each Space inside a Global Account. At the end of the month, the hourly values are summed up, divided by 730 hours, and rounded up to the next full GB, which is then charged if the Global Account uses a consumption-based model (CPEA or Pay-As-You-Go). An elaborate example of this calculation is given in the SAP Help Portal.
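To make the arithmetic concrete, here is a minimal Python sketch of the calculation described above. It is not SAP's metering code: the function name `billable_gb` and the input format (one total of reserved MB per metered hour) are hypothetical, and it assumes 1 GB = 1024 MB. Please check the example in the SAP Help Portal for the authoritative calculation.

```python
HOURS_PER_MONTH = 730   # divisor named in the text
MB_PER_GB = 1024        # assumption: 1 GB = 1024 MB


def billable_gb(hourly_reserved_mb):
    """Return the GB charged for one month.

    hourly_reserved_mb: one entry per metered hour, each being the sum of
    (memory quota in MB x number of instances) over all apps in that hour.
    """
    total_mb_hours = sum(hourly_reserved_mb)
    divisor = MB_PER_GB * HOURS_PER_MONTH
    # Integer ceiling division: round up to the next full GB.
    return -(-total_mb_hours // divisor)


# Two instances with 1024 MB each, running the whole month.
print(billable_gb([2 * 1024] * 730))
# One instance with 512 MB, running the whole month (rounded up to a full GB).
print(billable_gb([512] * 730))
```

Note that the reserved Memory Quota enters the calculation, not the memory the app actually uses, which is why lowering an oversized quota reduces the charge.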

Start analysing

To start optimizing the above value, open your deployed application. You can see the defined value in the UI at “Quota Information > Memory Quota”. The maximum you can define for each instance is the “Instance Memory Limit”.

The memory available for the whole subaccount is shown in brackets next to “Memory Quota” as “available memory” (see the second “Note” box here).

Further down, in the “Instances” section, you can see how much of the memory quota each instance currently uses. This is visualized by the green bar of “Memory Usage”.

If you want to analyze the memory usage over time, you can use the “SAP Application Logging Service for SAP BTP”. It retains logs for 7 days. In the Kibana dashboard of the service instance, open “Four Golden Signals > Saturation” to see the “Memory load” over time. At the top right, you can change the time frame for the charts.

Furthermore, keep in mind that the assigned memory relates to the assigned CPUs: each GB of memory relates to 1/4 CPU. It is therefore important to also consider the “CPU load” over time, which is displayed above the memory load chart.

After learning about the required memory and CPU and their potential peaks, you can apply changes to the Memory Quota. Keep in mind that “if an instance exceeds this limit, Cloud Foundry restarts the instance. If an instance exceeds its memory limit repeatedly in a short period of time, Cloud Foundry delays restarting the instance.” (source)

In addition, keep in mind that apps based on certain technologies, such as Java-based applications, require a minimum amount of memory.
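The right-sizing step above can be sketched in a few lines of Python. This is only an illustration: the 25% headroom, the 64 MB rounding step, and the function names are my own assumptions, not SAP recommendations. Only the 1/4 CPU per GB ratio comes from the text.

```python
import math

CPU_PER_GB = 0.25  # from the text: each GB of memory relates to 1/4 CPU


def suggest_memory_quota_mb(peak_used_mb, headroom=0.25, step_mb=64):
    """Peak usage plus headroom, rounded up to a multiple of step_mb.

    headroom and step_mb are hypothetical defaults; tune them to your app.
    """
    target_mb = peak_used_mb * (1 + headroom)
    return math.ceil(target_mb / step_mb) * step_mb


def cpu_share(memory_quota_mb):
    """CPU share that comes with a given Memory Quota."""
    return memory_quota_mb / 1024 * CPU_PER_GB


quota = suggest_memory_quota_mb(400)  # observed peak of 400 MB
print(quota, cpu_share(quota))
```

Before applying a lower quota, compare the resulting CPU share with the “CPU load” chart, because reducing memory also reduces the CPU available to the instance.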

Avoiding trouble with the help of the Application Autoscaler

Once you know the limits you need to set per instance for vertical scaling, you can start thinking about horizontal scaling to add instances dynamically based on load or a time schedule. Have a look at the “Application Autoscaler” service offered in SAP BTP to learn more about this.

"Efficient Workload management on SAP Cloud Foundry using Application Autoscaler" elaborates on the topic, too.
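As a rough illustration of horizontal scaling on memory load, the following Python sketch builds a scaling policy document. The field names follow the policy format of the open-source Cloud Foundry App Autoscaler as I understand it; the thresholds and the helper name `build_policy` are hypothetical. Please verify the format against the Application Autoscaler documentation before using it.

```python
import json


def build_policy(min_instances, max_instances, scale_out_at=80, scale_in_at=30):
    """Build a policy that scales on memory utilization (percent of quota).

    The threshold values are illustrative assumptions, not recommendations.
    """
    return {
        "instance_min_count": min_instances,
        "instance_max_count": max_instances,
        "scaling_rules": [
            {   # add an instance when memory utilization is high
                "metric_type": "memoryutil",
                "threshold": scale_out_at,
                "operator": ">=",
                "adjustment": "+1",
            },
            {   # remove an instance when memory utilization is low
                "metric_type": "memoryutil",
                "threshold": scale_in_at,
                "operator": "<",
                "adjustment": "-1",
            },
        ],
    }


print(json.dumps(build_policy(1, 3), indent=2))
```

Since every additional instance reserves its own Memory Quota, the maximum instance count also caps how much memory can be metered during peaks.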
