This blog is part of a blog series from SAP Datasphere product management with the focus on the Replication Flow capabilities in SAP Datasphere:

- Replication Flow Blog Series Part 1 – Overview | SAP Blogs

- Replication Flow Blog Series Part 2 – Premium Outbound Integration | SAP Blogs

- Replication Flows Blog Series Part 3 – Integration with Kafka

- Replication Flow Blog Series Part 4 – Sizing

- Replication Flows Blog Series Part 5 – Integration between SAP Datasphere and Databricks

- Replication Flows Blog Series Part 6 – Confluent as a Replication Target

In the first detailed blog, you learned how to set up a replication with Replication Flow and got more details about the different settings and monitoring.

Introduction to premium outbound integration

With the mid-November release of SAP Datasphere, several new target connectivity types became available. With a clear focus on strengthening integrated customer scenarios, we named this external connectivity “premium outbound integration” to describe data integration with external parties. It allows data movement into object stores from the main providers as well as into Google BigQuery as part of the SAP-Google Analytics Partnership. The following connectivity options are now available:

- Google BigQuery as part of the endorsed partnership

- Google Cloud Storage (GCS)

- Azure Data Lake Storage Gen 2

- Amazon Simple Storage Service (AWS S3)

Connectivity Overview November 2023

With this enhancement, customers can extend their use cases and add integration with object stores to their scenarios. SAP Datasphere as a business data fabric enables communication between the SAP-centric business data “world” and data assets stored externally. Premium Outbound Integration is the recommended way to move data out of any SAP system to external targets.

Integration with Google BigQuery

To highlight one of the most wanted replication scenarios, we want to show you a step-by-step explanation of a Replication Flow from SAP S/4HANA to BigQuery.

For this blog, we assume the Google BigQuery connection has already been created in the space by following the step-by-step guide in the SAP Datasphere documentation: BigQuery Docu @ help.sap.com

Specify Replication Flow

In our scenario, we defined an SAP S/4HANA system as the source connection, and we want to replicate the following CDS views:

- Z_CDS_EPM_BUPA

- Z_CDS_EPM_PD

- Z_CDS_EPM_SO

As Load Type we selected: Initial and Delta

Source system and selected CDS views

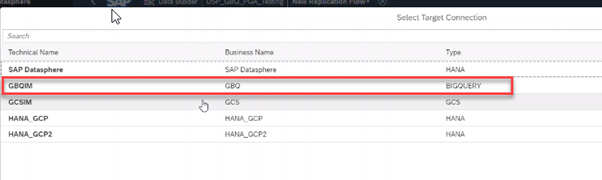

In the next step, we select our predefined Google BigQuery connection as the target system.

Target system BigQuery

Afterwards we need to select the target dataset by navigating to the container selection.

Select target dataset

In this example we choose the dataset GOOGLE_DEMO that already exists in BigQuery.

Note: In Google BigQuery terminology, the container selection in your Replication Flow corresponds to the datasets that are available in your Google BigQuery system. Therefore, we will use the term dataset in the upcoming paragraphs when we talk about the target container in this sample scenario.

Target dataset

The target dataset GOOGLE_DEMO is set and now we can use the basic filter and mapping functionality you know from Replication Flow.

Let us have a quick look at the default settings by navigating to the projection screen.

Navigate to Projections

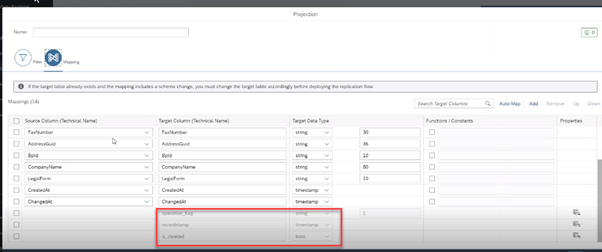

After navigating to the Mapping tab, you will see the derived structure which can be adjusted.

Structure Mapping

In addition, you will also see three fields that cannot be changed:

- operation_flag: indicates the executed operation on the source record (insert, update, delete etc.).

- recordstamp: timestamp when the change happened.

- is_deleted: indicates whether a record was deleted in the source.

These three fields are created automatically in the target structure and filled by the system. You can use them depending on the information you require in a given use case.
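Because the target receives appended change records, a consumer often wants the latest state per key. The following is a minimal sketch of how the three technical fields could be used for that, not the product's own logic. It assumes rows are available as Python dictionaries, that recordstamp values are comparable (for example datetime objects), that is_deleted is a boolean spelled in lowercase, and that the key column name is passed in by the caller; all of these are illustrative assumptions.

```python
from typing import Any, Iterable


def latest_state(rows: Iterable[dict[str, Any]], key: str) -> list[dict[str, Any]]:
    """Collapse appended change records into the latest surviving row per key.

    Assumes every row carries the technical fields "recordstamp" and
    "is_deleted" (lowercase spelling assumed) plus a business key column
    whose name is given by `key` (hypothetical, chosen by the caller).
    """
    newest: dict[Any, dict[str, Any]] = {}
    for row in rows:
        current = newest.get(row[key])
        # Keep the record with the most recent recordstamp for each key.
        if current is None or row["recordstamp"] >= current["recordstamp"]:
            newest[row[key]] = row
    # Drop keys whose most recent record marks a deletion in the source.
    return [row for row in newest.values() if not row["is_deleted"]]
```

The operation_flag field is not needed for this particular reduction, but it can be kept in the result for auditing which operation produced the surviving row.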

Besides the standard adjustments that can be made to structures, there are some special target settings that you can reach via the settings icon back on the main screen.

BigQuery target settings

The Write Mode is set to Append by default. In this release, the Append API from Google BigQuery is used. Further APIs will be considered depending on availability.

Depending on their length, decimals can be clamped by activating the Clamp Decimals setting. This can also be activated for all objects in the Replication Flow.

You can find a comprehensive explanation in our product documentation: help.sap.com
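To illustrate the general idea behind clamping, the sketch below limits a decimal value to the range of the BigQuery NUMERIC type (38 digits of precision, 9 of them after the decimal point). It is only an illustration of the concept: the function name is hypothetical, and this blog does not state which bounds or rounding rules the Clamp Decimals setting applies internally.

```python
from decimal import Decimal

# Largest value of BigQuery NUMERIC: 29 integer digits and 9 fractional digits.
NUMERIC_MAX = Decimal("9" * 29 + "." + "9" * 9)
NUMERIC_MIN = -NUMERIC_MAX


def clamp_decimal(value: Decimal,
                  low: Decimal = NUMERIC_MIN,
                  high: Decimal = NUMERIC_MAX) -> Decimal:
    """Return value unchanged if it lies in [low, high], else the nearest bound."""
    return max(low, min(value, high))
```

A value such as Decimal("1e40") would be replaced by NUMERIC_MAX, while values already inside the range pass through unchanged.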

Deploy and run data replication

Next, we deploy the Replication Flow and then start it.

Run Replication Flow

This will start the replication process which can be monitored in the monitoring environment of SAP Datasphere. This was illustrated in our first blog, so we will directly jump to the BigQuery environment and have a look at the moved data.

BigQuery Explorer in the Google Cloud Console

After navigating to our dataset GOOGLE_DEMO we find our automatically created tables and select Z_CDS_EPM_BUPA to have a look at the structure.

Dataset structure in BigQuery

The data can be displayed by selecting Preview.

Preview replicated data
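If you prefer a query over the Preview button, a comparable look at the data can be taken with a simple SQL statement. The helper below is a small sketch that only builds the query text; the project ID is a hypothetical placeholder, while the dataset and table names are the ones from this example.

```python
def preview_query(project: str, dataset: str, table: str, limit: int = 10) -> str:
    """Build a GoogleSQL statement that returns the first rows of a table."""
    if limit <= 0:
        raise ValueError("limit must be a positive integer")
    # Backticks quote the fully qualified table name in BigQuery.
    return f"SELECT * FROM `{project}.{dataset}.{table}` LIMIT {limit}"


# "my-project" is a placeholder for your own Google Cloud project ID.
print(preview_query("my-project", "GOOGLE_DEMO", "Z_CDS_EPM_BUPA"))
```

The resulting statement can be pasted into the query editor of the Google Cloud Console.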

In this blog, you got an overview of the new premium outbound integration functionality offered by SAP Datasphere, the recommended way to move data out of the SAP environment.

Extending the connectivity to object stores and BigQuery will give you significant new opportunities.

You can also find some more information about the usage of Google BigQuery in Replication Flows in our product documentation: help.sap.com

Always check our official roadmap for planned connectivity and functionality: SAP Datasphere Roadmap Explorer

Thanks to my co-author daniel.ingenhaag and the rest of the SAP Datasphere product team.
