Setting Up Initial Access to HANA Cloud data lake ... (SAP Community, Technology Blogs by SAP)


The SAP HANA Cloud, data lake supports storage of any type of data in its native format. Its managed file storage, HANA Data Lake Files, gives you a place to store any type of file securely without having to set up and configure storage in an external hyperscaler account. This is useful when you need a place to stage files for ingestion into HANA Cloud, data lake IQ for high-speed SQL analysis, or a place to put data you extract. Hopefully, in the near future, HANA Data Lake Files will also be easily accessible from HANA Cloud, HANA databases.

Setting up and configuring access to SAP HANA Cloud, data lake Files for the first time can be difficult, especially if you come from a database background and are not familiar with object storage or REST APIs.

Here is a process I have used to test HANA Data Lake Files. Because HANA Data Lake Files manages user security and access through certificates, you need signed client certificates to set up user access. If you don't have access to a certificate signing authority, you can use OpenSSL to create your own CA and a client certificate signed by it, and then update the HDL Files configuration as described below. I have used this process many times, so it should work for you.

First, you need to create a CA certificate, which you will upload to HDL Files later. Start by generating the CA's private key with OpenSSL:

```shell
openssl genrsa -out ca.key 2048
```

Next, create the CA's self-signed public certificate (valid for 200 days in this case). When prompted, provide at least a Common Name and fill in the other fields as desired.

```shell
openssl req -x509 -new -key ca.key -days 200 -out ca.crt
```

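Now create a certificate signing request (CSR) and a private key for the client certificate. Again, provide at least a Common Name and fill in the other fields as desired. The `-nodes` option leaves the client key unencrypted, so curl can use it later without a passphrase.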

```shell
openssl req -new -nodes -newkey rsa:2048 -out client.csr -keyout client.key
```

Finally, create the client certificate by signing the CSR with your CA (valid for 100 days in this case):

```shell
openssl x509 -days 100 -req -in client.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out client.crt
```

*Note*: Make sure the subject fields of the CA and client certificates are not all identical. Otherwise the client certificate's issuer equals its subject, so it is treated as a self-signed certificate and the verification below fails.
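The four commands above prompt for the subject fields interactively. If you prefer a repeatable script, here is a minimal non-interactive sketch that passes the subjects with OpenSSL's `-subj` option. The subject values (`HDLFS-Test-CA`, `hdlfs-user`, `Example`) are placeholders of my own, not anything HDL Files requires; the only point is that the CA and client subjects differ, as the note explains.

```shell
# Non-interactive version of the OpenSSL steps above.
# Placeholder subjects (assumptions): any values work as long as the CA and
# client subjects differ, so the client cert is not mistaken for self-signed.
set -e

CA_SUBJ="/CN=HDLFS-Test-CA/O=Example"
CLIENT_SUBJ="/CN=hdlfs-user/O=Example"

# CA private key
openssl genrsa -out ca.key 2048

# Self-signed CA certificate, valid for 200 days
openssl req -x509 -new -key ca.key -days 200 -subj "$CA_SUBJ" -out ca.crt

# Client private key (unencrypted because of -nodes) and signing request
openssl req -new -nodes -newkey rsa:2048 -subj "$CLIENT_SUBJ" \
  -keyout client.key -out client.csr

# Client certificate signed by the CA, valid for 100 days
openssl x509 -req -days 100 -in client.csr -CA ca.crt -CAkey ca.key \
  -CAcreateserial -out client.crt

# Confirm the chain; prints "client.crt: OK" on success
openssl verify -CAfile ca.crt client.crt
```

The script writes `ca.key`, `ca.crt`, `client.key`, `client.csr`, `client.crt`, and a serial file into the current directory, the same files the manual steps produce.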

To verify that the client certificate was signed by your CA (so you know that HANA data lake can use the uploaded CA certificate to validate your client certificate), run:

```shell
openssl verify -CAfile ca.crt client.crt
```
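To catch the self-signed pitfall from the note above explicitly, here is a small sketch of a helper. The function name `check_client_cert` is hypothetical and not part of any SAP tooling. It compares the client certificate's subject and issuer (both printed in RFC 2253 form) and only runs the same `openssl verify` check if they differ.

```shell
# Hypothetical helper: check_client_cert CA_CERT CLIENT_CERT
# Fails early if the client cert's subject equals its issuer (it would look
# self-signed), otherwise runs the usual chain verification.
check_client_cert() {
  ca_crt=$1
  client_crt=$2
  # Strip the "subject=" / "issuer=" prefixes (older OpenSSL adds a space).
  subject=$(openssl x509 -in "$client_crt" -noout -subject -nameopt RFC2253 |
    sed 's/^subject= *//')
  issuer=$(openssl x509 -in "$client_crt" -noout -issuer -nameopt RFC2253 |
    sed 's/^issuer= *//')
  if [ "$subject" = "$issuer" ]; then
    echo "FAIL: subject equals issuer ($subject); use different CA and client fields" >&2
    return 1
  fi
  # Prints "<file>: OK" and returns 0 when the CA signed the client cert
  openssl verify -CAfile "$ca_crt" "$client_crt"
}
```

For example, `check_client_cert ca.crt client.crt` prints `client.crt: OK` for a correctly signed certificate.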

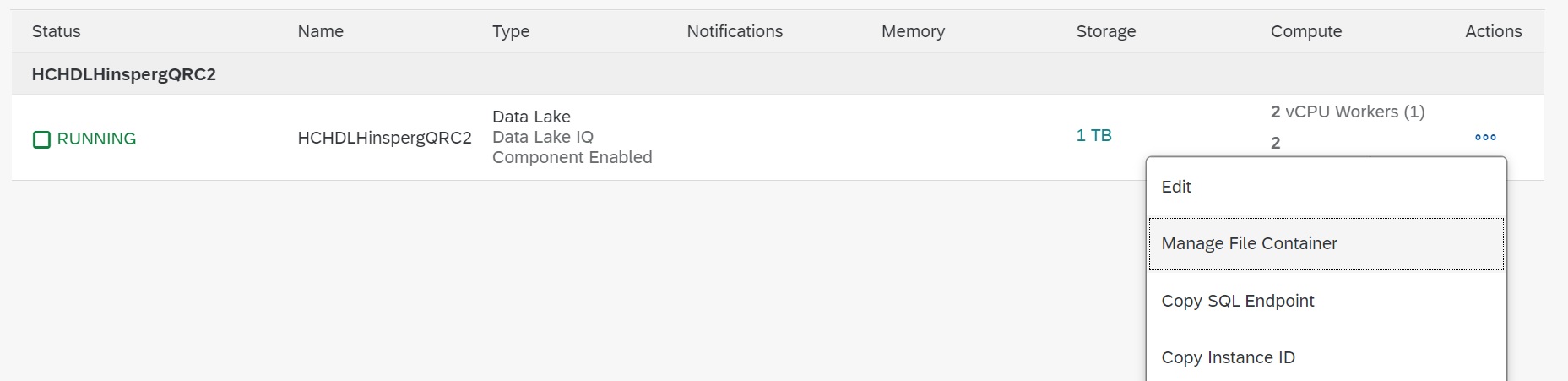

Then open your instance in HANA Cloud Central and choose "Manage File Container" to set up your HANA Data Lake Files user.

Edit the configuration and choose "Add" in the "Trusts" section. Copy or upload the ca.crt you generated earlier and click "Apply". Don't close the "Manage File Container" screen yet.

Now we can configure our user to enable them to access the managed file storage.

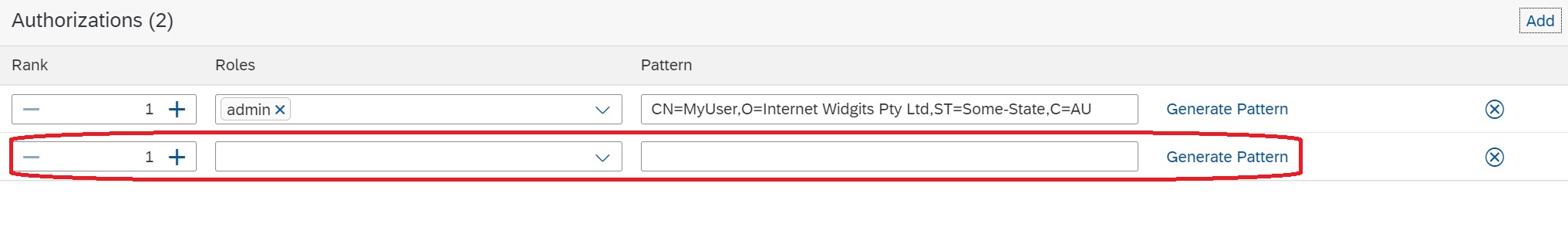

Scroll down to the "Authorizations" section and choose "Add". A new entry appears.

Choose a role for your user from the drop-down list (by default, there are admin and user roles).

Here is where things get a little tricky. You need to add the pattern string from your client certificate so that, when you make a request, the storage gateway (the entry point into HANA Data Lake Files) can determine which user to validate you against.

You have two options for generating the pattern string. The first is to run the following OpenSSL command and use its output, omitting the "subject=" prefix:

```shell
openssl x509 -in client.crt -nameopt RFC2253 -subject -noout
```
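If you script this step, you can strip the prefix with `sed` instead of editing the output by hand. A minimal sketch; `cert_pattern` is a hypothetical helper name, and the `sed` expression allows for the optional space that some OpenSSL versions print after `subject=`.

```shell
# Hypothetical helper: print a certificate's subject in RFC 2253 form with the
# leading "subject=" prefix removed, ready to paste as the authorization pattern.
# Defaults to ./client.crt when no file is given.
cert_pattern() {
  openssl x509 -in "${1:-client.crt}" -noout -subject -nameopt RFC2253 |
    sed 's/^subject= *//'
}
```

For example, `cert_pattern client.crt` prints the same string as the command above, minus the prefix. Note that RFC 2253 form lists the subject's components in reverse order compared with how they were entered (the Common Name typically comes first).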

Alternatively, you can use the "generate pattern" option on the screen, which opens a dialog where you can upload or paste your client certificate; the pattern is then generated for you automatically. Note that only the pattern string is saved, not the certificate:

Click "Apply" to add the pattern string to your authorizations entry.

Note that the pattern string also allows wildcards, so you can authorize a whole class of certificates with a given role. If a certificate matches multiple authorizations, the "Rank" value set on each authorization entry determines which one is used.

You should now be able to access and use HANA Data Lake Files through the REST API.

Here is a sample curl command that works for me and should confirm that you can connect successfully. You can copy the instance ID and the Files REST API endpoint from the instance details in HANA Cloud Central. Use the client certificate and key that you generated above and used to create your authorization.

Note that curl can be a little tricky. I was on Windows and could not get the version of curl that ships with Windows 10 to work. I ended up downloading a newer curl version (7.75.0), which did work. However, I had to use the `--insecure` option to skip validation of the HANA Cloud server certificate, because I wasn't sure how to make curl use the Windows certificate store.

```shell
curl --insecure -H "x-sap-filecontainer: <instance_id>" --cert ./client.crt --key ./client.key "https://<Files REST API endpoint>/webhdfs/v1/?op=LISTSTATUS" -X GET
```
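If you call this endpoint repeatedly, it can help to keep the instance ID and endpoint in environment variables. The sketch below wraps the same request in a hypothetical `hdlfs_list` function; the `HDLFS_*` variable names are my own assumptions. It falls back to `--insecure` exactly as above, but if you set `HDLFS_SERVER_CA` to a PEM bundle containing the CA chain of the HANA Cloud server certificate (an assumption about your setup), it uses curl's standard `--cacert` option to validate the server instead.

```shell
# Hypothetical wrapper for the LISTSTATUS request above. Set before calling:
#   HDLFS_ENDPOINT     Files REST API endpoint host name (without https://)
#   HDLFS_INSTANCE_ID  instance ID from HANA Cloud Central
#   HDLFS_CERT/KEY     client cert and key (default ./client.crt, ./client.key)
#   HDLFS_SERVER_CA    optional PEM bundle used to verify the server certificate
hdlfs_list() {
  dir=${1:-/}
  url="https://${HDLFS_ENDPOINT}/webhdfs/v1${dir}?op=LISTSTATUS"
  cert=${HDLFS_CERT:-./client.crt}
  key=${HDLFS_KEY:-./client.key}
  if [ -n "${HDLFS_SERVER_CA:-}" ]; then
    # Validate the server certificate against the given CA bundle
    curl --cacert "$HDLFS_SERVER_CA" -H "x-sap-filecontainer: ${HDLFS_INSTANCE_ID}" \
      --cert "$cert" --key "$key" -X GET "$url"
  else
    # Same as the command above: skips server certificate validation (testing only)
    curl --insecure -H "x-sap-filecontainer: ${HDLFS_INSTANCE_ID}" \
      --cert "$cert" --key "$key" -X GET "$url"
  fi
}
```

For example, after exporting `HDLFS_ENDPOINT` and `HDLFS_INSTANCE_ID`, running `hdlfs_list /` sends the same request as the command above.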

The above command should return (for an empty HANA Data Lake):

```json
{"FileStatuses":{"FileStatus":[]}}
```

Now you should be all set to use HANA Data Lake Files to store any type of file in HANA Cloud. For the full set of supported REST APIs and arguments for managing files, see the documentation.

Thanks for reading!

