Deploy to HANA Cloud fails with missing build plugin after migration from HANA Service


on 04-19-2023 12:04 PM

Hi community,

We migrated from HANA Service to HANA Cloud Service following the migration guide. However, when we start the deployment of our database schema, it fails.

    $ node node_modules/@sap/hdi-deploy/deploy.js --root ./dist/db --auto-undeploy --exit
    (...)
    modified files: [
      "src/.hdiconfig",
      "src/gen/.hdiconfig"
    ]
    (...)
    Deploying the configuration file "src/.hdiconfig"...
    Warning: Could not find a configured library that contains the "com.sap.hana.di.virtualfunctionpackage.hadoop" build plugin [8211539]
      at "src/.hdiconfig" (0:0)
    (...)
    Adding "src/gen/myModel.MyTable.hdbtable" for deploy... ok (0s 0ms)
    Error: Could not find the "com.sap.hana.di.cds" build plugin [8210501]
    Error: Preparing... failed [8211602]
    (...)
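To see at a glance which build plugins the deployer could not resolve, the log can be scanned for the two message shapes shown above. This is only a minimal sketch: the function name `missing_plugins` and the regular expression are illustrative assumptions derived from the log lines in this post, not part of @sap/hdi-deploy.

```python
import re

# Assumption: the pattern is derived only from the two log lines quoted in this
# post; other hdi-deploy versions may word these messages differently.
PLUGIN_MSG = re.compile(
    r'^\s*(?P<level>Error|Warning): Could not find .*?'
    r'"(?P<plugin>[\w.]+)" build plugin \[(?P<code>\d+)\]'
)


def missing_plugins(log_text):
    """Return (level, plugin, code) for every missing-build-plugin message."""
    found = []
    for line in log_text.splitlines():
        match = PLUGIN_MSG.match(line)
        if match:
            found.append((match["level"], match["plugin"], match["code"]))
    return found


if __name__ == "__main__":
    sample = (
        'Warning: Could not find a configured library that contains the '
        '"com.sap.hana.di.virtualfunctionpackage.hadoop" build plugin [8211539]\n'
        'Error: Could not find the "com.sap.hana.di.cds" build plugin [8210501]\n'
        'Error: Preparing... failed [8211602]\n'
    )
    for level, plugin, code in missing_plugins(sample):
        print(f"{level}: {plugin} [{code}]")
```

Lines that carry an error code but do not mention a build plugin, such as "Preparing... failed", are skipped on purpose.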

During the migration, the wizard informed us that the "com.sap.hana.di.cds" build plugin is not supported with HANA Cloud (see here). However, we could still proceed with the migration.

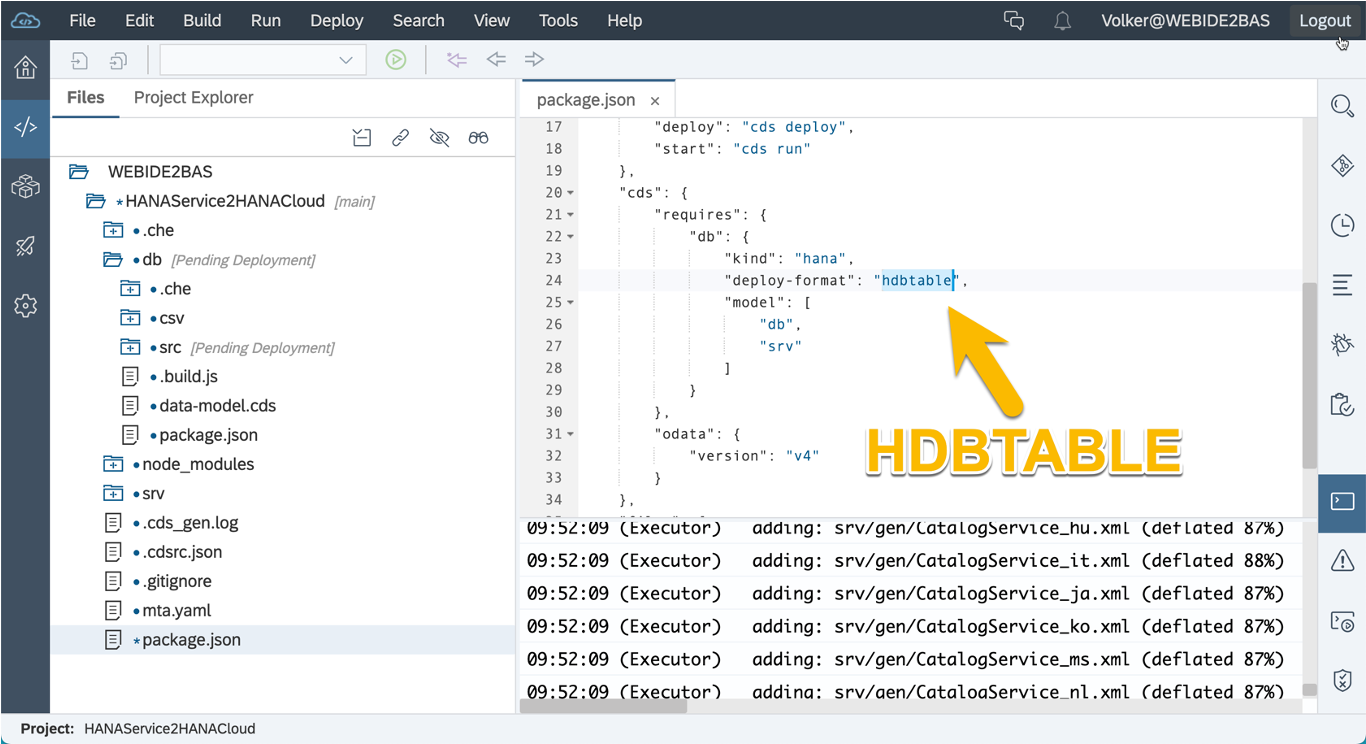

After the migration had completed successfully, we adjusted our code to run with HANA Cloud. We changed the following files.

.cdsrc.json

Added:

    "hana": {
      "deploy-format": "hdbtable",
      "syntax": "hdi"
    }

Changed:

    "requires": {
      "db": {
        "kind": "hana-cloud"
      }
    }

As various HDI build plugins are deprecated, as mentioned above (see here), I removed them from the .hdiconfig file. Among them was the "com.sap.hana.di.cds" build plugin, and its removal is what makes the deployment fail.

We are using the following versions of cds npm packages:

@sap/cds: 6.7.0

@sap/cds-compiler: 3.8.2

@sap/cds-dk: 6.7.0

@sap/hdi-deploy: 4.2.3

hdb: 0.19.0

Which steps do we need to take to deploy our schema to HANA Cloud? It seems that the deployer wants to delete/undeploy the outdated .hdbcds files and needs the "com.sap.hana.di.cds" build plugin for doing this. But this plugin is deprecated in HANA Cloud and therefore no longer exists, so the deployment fails.

Kind regards,

Johannes

- SAP Managed Tags:

- SAP HANA Cloud,

- SAP Cloud Application Programming Model

Accepted Solutions (1)


Hi Johannes,

As described in the corresponding answer, it is crucial that you first switch to hdbtable/hdbview-based deployment on your HaaS instance before starting the migration. I think there is no better solution than reverting the entire migration, as the hdbcds artifacts cannot be undeployed on the HANA Cloud instance.

You can define wildcards in the undeploy.json file in order to undeploy existing hdbcds files, as shown below. No table will be dropped: a handover from hdbcds to the corresponding hdbtable-based deployment takes place as long as a corresponding counterpart exists.

Thus, you have to ensure that no hdbcds artifacts exist before starting the migration. Otherwise the deployment afterwards will fail, as the required build plugin com.sap.hana.di.cds is not supported on SAP HANA Cloud, as you've already noticed. This build plugin is also required for undeployment. Any build plugins that are not supported on HANA Cloud have to be deleted from the .hdiconfig file.

    [
      "src/**/*.hdbcds"
    ]

Note: The setting "syntax": "hdi" is no longer supported and should be deleted.
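Cleaning the .hdiconfig file can be scripted once the hdbcds artifacts have been undeployed on the source instance (the plugin is still needed for that undeployment, as noted above). The following is a minimal sketch, not an official tool: the helper name `strip_unsupported` is made up for illustration, and the default set of plugin names contains only the two that appear in the log of this thread, so it should be extended to match your own migration check.

```python
import json

# Assumption: only the two plugin names reported in this thread's deploy log.
# Extend this set with whatever your own migration check reports.
UNSUPPORTED = frozenset({
    "com.sap.hana.di.cds",
    "com.sap.hana.di.virtualfunctionpackage.hadoop",
})


def strip_unsupported(hdiconfig, unsupported=UNSUPPORTED):
    """Return a copy of a parsed .hdiconfig without the unsupported plugins."""
    cleaned = dict(hdiconfig)
    cleaned["file_suffixes"] = {
        suffix: entry
        for suffix, entry in hdiconfig.get("file_suffixes", {}).items()
        if entry.get("plugin_name") not in unsupported
    }
    return cleaned


if __name__ == "__main__":
    # Hypothetical, shortened .hdiconfig content for illustration only.
    config = {
        "file_suffixes": {
            "hdbcds": {"plugin_name": "com.sap.hana.di.cds"},
            "hdbtable": {"plugin_name": "com.sap.hana.di.table"},
            "hdbview": {"plugin_name": "com.sap.hana.di.view"},
        }
    }
    print(json.dumps(strip_unsupported(config), indent=2))
```

The function returns a new dictionary and leaves its input untouched, so the result can be reviewed before the file is overwritten.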

Regards,

Lothar


Hi Johannes,

In HANA Cloud we do not support HDBCDS, so you have to clean up the source (HANA Service or HANA 2) so that it works without HDBCDS. If you use CAP, there is a single spot where you can adjust the generation: the deploy-format setting.
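For a CAP project, that single spot is the "hana" section of .cdsrc.json quoted in the question. As a rough sketch, the change could be applied to the parsed file as follows; the function name `use_hdbtable_format` is hypothetical, and dropping the "syntax" key follows the note in the accepted answer.

```python
import copy


def use_hdbtable_format(cdsrc):
    """Return a copy of a parsed .cdsrc.json set to hdbtable-based deployment."""
    updated = copy.deepcopy(cdsrc)
    hana = updated.setdefault("hana", {})
    hana["deploy-format"] = "hdbtable"
    # Per the accepted answer in this thread, "syntax": "hdi" should be deleted.
    hana.pop("syntax", None)
    return updated


if __name__ == "__main__":
    before = {
        "hana": {"deploy-format": "hdbtable", "syntax": "hdi"},
        "requires": {"db": {"kind": "hana-cloud"}},
    }
    print(use_hdbtable_format(before))
```

All other settings, such as the "requires" section, are copied unchanged.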

Now that only hdbtable files are generated, you still have hdbcds files in your design-time repository (<containername>#DI). By default, WebIDE or BAS cleans that up by appending --auto-undeploy to the HDI deploy command. If you use cf deploy, this mechanism is not there, so you have to add an undeploy.json for this to work in an automated environment.
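Adding the undeploy.json can be automated as well. The sketch below assumes that the file sits in the root folder of the database module, next to src/, so that the wildcard from the accepted answer resolves; the function name `add_undeploy_patterns` and the folder layout are assumptions for illustration.

```python
import json
import tempfile
from pathlib import Path


def add_undeploy_patterns(db_root, patterns=("src/**/*.hdbcds",)):
    """Create or extend undeploy.json in db_root and return the merged list."""
    path = Path(db_root) / "undeploy.json"
    # Keep entries that are already listed and append only the new patterns.
    existing = json.loads(path.read_text(encoding="utf-8")) if path.exists() else []
    merged = existing + [p for p in patterns if p not in existing]
    path.write_text(json.dumps(merged, indent=2) + "\n", encoding="utf-8")
    return merged


if __name__ == "__main__":
    # Demo in a throwaway folder; point db_root at your own db module instead.
    with tempfile.TemporaryDirectory() as db_root:
        add_undeploy_patterns(db_root)
        print((Path(db_root) / "undeploy.json").read_text(encoding="utf-8"))
```

Running it twice does not duplicate entries, so it is safe to call from a build step.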

If you have mixed scenarios with your own hdbcds files (there are various reasons for this), you can use an open-source tool called hana-cli with a command called "massConvert". It lets you convert by reverse engineering from the runtime objects.


Hi lothar.bender,

Hi volker.saggau,

Thank you for your help. We managed to migrate to HANA Cloud Service. We reverted the migration, undeployed the files of the unsupported plugin and restarted the migration. Unfortunately, we had to delete the HANA Cloud instance and recreate it because we ran into the "Target HANA has executed catalog migration before" error.

Are there any plans to include the point in the migration guide that the undeployment of hdi plugins (hdbcds files) that are no longer supported is essential to complete the migration successfully in the sense that you can still deploy your database schema after the migration? This is the information I was missing on my first try.

Thanks again & kind regards,

Johannes


Hi Johannes,

Hi Volker Saggau,

We don't use CAP. Ours is an MTA application with a db module containing a hand-coded .hdbcds artifact file. We have deployed it to HANA Service and are now working on the migration from HANA Service to HANA Cloud.

Is that hdbtable deploy-format applicable to a non-CAP MTA application?

How do we make sure that the unsupported hdbcds plugin files are undeployed from the source HANA Service? I know the migration check does that, but can we ensure it before starting the migration?

Thanks,

Ravindra
