

In this blog post, I write about test doubles in general and then show how to handle the dependency in ABAP when the DOC (depended-on component) is a database object.

Now let’s say that, instead of taking the date of hire as an input from the user, our code takes an Employee ID as input. It then reads the employee's date of hire from the database and calculates the years of service. In our development system, we create the necessary test data before executing the tests. When the unit tests run, the database query also executes, the test cases fetch the loaded test data, and all our tests pass. So far, so good!!

Figure 1: Accessing the DOC in Unit Testing

Well, what’s the problem here?

Now let’s say this entire set of code is transported out of the development system. It will reach multiple systems, where the unit tests are executed automatically. However, each system is set up for a specific purpose and thus has its own set of authorizations and characteristics.

The point I am making here is that not all systems will have the required data in the DB. Not all systems have authorizations to read from or write to the DB. Not all systems will have authorizations to access the file system or to interact with communication systems and web services. These are some of the problems we face if we rely on the DOCs for executing the unit tests. Also, the unit is no longer isolated if it interacts with DOCs, and the essence of unit testing is lost.

Sometimes the system is refreshed or the data in the database is modified, and consequently the required test data is no longer available. Suddenly your unit tests start failing, and you keep wondering why!!

The following difficulties typically arise when we test a CUT that accesses DOCs:

- The required DOC might not be available on all the systems.

- A system refresh or modifications to the DOC can change or remove the data the tests rely on.

- Even if the DOC is available, the authorization to access it may not be.

- Due to differences in the customizing of different systems, the unit test won’t return consistent results.

- Also, one test case may modify data in the database, which can break the execution of other test cases. This creates an unwanted dependency and interference among the test cases.

- If the unit test fails due to any of the above-mentioned issues, it will be difficult to figure out whether the actual problem is in the CUT, in the unit test, in the customizations, in the test data or somewhere else.

Thus, a test is not a unit test if it interacts with any DOC, such as:

- Database Object

- CDS View

- Standard SAP Function Module

- Another system on the network

- File system

- Other unit tests

- Environment configuration files

Keep in mind that the moment your test ventures out of the LTC or accesses another UTM, it is no longer a unit test, because a dependency has been created.

How do we handle this? What’s the way out?

We need to do something that prevents the CUT from accessing the DOCs. Clearly, we cannot modify the code lines in the CUT to remove this interaction, so we need to handle this in the LTC itself. What if we redirect the CUT's access to a mimic of the DOC, rather than to the actual DOC?

Just imagine the following real-life situation.

You want to buy a flat, and you visit the apartment building to have a look at the flat you are interested in. Now, let’s say the actual flat is not available for viewing, perhaps because the building is still under construction or undergoing maintenance work. There can be other such reasons too.

Now, I would like you to relate this to our unit testing scenario. What does the agent or house owner do in such a case? He/she will show you another flat in the same building (maybe a sample flat) whose features are exactly like the ones you are interested in. You look at this flat and decide on your deal.

I hope you enjoyed the above situation!! Similarly, for unit testing we create a dummy artefact of the DOC (for instance, of a database object). We create a test environment that enables the CUT to access this dummy object instead of the actual object.

Let’s say that the DOC we are trying to access is a database table.

In effect, the dummy of a database table behaves like the actual table. The advantage is that the data of these dummies is defined locally in the LTC itself, and they have exactly the same structure and keys as the actual table. This dummy/mock of the database object is called a “Test Double”.

Figure 2: Accessing the test double instead of the DOC using Test Fixtures


Do keep in mind that the test double doesn’t have to behave exactly like the real DOC. It merely has to provide the same interface (the same point of contact) as the real one, so that the CUT thinks that it is the real one. So, the test double has the same name, same structure, same keys and the same point of contact with the Open SQL statements.

Depending on the DOC you are working with, there are ways to direct the dependency of the CUT to the test double, in place of the actual DOC.

My DOC is a database object. How do I create and access the test double?

To perform CRUD operations in ABAP, we use Open SQL (OSQL). One of the major advancements in OSQL is code pushdown: the logic of OSQL statements executes in the database layer instead of the application layer.

This is why we need a framework that implements dependency injection in the database layer: test doubles have to be created in the database for the DOCs of the OSQL statements, and the original dependency has to be replaced by them.

The OSQL Test Double Framework provides capabilities to create test doubles for any database object, insert data into the test doubles (i.e. create modifiable or insertable test doubles) and redirect the CUT's interaction to these test doubles instead of the actual database objects. Consequently, all the OSQL statements in our production code can be tested through normal ABAP test classes.

Inserting data is difficult for certain database objects, such as database views and database functions, which are read-only. However, the framework handles this by making their test doubles insertable, so that test data can still be loaded into them.

Putting the pieces into place and using OSQL Test Double Framework

Figure 3: Process to use the OSQL Test Double Framework

1. Create the test double

As mentioned in the previous section, the test double should provide the same interface (point of contact) as the actual object. This step provides that point of contact.

The test double framework provides the functionality of test doubles within the test environment. This environment is the Open SQL Test Environment. We will need to create this environment in our test class.

The class which provides the Open SQL Test Environment is cl_osql_test_environment.

This class implements the interface if_osql_test_environment, which is the test environment interface for redirecting any database object. We create an instance of this test environment using the static method create() as below:

```abap
environment = cl_osql_test_environment=>create( i_dependency_list = VALUE #( ( 'DB_OBJECT_1' ) ( 'DB_OBJECT_2' ) ) ).
```

where,

'DB_OBJECT_1', 'DB_OBJECT_2', … = placeholders for the names of all the database objects (DOCs) for which we need test doubles.

Since the test environment is needed throughout the scope of the test class, and the test environment is not modified by any means, we put the above piece of code in class_setup().
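Put together, Step 1 might look like the skeleton below. This is a minimal sketch, not the author's code: it reuses the LTC name and the table ZEMPDATA_DEMO from the example later in this post, and the RISK LEVEL and DURATION settings are my own choices.

```abap
"! Sketch of a local test class (LTC) that owns one OSQL test environment.
"! RISK LEVEL HARMLESS fits because database access goes to test doubles.
CLASS ltc_zempdata_demo_operations DEFINITION FINAL
  FOR TESTING RISK LEVEL HARMLESS DURATION SHORT.

  PRIVATE SECTION.
    " Static: created once and shared by all UTMs of this class
    CLASS-DATA environment TYPE REF TO if_osql_test_environment.

    CLASS-METHODS class_setup.
ENDCLASS.

CLASS ltc_zempdata_demo_operations IMPLEMENTATION.

  METHOD class_setup.
    " Create a test double for every database object in the list.
    " From now on, OSQL statements on ZEMPDATA_DEMO executed during the
    " tests are redirected to the (still empty) double.
    environment = cl_osql_test_environment=>create(
                    i_dependency_list = VALUE #( ( 'ZEMPDATA_DEMO' ) ) ).
  ENDMETHOD.

ENDCLASS.
```

Because class_setup() runs only once per test class, the cost of creating the doubles is paid once rather than before every UTM.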

2. Load test-data into the test double

We have an empty water bottle. Time to fill it with some water!!

What I mean is that the test double created in the previous step has no data and so is of no use yet.

As we know, internal tables are defined and filled with data locally. So here we create an internal table of the type of our database table and load the test data into it.

```abap
DATA: mt_table_name TYPE STANDARD TABLE OF db_table_name.

mt_table_name = VALUE #( ( dummy_record_01 ) ( dummy_record_02 ) ... ( dummy_record_n ) ).

" Insert the dummy records from the internal table mt_table_name into the test double of db_table_name
environment->insert_test_data( mt_table_name ).
```

where,

mt_table_name = name of the internal table, of type of the DOC database table. (by convention, “mt” stands for member table of class. You may use any other prefixes as per your standards)

dummy_record_01 … dummy_record_n = the test data records. Their values can be anything that suits your test cases.

You need not fill every field, but you must provide values for all key fields. Likewise, load test data into all the other database objects the CUT depends on.

It is recommended to write this code in setup() or a UTM because:

- The data may get modified by the CUT during execution of the unit test. This may create dependency issues amongst the test cases and consequently might result in failing unit tests.

- All the UTMs get fresh test data to work with, as setup() is executed before each UTM.

When to write this code in setup():

- The same set of test data can cover all the test cases.

When to write this code in a UTM:

- The database object is used in only a few of the methods, not in all or most of them.

- The same database object is needed for all the UTMs, but the data records to be loaded vary drastically between test cases.
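For the example table used later in this post, a setup() following Step 2 could look like the fragment below. It is a sketch under assumptions: the LTC holds the environment in the static attribute `environment` created in class_setup() (Step 1), and the field names EMPID, DATE_OF_HIRE, LOB and DESIGNATION of ZEMPDATA_DEMO, as well as all values, are hypothetical.

```abap
" Declarations assumed in the PRIVATE SECTION of the LTC:
"   CLASS-DATA environment TYPE REF TO if_osql_test_environment.  (Step 1)
"   DATA mt_zempdata_demo TYPE STANDARD TABLE OF zempdata_demo WITH EMPTY KEY.
"   METHODS setup.

METHOD setup.
  " Given: the same known rows before every UTM.
  " Hypothetical field names and values; all key fields must be filled.
  mt_zempdata_demo = VALUE #(
    ( empid = '00000001' date_of_hire = '20150401' lob = 'FIN' designation = 'ANALYST' )
    ( empid = '00000002' date_of_hire = '20180715' lob = 'FIN' designation = 'ANALYST' )
    ( empid = '00000003' date_of_hire = '20200101' lob = 'HR'  designation = 'MANAGER' ) ).

  " Copy the rows from the internal table into the test double
  environment->insert_test_data( mt_zempdata_demo ).
ENDMETHOD.
```

Keeping the rows in a member table (rather than a local variable) lets the UTMs reuse them later when building expected results.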

To mention in short: In Step 1, we created the test double. In Step 2, we loaded the test data in an internal table and then moved this test data into the test double which we created in Step 1.

3. Fetch data of double from the CUT

So now there’s some water in the bottle. Let’s use this!!

Now this test double is accessed by our CUT whenever the unit tests are executed.

We just need to invoke our production methods from the UTM. The redirection to the test doubles is taken care of automatically by the OSQL Test Double Framework.
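As an illustration of Step 3, here are two UTMs for GET_YRS_OF_SRVC_EMPID (from the example later in this post), written as a hedged sketch. They assume setup() loaded an employee 00000001 hired on 2015-04-01 and no employee 99999999, and a hypothetical signature with importing parameters iv_empid and iv_key_date and an integer result. Returning 0 for an unknown employee is also an assumption; the real method may signal this differently, for example with an exception.

```abap
" Declarations assumed in the LTC (hypothetical names):
"   DATA mo_cut TYPE REF TO zcl_zempdata_demo_operations.
"   METHODS get_yrs_existing_emp FOR TESTING.
"   METHODS get_yrs_unknown_emp  FOR TESTING.

METHOD get_yrs_existing_emp.
  " Given: employee 00000001, hired on 2015-04-01, loaded in setup()
  mo_cut = NEW #( ).

  " When: the SELECT in the CUT is redirected to the test double
  DATA(lv_years) = mo_cut->get_yrs_of_srvc_empid(
                     iv_empid    = '00000001'
                     iv_key_date = '20250601' ).

  " Then: 2015-04-01 to 2025-06-01 is 10 full years
  cl_abap_unit_assert=>assert_equals( act = lv_years
                                      exp = 10
                                      msg = 'Wrong years of service' ).
ENDMETHOD.

METHOD get_yrs_unknown_emp.
  " Given: no employee 99999999 in the test double
  mo_cut = NEW #( ).

  " When
  DATA(lv_years) = mo_cut->get_yrs_of_srvc_empid(
                     iv_empid    = '99999999'
                     iv_key_date = '20250601' ).

  " Then: assumed to return 0 for an unknown employee
  cl_abap_unit_assert=>assert_equals( act = lv_years exp = 0 ).
ENDMETHOD.
```

Passing a fixed key date instead of relying on sy-datum inside the CUT keeps the expected value stable; otherwise the positive test would start failing as time passes, much like the data-dependent failures described at the start of this post.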

4. Destroy the double

It’s time to clean our water bottle with a cloth and let it dry.

In this step, we clear the data in the test doubles. For this we invoke the clear_doubles() method of our instance of test environment as below.

```abap
environment->clear_doubles( ).
```

Keep in mind that this only erases the data in the test doubles. The doubles still exist; they just have no data.

Destroying the test double should preferably be done in the teardown() method, as it is executed after each UTM.
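Step 4 and the final cleanup could be sketched as follows, assuming the static attribute `environment` from Step 1. teardown() only clears the doubles after each UTM, while class_teardown() calls destroy( ) once at the end to remove the test environment itself, matching the description of Figure 14 in the example below.

```abap
" Declarations assumed in the LTC:
"   CLASS-DATA environment TYPE REF TO if_osql_test_environment.  (Step 1)
"   METHODS teardown.
"   CLASS-METHODS class_teardown.

METHOD teardown.
  " After every UTM: delete all rows from the test doubles so that data
  " inserted or changed by one UTM cannot leak into the next one.
  " The doubles themselves and the redirection stay in place.
  environment->clear_doubles( ).
ENDMETHOD.

METHOD class_teardown.
  " Once, after the last UTM: remove the test doubles and the redirection
  environment->destroy( ).
ENDMETHOD.
```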

Example for practical demonstration

I will extend the same demo use case of getting the years of service of employees that I used in the previous example. For this demo, I use a Z table instead of a standard table; the approach stays the same.

Listing the artefacts for this demo:

Table 1: List of artefacts for practical demonstration

| Artefact | Name |
|---|---|
| DB Table [DOC] | ZEMPDATA_DEMO |
| Global Class [CUT] | ZCL_ZEMPDATA_DEMO_OPERATIONS (methods: GET_YRS_OF_SRVC_EMPID, GET_DATA_CNT_LOB_DESG) |
| LTC for the CUT | ltc_zempdata_demo_operations |

Structure of ZEMPDATA_DEMO is as follows:

Figure 4: Structure of table ZEMPDATA_DEMO (DOC)

Global class definition is as follows:

Figure 5: Class definition for CUT

Method GET_YRS_OF_SRVC_EMPID:

This method takes an Employee ID as input. It then fetches the record of the employee from the database and calculates the years of service for that employee. The method signature and implementation are shown below:

Figure 6: Production method definition - GET_YRS_OF_SRVC_EMPID

Let us identify the positive and negative test cases for this method:

Positive Test Case:

- An employee exists with the provided Employee ID

Negative Test Case:

- No employee exists with the provided Employee ID

Method GET_DATA_CNT_LOB_DESG:

This method takes a Line of Business and Designation as input. It queries the database to search for all the employees belonging to this line of business and designation. It returns this selected data along with the number of employees. The method signature and implementation are shown below:

Figure 7: Production method definition - GET_DATA_CNT_LOB_DESG
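To make the walkthrough easier to follow in text form, here is a minimal sketch of a CUT with the two methods. It is not the code from Figures 5 to 7: the field names EMPID, DATE_OF_HIRE, LOB and DESIGNATION of ZEMPDATA_DEMO, all parameter names, the key-date parameter, and the simplified years calculation (days DIV 365, ignoring leap days) are assumptions.

```abap
" Sketch only: field and parameter names are hypothetical.
CLASS zcl_zempdata_demo_operations DEFINITION
  PUBLIC FINAL CREATE PUBLIC.

  PUBLIC SECTION.
    TYPES tt_empdata TYPE STANDARD TABLE OF zempdata_demo WITH DEFAULT KEY.

    "! Approximate years of service; 0 if the employee does not exist
    METHODS get_yrs_of_srvc_empid
      IMPORTING iv_empid        TYPE zempdata_demo-empid
                iv_key_date     TYPE d DEFAULT sy-datum
      RETURNING VALUE(rv_years) TYPE i.

    "! All employees for a line of business and designation, plus their count
    METHODS get_data_cnt_lob_desg
      IMPORTING iv_lob         TYPE zempdata_demo-lob
                iv_designation TYPE zempdata_demo-designation
      EXPORTING et_empdata     TYPE tt_empdata
                ev_count       TYPE i.
ENDCLASS.

CLASS zcl_zempdata_demo_operations IMPLEMENTATION.

  METHOD get_yrs_of_srvc_empid.
    " Dependency on the DOC: in a unit test this reads the test double
    SELECT SINGLE date_of_hire
      FROM zempdata_demo
      WHERE empid = @iv_empid
      INTO @DATA(lv_date_of_hire).

    IF sy-subrc <> 0.
      rv_years = 0.  " negative case: no such employee
      RETURN.
    ENDIF.

    " Date subtraction yields days; DIV 365 is a simplification
    rv_years = ( iv_key_date - lv_date_of_hire ) DIV 365.
  ENDMETHOD.

  METHOD get_data_cnt_lob_desg.
    " Dependency on the DOC: in a unit test this reads the test double
    SELECT *
      FROM zempdata_demo
      WHERE lob         = @iv_lob
        AND designation = @iv_designation
      ORDER BY PRIMARY KEY
      INTO TABLE @et_empdata.

    ev_count = lines( et_empdata ).
  ENDMETHOD.

ENDCLASS.
```

Both SELECT statements read ZEMPDATA_DEMO, so once the OSQL test environment is created for that table in the LTC, they are redirected to the test double without any change to this class.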

Let us identify the positive and negative test cases for this method:

Positive Test Case:

- Employees exist in the provided combination of line of business and designation

Negative Test Case:

- No employees exist in the provided combination of line of business and designation

Summing up, we have identified a total of 4 test cases for the CUT. There can be more, but to keep it simple we consider 1 positive and 1 negative test case for each of the two methods, as above.

I provided information on certain parts of the LTC in my previous blog. So here, I will focus on the test doubles in the LTC rather than on the details explained previously.

Let’s have a look at the definition of the LTC.

Figure 8: LTC Definition

Line 5 to Line 8: The corresponding UTMs for each test case are defined in the public section of the LTC.

Line 11: Declaration of the test environment, which redirects access from the actual database object to the test double.

Line 12 to Line 16: Declaring the test fixtures.

Line 18: Declaring an internal table of the type of the DOC database table ZEMPDATA_DEMO, the DB object we want to mock. Test data will be loaded into this internal table in the class implementation.

Line 19: Declaring an instance of the CUT.

Let me now walk you through the implementation of the LTC.

Figure 9: LTC Implementation – Setup test fixtures

Setting up the test environment and creating the test double:

As seen previously, the create() method of the class cl_osql_test_environment creates test doubles for all the dependency objects listed in the parameter i_dependency_list. We do this in the static method class_setup().

Line 29: Here we pass the name of our database table as parameter. Thus a test double with name ZEMPDATA_DEMO is created for our database table ZEMPDATA_DEMO, with the exact same structure and keys.

Loading test data in the test double:

Line 38: We will load our test data in the internal table mt_zempdata_demo.

Line 51: Here, the test data is inserted from the internal table mt_zempdata_demo into the test double of ZEMPDATA_DEMO. This is why we declare the internal table with the type of our database table.

Figures 10-13 below depict the implementation of all 4 test cases:

Figure 10: LTC Implementation - Positive test case for method GET_YRS_OF_SRVC_EMPID

Figure 11: LTC Implementation - Negative test case for method GET_YRS_OF_SRVC_EMPID

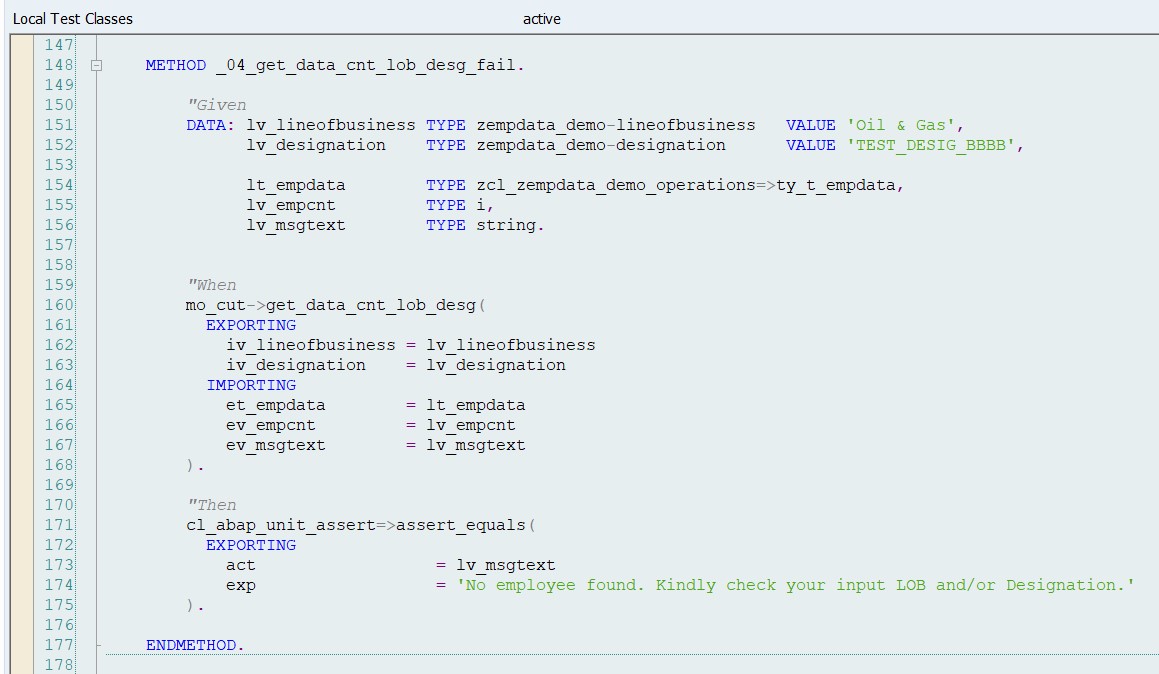

Figure 12: LTC Implementation - Positive test case for method GET_DATA_CNT_LOB_DESG

In Figure 12:

Line 113 – 114: For the method GET_DATA_CNT_LOB_DESG, we need to pass the line of business and designation, so we set the required values of these parameters for this test case. The same applies to lines 151 and 152 in Figure 13 below.

Lines 120-125: We are going to receive the count and the employee data. Multiple records may or may not be fetched (this depends on the test data set in setup()). So we create an internal table local to this UTM with the expected data, which is then used for the assertion in the “Then” section of the UTM.

Figure 13: LTC Implementation - Negative test case for method GET_DATA_CNT_LOB_DESG
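The two test cases for GET_DATA_CNT_LOB_DESG could be sketched as below. Unlike Figure 12, this sketch checks the count and that every returned row matches the requested combination, rather than comparing against a full expected table. It assumes setup() loaded exactly two employees with line of business FIN and designation ANALYST and none with HR/ANALYST, plus the hypothetical parameter names iv_lob, iv_designation, et_empdata and ev_count and the hypothetical fields LOB, DESIGNATION and EMPID.

```abap
" Declarations assumed in the LTC (hypothetical names):
"   DATA mo_cut TYPE REF TO zcl_zempdata_demo_operations.
"   METHODS get_data_existing_lob_desg FOR TESTING.
"   METHODS get_data_unknown_lob_desg  FOR TESTING.

METHOD get_data_existing_lob_desg.
  mo_cut = NEW #( ).

  " When: the SELECT in the CUT reads the test double, not the real table
  mo_cut->get_data_cnt_lob_desg(
    EXPORTING iv_lob         = 'FIN'
              iv_designation = 'ANALYST'
    IMPORTING et_empdata     = DATA(lt_empdata)
              ev_count       = DATA(lv_count) ).

  " Then: the two FIN analysts from setup() and nobody else
  cl_abap_unit_assert=>assert_equals( act = lv_count exp = 2 ).
  LOOP AT lt_empdata INTO DATA(ls_emp)
       WHERE lob <> 'FIN' OR designation <> 'ANALYST'.
    cl_abap_unit_assert=>fail( msg = |Unexpected employee { ls_emp-empid }| ).
  ENDLOOP.
ENDMETHOD.

METHOD get_data_unknown_lob_desg.
  mo_cut = NEW #( ).

  " When: no employee in the test data has this combination
  mo_cut->get_data_cnt_lob_desg(
    EXPORTING iv_lob         = 'HR'
              iv_designation = 'ANALYST'
    IMPORTING et_empdata     = DATA(lt_empdata)
              ev_count       = DATA(lv_count) ).

  " Then: nothing is returned
  cl_abap_unit_assert=>assert_initial( act = lt_empdata ).
  cl_abap_unit_assert=>assert_equals( act = lv_count exp = 0 ).
ENDMETHOD.
```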

Figure 14: LTC Implementation - Teardown test fixtures

Line 183: Clearing the data of test doubles after every test case.

Line 190: Destroying the OSQL test environment, which we created in class_setup().

In my upcoming blogs, I will cover unit testing in scenarios where other types of DOCs are involved.
