- 3D Object Reconstruction: Custom Videos and Length...

Technology Blogs by SAP

06-23-2023

9:57 PM

Introduction

Have you ever imagined reconstructing a 3D object from a single custom video, without the need for CAD? Have you ever wished to store data about 3D objects at industrial sites without losing the spatial information that accurate length measurements depend on? Technologies such as instant-ngp and Three.js make both possible, and they can be integrated with SAP AI Core and SAP BTP Kyma, respectively.

Instant NGP (NeRF)

A neural radiance field (NeRF) is a fully connected neural network that can generate novel views of complex 3D scenes from a limited set of 2D images.

Traditionally, training and rendering a NeRF model could take an entire day. NVIDIA's Instant NGP (instant-ngp), a more recent NeRF variant, cuts the training and rendering time to about 30 minutes. It also recovers the color of the rendered 3D object.

Producing a 3D rendering with the instant-ngp GUI takes six steps:

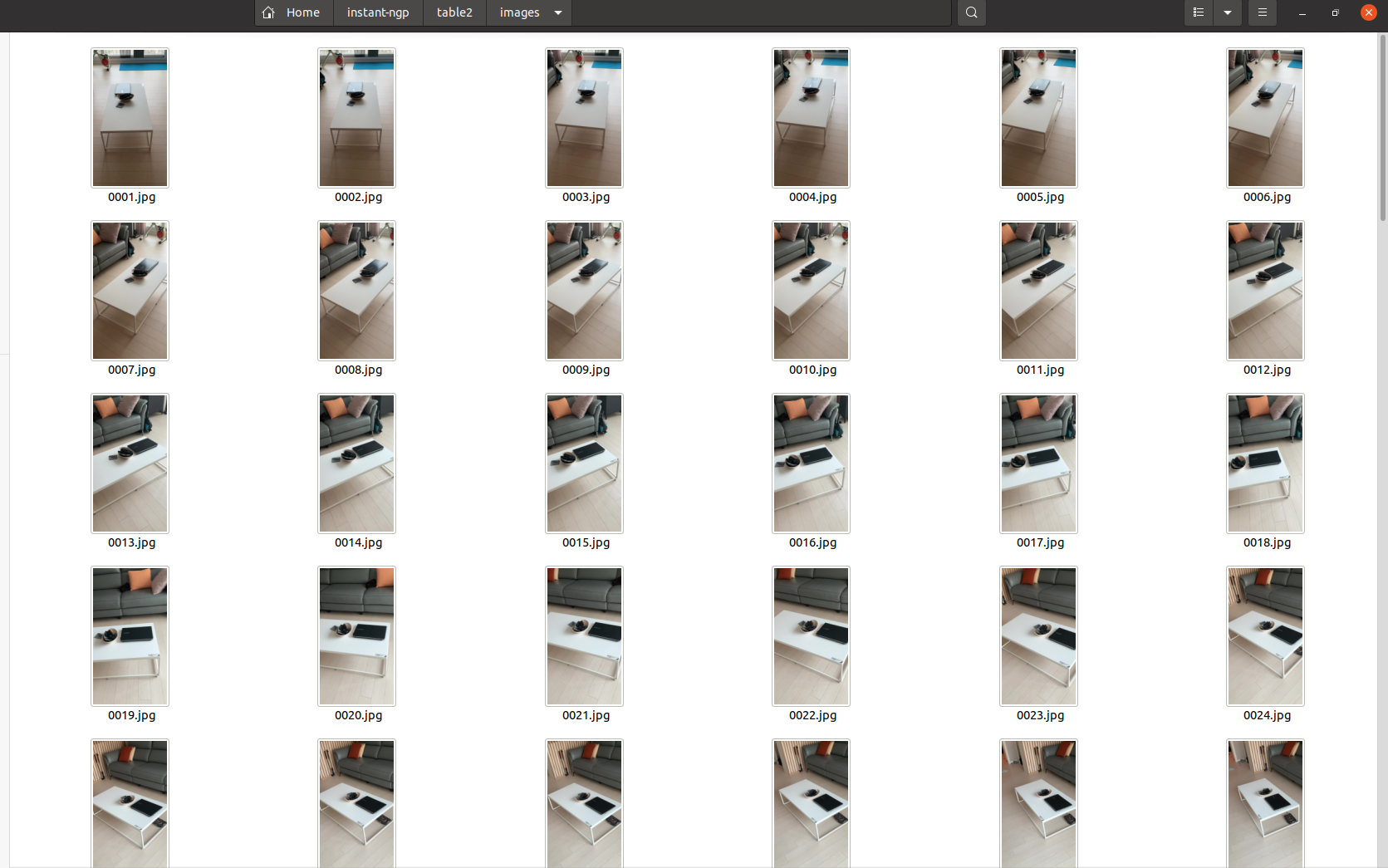

Step 1. Record a Video of About 1 Minute

Step 2. Convert Video to Images

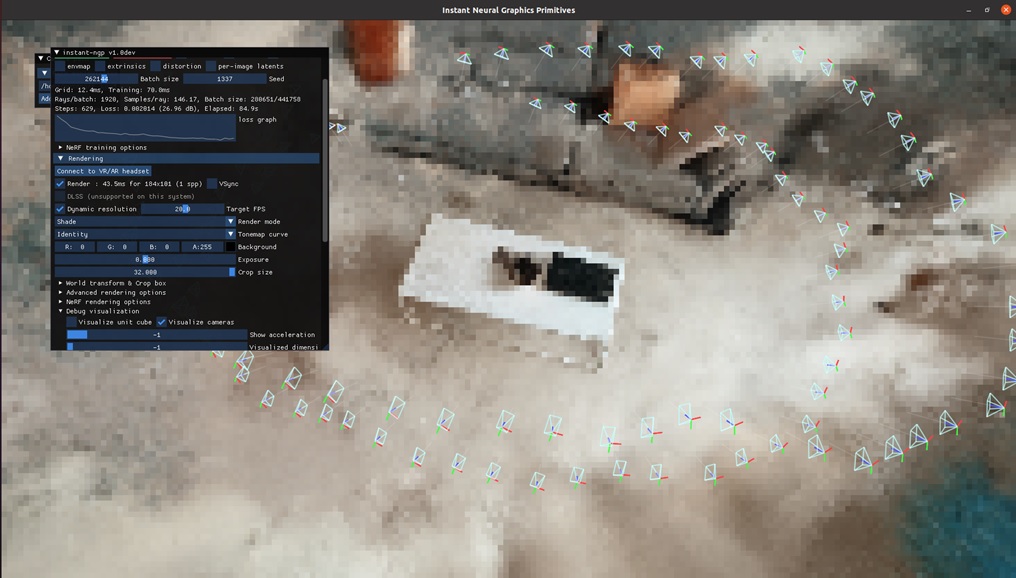

Step 3. Detect Camera Poses

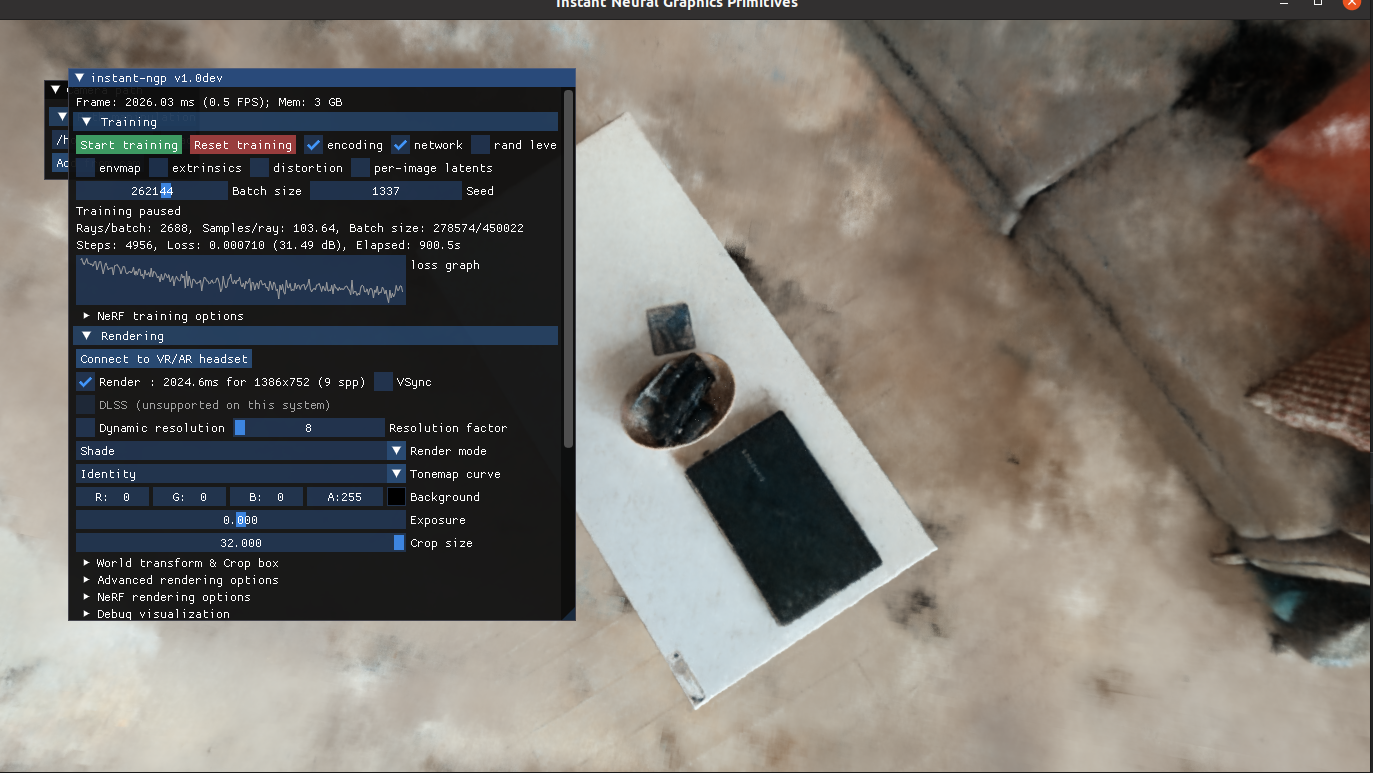

Step 4. Rendering

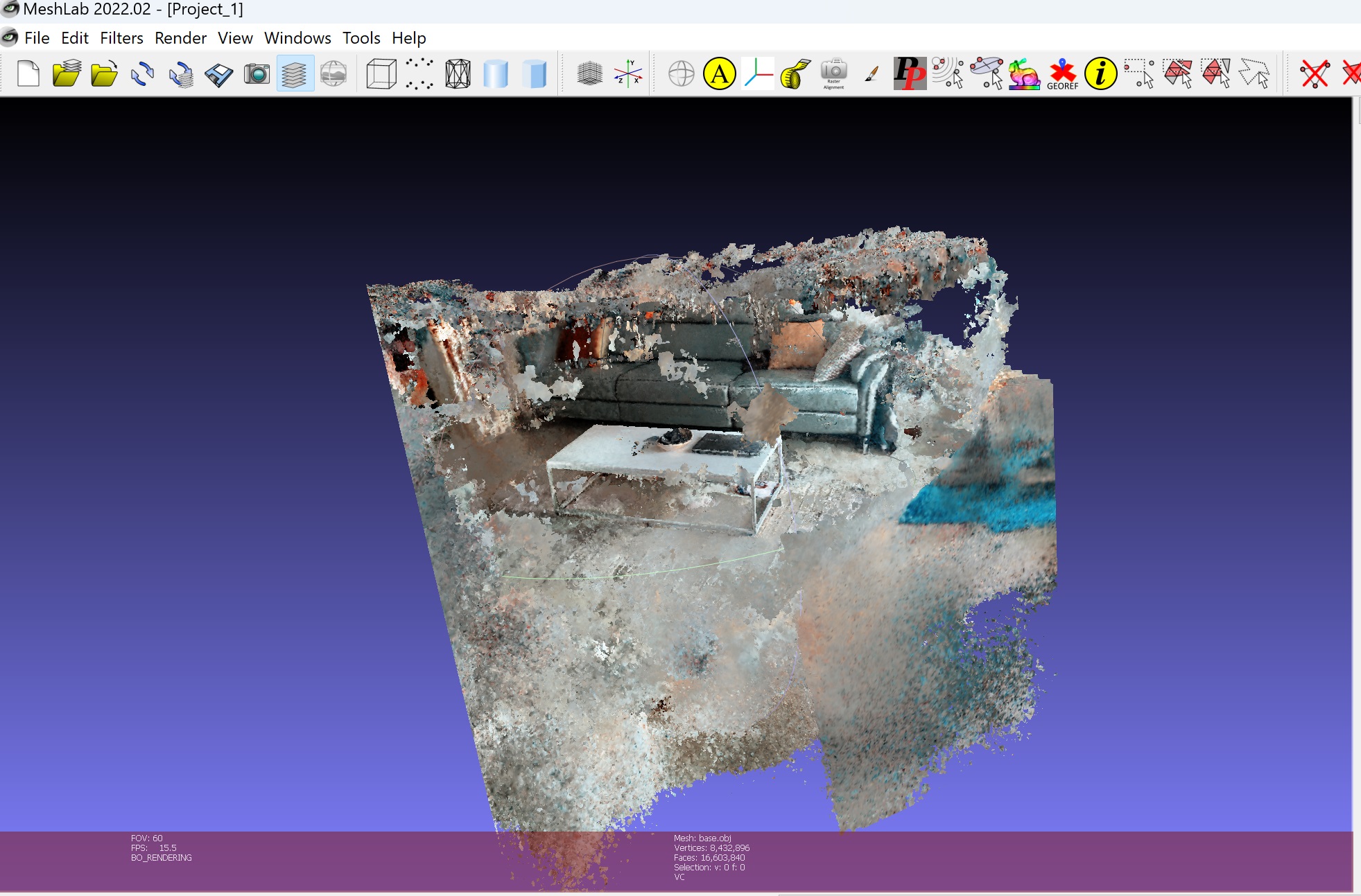

Step 5. Export Mesh

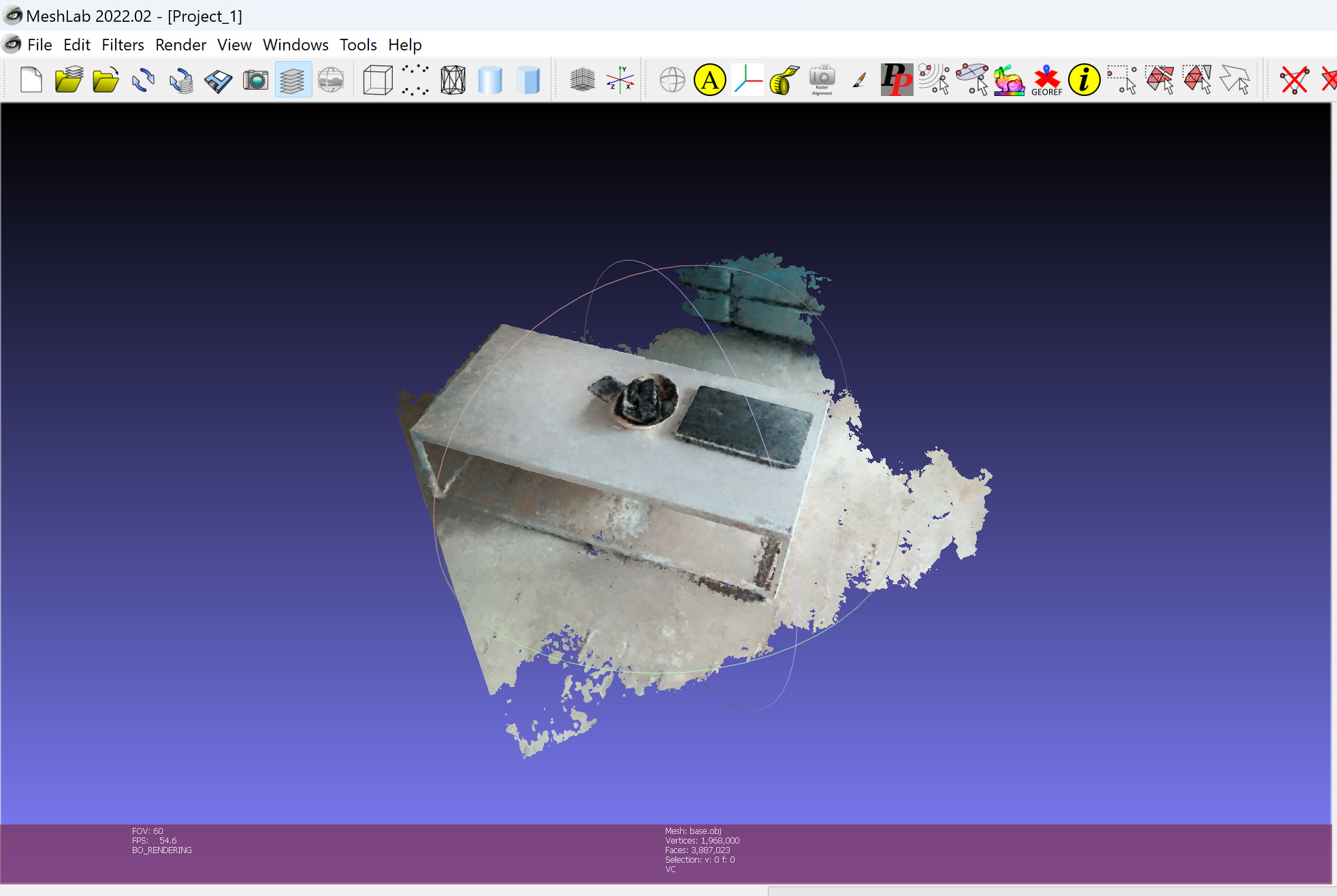

Step 6. Smoothing, Scaling, and Noise Removal

It is truly remarkable that we can create a 3D object from just a single video.

However, to streamline the whole process and run the 3D reconstruction in the cloud, SAP AI Core is the key component. It provides the tools needed to reduce the number of manual steps and run 3D reconstruction tasks efficiently.

SAP AI Core

SAP AI Core is an SAP BTP service for deploying and managing AI workloads. Running on SAP AI Core gives you GPU acceleration, which can significantly enhance mesh and rendering quality, and lets the pipeline run in the cloud instead of requiring each step to be performed by hand. You can combine the six steps above by following the SAP AI Core tutorial, which guides you through connecting GitHub to SAP AI Core, creating an object store secret, configuring the necessary settings, and executing the process.

First, you can modify the Dockerfile to suit your requirements. Next, to integrate with Amazon S3, you can use a YAML file to configure and manage the dataset. The workflow template below declares the input dataset artifact (image-data-v1, mounted at /volume/), the output model artifact (image-model-v1, written to /model/), and the container command that builds instant-ngp, runs colmap2nerf.py on the video, and trains for 35,000 steps.

    apiVersion: argoproj.io/v1alpha1
    kind: WorkflowTemplate
    metadata:
      name: instant-ngp-v56-i584986
      annotations:
        scenarios.ai.sap.com/description: "Yong Auto instant-ngp 3D Rendering"
        scenarios.ai.sap.com/name: "yong-instant-ngp-train"
        executables.ai.sap.com/description: "yong Auto instant-ngp 3D Rendering executable"
        executables.ai.sap.com/name: "yong-instant-ngp-executable"
        artifacts.ai.sap.com/image-data-v1.kind: "dataset"
        artifacts.ai.sap.com/image-model-v1.kind: "model" #model - default - object store secret
      labels:
        scenarios.ai.sap.com/id: "yong-instant-ngp"
        executables.ai.sap.com/id: "yong-instant-ngp-training"
        ai.sap.com/version: "1.0.0"
    spec:
      imagePullSecrets:
        - name: credentialrepo-i584986
      entrypoint: image-instant-ngp-training
      templates:
        - name: image-instant-ngp-training
          metadata:
            labels:
              ai.sap.com/resourcePlan: infer.l
          inputs:
            artifacts:
              - name: image-data-v1
                path: /volume/
          outputs:
            artifacts:
              - name: image-model-v1
                path: /model/
                globalName: image-model-v1
                archive:
                  none: {}
          container:
            image: "docker.io/ertyu116/instantngp:v7"
            ports:
              - containerPort: 9001
                protocol: TCP
            imagePullPolicy: Always
            command: ["/bin/sh", "-c"]
            args:
              - >
                set -e && echo "---Start Training---" &&
                cmake -DNGP_BUILD_WITH_GUI=off ./ -B ./build &&
                cmake --build build --config RelWithDebInfo -j 8 &&
                python3 ./scripts/colmap2nerf.py --video_in /volume/IMG_0184.MOV --video_fps 2 --run_colmap --aabb_scale 2 --out /volume/transforms.json --overwrite &&
                ls /volume/ &&
                python3 ./scripts/run.py --scene /volume --save_snapshot /model/base.msgpack --save_mesh /model/base.obj --train --n_steps 35000 &&
                echo "---End Training---"

YAML file for SAP AI Core
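As a rough sanity check on the input that colmap2nerf.py receives, the number of extracted images can be estimated from the video length and the --video_fps value. The sketch below is only an illustration: the helper name estimateFrameCount is hypothetical, and the actual count depends on how the frames are extracted from the video.

```javascript
// Hypothetical helper (not part of instant-ngp): estimate how many images
// a video yields when it is sampled at a fixed rate, as with --video_fps.
function estimateFrameCount(videoSeconds, sampleFps) {
  if (!(videoSeconds > 0) || !(sampleFps > 0)) {
    throw new RangeError('videoSeconds and sampleFps must be positive')
  }
  return Math.floor(videoSeconds * sampleFps)
}

// A 1-minute video sampled at 2 frames per second
console.log(estimateFrameCount(60, 2)) // 120
```

With the values used in this post (a video of about one minute and --video_fps 2), camera pose estimation therefore works on roughly 120 images.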

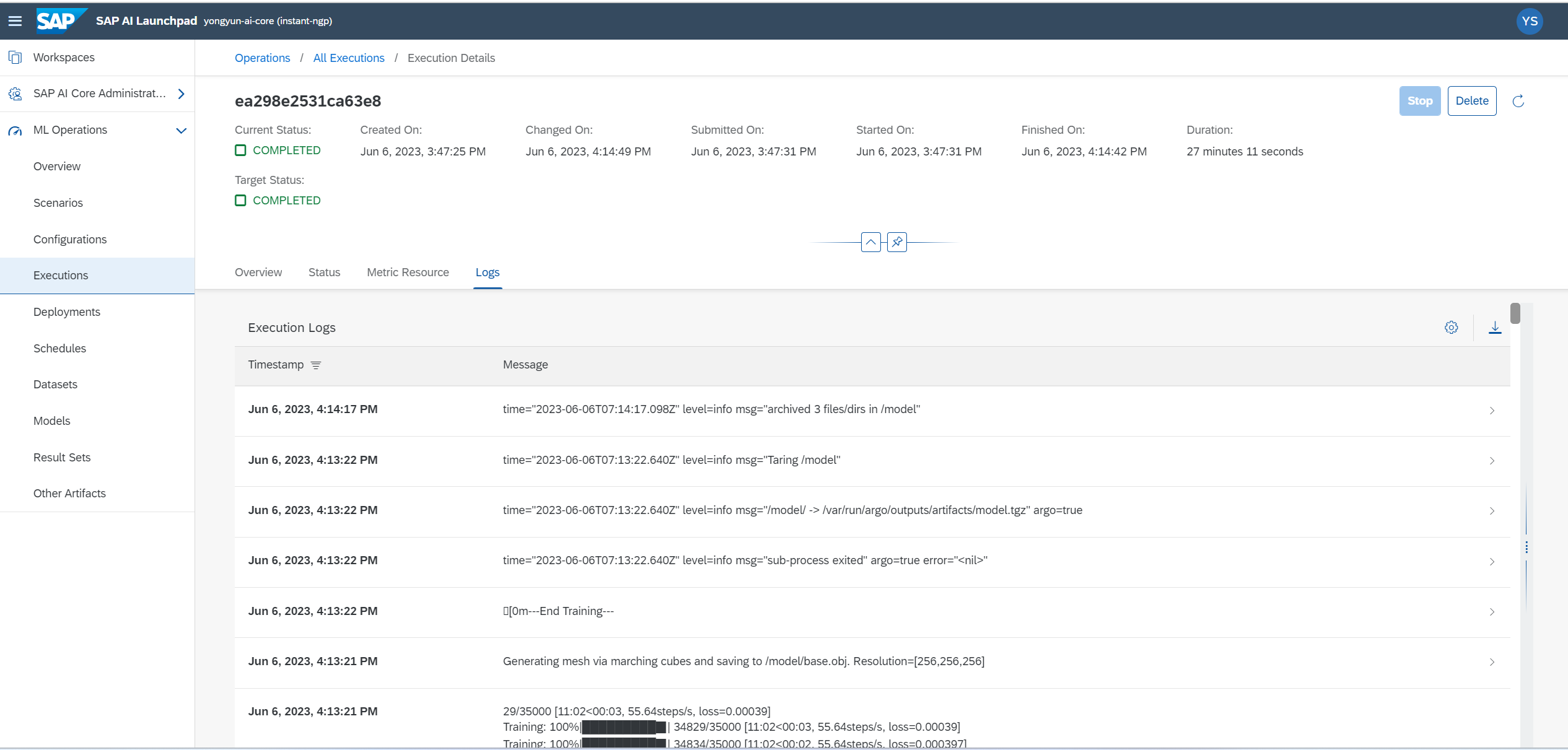

This lets you observe the training result in SAP AI Core.

- Training steps: 35000 (the --n_steps value)

- Build: 10 min

- Estimating camera poses: 5 min

- Train: 5 min

SAP AI Core

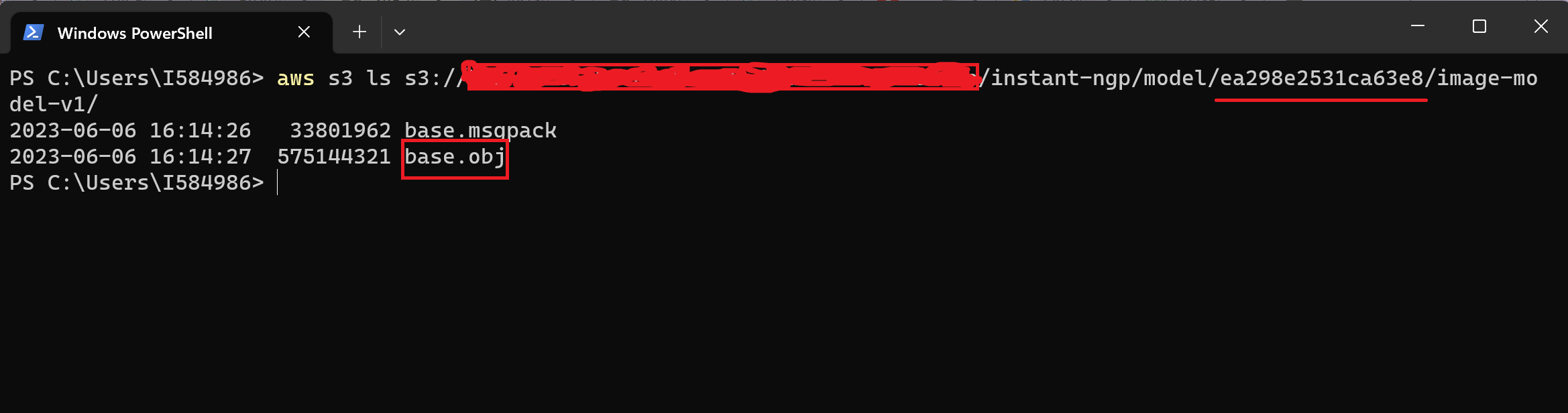

At the end of the process, a folder (here "ea298e2531ca63e8") is generated in Amazon S3. It contains the 3D output file, which the training command above saves as "base.obj".

Output file is stored in S3

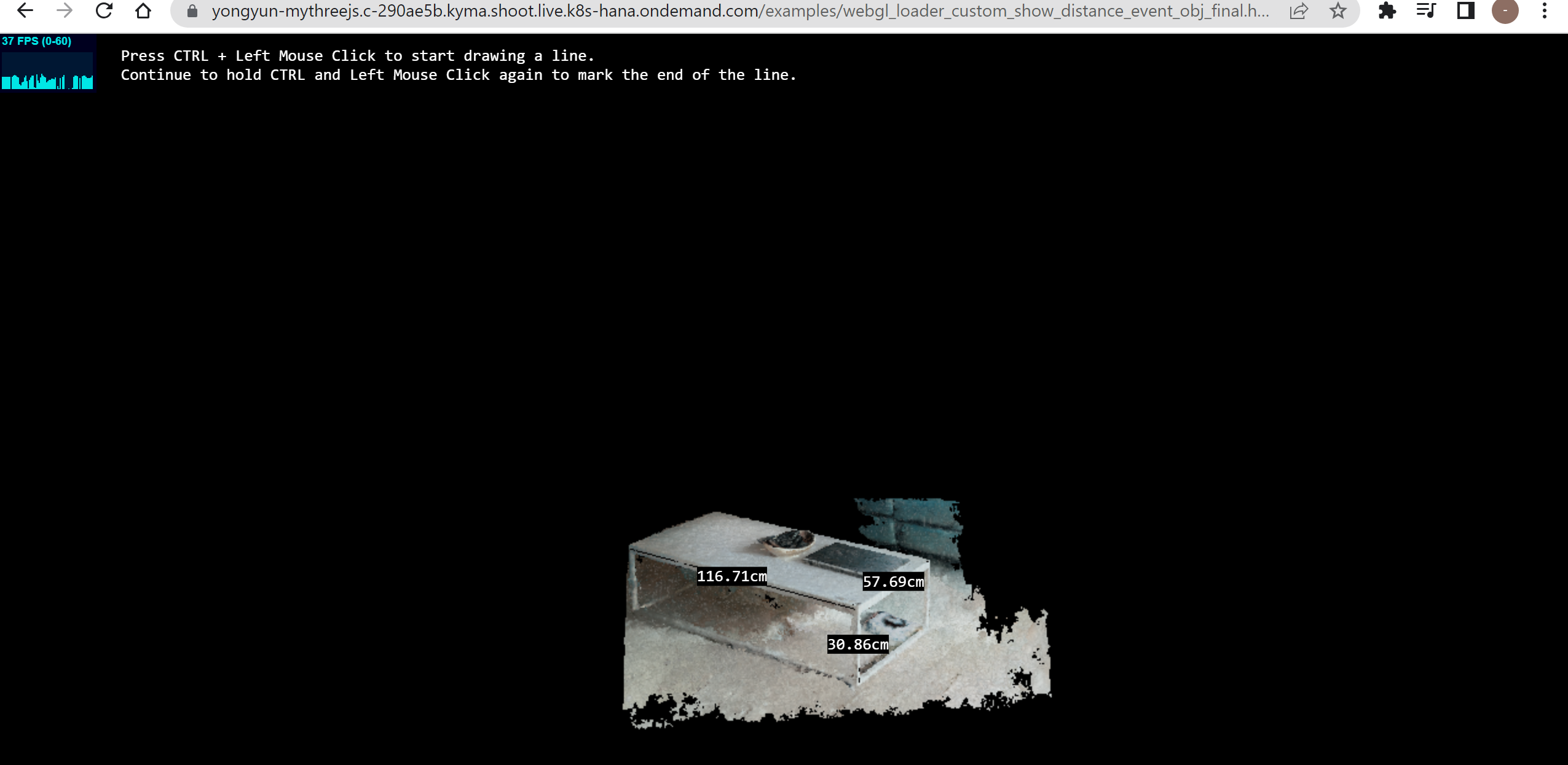

After obtaining the 3D file, you can visualize the 3D object with Three.js in SAP BTP Kyma runtime.

SAP BTP Kyma Runtime

Visualization is one of the most important tasks in computer vision, and Three.js is well suited to it. To make the result accessible to the public, SAP BTP Kyma runtime is an effective way to host it.

The following JavaScript code uses Three.js to implement a measurement feature for 3D objects. While the control key is held, the first click on the object starts a line, moving the pointer updates a live distance label, and a second click finishes the line.

    import * as THREE from 'three'
    import { CSS2DObject } from 'three/examples/jsm/renderers/CSS2DRenderer.js'

    // Assumed to exist elsewhere in the app: scene, camera, renderer,
    // a CSS2DRenderer that draws the labels, and pickableObjects
    // (the array of meshes that can be measured).

    // Measurement state
    let drawingLine = false // true between the first and second click
    let line // the line currently being drawn
    let lineId = 0 // index of the current measurement
    const measurementLabels = {} // lineId -> CSS2DObject label

    // Assumption: ctrlDown tracks the Ctrl key. The original snippet
    // does not show where it is set.
    let ctrlDown = false
    window.addEventListener('keydown', (event) => {
      if (event.key === 'Control') ctrlDown = true
    })
    window.addEventListener('keyup', (event) => {
      if (event.key === 'Control') ctrlDown = false
    })

    const raycaster = new THREE.Raycaster()
    let intersects
    const mouse = new THREE.Vector2()

    renderer.domElement.addEventListener('pointerdown', onClick, false)

    function onClick(event) {
      if (ctrlDown) {
        raycaster.setFromCamera(mouse, camera)
        intersects = raycaster.intersectObjects(pickableObjects, false)
        if (intersects.length > 0) {
          if (!drawingLine) {
            // Start the line: both end points begin at the clicked point
            const points = []
            points.push(intersects[0].point)
            points.push(intersects[0].point.clone())
            const geometry = new THREE.BufferGeometry().setFromPoints(points)
            line = new THREE.LineSegments(
              geometry,
              new THREE.LineBasicMaterial({
                color: 0x000000,
                linewidth: 5,
                transparent: true,
                opacity: 1.0,
                // depthTest: false,
                // depthWrite: false
              })
            )
            line.frustumCulled = false
            scene.add(line)

            // Create the label that shows the measured length
            const measurementDiv = document.createElement('div')
            measurementDiv.className = 'measurementLabel'
            measurementDiv.innerText = '0.0cm'
            const measurementLabel = new CSS2DObject(measurementDiv)
            measurementLabel.position.copy(intersects[0].point)
            measurementLabels[lineId] = measurementLabel
            scene.add(measurementLabels[lineId])
            drawingLine = true
          } else {
            // Finish the line: fix the second end point
            const positions = line.geometry.attributes.position.array
            positions[3] = intersects[0].point.x
            positions[4] = intersects[0].point.y
            positions[5] = intersects[0].point.z
            line.geometry.attributes.position.needsUpdate = true
            lineId++
            drawingLine = false
          }
        }
      }
    }

    document.addEventListener('mousemove', onDocumentMouseMove, false)

    function onDocumentMouseMove(event) {
      event.preventDefault()

      // Pointer position in normalized device coordinates (-1 to +1)
      mouse.x = (event.clientX / window.innerWidth) * 2 - 1
      mouse.y = -(event.clientY / window.innerHeight) * 2 + 1

      if (drawingLine) {
        raycaster.setFromCamera(mouse, camera)
        intersects = raycaster.intersectObjects(pickableObjects, false)
        if (intersects.length > 0) {
          const positions = line.geometry.attributes.position.array
          const v0 = new THREE.Vector3(positions[0], positions[1], positions[2])
          const v1 = new THREE.Vector3(
            intersects[0].point.x,
            intersects[0].point.y,
            intersects[0].point.z
          )

          // Move the free end of the line to the point under the pointer
          positions[3] = intersects[0].point.x
          positions[4] = intersects[0].point.y
          positions[5] = intersects[0].point.z
          line.geometry.attributes.position.needsUpdate = true

          // Update the label text and place it at the middle of the line
          const distance = v0.distanceTo(v1)
          measurementLabels[lineId].element.innerText = distance.toFixed(2) + 'cm'
          measurementLabels[lineId].position.lerpVectors(v0, v1, 0.5)
          // console.log('Distance:', distance);
        }
      }
    }

Measurement code for 3D object
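To make the arithmetic in the measurement code easier to follow, here is a minimal sketch of the same calculations written as plain functions without Three.js. The function names toNdc, distance, and midpoint are illustrative and do not appear in the original code. They mirror the pointer-to-NDC conversion, Vector3.distanceTo, and lerpVectors with a factor of 0.5.

```javascript
// Convert pointer coordinates (pixels) to normalized device coordinates
// in [-1, 1], with y pointing up, as Raycaster.setFromCamera expects.
function toNdc(clientX, clientY, width, height) {
  return {
    x: (clientX / width) * 2 - 1,
    y: -(clientY / height) * 2 + 1,
  }
}

// Euclidean distance between two 3D points (what Vector3.distanceTo returns).
function distance(a, b) {
  return Math.hypot(b.x - a.x, b.y - a.y, b.z - a.z)
}

// Midpoint of the segment, equivalent to lerpVectors(a, b, 0.5).
function midpoint(a, b) {
  return { x: (a.x + b.x) / 2, y: (a.y + b.y) / 2, z: (a.z + b.z) / 2 }
}

const start = { x: 0, y: 0, z: 0 }
const end = { x: 3, y: 4, z: 0 }
console.log(toNdc(400, 300, 800, 600)) // { x: 0, y: 0 }
console.log(distance(start, end).toFixed(2) + 'cm') // 5.00cm
console.log(midpoint(start, end)) // { x: 1.5, y: 2, z: 0 }
```

Note that the distance is expressed in the units of the mesh, so the 'cm' suffix is only meaningful once the mesh has been scaled to centimetres.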

To run your server with Node.js, you can create a Dockerfile and build a Docker image from it. This image can then be used to start containers that run your Node.js server.

    # Use an official Node.js image as the base
    FROM node:14-alpine

    # Set the working directory in the container
    WORKDIR /app

    # Copy all files into the image
    COPY . .

    # Expose the port that your application will run on
    EXPOSE 80

    # Start a simple HTTP server to serve the HTML file
    CMD ["npx", "http-server", "-p", "80", "-c-1", "."]

Dockerfile for Three.js

Once your Node.js server runs inside a Docker container, you can deploy it to SAP BTP Kyma runtime with a YAML file that defines a Namespace, a Deployment, a Service, and an APIRule.

    apiVersion: v1
    kind: Namespace
    metadata:
      name: yongyun-mythreejs
      labels:
        istio-injection: enabled
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my-threjs-app
      namespace: yongyun-mythreejs
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: my-threjs-app
      template:
        metadata:
          labels:
            app: my-threjs-app
            version: v1
        spec:
          containers:
            - name: my-threjs-app
              image: ertyu116/my-threejs-app:v13
              imagePullPolicy: Always
              resources:
                limits:
                  memory: "128Mi"
                  cpu: "500m"
                requests:
                  memory: "32Mi"
              ports:
                - containerPort: 80
                  name: http
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: my-threjs-app-service-v1
      labels:
        app: my-threjs-app
    spec:
      selector:
        app: my-threjs-app
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80
    ---
    apiVersion: gateway.kyma-project.io/v1alpha1
    kind: APIRule
    metadata:
      name: yongyun-mythreejs
      namespace: yongyun-mythreejs
    spec:
      gateway: kyma-gateway.kyma-system.svc.cluster.local
      service:
        name: my-threjs-app-service-v1
        port: 80
        host: yongyun-mythreejs
      rules:
        - accessStrategies:
            - handler: allow
          methods: ["GET", "POST"]
          path: /.*
          mutators: []

YAML file for SAP BTP Kyma

Lastly, you can measure lengths on the 3D object with this feature. Note that the measurement is accurate to approximately ±2 cm when the mesh has been scaled in MeshLab.
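Scaling can be thought of as multiplying every length in the mesh by a single factor derived from one reference object of known size. The following is a minimal sketch of that idea, not the MeshLab procedure itself. The function names and the sample numbers are hypothetical and chosen only for illustration.

```javascript
// Hypothetical calibration: derive a model-units-to-centimetres factor
// from one reference length that is known in the real world.
function scaleFactor(realLengthCm, modelLength) {
  if (!(realLengthCm > 0) || !(modelLength > 0)) {
    throw new RangeError('lengths must be positive')
  }
  return realLengthCm / modelLength
}

// Convert a distance measured in the unscaled model to centimetres.
function toCentimetres(modelDistance, factor) {
  return modelDistance * factor
}

// Illustrative numbers: a 30 cm ruler spans 0.5 model units.
const factor = scaleFactor(30, 0.5)
console.log(factor) // 60
console.log(toCentimetres(1.5, factor).toFixed(1) + 'cm') // 90.0cm
```

Any error in the reference measurement carries over proportionally to every length measured afterwards, which is one reason the reference should be as long and as clearly visible as possible.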

Measure 3D object

Problems and Limitations

Mesh quality depends on the AABB scale (the --aabb_scale option, set to 2 in the training command above), which controls the size of the bounding volume that instant-ngp considers around the scene. However, tuning this value alone may not yield optimal mesh quality. Future advances in 3D reconstruction within computer vision are expected to improve mesh quality considerably.
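The instant-ngp documentation describes aabb_scale as a power of two, so a small check like the one below could guard the parameter before a run is submitted. This is a sketch under assumptions: the function name is hypothetical, and the default upper bound of 128 should be verified against the instant-ngp version you use.

```javascript
// Hypothetical guard: accept only positive powers of two up to a maximum.
// The default maximum of 128 is an assumption, not taken from this post.
function isValidAabbScale(value, max = 128) {
  return (
    Number.isInteger(value) &&
    value >= 1 &&
    value <= max &&
    (value & (value - 1)) === 0 // a power of two has a single bit set
  )
}

console.log([1, 2, 3, 16, 256].map((v) => isValidAabbScale(v)))
// [ true, true, false, true, false ]
```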

Currently, smoothing, noise removal, and scaling are done in MeshLab. These functions could instead be implemented with Three.js in the application running on SAP BTP Kyma, which would remove the manual post-processing step.

Overall

In computer vision, 3D rendering techniques are advancing rapidly. It is truly exhilarating to use SAP BTP to automate the process with this cutting-edge technique and to share the results with the public through visualization.

This versatile technique finds applications across a wide range of industrial sites.

If you are interested in viewing my 3D work, please click the link below. Kindly note that it may take approximately one minute to load the data.

yongyun-3d-reconstruction

Credits

I sincerely appreciate the invaluable support from gunteralbrecht, who provided guidance on utilizing the latest technology with BTP System.

I would like to express my gratitude to lsm1401 for the assistance in creating the architecture for the project.

Special thanks to ryutaro.umezuki for the comprehensive guide on Three.js, which greatly contributed to the development process.

Lastly, I would like to acknowledge rugved88 for the valuable inputs during the brainstorming sessions.
