
Getting started with the predefined CI/CD pipeline...


What is SAP Continuous Integration and Delivery?

SAP Continuous Integration and Delivery is a fully managed service on SAP BTP that you can use to configure and run predefined CI/CD pipelines with very little effort. It connects with your source code repository and builds, tests, and deploys your code changes. On the user interface you can configure and run jobs without having to worry about the underlying pipeline infrastructure.

What are container-based applications?

Containerization is a major trend in software development. When properly integrated into development workflows, it can greatly improve productivity. The open-source project Kubernetes helps you deploy and operate cloud-native applications at scale. Despite these benefits, Kubernetes comes with a large tooling ecosystem and can be complicated to set up.

What is SAP BTP, Kyma runtime?

The solution to this is the SAP BTP, Kyma runtime - a fully managed Kubernetes runtime based on the open-source project called Kyma. This cloud-native solution allows you to develop and deploy applications with serverless functions and combine them with containerized microservices.

This post will cover:

- Where to get started

- How to connect SAP Continuous Integration and Delivery with the Kyma runtime

- How to configure credentials

- How to configure the pipeline stages and choose between build tools

Before you get started:

- You have enabled SAP Continuous Integration and Delivery on SAP BTP. See Enabling the Service.

- You are an administrator of SAP Continuous Integration and Delivery. See Assigning Roles and Permissions.

- You have enabled Kyma runtime. See SAP BTP, Kyma runtime: How to get started or follow the tutorial Enable SAP BTP, Kyma Runtime.

- You have a container registry, for example, a Docker Hub account.

- You have a repository with the source code. In this example we use GitHub, but you can also use GitLab or Bitbucket Server.

Getting started

Creating a new pipeline involves 4 simple steps:

- Create a Kyma service account.

For the CI/CD pipeline to run continuously, you need Kyma credentials that don't expire. The authentication token for a cluster is stored in the kubeconfig file. The kubeconfig that you can download directly from the Kyma dashboard expires every 8 hours and is therefore not suited for this scenario. Instead, create a custom kubeconfig file that uses a service account. A detailed step-by-step guide can be found in the following tutorial: Create a Kyma service account.
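To illustrate what such a custom kubeconfig contains, here is a minimal sketch that assembles one around a service account token. The cluster URL, CA data, and token are values you would take from your own cluster; the function names and the default cluster and user names are hypothetical and not part of the tutorial.

```python
import json


def build_kubeconfig(server, ca_data, token, namespace="default",
                     cluster_name="kyma-cluster",
                     user_name="cicd-service-account"):
    """Return a kubeconfig (as a dict) that authenticates with a static token.

    All names used here are illustrative assumptions, not values from the
    Kyma tutorial.
    """
    context_name = f"{user_name}@{cluster_name}"
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [{
            "name": cluster_name,
            "cluster": {
                "server": server,
                # Base64-encoded CA certificate of the cluster
                "certificate-authority-data": ca_data,
            },
        }],
        "users": [{
            "name": user_name,
            # Token of the service account instead of a short-lived login
            "user": {"token": token},
        }],
        "contexts": [{
            "name": context_name,
            "context": {
                "cluster": cluster_name,
                "user": user_name,
                "namespace": namespace,
            },
        }],
        "current-context": context_name,
    }


def render_kubeconfig(config):
    """Serialize the kubeconfig as JSON, which is also valid YAML."""
    return json.dumps(config, indent=2)
```

The rendered text is what you would later paste into the "Secret" field of the Kubernetes credential.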

- Connect your repository to SAP Continuous Integration and Delivery and add a webhook to your GitHub account.

This step is where all the magic happens: whenever you create or update a Docker image locally, you can simply push the changes to your GitHub repository. A webhook push event is sent to the service, and a build of the connected job is triggered automatically. Learn more in the following tutorial: Get Started with an SAP Fiori Project in SAP Continuous Integration and Delivery.
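If you are curious how a webhook receiver can tell that a push event really comes from your repository: when a webhook secret is configured, GitHub signs the request body with HMAC-SHA256 and sends the result in the `X-Hub-Signature-256` header. The sketch below shows that check in general terms. It is an assumption that you configure a secret at all, and it does not describe how the SAP service itself is implemented; the function names are hypothetical.

```python
import hashlib
import hmac


def sign_payload(secret: str, body: bytes) -> str:
    """Compute the signature string in the format GitHub uses for webhooks."""
    digest = hmac.new(secret.encode("utf-8"), body, hashlib.sha256).hexdigest()
    return "sha256=" + digest


def is_valid_signature(secret: str, body: bytes, header_value: str) -> bool:
    """Compare the expected signature with the received header value.

    compare_digest avoids leaking information through timing differences.
    """
    return hmac.compare_digest(sign_payload(secret, body), header_value)
```

A receiver would call `is_valid_signature` with the raw request body before triggering any build.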

- Start with configuring the credentials in SAP Continuous Integration and Delivery:

- Kubernetes credentials

Add a "Secret Text" credential with the content of your kubeconfig file that you created in step 1 in the "Secret" field.

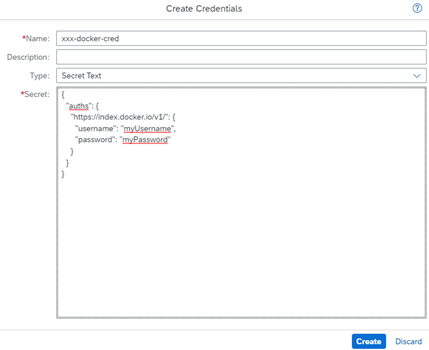

- Container registry credentials

The SAP Continuous Integration and Delivery service publishes a new container image each time a job is triggered. This process requires access to your container repository, in this case, Docker Hub.

Paste the following lines into the "Secret" text field and replace the placeholders:

    {
      "auths": {
        "<containerRegistryURL>": {
          "username": "<myUsername>",
          "password": "<myPassword>"
        }
      }
    }

For Docker Hub, it should look like this:
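As a hedged illustration of the Docker Hub case, the following sketch generates the secret text from a username and password. The registry URL is the Docker Hub URL used in the job configuration; the function name is hypothetical, and the credentials in the usage example are placeholders.

```python
import json

# Docker Hub registry URL, as used in the "Container Registry URL" field
DOCKER_HUB_URL = "https://index.docker.io/v1/"


def registry_secret(username, password, registry_url=DOCKER_HUB_URL):
    """Return the JSON text for the container registry credential."""
    config = {
        "auths": {
            registry_url: {
                "username": username,
                "password": password,
            }
        }
    }
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    # Placeholder values; replace them with your own account data
    print(registry_secret("<myUsername>", "<myPassword>"))
```

Generating the text this way avoids quoting mistakes when a password contains characters such as `"` or `\`.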

Now we are ready to configure our first pipeline job.

- In SAP Continuous Integration and Delivery, configure a new job as described in Create a Job. As "Pipeline", choose "Container-Based Applications".

Configuring the General Parameters

The "Container Registry URL" for Docker Hub is https://index.docker.io/v1/. The name of your image consists of your given "Container Image Name" and a tag name. To tag your image, you have two options:

- If you define a "Container Image Tag", all newly built container images have the same tag, in our case, 0.1.

- If you choose the "Tag Container Image Automatically" checkbox, your container images receive a unique version tag with every build. This allows you to compare your container images afterwards. If you want to use this feature, be sure to add the following line to your Dockerfile after the FROM line:

    ENV VERSION x.y

For "Container Registry Credentials", choose the credentials you created in the last step.
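If you maintain several Dockerfiles, adding the `ENV VERSION` line can be scripted. The sketch below inserts it after the first FROM instruction when it is missing. The helper name is hypothetical, the version value is whatever you choose in place of x.y, and for multi-stage builds you may need to place the line differently.

```python
def ensure_version_env(dockerfile_text, version):
    """Insert 'ENV VERSION <version>' after the first FROM line if absent."""
    lines = dockerfile_text.splitlines()

    # Leave the file untouched if a VERSION variable is already defined
    if any(line.strip().upper().startswith("ENV VERSION") for line in lines):
        return dockerfile_text

    for index, line in enumerate(lines):
        if line.strip().upper().startswith("FROM "):
            lines.insert(index + 1, f"ENV VERSION {version}")
            return "\n".join(lines) + "\n"

    raise ValueError("no FROM instruction found in Dockerfile")
```

For example, calling `ensure_version_env(text, "0.1")` on a Dockerfile that starts with a FROM line places `ENV VERSION 0.1` directly below it.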

After this step, you can start configuring the stages of the pipeline:

The Init stage is already done by the pipeline. You can enable or disable the Build, Acceptance, and Release stages depending on your needs. This way, you can choose to only build an image, only deploy an existing image, or combine the two to deploy an image you just built.

Configuring the Build Stage

Find the full path to your Dockerfile in GitHub and enter it in the "Container File Path" field.


Configuring the Acceptance and Release Stage

The configuration of the Acceptance and Release stages is basically the same, except that in the Acceptance stage you can also run Helm tests if you choose to deploy the application with Helm (you can find more information on which deployment tool to use in the next section).

In the Acceptance stage, you can also use a separate Kyma namespace and kubeconfig to test a deployment in a different cluster.

Which deployment tool should I use?

The SAP Continuous Integration and Delivery service lets you choose between two deployment tools: helm3 or kubectl. Let’s go through both scenarios.

Deploy using helm3

With Helm, you can manage applications using Helm charts. In general, Helm charts are most useful for deploying simple applications and can handle the install-update-delete lifecycle for the applications deployed to the cluster. You can also use Helm to perform tests and it provides templates for your Helm charts. Some interesting configuration parameters:

- "Chart Path": this is the path to the directory that contains the Chart.yaml file in your GitHub repository (note: the Chart.yaml needs to be under the helm/ parent directory, but can be in a subdirectory within it).

- "Helm Values": this is the path to the values.yaml file in your GitHub repository. You also have the option to configure a "Helm Values Secret" by creating a "Secret Text" credential and adding the content of your values.yaml file to the "Secret" field.

Configuring the Helm values is optional. If you don't set the Helm values either as a path or as a secret, the values.yaml in the chart path is used. If it doesn't exist in the repository either, no values are used.

- "Force Resource Updates": choose this checkbox to add a --force flag to your deployment. With this flag, Helm forces resource updates through a replacement strategy when it upgrades a release to a new version of a chart.
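To make the parameters above more tangible, here is a sketch of how they could map onto a Helm 3 command line. The exact command that the service runs is not described in this post, so treat the mapping as an assumption; the function and argument names are hypothetical, while the flags themselves are standard `helm upgrade` options.

```python
def helm_upgrade_command(release, chart_path, namespace,
                         values_file=None, force=False):
    """Build an argument list for 'helm upgrade --install'.

    chart_path  -> "Chart Path"
    values_file -> "Helm Values" (optional)
    force       -> "Force Resource Updates"
    """
    command = ["helm", "upgrade", release, chart_path,
               "--install", "--namespace", namespace]
    if values_file:
        command += ["--values", values_file]
    if force:
        command.append("--force")
    return command
```

Returning a list rather than a single string keeps the arguments safe to pass to `subprocess.run` without shell quoting.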

Deploy using kubectl

kubectl comes in handy when you want to implement a complex, custom configuration or deploy a special application that involves a lot of operational expertise. The configuration parameters include:

- "Application Template File": enter the name of your Kubernetes application template file.

- "Deploy Command": choose "apply" to create a resource (or update it if it already exists) or "replace" to replace an existing one.

- "Create Container Registry Secret": Kubernetes needs to authenticate with a container registry to pull an image. Choose this checkbox to create a secret in your Kubernetes cluster based on your "Container Registry Credentials".
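The kubectl parameters can be pictured in a similar way. The sketch below builds the kind of kubectl invocations these settings correspond to; how the service actually calls kubectl is an assumption, and the function names, secret name, and namespace are hypothetical. The subcommands and flags are standard kubectl ones.

```python
def kubectl_deploy_command(template_file, namespace, deploy_command="apply"):
    """Build the kubectl call for the chosen "Deploy Command"."""
    if deploy_command not in ("apply", "replace"):
        raise ValueError("deploy_command must be 'apply' or 'replace'")
    return ["kubectl", deploy_command,
            "--filename", template_file,
            "--namespace", namespace]


def registry_secret_command(secret_name, registry_url, username, password,
                            namespace):
    """Build the kubectl call that creates an image pull secret."""
    return ["kubectl", "create", "secret", "docker-registry", secret_name,
            f"--docker-server={registry_url}",
            f"--docker-username={username}",
            f"--docker-password={password}",
            "--namespace", namespace]
```

The pull secret only has an effect if the application template references it, for example through `imagePullSecrets` in the pod specification.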

Don't forget to save your job!

Congratulations! Now you can build Docker images, push them to your container registry, and deploy them to your SAP BTP, Kyma runtime. You can also monitor the status of your jobs and view their full logs.

If you found this post useful and want to learn more about this scenario, you can also see the Container-Based Applications product documentation on the SAP Help Portal.

Last but not least, thank you laura.veinberga for your collaboration.
