- Integrating ABAP Function modules with SAP Data In...

06-01-2021

9:13 AM

Introduction:

For this blog post I worked with Michael Sun on how to call ABAP function modules from SAP Data Intelligence using custom ABAP operators.

You may have already seen a similar blog post from Britta Thoelking about how to call function modules with pipelines in SAP Data Hub: https://blogs.sap.com/2019/11/04/abap-integration-calling-a-abap-function-module-within-a-sap-data-h...

The goal of this blog post is to provide simplified guidance and a foundation for using function modules in SAP Data Intelligence pipelines when they require input parameters, such as elements, structures or tables, to execute the call. Be aware that input parameters in the form of structures and tables can also be deeply nested over several sub-levels, depending on the function module you choose.

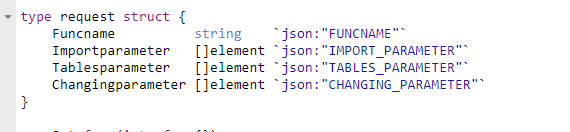

One major part of this blog post is a generic custom ABAP operator that extracts the metadata (or schema definition) of any ABAP function module residing in the ABAP system you connect to Data Intelligence. This operator extracts the input parameters needed to prepare the execution of the function module and provides them as output in JSON format. The output JSON lists all input parameters that the function module requires to be executed successfully. This JSON then serves as a template for mapping the required input parameters. In the second part of this blog post, another custom ABAP operator uses the mapped JSON to actually execute the function module on the connected ABAP system.

You can find some of the material incl. code snippets and sample pipelines under the following link.

Pre-requisites

The example illustrated in this blog post is based on SAP S/4 HANA System Version 2020 as well as a Data Intelligence Cloud system version 2013. The general pre-requisites for connecting an SAP ABAP system with Data Intelligence are documented in the following link, but in general you need to have one of the following systems available:

- SAP S/4 HANA 1909 or higher (ABAP Pipeline Engine is already pre-installed)

- SAP ECC with DMIS 2011 SP17 or DMIS 2018 SP02 or later (depending on SAP release)

- SAP BW with DMIS 2011 SP17 or later

Note: Even though the minimum version for using the ABAP integration with Data Intelligence is DMIS 2011 SP17 / DMIS 2018 SP02, it is recommended to use one of the latest DMIS versions, such as DMIS 2011 SP19 or SP20 / DMIS 2018 SP04 or SP05.

In addition, you need to create an RFC-based connection of type “ABAP” in Data Intelligence to your SAP ABAP system. In this blog post we will not go into the details of setting up the connection and assume that it is already in place. Further details about setting up the connection can be found using the following links:

- ABAP Connection Type for SAP Data Intelligence: https://launchpad.support.sap.com/#/notes/2835207

- SAP Data Intelligence ABAP Security Settings incl. whitelisting: https://launchpad.support.sap.com/#/notes/2831756

- Note Analyzer for ABAP-based Replication Technology: https://launchpad.support.sap.com/#/notes/2596411 (using scenario “ABAP Integration for SAP Data Intelligence”)

Use Case:

The use case we focus on uses two pipelines in the Data Intelligence Modeler and an existing function module to create sales orders in a remote SAP S/4 HANA system. The first pipeline uses a custom ABAP operator to extract the metadata of the function module, including the input parameters required for the function module call.

In the second pipeline, we map values into the generated JSON file and pass the required input parameters to a second custom ABAP operator, which triggers the function module call in the connected ABAP system. To keep things simple, we perform a manual mapping of the required input parameters in a Go operator. In real scenarios you may perform the mapping based on data coming from another connected system, a web service or a CSV file. In this blog post we use “BAPI_EPM_SO_CREATE” to create a sales order in our SAP S/4 HANA system. It requires certain input parameters, which are described in more detail in the following steps.

Pipeline 1: Use Custom ABAP Operator to extract Metadata of Function Module

Input: Provide name of function module in Terminal as input, e.g. in this case “BAPI_EPM_SO_CREATE”.

Output: JSON file providing input and output parameters of function module.

Pipeline 2: Mapping of JSON file to perform Function module Call and create a Sales Order

Important Note: Before triggering the execution of the function module in the pipeline, it is helpful to check the documentation of the function module and to test the function module call with input parameters in transaction SE37.

Input: JSON file of Step 1 incl. some manual mapping using a Go Operator to prepare the creation of the Sales Order in the SAP S/4 HANA system.

Output: Response Message from function call execution, which can be a successful message or an error message being captured from the ABAP system.

Implementation on ABAP System

This chapter gives a brief step-by-step description of how to create a custom operator in your ABAP system, in this case an SAP S/4 HANA system. To simplify the creation of custom ABAP operators, a framework is provided that consists of two reports:

- DHAPE_CREATE_OPERATOR_CLASS: Will generate an implementation class

- DHAPE_CREATE_OPER_BADI_IMPL: Create a BAdI implementation

Here we focus only on the major part of using the new custom ABAP operator, including the required coding. As a starting point, we briefly recap the event-based methods that can be used inside the operator to react to certain actions, as already explained in the blog post referred to above:

- ON_START: Called once before the pipeline is started.

- ON_RESUME: Called at least once before the pipeline is started or resumed.

- STEP: Called frequently.

- ON_SUSPEND: Called at least once after the pipeline is stopped or suspended.

- ON_STOP: Called once after the pipeline is stopped.
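To make the call order of these hooks more tangible, here is a minimal Go model of one uninterrupted pipeline run. It is not the ABAP framework itself: the `Operator` interface, the `recorder` type and the `run` driver are hypothetical names introduced only for illustration, and the exact order of ON_SUSPEND and ON_STOP is an assumption derived from the list above.

```go
package main

import "fmt"

// Operator mirrors the five event hooks listed above.
// Hypothetical Go interface, not part of any SAP API.
type Operator interface {
	OnStart()
	OnResume()
	Step() bool // reports whether this call made progress
	OnSuspend()
	OnStop()
}

// recorder logs every hook call and makes progress for a fixed number of steps.
type recorder struct {
	calls     []string
	remaining int
}

func (r *recorder) OnStart()   { r.calls = append(r.calls, "ON_START") }
func (r *recorder) OnResume()  { r.calls = append(r.calls, "ON_RESUME") }
func (r *recorder) OnSuspend() { r.calls = append(r.calls, "ON_SUSPEND") }
func (r *recorder) OnStop()    { r.calls = append(r.calls, "ON_STOP") }

func (r *recorder) Step() bool {
	r.calls = append(r.calls, "STEP")
	if r.remaining == 0 {
		return false
	}
	r.remaining--
	return true
}

// run drives a single run without a suspend/resume cycle.
// The loop ends on the first step without progress only to keep the demo
// finite; a real engine keeps calling STEP while the graph is alive.
func run(op Operator) {
	op.OnStart()
	op.OnResume()
	for op.Step() {
	}
	op.OnSuspend()
	op.OnStop()
}

func main() {
	r := &recorder{remaining: 2}
	run(r)
	fmt.Println(r.calls)
}
```

In the custom operators of this blog post, only ON_RESUME (initialization of ports) and STEP (the actual work) carry logic.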

If you create a custom operator, it will already have some available sample coding that we will replace with the logic of the use case we are implementing.

First, we look at the custom ABAP operator “Function Module Schema Reader” from the first pipeline, which is extracting the metadata (=schema definition) of the function module we provide as input, e.g. in this example “BAPI_EPM_SO_CREATE”.

Before we start to create the custom ABAP operator for DI, a custom class needs to be created that represents the “heart” of this scenario. This class builds the JSON schema based on the input function module name (method CONVERT_TO_JSON) and dynamically calls the chosen function module based on the mapped input JSON (method CALL_FM_WITH_JSON).

The required coding for the custom class “zcl_fm_interface_converter” can be found in the following git repository link.

Later you will see that this class is being referenced in both custom ABAP operators, e.g. like displayed here for the “read FM schema” operator:

Important Note: If you import the code and receive syntax errors, they can be caused by APIs that are not available in your system. The code was implemented on an SAP S/4 HANA 2020 system. If you implement it on another SAP system, you might need to adjust the code slightly to get the scenario working.

To start the custom operator creation, log in to your SAP S/4 HANA system via SAP Logon. You can then either directly open transaction SE38 or open transaction DHAPE (LTAPE for ECC and BW systems with the DMIS plugin).

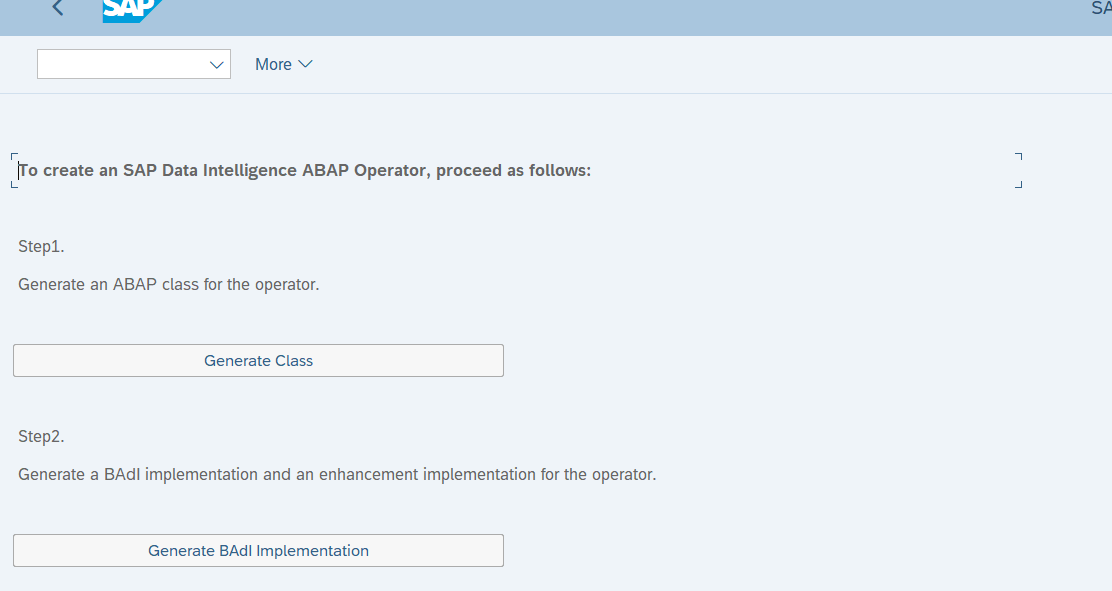

In this case we go to transaction DHAPE and you will see the following two steps for generating a custom operator that have already been mentioned before. Click on “Generate Class”.

Search for report DHAPE_CREATE_OPERATOR_CLASS and execute it to generate an implementation

Note: If you are performing the steps on an ABAP system which is not an S/4 system, e.g. BW or SAP ECC, the program is called R_LTAPE_CREATE_OPERATOR_CLASS for creating the operator implementation class.

Enter required information for the Operator Class generator:

Note: When you create a custom operator, the customer namespace is being defined as default operator namespace. Standard ABAP operators delivered by SAP are sitting in a different namespace, e.g. com.sap.abap.cds.reader.v2 for the ABAP CDS Reader operator.

Select in which package you want to store the operator:

Click on the Save icon to generate the implementation class.

The following screen appears:

Click exit to close the window.

Go again to transaction DHAPE and click on the second step “Generate BAdI Implementation”:

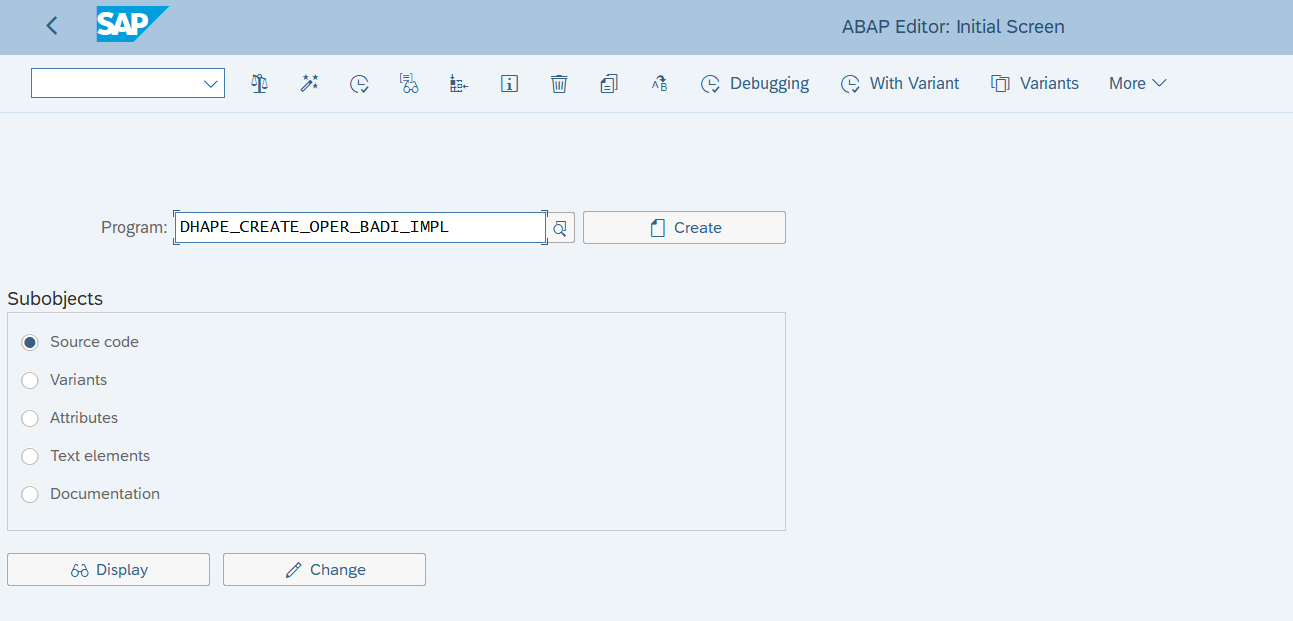

As an alternative, you can directly open transaction SE38 and open program “DHAPE_CREATE_OPER_BADI_IMPL”:

Enter the requested information for your operator and click on execute

Assign a package and click on save:

You should now see the following screen (Enhancement Implementation).

Click on Implementation Class in the left menu bar:

In the Class Builder click on “NEW PROCESS”:

In the next window click on “lcl_process”:

The operator code for the “FM Schema Reader” in the ABAP system performs the following main steps:

- Receive and read function module name from input port (mo_in -> in) in Data Intelligence Pipeline

- Extract schema definition for provided function module name

- Call “convert_to_json” method to provide schema definition as JSON output

- Send results in JSON format to output port (mo_out -> out)

The operator code for the lcl_process looks as follows :

Please find the actual code snippet in ABAP for easily re-using and adopting the code in your system:

```abap
METHOD if_dhape_graph_process~step.
  DATA: lo_converter TYPE REF TO zcl_fm_interface_converter,
        lv_funcname  TYPE rs38l-name.

  "This method is called in a loop as long as the graph is alive
  rv_progress = abap_false.
  CHECK mv_alive = abap_true.

  IF mo_in->has_data( ).
    CHECK mo_out->is_blocked( ) <> abap_true.

    "Read the function module name from the input port
    mo_in->read_copy( IMPORTING ea_data = lv_funcname ).
    TRANSLATE lv_funcname TO UPPER CASE.

    "Build the JSON schema definition for the function module
    CREATE OBJECT lo_converter
      EXPORTING
        iv_funcname = lv_funcname.
    DATA(lv_result) = lo_converter->convert_to_json( ).

    "Send the JSON to the output port
    mo_out->write_copy( lv_result ).
    rv_progress = abap_true.
  ELSEIF mo_in->is_closed( ).
    mv_alive = abap_false.
    mo_out->close( ).
    rv_progress = abap_true.
  ENDIF.
ENDMETHOD.
```

Secondly, we look at the custom ABAP operator “FM Caller” from the second pipeline. It uses the provided JSON file, which contains the mapping of all required input parameters, to trigger the execution of “BAPI_EPM_SO_CREATE” and create the sales order object in the ABAP backend system.

Here we will not repeat every step for creating the ABAP operator, but concentrate on the important part: the code that performs the function module call with the mapped JSON file as input.

The main steps of the code look as follows:

- Receive mapped input schema in JSON format using input port (mo_in -> in)

- Use received input data to call function module “BAPI_EPM_SO_CREATE”

- Provide result in output after call was successful

- Provide error message in case call function module was not successful

The operator code of the “FM Caller” in the ABAP backend system looks as follows after opening the local class lcl_process:

Please find the actual code snippet in ABAP for easily re-using and adopting the code in your system:

```abap
CLASS lcl_process DEFINITION INHERITING FROM cl_dhape_graph_proc_abstract.
  PUBLIC SECTION.
    METHODS: if_dhape_graph_process~on_start  REDEFINITION.
    METHODS: if_dhape_graph_process~on_resume REDEFINITION.
    METHODS: if_dhape_graph_process~step      REDEFINITION.

    DATA:
      mo_util        TYPE REF TO cl_dhape_util_factory,
      mo_in          TYPE REF TO if_dhape_graph_channel_reader,
      mo_out         TYPE REF TO if_dhape_graph_channel_writer,
      mv_myparameter TYPE string.
ENDCLASS.

CLASS lcl_process IMPLEMENTATION.

  METHOD if_dhape_graph_process~on_start.
    "This method is called when the graph is submitted.
    "Note that you can only check things here but you cannot initialize variables.
  ENDMETHOD.

  METHOD if_dhape_graph_process~on_resume.
    "This method is called before the graph is started.

    "Read parameters from the config here
    mv_myparameter = to_upper( if_dhape_graph_process~get_conf_value( '/Config/myparameter' ) ).

    "Do initialization here.
    mo_util = cl_dhape_util_factory=>new( ).
    mo_in   = get_port( 'in' )->get_reader( ).
    mo_out  = get_port( 'out' )->get_writer( ).
  ENDMETHOD.

  METHOD if_dhape_graph_process~step.
    DATA: lo_converter TYPE REF TO zcl_fm_interface_converter.

    "This method is called in a loop as long as the graph is alive
    rv_progress = abap_false.
    CHECK mv_alive = abap_true.

    IF mo_in->has_data( ).
      CHECK mo_out->is_blocked( ) <> abap_true.

      "Read the mapped JSON request from the input port
      DATA lv_json TYPE string.
      mo_in->read_copy( IMPORTING ea_data = lv_json ).

      "Call the function module described by the JSON request
      CREATE OBJECT lo_converter.
      DATA(lv_result) = lo_converter->call_fm_with_json( lv_json ).

      "Send the result (or the error message) to the output port
      mo_out->write_copy( lv_result ).
      rv_progress = abap_true.
    ELSEIF mo_in->is_closed( ).
      mv_alive = abap_false.
      mo_out->close( ).
      rv_progress = abap_true.
    ENDIF.
  ENDMETHOD.

ENDCLASS.
```

Implementation on Data Intelligence

Pipeline 1: Provide Function module name and extract schema definition as JSON output

Step 1: Drag and drop the custom ABAP operator “read fm schema” onto the modeling canvas. To do so, use the “Custom ABAP Operator”, select the connection of the ABAP system where the operator resides, and finally choose the operator from the ABAP operator selection panel:

Step 2: Use a Terminal and Multiplexer Operator and connect it with your “read fm schema” operator as illustrated in the screenshot above. The terminal operator will be used as trigger by typing in the function module name for which the schema definition will be extracted.
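The ABAP operator upper-cases the name it receives (see `TRANSLATE lv_funcname TO UPPER CASE` in its step method), but it does not otherwise validate the input. If the name comes from somewhere other than the terminal, a small pre-check in front of the operator might catch obvious mistakes early. The following Go sketch illustrates the idea. `normalizeFMName` is a hypothetical helper, and the 30-character limit is an assumption about the length of function module names; wiring it into an actual Go operator is left out.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// maxFMNameLen is an assumption: function module names are taken to be
// at most 30 characters long.
const maxFMNameLen = 30

// normalizeFMName trims surrounding whitespace (including a trailing
// newline), upper-cases the name and applies two basic sanity checks.
// Hypothetical helper, not an SAP API.
func normalizeFMName(raw string) (string, error) {
	name := strings.ToUpper(strings.TrimSpace(raw))
	if name == "" {
		return "", errors.New("function module name is empty")
	}
	if len(name) > maxFMNameLen {
		return "", fmt.Errorf("function module name %q exceeds %d characters", name, maxFMNameLen)
	}
	return name, nil
}

func main() {
	name, err := normalizeFMName("  bapi_epm_so_create\n")
	if err != nil {
		fmt.Println("invalid input:", err)
		return
	}
	fmt.Println(name) // prints BAPI_EPM_SO_CREATE
}
```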

Step 3: Use a Write File operator together with a To File converter to select the target where the resulting schema definition of the function module should be stored as a JSON file. In this case we store it in an Azure Data Lake (ADL) storage. After the JSON file has been written to the ADL target, a Graph Terminator stops the pipeline.

Step 4: Launch the provided pipeline and once running, open the Terminal in your browser by clicking the “Open UI” button:

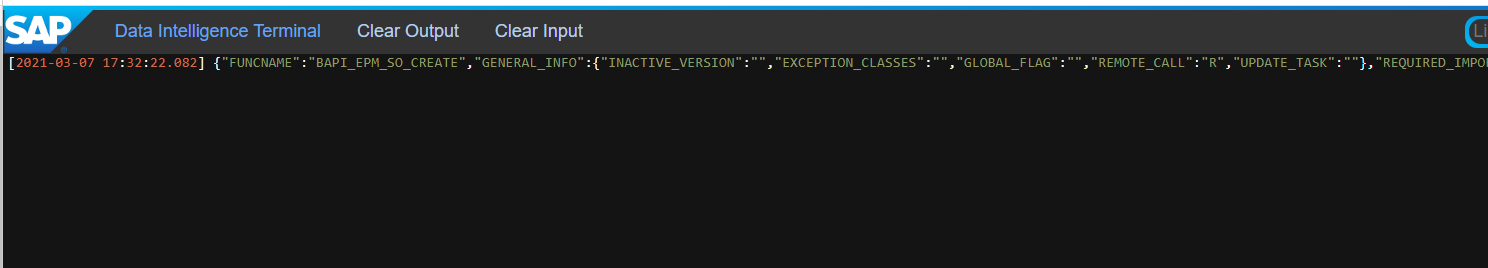

Step 5: Provide a function module name as input in the terminal and hit enter, e.g. here “BAPI_EPM_SO_CREATE”:

You should now see a similar message like this in the Terminal, which is showing the schema definition of your provided function module in JSON format:

After the JSON file has been written successfully to your target file location, the pipeline will be switching to status completed.

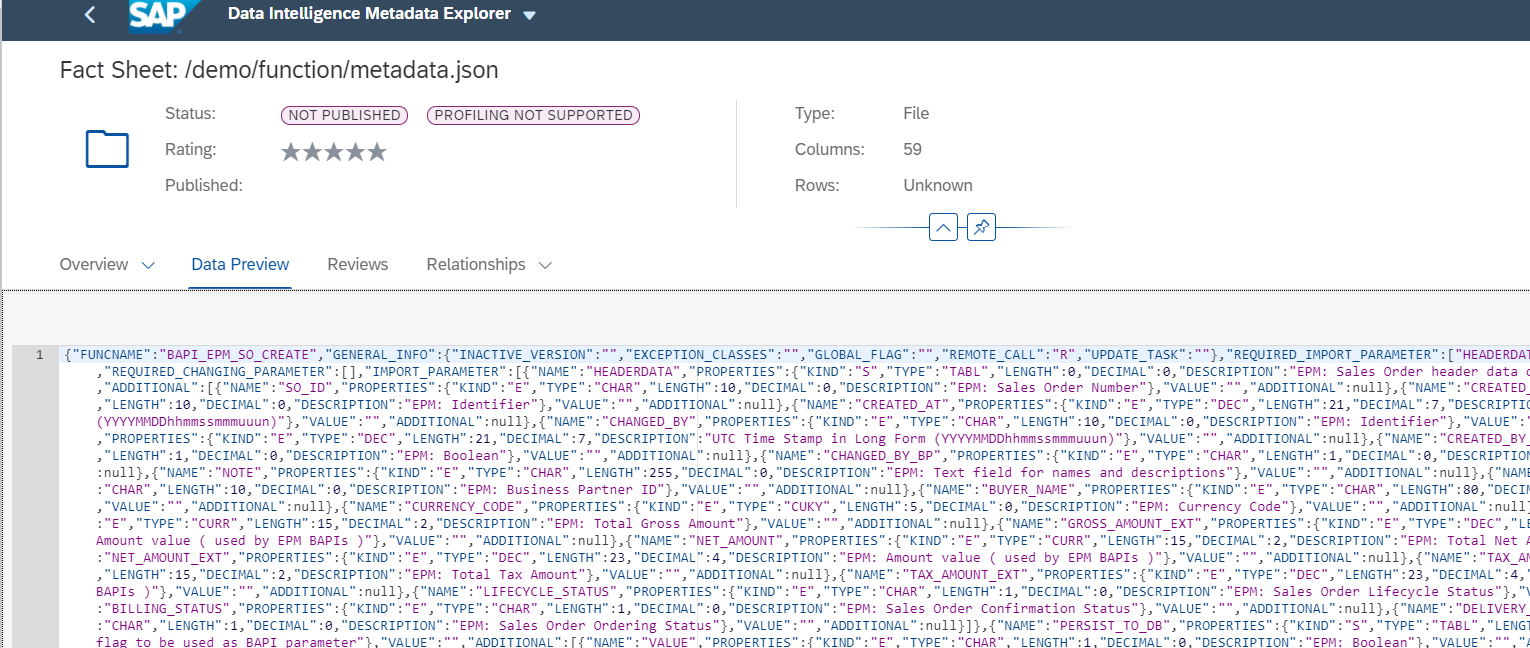

You can also easily browse the JSON file using the DI Metadata Explorer by opening the folder location, which you defined as target in your pipeline:

By clicking on the preview icon, you can preview the JSON file in the Metadata Explorer:

Pipeline 2: Map Input Parameters and execute function module

Step 1: Use Read File Operator to select JSON schema definition of your function module from Pipeline 1

Note: The Header Remover operator removes the file metadata from the message and passes only the actual message content as input to the Go operator.

Step 2: In the Go operator we perform a manual mapping that consists of a general part and a part that is specific to the function module chosen in this scenario: “BAPI_EPM_SO_CREATE”. In this case, we need to provide the BUYER ID and BUYER NAME in the header data as well as one or multiple input sets for the sales order items, such as GROSS_AMOUNT, NET_AMOUNT and TAX_AMOUNT. All of this is finally assembled into the request in JSON format that is sent to the FM Caller operator.

Out of the received schema definition of the function module, not all elements are required for the execution of the function module. For creating the request, the following elements are required:

Sample mapping for Sales Order Header:

In this case the following fields need to be mapped to perform a successful execution:

- Header Data
  - BUYER ID
  - BUYER NAME
  - CURRENCY CODE
  - Persist to DB (set to false by keeping it empty)

- Item Data
  - NOTE (Description Field)
  - GROSS AMOUNT
  - NET AMOUNT
  - TAX AMOUNT
  - DELIVERY DATE
  - QUANTITY

Extract of the mapping for the Sales Order Item (please check the GitHub link to get the full mapping inside the Go operator):

Step 3: The output of the Mapping Operator will provide the final request in JSON format to trigger the execution of the function module using the FM Caller operator.

To use the FM Caller Operator, drag and drop the “Custom ABAP Operator” to the canvas:

Select the ABAP connection of the SAP ABAP system where you created the custom operator and finally choose the Operator in the “ABAP Operator” Selection screen:

Step 4: Receive the response message of the function module, store it in a file, and check the result in a Wiretap operator.
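If the response should be evaluated automatically rather than read in the Wiretap, a downstream operator could scan it for error rows. BAPIs typically report messages in a RETURN table whose TYPE field is `E` (error) or `A` (abort) on failure. The Go sketch below assumes the response JSON exposes that table as a top-level `RETURN` array with `TYPE` and `MESSAGE` keys. That layout, the sample message text and the helper name `firstError` are assumptions for illustration.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// returnRow models the two fields of a return message used here.
type returnRow struct {
	Type    string `json:"TYPE"`
	Message string `json:"MESSAGE"`
}

// response models the assumed layout of the FM Caller output.
type response struct {
	Return []returnRow `json:"RETURN"`
}

// firstError returns the first message of type E or A, if any.
// The boolean reports whether such a message was found.
func firstError(raw []byte) (string, bool, error) {
	var resp response
	if err := json.Unmarshal(raw, &resp); err != nil {
		return "", false, fmt.Errorf("parse response: %w", err)
	}
	for _, row := range resp.Return {
		if row.Type == "E" || row.Type == "A" {
			return row.Message, true, nil
		}
	}
	return "", false, nil
}

func main() {
	// Made-up response, for illustration only.
	raw := []byte(`{"RETURN":[{"TYPE":"E","MESSAGE":"sample error text"}]}`)
	msg, failed, err := firstError(raw)
	switch {
	case err != nil:
		fmt.Println("could not read response:", err)
	case failed:
		fmt.Println("function module reported an error:", msg)
	default:
		fmt.Println("no error rows found")
	}
}
```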

In the wiretap you should now see that the Sales order has been created successfully:

Conclusion

In summary, this blog post should help you adopt and use ABAP function modules with SAP Data Intelligence. As mentioned earlier, the provided material is a foundation that you can adjust and enhance based on your individual needs. In particular, the first part, extracting the schema definition of the function module, is generic and can be adopted for any function module you want to use in your scenario.

The second pipeline can be enhanced further, e.g. by using input data from another data source in the mapping for the function module call, or by providing configuration parameters for the custom “mapping” operator in Go. These are ideas we already have in mind and will consider for a future update of this blog post.

If you have any feedback, please feel free to share it! 😊

Please find below some helpful links and resources for Data Intelligence:

- Follow SAP Data Intelligence for updates

- Visit and bookmark your SAP Data Intelligence community topic page

- Check out questions and answers about SAP Data Intelligence

- Ask a question

- Like / share this blog post with your social network

Kind regards,

Daniel & Michael

Lookup Material and Blog References:

Blogs:

- https://blogs.sap.com/2019/11/04/abap-integration-calling-a-abap-function-module-within-a-sap-data-h...

- https://blogs.sap.com/2019/10/29/abap-integration-for-sap-data-hub-and-sap-data-intelligence-overvie...

Documentation:

- Data Intelligence ABAP Integration User Guide: https://help.sap.com/viewer/1c4ff4486dd04e47b32fe406111bfee8/3.1.latest/en-US
