SAP Datasphere, BW bridge: How to transfer data fr...

Abstract

We have seen many articles and publications about SAP Datasphere in terms of features, connections and greenfield implementation, but many customers have been asking what can be done to protect the investments they have already made in the data of their current BW systems. Is it possible to transfer years of data loads together with the metadata in an automated way? The answer is yes: you don't have to manually reload everything.

My previous blog SAP Datasphere, SAP BW bridge: Demystifying the Remote Conversion provides an introduction to the Remote Conversion, and the SAP Datasphere, SAP BW bridge Runbook describes in detail how to transfer data from SAP BW or SAP BW/4HANA to an SAP Datasphere, BW bridge single tenant. This blog explains how to transfer data to an SAP Datasphere, BW bridge 2-tier tenant landscape.

Scenario: you have an SAP BW or SAP BW/4HANA system (sender system) and plan to transfer its data to SAP Datasphere, BW bridge, which contains a development tenant and a productive tenant (receiver systems).

Overview

In an SAP Datasphere, BW bridge landscape with one development and one productive tenant, it is assumed that you want to populate the productive tenant with the same data as your SAP BW productive system. For that, the SAP BW productive system must be used as the sender system for the data transfer process.

The data transfer process to a 2-tier tenant landscape basically consists of three main steps:

1. Transfer the metadata from the SAP BW productive system (sender) to the SAP Datasphere, BW bridge development system (receiver).

2. Transport the metadata from the SAP Datasphere, BW bridge development system to the SAP Datasphere, BW bridge productive system via git-based CTS (gCTS).

3. Transfer the data from the SAP BW productive system (sender) to the SAP Datasphere, BW bridge productive system (receiver).

Figure 1: Data transfer to an SAP Datasphere, BW bridge 2-tier tenant landscape

Throughout this blog, the following system naming convention is used for simplicity:

- SAP BW productive system (sender) = System A

  - This is the system from which the objects/data model will be transferred via the transfer tool (metadata and data).

- SAP Datasphere, BW bridge development system (receiver) = System B

  - This is the system that will receive the transferred objects/data model via the transfer tool (metadata and, optionally, data) and send the metadata to System C via gCTS (with property development enabled = true).

- SAP Datasphere, BW bridge productive system (receiver) = System C

  - This is the system that will receive the metadata from System B via gCTS and the data from System A via the transfer tool (with property development enabled = false).
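To keep the three roles apart, the landscape can be written down as a small data structure. The following is a hypothetical Python sketch, not an SAP API: the `System` class, its fields and the `STEPS` list are illustrative names that simply encode which system delivers metadata and data to which, and through which channel, as described above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class System:
    """Hypothetical model of one system in the 2-tier landscape (not an SAP API)."""
    name: str
    role: str                            # "sender" or "receiver"
    development: Optional[bool] = None   # BW bridge property "development enabled"; None for the sender
    metadata_from: Optional[str] = None  # which system delivers the metadata, and through which channel
    data_from: Optional[str] = None      # which system delivers the data, and through which channel


LANDSCAPE = {
    "A": System("SAP BW productive system", "sender"),
    "B": System("SAP Datasphere, BW bridge development system", "receiver",
                development=True,
                metadata_from="A via transfer tool",
                data_from="A via transfer tool (optional)"),
    "C": System("SAP Datasphere, BW bridge productive system", "receiver",
                development=False,
                metadata_from="B via gCTS",
                data_from="A via transfer tool"),
}

# The three main steps as (from, to, content, channel), in the order they have to run.
STEPS = [
    ("A", "B", "metadata", "transfer tool"),
    ("B", "C", "metadata", "gCTS"),
    ("A", "C", "data", "transfer tool"),
]
```

Reading `STEPS` top to bottom gives the order of the main steps: System C receives its metadata only from System B, never directly from System A.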

The Remote Conversion to SAP Datasphere, BW bridge is supported for SAP BW from BW 7.3 SP 10 and from SAP BW/4HANA 2021 SP00. See SAP Note 3141688 for details.

Preparation

Getting started with SAP Datasphere, BW bridge involves provisioning and connectivity activities.

After that, and before starting the data transfer process, you need to prepare System B and System C by creating the software component, the ABAP development package and the transport request. These will contain the objects in scope (to be transferred from System A) and enable them to be transported/pulled from System B to System C via gCTS.

In SAP Datasphere, BW bridge, SAP BW bridge objects cannot be stored in the package $TMP. All SAP BW bridge objects have to be assigned to an ABAP development package. This development package must not be created under the package ZLOCAL, since objects in packages under ZLOCAL cannot be transported.
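The two package rules can be expressed as a simple check. This is a minimal sketch under stated assumptions: `is_valid_bridge_package` and the `parent_of` mapping (package to superpackage) are hypothetical names, and the sketch only restates the rules above without querying any SAP system. It treats ZLOCAL itself as non-transportable, as well as everything below it.

```python
from typing import Dict, Optional


def is_valid_bridge_package(package: str, parent_of: Dict[str, Optional[str]]) -> bool:
    """Hypothetical check: may BW bridge objects be assigned to `package`?

    parent_of maps each package name to its superpackage (None at the top).
    """
    if package == "$TMP":  # local package: not allowed in BW bridge
        return False
    current: Optional[str] = package
    seen = set()
    while current is not None:
        if current == "ZLOCAL":  # ZLOCAL or anything below it cannot be transported
            return False
        if current in seen:  # guard against a cyclic hierarchy in the sample data
            raise ValueError(f"cycle in package hierarchy at {current}")
        seen.add(current)
        current = parent_of.get(current)  # walk up to the superpackage
    return True
```

For example, with a hypothetical hierarchy `{"ZSC_STRUCT": None, "ZBW_DEV": "ZSC_STRUCT"}`, the development package `ZBW_DEV` below the structured package passes the check.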

i. Prepare System B

i.1. In System B -> BW bridge cockpit | Create a software component of type Development. This will generate a Git repository for System B.

Figure 2: Manage Software Components - System B

Figure 3: Create a Software Component - System B

i.2. In System B -> BW bridge cockpit | Clone the new software component into System B. Choose Repository Role 'Source – Allow Pull and Push'. This will generate a structured package for that software component.

Figure 4: Clone the software component - System B

The clone process may take a couple of minutes:

Figure 5.1: Clone the Software Component - System B

Figure 5.2: Clone the Software Component - System B

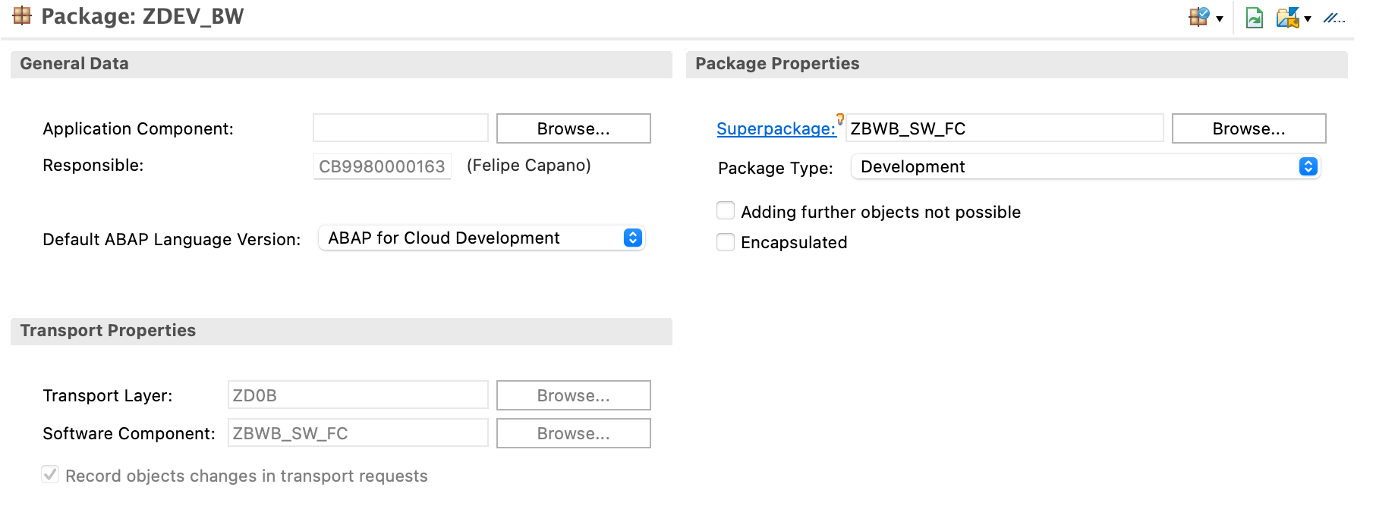

i.3. In System B -> BW bridge project | Create a development package as a sub-package of the structured package that was generated for the software component created in i.1.

Figure 6.1: Create the ABAP development package - System B

Enter the ABAP development package name and associate it with the structured package that was created for the software component (Superpackage).

Figure 6.2: Create the ABAP development package - System B

Figure 6.3: Create the ABAP development package - System B

Figure 6.4: Create the transport request – System B

Figure 7: Structured package that was created for the software component - System B

Figure 8: ABAP development package and software component association - System B

i.4. In System B -> BW bridge cockpit | Add the migration user to the transport request which was created. This is required to allow the transfer of the objects.

Figure 9.1: Add the migration user to the transport request - System B

Figure 9.2: Add the migration user to the transport request - System B

Figure 9.3: Add the migration user to the transport request - System B

Figure 9.4: Add the migration user to the transport request - System B

The migration user name should be available for selection; you can also check it in the BW bridge cockpit -> Communication Arrangements.

Figure 10: Communication Arrangements, migration user name - System B

For the Remote Conversion, two communication arrangements based on the communication scenarios SAP_COM_0691 (SAP DWC BW Bridge - Migration Integration) and SAP_COM_0818 (SAP DWC BW Bridge - Data Migration Integration) are required.

Figure 11: Communication Arrangements SAP_COM_0691 and SAP_COM_0818 - System B

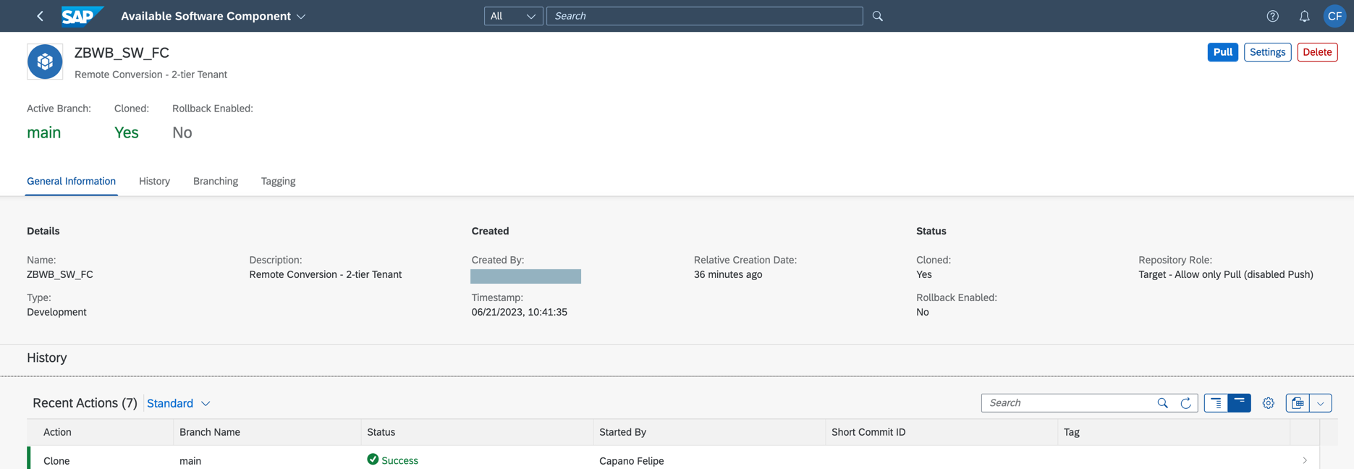

ii. Prepare System C

ii.1. In System C | Clone the software component into System C. Choose Repository Role 'Target – Allow Only Pull (Disabled Push)' and switch Rollback Mechanism to 'Disabled – Allow also invalid commits to be imported'.

Figure 12.1: Clone the Software Component - System C

Figure 12.2: Clone the Software Component - System C

Figure 12.3: Clone the Software Component - System C
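The clone settings chosen in steps i.2 and ii.1 differ in the repository role and the rollback mechanism. The sketch below summarizes them as a hypothetical Python dictionary (not an SAP API); the labels are copied from the dialogs, and the rollback setting for System B is left as `None` because this walkthrough does not change it.

```python
# Hypothetical summary of the clone settings from steps i.2 and ii.1 (not an SAP API).
CLONE_SETTINGS = {
    "B": {
        "repository_role": "Source – Allow Pull and Push",
        "push_allowed": True,
        "rollback_mechanism": None,  # not changed in this walkthrough
    },
    "C": {
        "repository_role": "Target – Allow Only Pull (Disabled Push)",
        "push_allowed": False,
        "rollback_mechanism": "Disabled – Allow also invalid commits to be imported",
    },
}


def can_push(system: str) -> bool:
    """Only the development system (B) pushes to the git repository; C only pulls."""
    return CLONE_SETTINGS[system]["push_allowed"]
```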

The preparation activities for System B and System C are now complete, and the data transfer steps can start.

Data transfer process

Assumptions:

- The BW objects were created in System A and will be transferred to System B and System C.

- After the metadata transfer to System B, you have the option to also transfer the data to System B (Figure 1, path 1.a). This is optional and can be useful if you intend to build System B with the productive data from System A. If this is not desired, you can skip it and proceed with step 2. Another option to populate System B is to connect it to your actual SAP BW development system and load the data from there (outside the Remote Conversion); for simplicity, the SAP BW development system is not shown in Figure 1. In short, the data transfer from System A to System B is optional, but the metadata transfer is mandatory: it is not possible to transfer the metadata directly to System C via the transfer tool (this happens via gCTS).

Metadata: it will be transferred from System A to System B via the Conversion Cockpit (transfer tool) and subsequently transported from System B to System C via gCTS.

Data: after the gCTS transport, the data will be transferred from System A to System B (optional) and to System C. This is done via the transfer tool. The data transfer processes to System B and System C are very similar, with slight differences that are explained further below.

A Remote Conversion to an SAP Datasphere, BW bridge productive system requires the creation of two (2) RFCs:

- One (1) from System A to System C - it will be used for the data transfer.

- One (1) from System A to System B - it will be used for the metadata transfer.

1. Transfer the metadata from System A to System B

The Remote Conversion performs a sequence of tasks, one of which is the metadata transfer; in other words, the metadata transfer is a subset of the Remote Conversion. After the scope collection, the objects and associated tables are collected in System A, mapped, and transferred to the corresponding tables in System B via RFC. For Shell and Remote Conversions, an RFC connection from the sender system to the receiver system is required. This RFC connection is established using the Cloud Connector.

The SAP Datasphere, SAP BW bridge Runbook uses a data model to illustrate the execution of all data transfer tasks for a single tenant landscape. The same data model is used in this blog to describe the subsequent steps for a 2-tier tenant landscape data transfer.

The scope contains: 1 InfoCube, 1 ADSO, 1 DTP, 1 Transformation and 19 InfoObjects.

The detailed tasks to perform the Remote Conversion from System A to System B are described in chapter 3 of the Runbook.

Figure 13: Metadata collected in System A (transfer tool)

Figure 14: Metadata transferred from System A to System B (transfer tool)

2. Transport the metadata from System B to System C via git-based CTS (gCTS)

With this step, you transport the metadata that was transferred from System A to System B on to System C. In SAP Datasphere, SAP BW bridge, transports are carried out via gCTS (git-based Change and Transport System); see SAP Help for details. This transport process is controlled via the software component. Initially, all objects assigned to a software component are transferred from the development environment to the productive environment; after that, gCTS provides a delta mechanism. This means that when the software component is transported again, only new objects and objects with a more recent time stamp (changed objects) are transferred to the productive environment.

Please note that any object creation, activation or modification in SAP Datasphere, BW bridge must be made in the development environment, and gCTS is the channel to transport such objects from the development git repository to the productive git repository. In SAP BW bridge, it is not possible to assign objects to the “local” package $TMP. All objects (whether they are created via Business Content activation, via Conversion/Migration from an SAP BW 7.x or SAP BW/4HANA system, or manually in SAP BW bridge) have to be assigned to an ABAP package (type development). The ABAP package and the transport request should be assigned to the software component.
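The delta behavior of gCTS can be illustrated with a simplified model. This is a conceptual Python sketch, assuming each object carries a last-change time stamp; `objects_to_transport` is a hypothetical helper, and real gCTS works on git commits of the software component rather than on such a dictionary.

```python
from datetime import datetime
from typing import Dict, Optional, Set


def objects_to_transport(dev_objects: Dict[str, datetime],
                         last_pulled: Optional[Dict[str, datetime]]) -> Set[str]:
    """Simplified model of the gCTS delta behavior described above.

    dev_objects: object name -> last-change time stamp in the development repository.
    last_pulled: the same mapping as of the previous transport, or None for the first one.
    """
    if last_pulled is None:  # initial transport: all objects of the software component
        return set(dev_objects)
    return {
        name for name, changed_at in dev_objects.items()
        if name not in last_pulled              # new object
        or changed_at > last_pulled[name]       # changed object (more recent time stamp)
    }
```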

2.1. In System B -> BW bridge project, Transport Organizer | Release all tasks of the transport request. Subsequently, release the transport request. By releasing the transport, all objects and changes of that transport will be pushed into the git repository.

Our transport request is *900416 and it has 2 tasks - one assigned to my user (*900417) and another one assigned to the migration user (*900418).

Figure 15.1: Release the transport request tasks in System B

Figure 15.2: Release the transport request tasks in System B

Figure 15.3: Release the transport request in System B

Figure 16: Transport request was released in System B

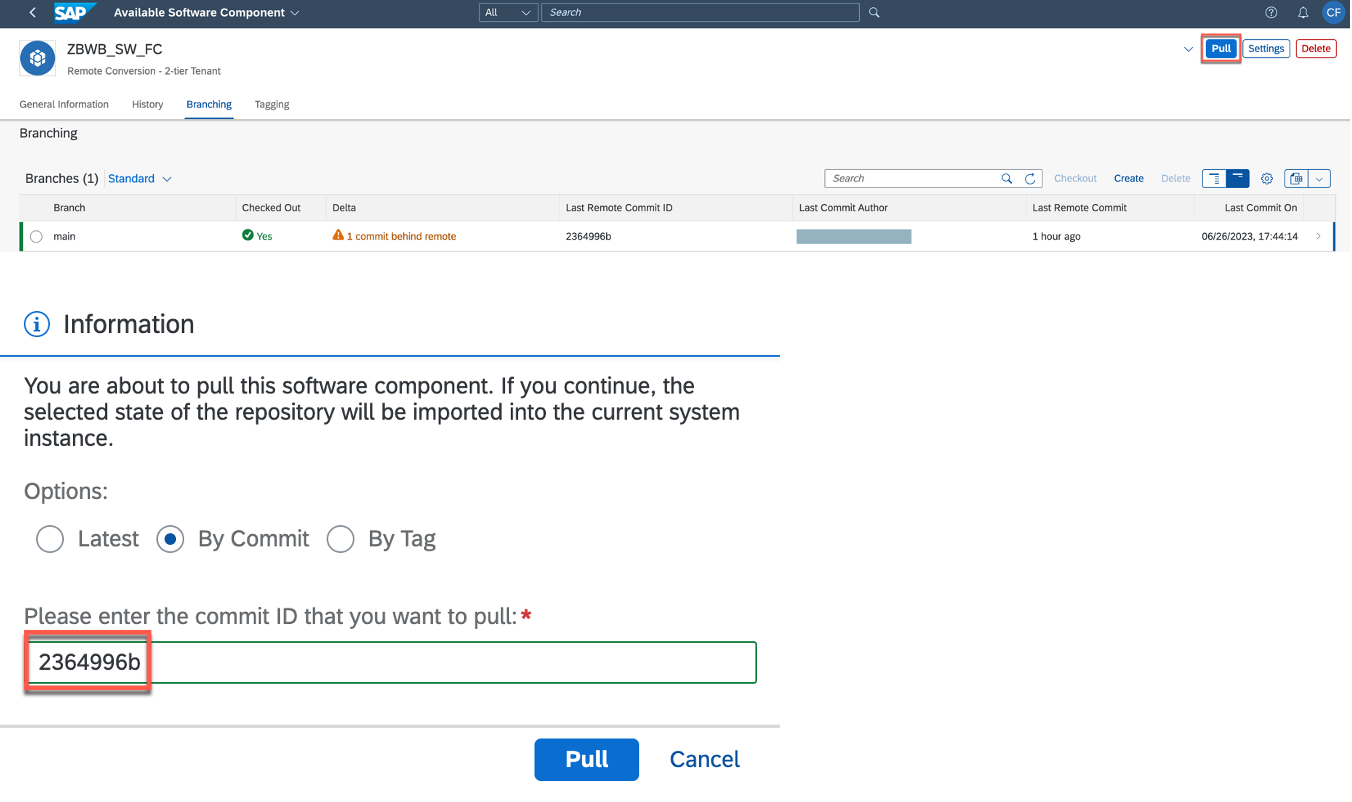
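The order in step 2.1 (release the tasks first, then the request) can be captured in a small model. This is a hypothetical Python sketch, not the Transport Organizer API: the `Task` and `TransportRequest` classes and the owner names are illustrative, and only the request and task numbers are taken from the example above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    number: str
    owner: str
    released: bool = False

    def release(self) -> None:
        self.released = True


@dataclass
class TransportRequest:
    number: str
    tasks: List[Task] = field(default_factory=list)
    released: bool = False

    def release(self) -> None:
        """Release the request; this model refuses while any task is still open."""
        open_tasks = [t.number for t in self.tasks if not t.released]
        if open_tasks:
            raise RuntimeError(f"release these tasks first: {open_tasks}")
        # In BW bridge, releasing the request pushes its objects to the git repository.
        self.released = True


# Usage with the request from the example (owner names are hypothetical).
request = TransportRequest("*900416", [Task("*900417", "DEVELOPER"),
                                       Task("*900418", "MIGRATION_USER")])
for task in request.tasks:
    task.release()
request.release()
```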

2.2. In System C -> BW bridge cockpit | Pull the software component into System C. By pulling the software component, all changes since the last pull will be synchronized.

Figure 17: Manage the software component - System C

Clicking on Branch, you can see the transport request and Commit ID which are being pulled.

Figure 18: Branching - System C

Figure 19: List of committed objects to be pulled - System C

There are different options for the pull: Latest, By Commit or By Tag. In this example, I use the By Commit option.

Figure 20.1: Pull the software component - System C

Figure 20.2: Pull the software component - System C

Figure 20.3: Pull the software component in progress - System C

Figure 20.4: Pull the software component general information - System C

Figure 21.1: Pull of the software component is finished - System C

Figure 21.2: Pull of the software component is finished - System C

The objects/data model were successfully transported to System C.

Figure 22: Metadata transported to System C (gCTS)
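The three pull options in step 2.2 differ in which commit they target. A conceptual sketch, assuming the commit history is available as an oldest-to-newest list of (commit ID, tags) pairs; `select_commit` and the sample IDs are hypothetical, and this is not how the BW bridge cockpit is implemented.

```python
from typing import List, Optional, Sequence, Tuple

Commit = Tuple[str, Sequence[str]]  # (commit ID, tags on that commit)


def select_commit(history: List[Commit], mode: str, value: Optional[str] = None) -> str:
    """Return the commit ID a pull would target (history ordered oldest to newest)."""
    if not history:
        raise ValueError("empty history")
    if mode == "Latest":
        return history[-1][0]  # newest commit
    if mode == "By Commit":
        for commit_id, _ in history:
            if commit_id == value:
                return commit_id
        raise ValueError(f"unknown commit {value}")
    if mode == "By Tag":
        for commit_id, tags in reversed(history):  # newest commit carrying the tag
            if value in tags:
                return commit_id
        raise ValueError(f"unknown tag {value}")
    raise ValueError(f"unknown mode {mode}")
```

Pulling By Commit pins the productive tenant to exactly the commit that was reviewed, even if newer commits have been pushed from System B in the meantime.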

3. Transfer the data from System A to System C

To transfer the data from System A to System C, a new conversion package must be created in System A. This process is similar to the one used previously to transfer the same data from System A to System B.

It is possible to create and run a productive conversion package without a previous run of a development conversion package. This means that you can skip the Remote Conversion from System A to System B and run it only from System A to System C.

You can follow the same steps as described in chapter 3 of the Runbook. There are just a few differences to consider when running a conversion package to an SAP Datasphere, BW bridge productive environment.

3.1. Define RFC Destinations: here you should enter the RFC which was created from System A to System C (see Figure 23).

Figure 23: RFC from System A to System C

For the previous conversion package to the SAP Datasphere, BW bridge development system (Runbook), we used the RFC from System A to System B (see Figure 24).

Figure 24: RFC from System A to System B

3.2. Collect Scope for Transfer (Data flow): select 'Rem. Transfer Cloud Dev. System' and enter the RFC from System A to System B.

Figure 25: Collect Scope for Transfer (Data flow) - productive conversion package

Figure 26: Collect Scope for Transfer (Data flow) - RFC destination for the metadata

Please note we are using 2 (two) RFCs:

- from System A to System C in Define RFC Destinations - it will be used for the data transfer.

- from System A to System B in Collect Scope for Transfer (Data flow) - it will be used for the metadata transfer.

Although the metadata was already transferred from System A to System B (section 1), we need to enter this RFC again for the productive conversion package to ensure consistency for the data to be transferred to System C.
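Because the productive conversion package needs two different RFC destinations in two different tasks, it can help to write the mapping down and check it before starting. A hypothetical Python sketch: the destination names and helper functions are assumptions for illustration; use the destinations created in your own landscape.

```python
from typing import Dict

# Hypothetical RFC destination names; use the destinations created in your own landscape.
RFC_A_TO_B = "Z_RFC_SYSTEM_B"  # System A -> System B (development tenant)
RFC_A_TO_C = "Z_RFC_SYSTEM_C"  # System A -> System C (productive tenant)


def productive_package_rfcs() -> Dict[str, str]:
    """Which RFC each task of the productive conversion package uses (sections 3.1 and 3.2)."""
    return {
        "Define RFC Destinations": RFC_A_TO_C,                 # used for the data transfer
        "Collect Scope for Transfer (Data flow)": RFC_A_TO_B,  # used for the metadata
    }


def validate(rfcs: Dict[str, str]) -> None:
    """Catch a likely mix-up: the same destination entered in both tasks."""
    if rfcs["Define RFC Destinations"] == rfcs["Collect Scope for Transfer (Data flow)"]:
        raise ValueError("data and metadata RFCs must point to different tenants (C and B)")
```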

3.3. Define Object Mapping and Store Object List: go to Parameters to display the objects list and specify the ABAP development package and transport request. Note they are the same as previously used when the transfer from System A to System B was done.

Figure 27: Define Object Mapping and Store Object List - productive conversion package

Figure 28: Define Object Mapping and Store Object List - ABAP package and transport request

3.4. Transfer Metadata to Receiver System: this task will fail on the first attempt because the transfer tool performs a validation check on whether the metadata was successfully transported to System C (via gCTS, as described in section 2). This is required because the data transfer can only happen if the corresponding metadata has already been transported and is consistent in System C.

If the gCTS transport has not been done yet, do it at this point.

We can see warning logs indicating that the objects are "already active". This is because we already transferred the metadata to System B (via the transfer tool, as described in section 1). Had we not run a Remote Conversion from System A to System B, the logs would be green, as the metadata would have been transferred for the first time.

Figure 29.1: Transfer Metadata to Receiver System - productive conversion package

You can resume the Task List Run and proceed.

Figure 29.2: Transfer Metadata to Receiver System - productive conversion package

Figure 29.3: Transfer Metadata to Receiver System - productive conversion package

Figure 29.4: Transfer Metadata to Receiver System - productive conversion package
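The checkpoint in task 3.4 boils down to one rule: data may only follow once every object in scope is already present in System C. The following is a simplified, hypothetical model of that behavior (not the transfer tool's code); the function and object names are illustrative.

```python
from typing import Iterable, List, Set


def missing_in_target(scope: Iterable[str], active_in_c: Set[str]) -> List[str]:
    """Objects of the conversion scope that are not yet active in System C."""
    return sorted(set(scope) - active_in_c)


def transfer_metadata_check(scope: Iterable[str], active_in_c: Set[str]) -> None:
    """Simplified model of the checkpoint in task 3.4: stop until gCTS has delivered everything."""
    missing = missing_in_target(scope, active_in_c)
    if missing:
        raise RuntimeError(f"transport these objects to System C via gCTS first: {missing}")
```

Once the gCTS pull has completed, the same check passes and the Task List Run can be resumed.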

Apart from the differences described in the sections 3.1 to 3.4, all other tasks can be executed in the same way as done for the Remote Conversion from System A to System B.

In the Process Monitor of the productive conversion package, all phases are finished. The phase Systems Settings After Migration was completed only for the purpose of this explanation: it contains tasks that delete the clusters and the InfoCube shadow tables. In real projects, it is recommended to keep these tasks open for some time, until all migration validations are completed.

Figure 30: Process monitor - productive conversion package

The data of the 2 InfoProviders was transferred to System C.

Figure 31.1: Manage Datastore Objects - System C

Figure 31.2: Manage Datastore Objects - System C

Figure 32.1: Data Preview - System C

Figure 32.2: Data Preview - System C

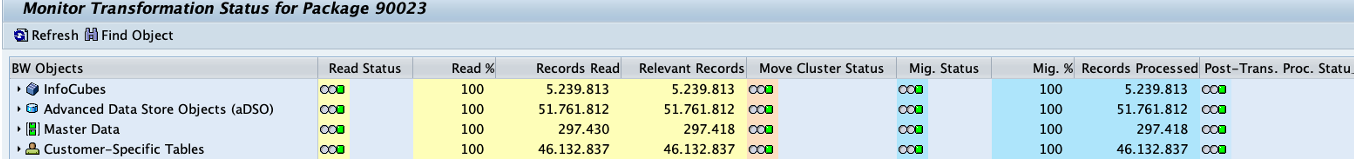

In Monitor: Transformation Status (Optional), a dashboard of the objects which had data transferred to System C can be displayed.

Figure 33: Monitor: Transformation Status (Optional)

In View Time-Related Migration Statistics (Optional), you can see statistics about the data transfer process. The scope contained 130 tables (/BI* and RS*) with 100 million records. Five (5) BTC work processes were used, resulting in a total runtime of approx. 35 minutes.

Figure 34: Data transfer statistics
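The statistics above translate into a rough average throughput. This worked example assumes the runtime was exactly 35 minutes and that the load was spread evenly over the 5 work processes; since the runtime is approximate, treat the result as an order of magnitude, not a benchmark.

```python
from typing import Tuple


def average_throughput(records: int, minutes: float, work_processes: int) -> Tuple[float, float]:
    """Average records per second, overall and per work process (assumes an even split)."""
    seconds = minutes * 60
    overall = records / seconds
    return overall, overall / work_processes


overall, per_process = average_throughput(100_000_000, 35, 5)
print(f"{overall:,.0f} records/s overall, {per_process:,.0f} records/s per work process")
# prints: 47,619 records/s overall, 9,524 records/s per work process
```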

Conclusion

The Remote Conversion is not a new solution: it has been used successfully by customers for SAP BW/4HANA migrations for many years. Recently, it also became available for SAP Datasphere, BW bridge. Apart from the specifics of the receiver systems (on-premise or cloud), one of the main differences between a Remote Conversion to SAP BW/4HANA and one to SAP Datasphere, BW bridge is the approach for a productive run. For SAP BW/4HANA, the test cycles follow a 1:1 relation (DEV to DEV, PROD to PROD, etc.). For SAP Datasphere, BW bridge, any metadata activation, creation or modification should go through the development tenant and gCTS, and the data through the transfer tool. This is why the sender BW or BW/4HANA system should be the productive one if you intend to build a productive SAP Datasphere, BW bridge tenant.

The Remote Conversion to SAP Datasphere, BW bridge represents the end-to-end process: metadata + data transfer to the development tenant, metadata + data transfer to the productive tenant, and delta queue cloning + synchronization. I have often observed project teams overlook the Remote Conversion option and decide on other paths (greenfield, Shell), perhaps because they do not know its value or how to use it.

Take a simple example: an SAP BW system with 5 TB and 50 thousand objects, of which 250 are delta DataSources.

- Greenfield: all objects have to be recreated manually in SAP BW bridge, and all DataSource delta queues have to be reinitialized and loaded manually, plus the load of the full DataSources. With an SAP Datasphere, BW bridge 2-tier tenant landscape, the data loads have to be done manually for both the development and productive tenants.

- Shell Conversion: the object metadata can be transferred to SAP BW bridge via the transfer tool, but all DataSource delta queues have to be reinitialized and loaded manually, plus the load of the full DataSources. With an SAP Datasphere, BW bridge 2-tier tenant landscape, the data loads have to be done manually for both the development and productive tenants.

- Remote Conversion: the objects - metadata and data - can be transferred to SAP BW bridge via the transfer tool, to both development and productive tenants. The delta queues are cloned and synchronized, and no reinitialization is needed. The 5 TB of data is transferred at table level, bypassing the standard data load application layer.

The decision to use the Remote Conversion involves many variables: size of the system, number of BW systems, number of objects, number of source systems, whether it's a standalone or ERP-driven project (e.g. a parallel S/4HANA conversion), time, etc. There is no magic recipe, and it is crucial to properly assess the as-is situation of the landscape/business in order to select the most suitable transition path to SAP Datasphere. A Readiness Check is recommended so that you know the level of effort required to make the system ready for the transition.

The main decision is whether SAP Datasphere, BW bridge is the right place for you to land. If it is, there are different roads for this journey, and the Remote Conversion should definitely be considered as one of the options.

If you have any questions or comments, feel free to post them here or contact me directly.

Thank you to my colleagues Thomas Rinneberg, Horatiu-Zeno Simon, Oliver Thomsen and Dirk Janning for your continuous valuable contributions and support.
