- A journey of discovery and a prime example for uti...

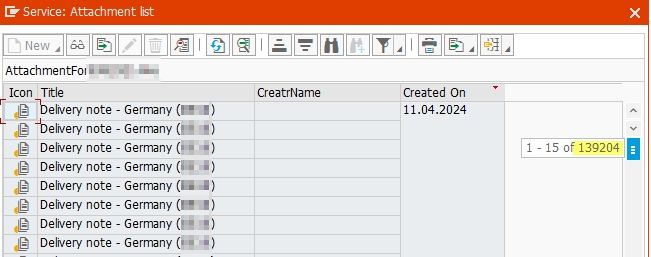

I recently got pulled into trying to resolve a helpdesk ticket raised by a user department because they had noticed something "off" with the delivery note attachments on several deliveries. For some unknown reason, some deliveries kept getting the same PDF attached over and over again - and not just a few times, but up to almost 140,000 times!

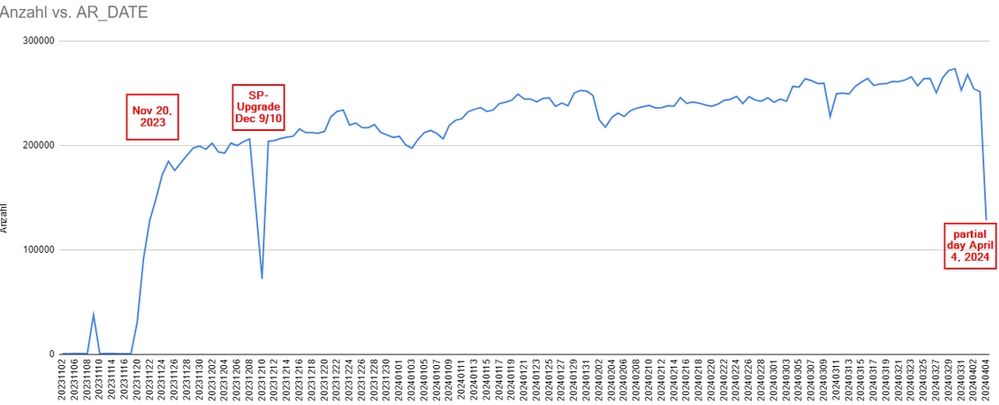

Collecting information

To begin with, we didn't really know where these were coming from, and our only lead was the many entries that were also getting added to the ArchiveLink table TOA01. There, we noticed a big jump in daily additions around November 20. Before then, daily additions had been in the three-digit range; afterwards, they quickly jumped to 250K entries per day.

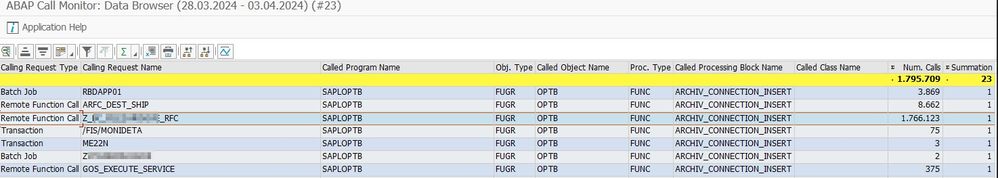
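To illustrate the kind of check behind that chart, here is a minimal Python sketch that counts entries per storage date and flags the first day on which the count jumps. It assumes the storage dates have already been exported from TOA01 into a plain list; the function names, the factor of 10 and the sample dates are made up for illustration and are not taken from our system.

```python
from collections import Counter
from datetime import date


def daily_additions(storage_dates):
    """Count how many link entries were stored on each day, sorted by day."""
    return dict(sorted(Counter(storage_dates).items()))


def first_jump(counts_by_day, factor=10):
    """Return the first day whose count is at least `factor` times the
    previous day's count, or None if there is no such day."""
    days = list(counts_by_day)
    for prev, cur in zip(days, days[1:]):
        if counts_by_day[prev] > 0 and counts_by_day[cur] >= factor * counts_by_day[prev]:
            return cur
    return None


# Made-up sample data: two quiet days followed by a sudden spike.
sample = (
    [date(2000, 1, 1)] * 3
    + [date(2000, 1, 2)] * 4
    + [date(2000, 1, 3)] * 60
)
counts = daily_additions(sample)
jump_day = first_jump(counts)
print(counts)
print("First jump on:", jump_day)
```

A simple day-over-day ratio like this is enough when the change is as drastic as going from hundreds to hundreds of thousands of entries; for subtler changes a rolling average would probably be the better baseline.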

I then did a where-used analysis for table TOA01 and found function module ARCHIVE_CONNECTION_INSERT, which looked like a promising lead just based on its name! Luckily, we have the SAP Call Monitor (SCMON) active in our production environments, so I went there to see if there were any "interesting" hits for the function module. Not too surprisingly, there was a very clear outlier within the 7 days' worth of data readily available:

So, I checked the RFC-enabled function module of ours that stood out. It looked fine logic-wise and hadn't been changed recently, so it couldn't really be the underlying reason for what was happening in the system. However, I now had an affected piece of code to take a closer look at.

Dynamic logpoints to the rescue!

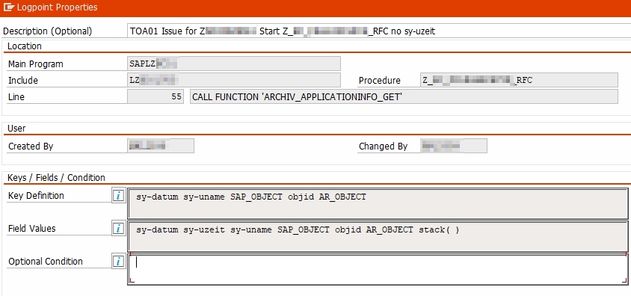

As far as I'm concerned, dynamic logpoints (SDLP) are one of the best options for troubleshooting issues like the one at hand, where you can't capture the process stand-alone by starting a report or transaction. So, I identified a suitable place in the code and defined a logpoint for a few relevant fields:

It didn't take long for a very distinct pattern to emerge: most delivery numbers showed up with slowly increasing KEY COUNTS (counted per combination of the fields in the Key Definition), while for a few others the numbers went up far more quickly.

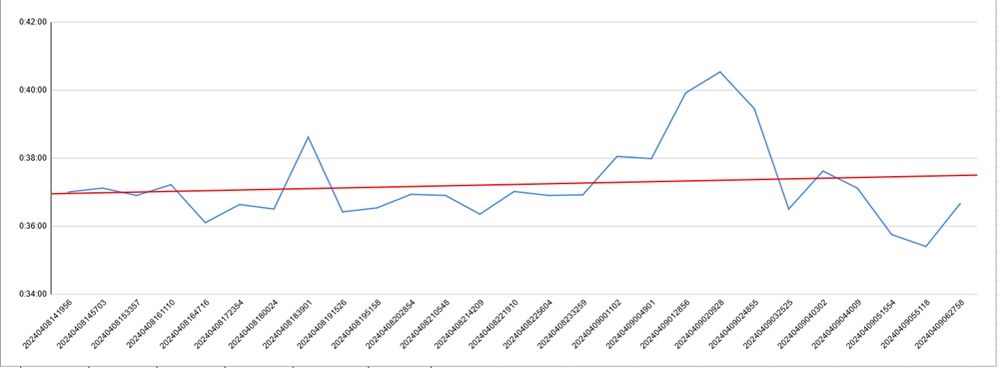

Slow cycle, with roughly 36 to 40 minutes between additions:

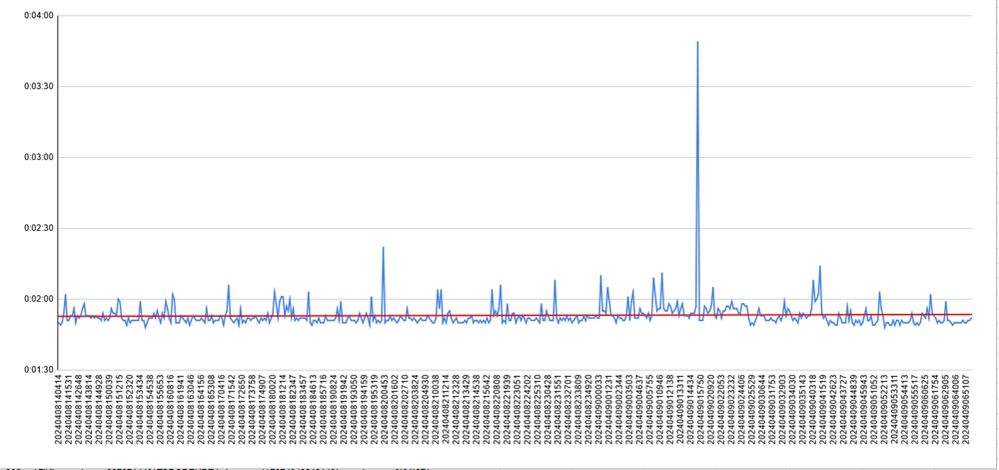

Fast cycle, with roughly 1m50s to 2m30s between additions (ignoring the outlier):

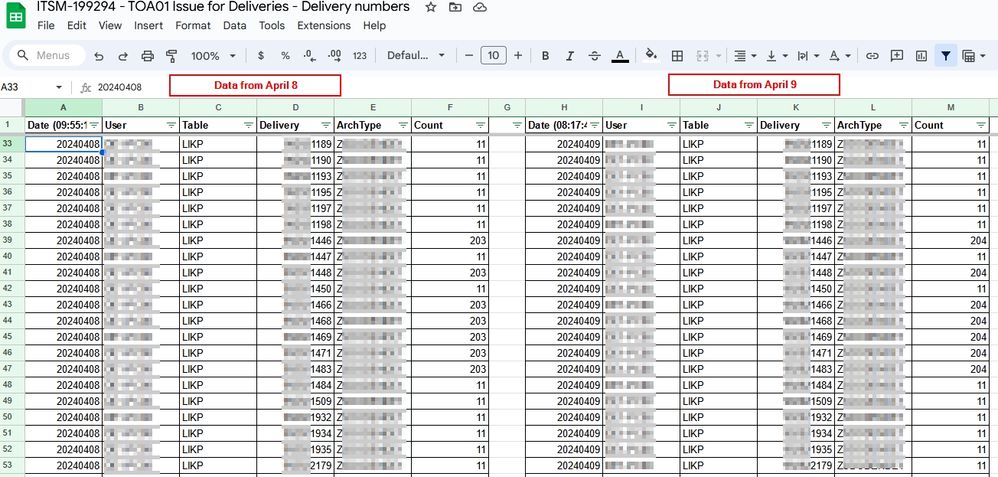
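The two cycles above can also be told apart programmatically once the logpoint timestamps are available outside the system. The following Python sketch labels a delivery by the median gap between its hits; the 10-minute threshold, the function name and the sample timestamps are assumptions chosen for illustration, not values from the actual logpoint output.

```python
from statistics import median


def classify_cycle(hit_times_s, threshold_s=600):
    """Label a delivery as 'fast' or 'slow' by the median gap (in seconds)
    between consecutive logpoint hits. The median keeps a single outlier
    from distorting the result."""
    gaps = [later - earlier for earlier, later in zip(hit_times_s, hit_times_s[1:])]
    if not gaps:
        return "unknown"
    return "fast" if median(gaps) < threshold_s else "slow"


# Made-up hit times in seconds since the logpoint was activated.
hits = {
    "DELIVERY_A": [0, 2200, 4500, 6800],      # gaps of roughly 37 to 38 minutes
    "DELIVERY_B": [0, 120, 250, 3000, 3130],  # gaps of about 2 minutes plus one outlier
    "DELIVERY_C": [0],                        # a single hit gives no gap to judge
}
labels = {delivery: classify_cycle(times) for delivery, times in hits.items()}
print(labels)
```

With gaps of around 2 minutes on one side and more than 35 minutes on the other, almost any threshold in between would separate the two groups.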

After I had watched this for a few days, the pattern proved eerily consistent: it was roughly possible to tell when the numbers would go up again for a delivery simply by knowing whether it was stuck in the slow or the fast cycle! Just how regular the pattern was can be seen in this screenshot with only partially masked delivery numbers. I had the dynamic logpoints active for about 8 hours each day, during which time they were triggered about 74K times each:

Resolution of the issue

By that time, we had collected more than enough data to be "fairly" certain that the root cause of the issue was outside our SAP system and lay with one of our external logistics partners, who kept re-sending the same delivery notes over and over again instead of just once. After we got in touch with them, the issue was quickly resolved: the root cause of all of this was a local C-drive running out of space with nobody noticing! Once that was tackled on their end, the number of transmitted delivery notes went back down to pre-issue levels immediately!

Next steps

Now that we have successfully stopped the build-up of unwanted attachments, we need to find an orderly way to get rid of the millions of obsolete ones: for each impacted delivery, one attachment obviously has to stay intact, while all the duplicates get deleted. I have already identified one function module that will most likely be helpful for this task: ARCHIV_DELETE_META. However, I'll have to investigate how best to get rid of that many entries without causing issues for the system through the sheer number of updates.
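To make the idea of "keep one, delete the rest, in manageable portions" a bit more concrete, here is a small Python sketch of the planning step only. It assumes the duplicate links have been exported as (delivery, document ID) pairs; the function name, the batch size and the sample IDs are hypothetical, and the actual deletion call in the SAP system is deliberately not shown.

```python
from collections import defaultdict


def plan_cleanup(links, batch_size=1000):
    """Group (delivery, doc_id) pairs by delivery, keep the first document
    seen for each delivery, and split all remaining ones into batches."""
    by_delivery = defaultdict(list)
    for delivery, doc_id in links:
        by_delivery[delivery].append(doc_id)

    # Which document counts as "first" depends purely on the input order.
    keep = {delivery: docs[0] for delivery, docs in by_delivery.items()}
    to_delete = [
        (delivery, doc_id)
        for delivery, docs in by_delivery.items()
        for doc_id in docs[1:]
    ]
    batches = [
        to_delete[start:start + batch_size]
        for start in range(0, len(to_delete), batch_size)
    ]
    return keep, batches


# Made-up sample: two deliveries with duplicate attachments.
links = [("D1", "a"), ("D1", "b"), ("D1", "c"), ("D2", "x"), ("D2", "y")]
keep, batches = plan_cleanup(links, batch_size=2)
print("Keep:", keep)
print("Delete in batches:", batches)
```

Working through the deletions one batch at a time, with a commit after each batch, is one way to keep the load on the system predictable; the right batch size would have to be found by testing.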

So, here is a question for you: have you by any chance already had to tackle a comparable clean-up of ArchiveLink attachments and, if so, am I on the right track with ARCHIV_DELETE_META, or are there other/better options available? If you have any suggestions, please mention them in the comments. Thanks!
