- Generating handwritten digits with a Generative Ad...

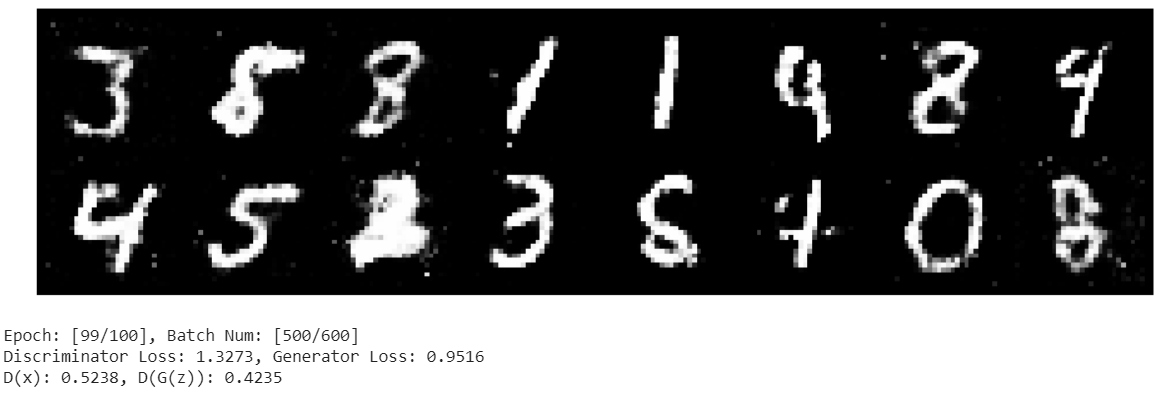

Ladies and gentlemen, behold:

A number eight! Generated by a computer! Are you impressed?

I guess not. And that’s ok.

As you may know, I’m super enthusiastic about Generative Adversarial Networks, or GANs. This is the third and final post in the series; the previous posts looked at deepfakes and at how GANs work. In this post I share the results of a GAN I ran locally. It generates handwritten digits of a quality high enough that the discriminator struggles to tell the real samples from the generated ones. The goal is to see how the network behaves on a very controlled and relatively simple dataset.

The sample data comes from the MNIST dataset, which contains a training set of 60,000 samples. The Python code I used was originally written by Diego Gomez Mosquera and is linked in the sources at the end of this post.

My code alterations are insignificant and only serve to make it run in my own Jupyter Notebook. I have not tried to run it on SAP Cloud Platform Cloud Foundry, but if you see a reason to do so, please report your findings.

I ran the GAN for 100 epochs, kept the other values as suggested by the original code, and used random noise as input to the generator.
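To give a feel for what "random noise as input" and "100 epochs" mean in numbers, here is a minimal sketch in plain Python. The noise vector length of 100 and the batch size of 100 are assumptions chosen for illustration, not values stated in this post, and `sample_noise` is a hypothetical helper rather than a function from the original code.

```python
import random

LATENT_DIM = 100        # assumed length of each noise vector (not stated in the post)
BATCH_SIZE = 100        # assumed mini-batch size (not stated in the post)
TRAIN_SAMPLES = 60_000  # MNIST training set size, as given in the post
EPOCHS = 100            # number of epochs, as given in the post


def sample_noise(batch_size, latent_dim, rng):
    """Return batch_size noise vectors drawn from a standard normal distribution."""
    return [[rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
            for _ in range(batch_size)]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
noise_batch = sample_noise(BATCH_SIZE, LATENT_DIM, rng)

# One epoch is one full pass over the training set.
batches_per_epoch = TRAIN_SAMPLES // BATCH_SIZE
total_updates = batches_per_epoch * EPOCHS

print(len(noise_batch), len(noise_batch[0]))  # 100 100
print(batches_per_epoch, total_updates)       # 600 60000
```

Under these assumed settings, the generator turns each noise vector into one candidate digit image, and both networks are updated 60,000 times over the full run.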

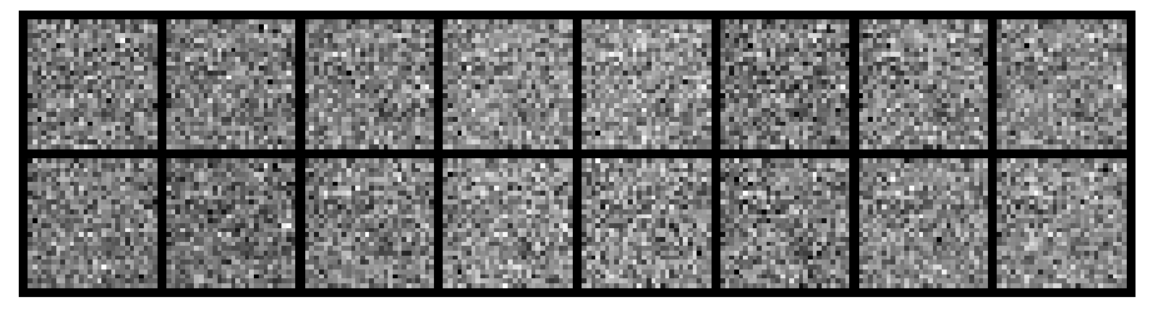

The first epoch shows the random noise:

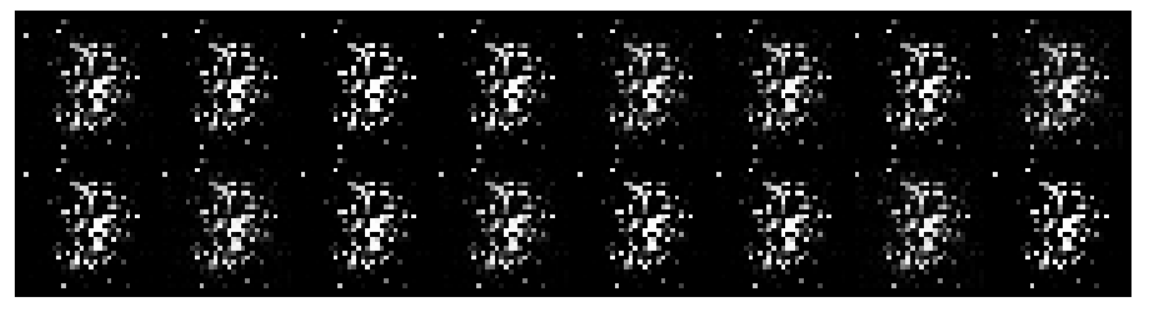

Already after two epochs, we can see something is forming:

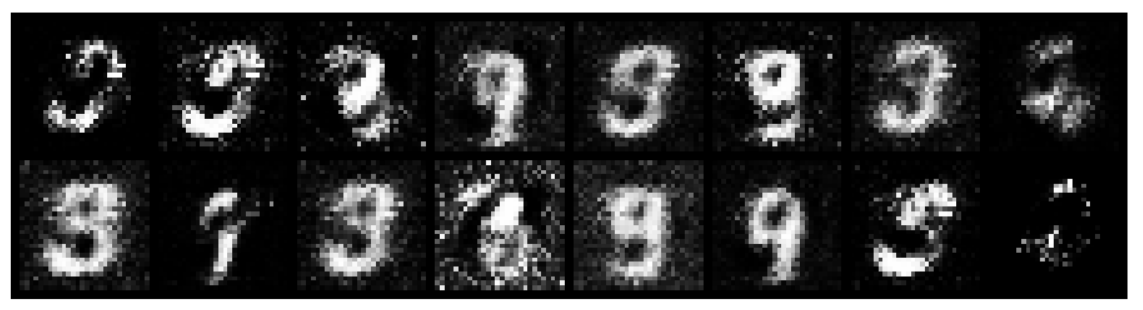

After 20 epochs, something resembling numbers is quite visible in the output:

The final result after 100 epochs is quite good:

Results after 100 epochs. The discriminator loss is higher than the generator’s, as the generator is now good at its job and the discriminator is being fooled more often.
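The relationship between the two losses can be illustrated with a small sketch. It assumes both losses are binary cross-entropy, with the discriminator loss summed over real and generated samples and the generator rewarded when its fakes are scored as real. That is a common GAN setup, but it is an assumption here, and the discriminator scores below are made-up numbers, not results from my run.

```python
import math


def bce(prediction, target):
    """Binary cross-entropy for one prediction in (0, 1) against a 0/1 target."""
    eps = 1e-12  # clamp to avoid log(0)
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1 - target) * math.log(1.0 - p))


def mean(values):
    return sum(values) / len(values)


def gan_losses(d_on_real, d_on_fake):
    """Return (discriminator_loss, generator_loss) from discriminator scores.

    d_on_real: scores for real images, where 1.0 means "looks real".
    d_on_fake: scores for generated images.
    """
    # The discriminator wants real images scored 1 and fakes scored 0.
    d_loss = mean([bce(p, 1) for p in d_on_real]) + mean([bce(p, 0) for p in d_on_fake])
    # The generator wants its fakes scored as real.
    g_loss = mean([bce(p, 1) for p in d_on_fake])
    return d_loss, g_loss


# Hypothetical scores early in training: fakes are easy to spot.
early = gan_losses(d_on_real=[0.9, 0.95], d_on_fake=[0.05, 0.1])
# Hypothetical scores late in training: fakes are often taken for real.
late = gan_losses(d_on_real=[0.6, 0.55], d_on_fake=[0.6, 0.7])

print("early (d_loss, g_loss):", early)
print("late  (d_loss, g_loss):", late)
```

With these illustrative scores, the generator loss is the larger one early on, and the discriminator loss becomes the larger one once the fakes start receiving high scores.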

So it worked! We created more or less believable images of numbers. Running the training for another 100 epochs would probably have fixed some of the remaining defects. Going from simple, handwritten digits to pieces of art or complex photographs will require a different network layout, but the concept is the same: a forger, an expert and the competition between them.

So far, this has not been put into an SAP context, or even related to an SAP business area or process. Maybe you have an idea of how this technique can be applied to solve an existing problem or to create new opportunities. If so, please comment, share and contribute!

/Simen

Twitter: https://twitter.com/simenhuuse

LinkedIn: https://www.linkedin.com/in/simenhuuse/

Source:

- Diego Gomez Mosquera, “GANs from Scratch 1: A deep introduction. With code in PyTorch and TensorFlow” URL: https://medium.com/ai-society/gans-from-scratch-1-a-deep-introduction-with-code-in-pytorch-and-tenso...

- Goodfellow et al. 2014, “Generative Adversarial Nets” URL: https://arxiv.org/pdf/1406.2661.pdf
