
06-13-2022

4:17 PM

I worked on this project with my colleagues gianluigi.bagnoli, stuart.clarke2, dayanand.karalkar2, yatsea.li, amagnani and jacobtan


In our previous blog post, my colleague gianluigi.bagnoli explained the storyline of our blog post series about building intelligent and sustainable scenarios on SAP BTP with AI and Planning: we imagined a traditional Milan-based Light Guide Plates (LGP) manufacturer, BAGNOLI & CO, that is transforming into a sustainable smart factory by reducing waste and improving production efficiency and workplace safety with the help of SAP AI Core, SAP AI Launchpad and SAP Analytics Cloud for Planning.

In this blog post, we’ll learn how an SAP Partner like you can help BAGNOLI & CO achieve their business goals by developing advanced custom AI solutions. Specifically, we’ll see how SAP AI Core and SAP AI Launchpad enable the execution and operations of BAGNOLI & CO’s AI scenarios in a standardized, scalable, and hyperscaler-agnostic way.

Infusing AI into business applications via SAP Business Technology Platform

Before we jump into the details about end-to-end ML Ops with SAP AI Core, let’s have a quick look at SAP’s AI Strategy. SAP is committed to building intelligent, sustainable, and connected enterprises by infusing AI technologies into applications and business scenarios, and it accomplishes this task with the AI offerings of SAP BTP.

The picture below (Fig. 1) shows the rich SAP BTP AI capabilities offered as “AI services”. These capabilities are consumed through the standardized AI API, which allows unified consumption of AI scenarios regardless of whether they are provided on SAP or on partner technology platforms.

Figure 1: SAP AI solution portfolio.

SAP has made AI a key strategic pillar to enable the intelligent enterprise. This required an updated approach to the underlying framework that was powering AI applications in SAP Business Technology Platform. Therefore, two new components have been introduced in SAP BTP:

- SAP AI Core: a service that allows you to build custom AI models using open-source frameworks and develop advanced AI use cases. It is designed to provide CI/CD capability and to support multitenancy;

- SAP AI Launchpad: a user interface (UI) that is the single entry point for all the AI API-enabled runtimes available in SAP BTP, including SAP AI Core. It can be used as a client for accessing the runtime capabilities and therefore for managing the lifecycle of AI use cases.

How to achieve an end-to-end ML workflow in SAP AI Core?

Now let’s have a closer look at how to achieve an end-to-end ML workflow in SAP AI Core.

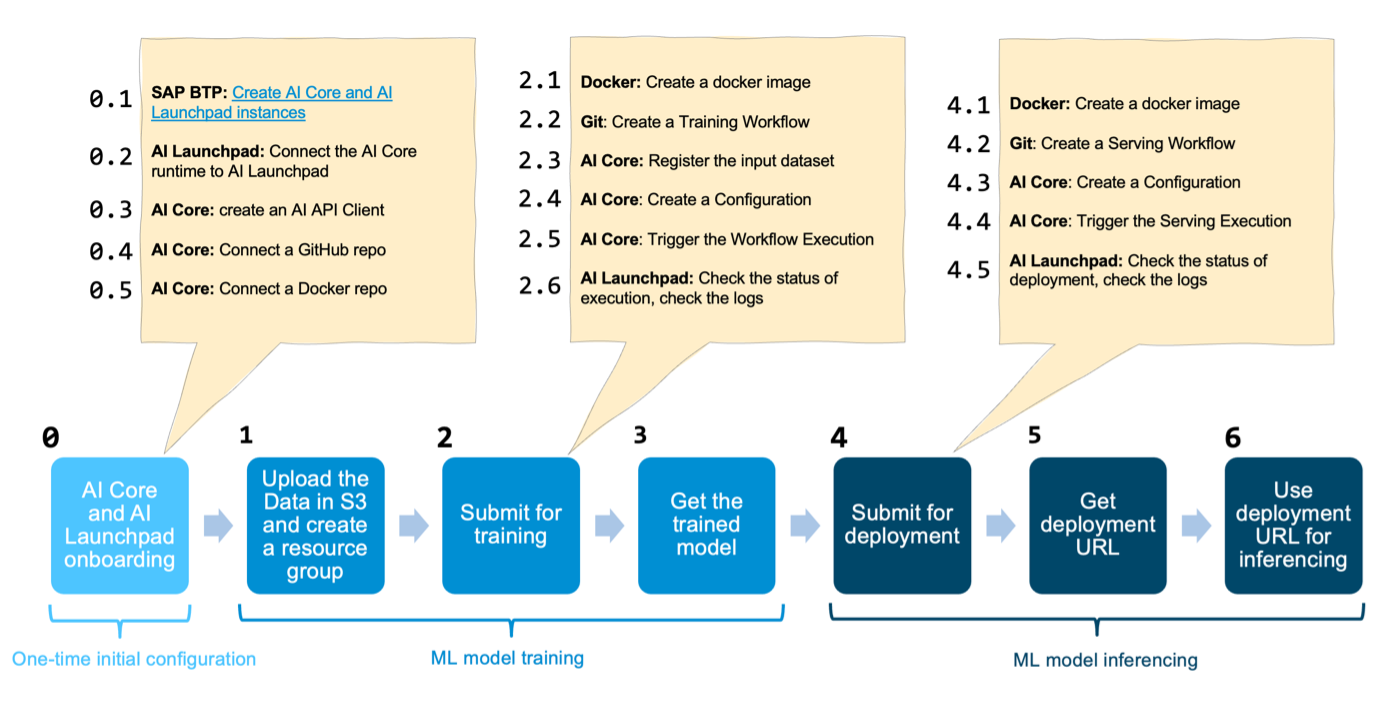

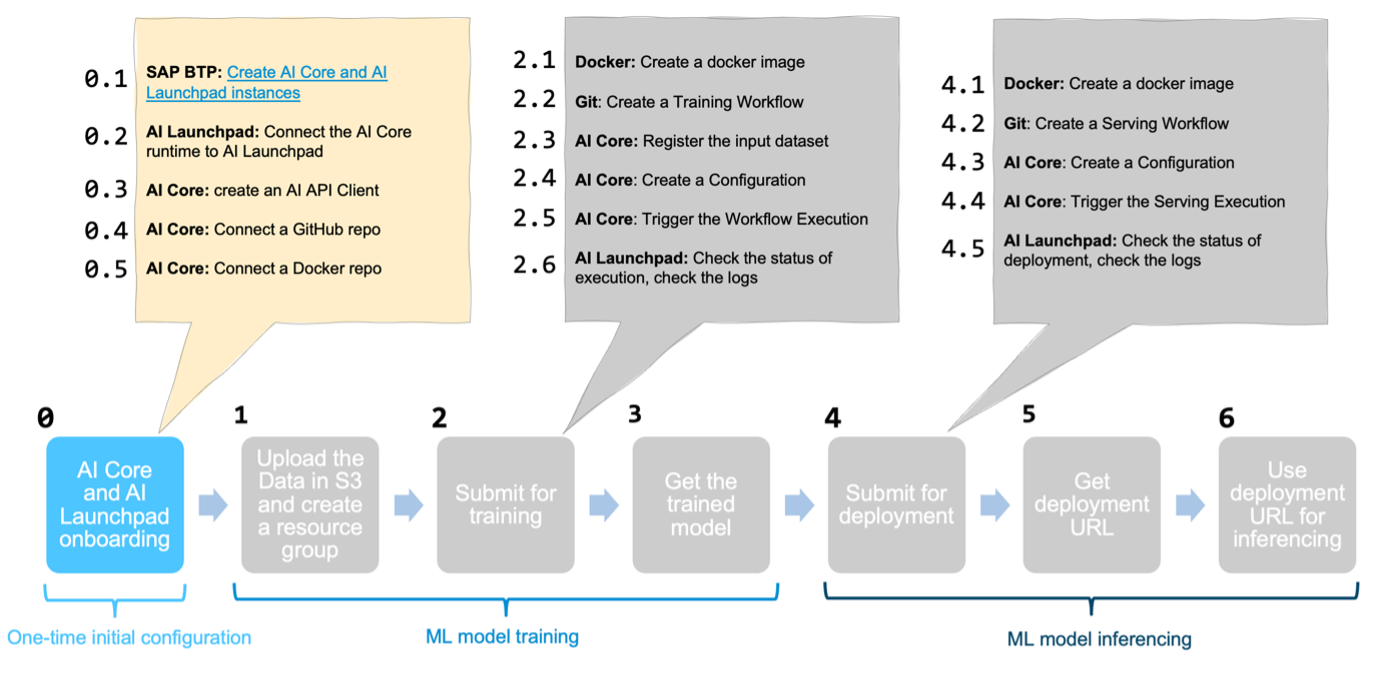

The picture below (Fig. 2) shows a procedure that an SAP partner can follow to implement an end-to-end ML workflow in SAP AI Core and SAP AI Launchpad. There are three main groups of operations:

- The onboarding of SAP AI Core and SAP AI Launchpad;

- The model training operations;

- The model inferencing operations.

Figure 2: end-to-end ML workflow in SAP AI Core.

Next, let’s have a look at each “box” one by one and let’s understand the rationale behind the SAP AI Core and SAP AI Launchpad design and the power of these two products.

The onboarding of SAP AI Core and SAP AI Launchpad

Onboarding is the very first step (box 0) of working with SAP AI Core and SAP AI Launchpad, and it includes all the required configurations.

Figure 3: step 0 in our end-to-end ML workflow.

Everything starts with the provisioning of an SAP AI Core instance in SAP BTP and the subscription to SAP AI Launchpad, both of which are easy to carry out through the SAP BTP Cockpit. SAP AI Core is designed to integrate with several open-source platforms and cloud infrastructures. This means that a user has to create an account on those platforms and authorize SAP AI Core to access them. Together, these activities constitute the one-time initial configuration of SAP AI Core.

Under the hood

Let’s have a closer look at the cloud infrastructures that make SAP AI Core a modern and complete tool for delivering cloud-native advanced AI solutions (see Fig. 4).

Figure 4: SAP AI Core architecture and the open-source AI infrastructures.

SAP AI Core is a runtime for executing ML pipelines and deploying trained machine learning models as APIs that serve inference requests with high performance and at scale. This entails designing the pipelines and the serving applications, and writing the related code that will be executed in containers.

But why containers? Containers package application software together with its dependencies to abstract it from the infrastructure it runs on. Developers build and deploy containerised applications, breaking down monolithic apps into microservices for resource optimisation and easier maintenance in the cloud.

SAP AI Core follows this well-established paradigm, which brings us to the first platform integrated with SAP AI Core: Docker (see Fig. 4). Docker is the container platform used in SAP AI Core to package and store your training and serving code in the form of portable containers.

Containers also need to be managed and deployed. This is why SAP AI Core runs on a Kubernetes infrastructure for managing containerized workloads and services. Docker and Kubernetes are complementary tools that help with building cloud-native architectures.

What are the benefits of this combination? First, applications are easier to maintain because they are broken down into smaller parts. Second, these parts run on a more robust infrastructure, so the applications are more highly available. Moreover, applications can handle more load on demand, which improves the user experience and reduces resource waste.

Now, let’s focus on another typical need in software development: making application definitions, configurations and environments declarative and version controlled. This is important because application deployment and lifecycle management have to be automated, auditable, and easy to understand.

How is this achieved in SAP AI Core? Through declarative configuration files (referred to as “templates”) that specify the desired state of the infrastructure, the pipeline steps, and all the required input parameters and artifacts.

The templates are based on two powerful open-source engines that are well known in the development of cloud-native applications:

- Argo Workflows, an open-source container-native workflow engine for orchestrating parallel jobs on Kubernetes,

- KFServing, a standard model inference platform on Kubernetes.
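To make the idea of a template more concrete, here is a minimal sketch of what a training template could contain, written as a Python dictionary and serialized to JSON (which is also valid YAML). The `apiVersion` and `kind` are the standard Argo Workflows values; the `ai.sap.com` annotation and label keys, the scenario and executable names, and the container image are assumptions made for illustration and should be checked against the SAP AI Core documentation.

```python
import json


def build_training_template(scenario_id, executable_name, image):
    """Return an Argo WorkflowTemplate manifest as a plain dictionary."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "WorkflowTemplate",
        "metadata": {
            "name": executable_name,
            # Assumed SAP AI Core metadata keys (illustrative only).
            "annotations": {
                "scenarios.ai.sap.com/name": "Defect detection",
                "executables.ai.sap.com/name": executable_name,
            },
            "labels": {
                "scenarios.ai.sap.com/id": scenario_id,
                "ai.sap.com/version": "1.0.0",
            },
        },
        "spec": {
            # The entrypoint names the pipeline step that runs first.
            "entrypoint": "train",
            "templates": [
                {
                    "name": "train",
                    "container": {
                        "image": image,  # hypothetical image name
                        "command": ["python", "train.py"],
                    },
                }
            ],
        },
    }


template = build_training_template(
    "defect-detection", "cnn-training", "docker.io/example/cnn-train:0.1"
)
print(json.dumps(template, indent=2))
```

The point of the sketch is that everything the runtime needs (which container to run, under which scenario, in which version) is plain declarative data that can live in a repository.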

But where are these template files stored and version controlled? And how are they accessed by SAP AI Core? The answer brings us to the second platform that has to be provided and integrated with SAP AI Core: GitHub (refer to Fig. 4 and 5).

A GitHub repository hosts the training and serving templates. SAP AI Core must be granted access to it so that an automated process (ArgoCD) can make the SAP AI Core environment match the state described in the GitHub repository.

Figure 5: GitOps with ArgoCD in SAP AI Core.

So, templates, GitHub and ArgoCD are the ingredients in SAP AI Core that enable CI/CD, facilitating a “continuous” flow of new updates and features. For instance, if you want to change your training pipeline, you only need to update the corresponding template in the repository, and the automated process handles everything else, increasing your development flexibility and speed.
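The GitOps idea behind this can be illustrated with a toy reconciliation step. The sketch below is not how ArgoCD is implemented; it only shows the principle of comparing the desired state (what the repository describes) with the actual state (what is currently running) and deriving the actions needed to make them match. The template names and revision strings are hypothetical.

```python
def reconcile(desired, actual):
    """Compare two {template_name: revision} mappings and list the sync actions."""
    actions = []
    for name, revision in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != revision:
            actions.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))
    return actions


# State described in the Git repository (hypothetical names and revisions).
desired_state = {"cnn-training": "rev-2", "cnn-serving": "rev-1"}
# State currently present in the runtime.
actual_state = {"cnn-training": "rev-1", "old-pipeline": "rev-7"}

for action, name in reconcile(desired_state, actual_state):
    print(action, name)
```

Once the two states are identical, `reconcile` returns an empty list, which is why pushing a change to the repository is the only manual step.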

The model training in SAP AI Core

Let’s have a look now at the model training block (Fig. 6).

Figure 6: The model training block in our end-to-end ML workflow.

In order to train a model, a dataset needs to be provided and made available to SAP AI Core. This is achieved by storing the datasets in a hyperscaler object store and by providing the required access rights to SAP AI Core.

Why was this approach chosen for SAP AI Core? A hyperscaler object store is a cost-effective, robust and durable way of managing datasets and models, since it can scale massively within a controlled and efficiently managed software-defined framework. Moreover, as SAP AI Core is designed to work with containers, an object store makes data access convenient: Argo transparently and automatically copies data in and out of the pipeline containers, and the access credentials are stored securely.

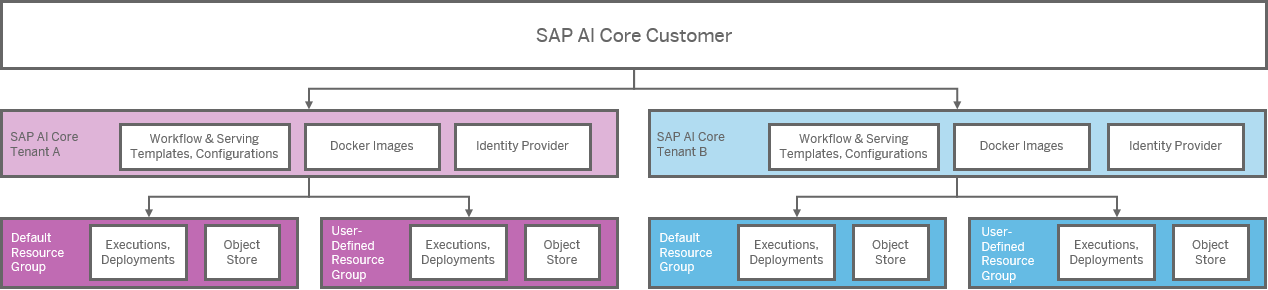

Step 1 in the model training block connects an object store to SAP AI Core and loads the training dataset. Importantly, step 1 also segregates the AI artifacts, which is achieved by combining SAP AI Core instances and resource groups. Different tenants naturally isolate their AI artifacts from each other, but it is also possible to define resource groups, which act as namespaces that group and segregate related resources within the scope of one SAP AI Core tenant (see Fig. 7).

Figure 7: Resource groups and multitenancy.

One example of an asset that can be segregated within a resource group is the input dataset used to train an ML model. Registering a dataset in SAP AI Core requires a resource group to exist first. The dataset is then visible only to the people allowed to access that specific resource group, and it can be consumed by all the components belonging to the same resource group.
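The scoping behaviour of resource groups can be pictured with a small in-memory model. This is purely a conceptual sketch and does not use any SAP API: the `Tenant` class, the group names and the artifact URL are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Tenant:
    """Toy stand-in for one tenant that holds several resource groups."""

    groups: dict = field(default_factory=dict)

    def create_resource_group(self, group_id):
        # Each resource group gets its own, separate artifact namespace.
        self.groups.setdefault(group_id, {})

    def register_artifact(self, group_id, name, url):
        if group_id not in self.groups:
            raise KeyError(f"resource group {group_id!r} does not exist")
        self.groups[group_id][name] = url

    def list_artifacts(self, group_id):
        return sorted(self.groups[group_id])


tenant = Tenant()
tenant.create_resource_group("line-a")
tenant.create_resource_group("line-b")
tenant.register_artifact("line-a", "lgp-images", "s3://example-bucket/line-a/images")

print(tenant.list_artifacts("line-a"))  # the dataset is visible here
print(tenant.list_artifacts("line-b"))  # and invisible in the other group
```

Registering an artifact in a group that was never created fails, which mirrors the requirement that the resource group comes first.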

As mentioned before, an SAP AI Core user has to design their own training pipeline and specify it in a training template, which is used to define an executable object in SAP AI Core. For convenience, the executable falls under the umbrella of a specific “scenario”, which acts as an additional namespace and groups together all the executables needed to solve a specific business challenge. The executable and the input dataset are the key components that the user binds together to create a training configuration and then train an ML model in SAP AI Core.
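As a rough illustration of what “binding together” means, the sketch below assembles a configuration as a JSON document that references a scenario, an executable, a registered dataset and a few parameters. The field names follow my understanding of the AI API configuration payload and should be treated as assumptions; the IDs, the `training-data` artifact key and the parameter names are hypothetical.

```python
import json


def build_configuration(name, scenario_id, executable_id, dataset_artifact_id, parameters):
    """Bind an executable to its input dataset and parameters."""
    return {
        "name": name,
        "scenarioId": scenario_id,
        "executableId": executable_id,
        # Parameter values are passed as strings.
        "parameterBindings": [
            {"key": key, "value": str(value)}
            for key, value in sorted(parameters.items())
        ],
        # The key must match the input artifact name declared in the template.
        "inputArtifactBindings": [
            {"key": "training-data", "artifactId": dataset_artifact_id}
        ],
    }


configuration = build_configuration(
    name="defect-detection-training",
    scenario_id="defect-detection",
    executable_id="cnn-training",
    dataset_artifact_id="artifact-1234",  # hypothetical ID of the registered dataset
    parameters={"epochs": 10, "learning_rate": 0.001},
)
print(json.dumps(configuration, indent=2))
```

The same executable can be reused with a different dataset or different parameters simply by creating another configuration.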

An executable includes information about the training input and output, the pipeline containers to be used, and the required infrastructure resources. SAP AI Core provides several preconfigured infrastructure bundles called “resource plans” that differ from one another depending on the number of CPUs, GPUs and the amount of memory. So, SAP AI Core is capable of dealing with demanding workloads.

Another important point when working with ML is evaluating the model’s accuracy. ML models are used for practical business decisions, so more accurate models can result in better decisions. The cost of errors can be huge, but it can be mitigated by optimizing the model’s accuracy. An SAP AI Core user might therefore ask: how am I going to evaluate the performance of my model? The answer is that SAP AI Core provides APIs for registering your preferred metrics, which can be retrieved and inspected once the training is complete.

When the model has completed training and has satisfactory metrics, the final step of the model training block consists of harvesting the results. Once the model has been produced and stored by SAP AI Core in the connected hyperscaler object store, it is automatically registered in SAP AI Core and is ready for deployment.
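As a simple illustration, the sketch below computes an accuracy value inside a training step and wraps it in a metric record that could then be handed to a metrics API. The record layout (name, value, step, timestamp) is an assumption for illustration, not the documented payload, and the labels are made-up sample data.

```python
from datetime import datetime, timezone


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the ground-truth labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(1 for truth, guess in zip(y_true, y_pred) if truth == guess)
    return correct / len(y_true)


def metric_record(name, value, step):
    """Wrap a metric value in a record (hypothetical layout)."""
    return {
        "name": name,
        "value": value,
        "step": step,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


# Made-up validation labels: 1 = defective plate, 0 = good plate.
y_true = [1, 0, 1, 1]
y_pred = [1, 0, 0, 1]

record = metric_record("accuracy", accuracy(y_true, y_pred), step=1)
print(record["name"], record["value"])
```

Accuracy is only one option; for an imbalanced defect-detection dataset, metrics such as precision and recall would be registered in the same way.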

The model deployment in SAP AI Core

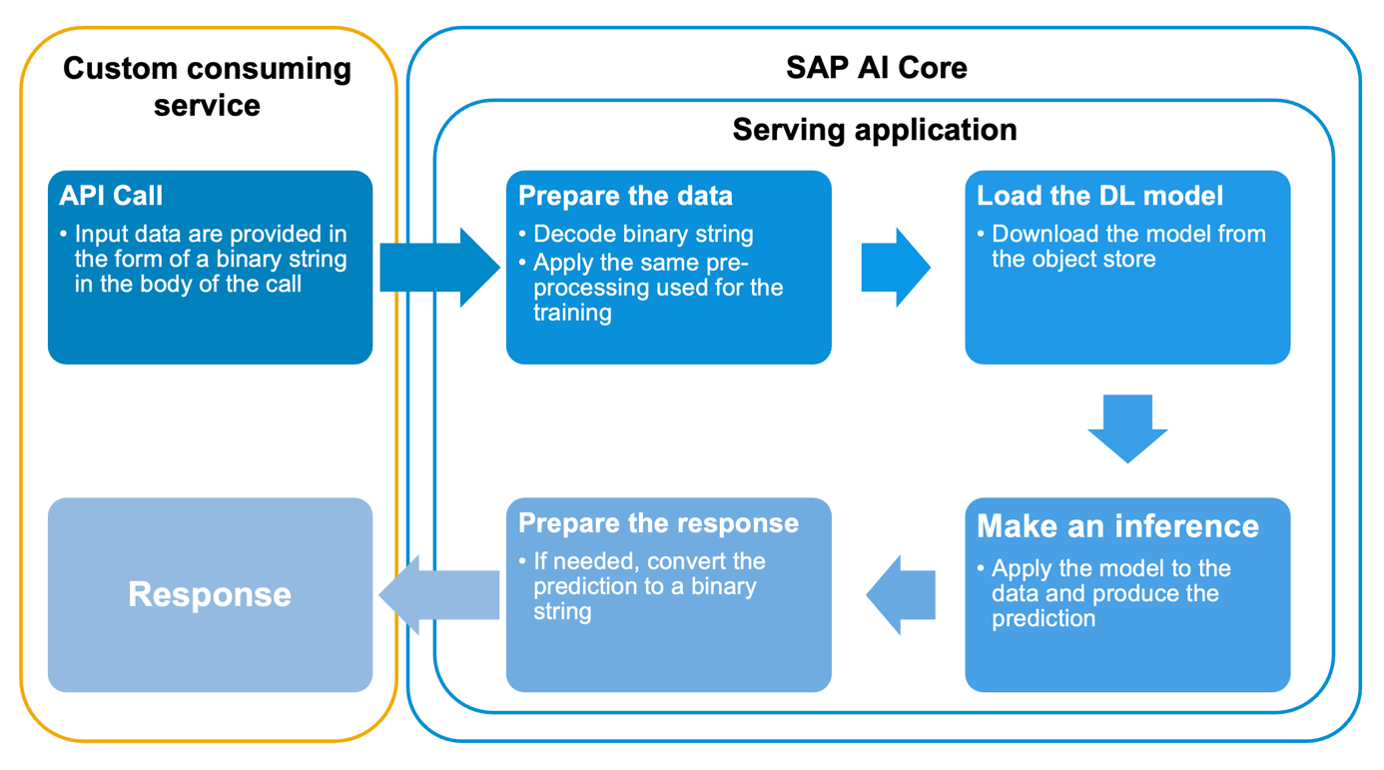

We are now at the final part of the end-to-end ML workflow (Fig. 8). Deploying a model in SAP AI Core consists of writing a web application that serves inference requests through an endpoint exposed on the internet and that can easily be scaled on the Kubernetes infrastructure.

Figure 8: The model deployment in our end-to-end ML workflow.

To serve a model, you will code and develop a serving application that will be run in the form of a container. But what is this application typically expected to do?

Referring to Fig. 9, everything starts with an inference request sent to an endpoint. Internally, the web application interprets the data contained in the body of the call, retrieves the model from the hyperscaler object store, applies it to the data and packs the prediction into a response that will be consumed by a custom service.

Figure 9: The serving application workflow.
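The core of such a serving application can be sketched as a single function that goes from request body to response body. This is a minimal sketch using only the Python standard library: the `ThresholdModel` class is a hypothetical stand-in for a trained model that would normally be loaded from the object store, and the `instances` and `predictions` field names are assumptions, not a prescribed format.

```python
import json


class ThresholdModel:
    """Hypothetical stand-in for a trained model loaded from the object store."""

    def __init__(self, threshold):
        self.threshold = threshold

    def predict(self, rows):
        # Flag a row as defective when its mean value exceeds the threshold.
        return [
            "defect" if sum(row) / len(row) > self.threshold else "ok"
            for row in rows
        ]


def handle_inference(body, model):
    """Parse the request body, apply the model, and build the response."""
    try:
        rows = json.loads(body)["instances"]
    except (ValueError, KeyError, TypeError):
        error = {"error": "expected a JSON body with an 'instances' list"}
        return 400, json.dumps(error).encode("utf-8")
    predictions = model.predict(rows)
    return 200, json.dumps({"predictions": predictions}).encode("utf-8")


model = ThresholdModel(threshold=0.5)
request_body = json.dumps({"instances": [[0.9, 0.8], [0.1, 0.2]]}).encode("utf-8")
status, response_body = handle_inference(request_body, model)
print(status, response_body.decode("utf-8"))
```

In a real deployment this function would sit behind a web framework route inside the serving container, and the model would be loaded once at start-up rather than on every request.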

Coding is fundamental, but, as mentioned before, the model server that will be deployed is defined by a specific template. This self-contained template creates an executable that defines the required parameters, the container to be executed, the resources needed to start the web application, and the number of replicas of the model server to be started.

Combining the proper serving executable with a reference to the model to be used enables SAP AI Core to start your deployment.

Once the model server is running and the deployment URL is ready, the final step of the ML workflow in SAP AI Core is the consumption of the model through the exposed endpoint. The API can easily be called via HTTP requests from any client or business application, such as a Jupyter notebook, Postman or a CAP application.
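From the client side, consuming the model boils down to an authenticated HTTP POST. The sketch below only builds the request with the Python standard library and does not send it. The deployment URL, the token, the `/v1/predict` path and the payload shape are hypothetical placeholders, and the `AI-Resource-Group` header reflects my understanding of how a call is scoped to a resource group, so please verify the details for your own deployment.

```python
import json
import urllib.request


def build_inference_request(deployment_url, token, resource_group, instances):
    """Prepare (but do not send) a POST request to a deployed model."""
    url = deployment_url.rstrip("/") + "/v1/predict"  # hypothetical path
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "AI-Resource-Group": resource_group,
            "Content-Type": "application/json",
        },
    )


request = build_inference_request(
    deployment_url="https://example.invalid/deployments/d123/",
    token="<access-token>",
    resource_group="default",
    instances=[[0.9, 0.8]],
)
print(request.get_method(), request.full_url)
```

Passing the prepared request to `urllib.request.urlopen` would send it; any other HTTP client works the same way with the URL, headers and body shown here.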

What’s next?

In the next blog post, we will see how to develop a custom deep learning model involving CNNs in TensorFlow to automate defect detection at BAGNOLI & CO with the end-to-end ML workflow in SAP AI Core described above.

Just as a reminder, here’s the full program of our journey:

- An overview of sustainability on top of SAP BTP

- Introduction of end-to-end ML ops with SAP AI Core (this blog post)

- BYOM with TensorFlow in SAP AI Core for Defect Detection

- BYOM with TensorFlow in SAP AI Core for sound-based Predictive Maintenance

- Embedding Intelligence and Sustainability into Custom Applications on SAP BTP

- Maintenance Cost Budgeting & Sustainability Planning with SAP Analytics Cloud

If you're a partner and would like to learn more, don't forget to register for our upcoming bootcamps on sustainability-on-BTP, where this case is going to be explained and detailed. Here you can find dates and registration links:

| Bootcamp | Session 1 | Session 2 | Session 3 | Registration |
| --- | --- | --- | --- | --- |
| NA / LAC | June 23rd 11:00AM EST | June 30th 11:00AM EST | July 7th 11:00AM EST | Registration |
| EMEA / MEE | June 21st 09:00AM CET | June 28th 09:00AM CET | July 5th 09:00AM CET | Registration |
| APJ | July 6th 10:00AM SGT | July 13th 10:00AM SGT | July 20th 10:00AM SGT | Registration |

Additional resources

SAP HANA Academy has prepared an interesting series of videos that provides more deep-dive content about AI & Sustainability based on the bootcamp storyline. So, if you want to learn more about SAP BTP and the onboarding of SAP AI Core described in this blog post, please check this link.
