- Re: AI Core - problem with copying object from AWS...

4 weeks ago

Hello experts,

I am new to SAP AI Core and am trying to build some scenarios, but I have hit a problem that has been blocking me for a while now.

Background:

I have been working through the SAP BTP AI Core tutorial group: https://developers.sap.com/group.ai-core-get-started-basics.html

Everything worked fine until the tutorial “Make Predictions for House Prices with SAP AI Core”: https://developers.sap.com/tutorials/ai-core-deploy.html

One step there loads the trained model to make predictions (I will refer to this later):

model = pickle.load(open('/mnt/models/model.pkl', 'rb'))

The rest of the code creates the endpoints and uses this model for prediction.

I did the remaining configuration (Docker, YAML, etc.) according to the tutorial.

The service started but did not work (error 500 when calling the endpoint).

My tests and analysis:

I could not find any cause for this. I rechecked everything, and the web service from the tutorial still did not work.

I decided to create my own model and configuration for a very simple example: the model is an instance of a class that performs addition (just for tests).

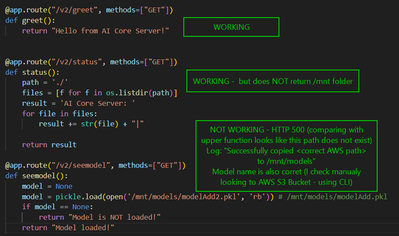

This time I added more endpoints to the web service to check whether the problem is limited to the one that loads and uses the model.

Again, everything worked except the part that uses the model, so:

- The AWS S3 bucket contains this model (I checked it manually in the console) and the path is correct.

- The Python code is fine because I tested it successfully locally.

- The Git repository with the YAML file is correct because the service is running and the other endpoints work.

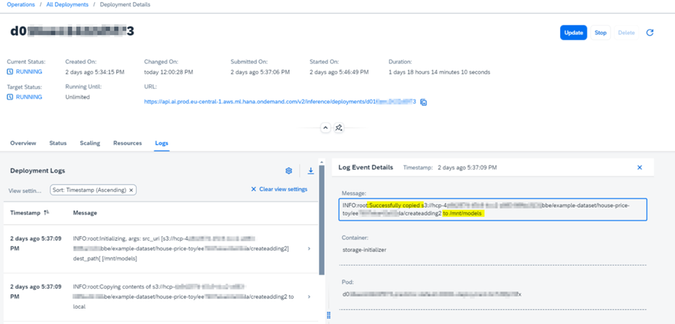

- All actions in AI Core (deployments, executions, etc.) were also successful.

I implemented more functionality in this web service, so I was able to confirm that all other endpoints worked correctly and only the one loading the model returned error 500.

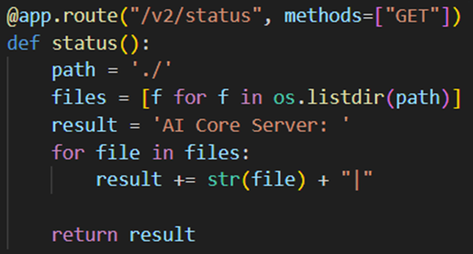

One of these endpoints listed the folders and files in the current directory (./), and the output was:

AI Core Server: __pycache__|main.py|requirements.txt|

So basically, there is no other folder (there should be /mnt) or file in this location. Of course, I don’t know exactly how AI Core works internally, so maybe this way of testing is wrong, but I don’t know how else to check it when I see this log:

“INFO:root:Successfully copied s3://hcp-… to /mnt/models”

The location is 100% correct: I can see (and copy) this model with direct access to my AWS S3 bucket. I downloaded it and deployed the service locally, and it works fine.

My conclusion is that either the model is not copied successfully (the path doesn’t even exist) or I am making an error in implementing this functionality.

Questions:

- Is my approach to testing this correct? Should this Python function return all files and folders of my AI Core instance in the root location /?

- How else can I debug or analyze this problem? Have you ever faced a similar issue?

- Is it possible that the free version of AI Core has limitations like this, or a hidden non-deterministic bug?

I found a very similar problem here:

https://community.sap.com/t5/technology-q-a/sap-ai-core-error-while-prediction/qaq-p/12769557

As far as I can see, that problem was not resolved there. I followed the hints from that thread, but without results.

Best regards

- SAP Managed Tags:

- SAP AI Core,

- SAP AI Launchpad

4 weeks ago

Hi! As all your endpoints are working except for the model, I am assuming that you specify the bearer token and the default resource group correctly. Are you sure you are providing the correct input data in your JSON to make a prediction? Is the JSON formatted correctly? Is the endpoint specified correctly (/v2/predict if you followed the tutorial)? Are you using a POST request?
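A request of the kind these questions describe could be assembled roughly as follows. This is only a sketch: the deployment URL, token, and payload are hypothetical placeholders, and the `AI-Resource-Group` header name is an assumption that should be checked against the tutorial and your own setup. The request is built but not sent.

```python
import json
import urllib.request

# Hypothetical placeholders, replace with your own values.
DEPLOYMENT_URL = "https://example.invalid/my-deployment"
TOKEN = "<bearer-token>"
RESOURCE_GROUP = "default"


def build_predict_request(payload: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request to the /v2/predict endpoint."""
    return urllib.request.Request(
        url=DEPLOYMENT_URL + "/v2/predict",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {TOKEN}",
            "AI-Resource-Group": RESOURCE_GROUP,  # assumed header name
            "Content-Type": "application/json",
        },
        method="POST",
    )


# The payload here is a stand-in for the tutorial's input JSON.
request = build_predict_request({"example": "replace with the tutorial input"})
print(request.get_method(), request.full_url)
# Sending it would be: urllib.request.urlopen(request)
```

Printing the method and URL before sending makes it easy to rule out a wrong verb or endpoint path as the cause of an error.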

4 weeks ago

Hi,

Thanks for the quick reply!

I used the JSON provided in the tutorial. As I understood it, neither the JSON nor the endpoints required any adjustments (they were ready to be copied).

However, I don’t think the data structure is the problem, because it seems the model is not even loaded, so the error occurs before the input data is used. In my test scenario I am sending empty requests (I tried both GET and POST).

As I understand it, the key to identifying the cause is knowing whether my code that reads the folders and files in the ./ directory should work, and whether the model (or /mnt, which is the hardcoded part of the model path) should be visible there. If so, is the log saying that the model was successfully copied incorrect?

Please correct me if my reasoning is wrong.

Regards

4 weeks ago

Can you see the model in your artifacts?

Sorry if I misunderstood, but have you tried the GET endpoint <your deployment url> + "/v2/greet", specifying the resource group as well as the Bearer token?

4 weeks ago

I can see all my models in BTP AI Core and in my AWS S3 Bucket as well.

The /v2/greet endpoint was also not working, because there is an init() function that loads the model; it probably failed, and that’s why I got error 500.
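One way to see why the loading step fails, instead of only getting error 500, is to catch the exception and keep its text so an endpoint can return it. The following is a minimal sketch under that assumption; `try_load_model` is a hypothetical helper name, not part of the tutorial code.

```python
import os
import pickle
import traceback

MODEL_PATH = "/mnt/models/model.pkl"  # path used in the tutorial


def try_load_model(path: str = MODEL_PATH):
    """Return (model, error); exactly one of the two is None."""
    if not os.path.isfile(path):
        parent = os.path.dirname(path)
        found = sorted(os.listdir(parent)) if os.path.isdir(parent) else None
        return None, f"{path} not found; contents of {parent}: {found}"
    try:
        with open(path, "rb") as handle:
            return pickle.load(handle), None
    except Exception:
        # Keep the full traceback, e.g. an AttributeError raised by pickle.
        return None, traceback.format_exc()


model, load_error = try_load_model()
print("loaded" if load_error is None else load_error)
```

With this, the service can still start when the model is missing, and a diagnostic endpoint can return `load_error` as plain text.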

I am currently not working with the original tutorial code, because with model loading as a prerequisite I was not able to test anything.

I am trying to analyze the problem, so I am modifying the code step by step. Here are the most important parts:

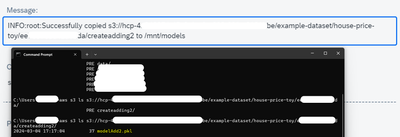

How I checked that paths and names are correct:

I covered some parts for security reasons; I triple-checked them and they are correct.

The resource group and Bearer token are provided; otherwise I think none of the endpoints would work.

Regards

3 weeks ago

The path for artifacts and their download into the container is indeed tricky, and I agree that the error messages sometimes do not help in resolving the issue.

Some AI Core details:

1) The S3 bucket/pathprefix is the root directory in S3 for your AI Core scenario.

Please check whether the model file is located below the pathprefix in S3.

If it is located at bucket/pathprefix/createadding2/my-model, then the artifact path used when registering should be createadding2/my-model

and in the template, e.g., /mnt/models

Note that my-model could be either a single file or a folder.

If it is a folder, it will be placed under /mnt/models/...

Furthermore, in your test you list everything in the local folder "./", whereas /mnt is under the root.
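To check where the model actually ended up, the listing can start from an absolute path such as /mnt/models rather than "./". A minimal sketch follows; `list_tree` is a hypothetical helper, and the depth limit is only there to keep the output short.

```python
import os


def list_tree(root: str, max_depth: int = 2) -> list:
    """Return the paths below `root`, at most `max_depth` levels deep."""
    root = os.path.abspath(root)
    if not os.path.isdir(root):
        return [f"{root} (missing)"]
    entries = []
    base_depth = root.rstrip(os.sep).count(os.sep)
    for current, dirs, files in os.walk(root):
        depth = current.rstrip(os.sep).count(os.sep) - base_depth
        for name in sorted(dirs) + sorted(files):
            entries.append(os.path.join(current, name))
        if depth + 1 >= max_depth:
            dirs[:] = []  # do not descend any further
    return entries


for path in list_tree("/mnt/models"):
    print(path)
```

Returning this list from a test endpoint shows whether the file sits directly in /mnt/models or in a subfolder.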

3 weeks ago

Hi,

Thank you for your suggestions; good point about the local folder.

I changed the path from „./” to „/” and added a scan of the “/mnt” path as well; that looks better.

I am not sure if this is what you meant, but I changed the model loading path to “/mnt/models/createadding2/modelAdd2.pkl”, so maybe /createadding2 is also somehow copied into the path?

Sorry for the late answer; I am now waiting for the deployment of my new service after the changes. It may take a while: sometimes I have had to wait more than a day and retry a few times to get resources (getting "message": "Task currently un-scheduleable due to lack of resource"). The tutorial already covers how to proceed in that case.

I assume that the free version may take much longer than just a few minutes.

I will let you know when I manage to deploy the app again and verify.

Regards

2 weeks ago

Hello,

I’ve managed to resolve this error.

After the path adjustment, the model was copied correctly (thanks for pointing that out).

The remaining problem was probably the location of the dependencies.

I think the best explanation is given here:

https://forums.fast.ai/t/attributeerror-cant-get-attribute-my-func-on-module-main-from-main-py/97320...

One of the comments there contained the solution:

“pickle is lazy and does not serialize class definitions or function definitions. Instead it saves a reference of how to find the class (the module it lives in and its name)”

and

“There’s an extension to pickle called dill, that does serialise Python objects and functions etc. (not references)”

So the external reference to a dependent artifact did not match.
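The behaviour described in the quotes can be reproduced with a small sketch. It is an illustration, not my actual service code: the module name `adder_module` and the class `Adder` are made up, and the module is created in memory so the example is self-contained. In a real setup, the class would live in a file that both the training script and the serving code import.

```python
import pickle
import sys
import types

# Build a throwaway module "adder_module" (hypothetical name) holding a class.
module = types.ModuleType("adder_module")
exec("class Adder:\n    def add(self, a, b):\n        return a + b\n", module.__dict__)
sys.modules["adder_module"] = module

payload = pickle.dumps(module.Adder())
# The pickle stores the module and class names, not the class body.
print(b"adder_module" in payload, b"Adder" in payload)

# Simulate a server where that module is absent: unpickling fails.
del sys.modules["adder_module"]
try:
    pickle.loads(payload)
    outcome = "loaded"
except ImportError as exc:  # ModuleNotFoundError is a subclass
    outcome = f"failed: {exc}"
print(outcome)

# Once the same module and class are importable again, loading works.
sys.modules["adder_module"] = module
restored = pickle.loads(payload)
print(restored.add(2, 3))
```

When the class was defined in the training script itself, the reference points to `__main__`, which is a different module on the server; that matches the AttributeError named in the linked thread.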

Regards
