
The ABAP Detective Sets The Thermostat


Introduction

The following tongue-in-cheek story of a fictional detective focuses on the challenge of vetting an information flow rather than accepting returned values as truth. From an architecture perspective, an information consumer should be given clear and obvious dashboards or visualizations of current state (or history). While "information and services being easily usable and findable" is the goal, an operator also needs the power to examine the data found and verify its accuracy. In this case, small bugs had crept in somehow, and auditing the content was necessary.

Situation Normal

Danger On The Rocks Ahead

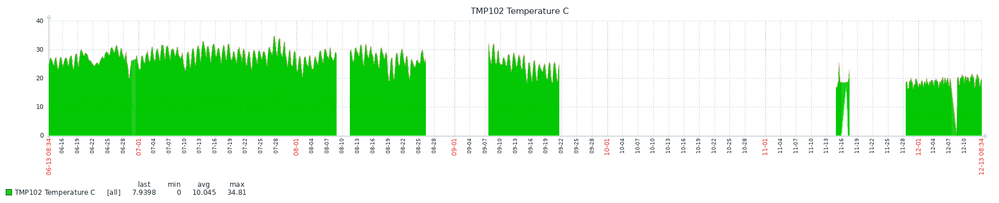

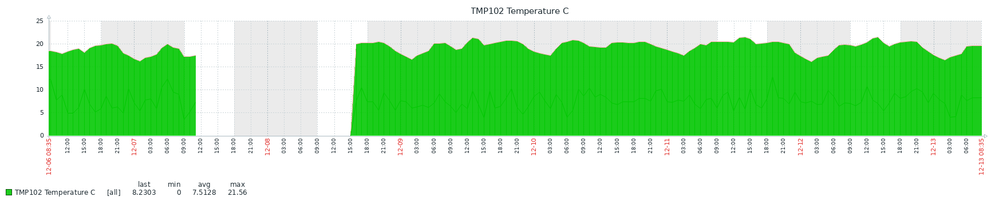

Looking at the charts above for 6-month and 1-week periods (ignoring the known "out-of-service" data gaps), the temperatures look as we would expect, but the minimums and averages do not.

ERROR!

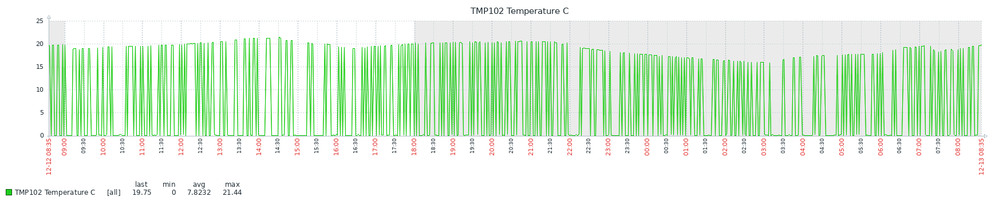

Zooming into 24-hour and 1-hour windows tells a different story:

Digging In

Somehow, the valid data readings are commingled with zero values or, as it turns out, values close to zero. What is the information architecture issue? If you had a web of sensors flooding data repositories, you might not notice the discrepancy in one stream unless your quality control checks flagged the glitches or jitter.
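A quality check of that kind could be as simple as counting implausible readings per stream. The sketch below is hypothetical: the `flag_suspect_streams` helper, the stream names, and the 1.0 °C floor are assumptions for illustration, not part of the author's setup.

```python
# Hypothetical per-stream quality check: flag any stream whose readings
# include implausible near-zero values for an indoor temperature sensor.
from typing import Dict, List

# Assumed plausibility floor in degrees Celsius (illustrative only).
FLOOR_C = 1.0


def flag_suspect_streams(streams: Dict[str, List[float]],
                         floor: float = FLOOR_C) -> Dict[str, int]:
    """Return {stream_name: number_of_readings_below_floor} for suspect streams."""
    suspects = {}
    for name, readings in streams.items():
        bad = sum(1 for r in readings if r < floor)
        if bad:
            suspects[name] = bad
    return suspects


if __name__ == "__main__":
    streams = {
        "pi4-office": [19.1, 19.2, 19.0],              # hypothetical healthy stream
        "pi400-desk": [18.75, 0.0, 0.0, 18.94, 0.12],  # hypothetical glitchy stream
    }
    print(flag_suspect_streams(streams))
```

Only the glitchy stream is reported, so one bad sensor stands out even when many healthy streams feed the same repository.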

In this case, the cause appeared to be the use of a desktop Pi 400, where the sensor was several inches away from the SoC (System on Chip), compared with similar systems like the Pi 4 or the smaller Pi Zero. After finding the problem, fixing it went on "the list."

I will skip the details of the Python code the sensor vendor distributes and focus on the debugging and corrective measures.

Print, Debug, and Repeat

At first I suspected the data failure was a timing issue, so waiting, and possibly sampling multiple times, might reveal a path to a correction.

```
$ ./sparkfun-tmp102-pi400.py
18.75
0.0
0.0
18.94
18.88
0.0
0.0
```

OK, some good and some bad. This is worse:

```
$ ./sparkfun-tmp102-pi400.py
0.12
0.0
```

0.12 is close to zero but not exactly zero, and it is definitely an invalid (indoor) ambient temperature in Celsius! So we can't filter out only 0.0; near-zero values have to go too. Adding delays made no discernible impact on the results (the data was still bad: not worse, not better), so that didn't help.
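To see why an exact-zero check falls short, and how the glitches drag down the minimum and average in the charts, the sketch below applies two filters to the readings printed by the two runs above. The helper names are illustrative assumptions; the 0.999 threshold mirrors the one used in the error correction later in this post.

```python
# Compare filtering only exact zeros against filtering near-zero values,
# using the readings printed by the two runs shown above.
from statistics import mean

readings = [18.75, 0.0, 0.0, 18.94, 18.88, 0.0, 0.0,  # first run
            0.12, 0.0]                                # second run


def drop_exact_zeros(values):
    """Naive filter: removes 0.0 but lets near-zero glitches through."""
    return [v for v in values if v != 0.0]


def drop_near_zero(values, threshold=0.999):
    """Threshold filter: keeps only readings above the threshold (about 1 degree C)."""
    return [v for v in values if v > threshold]


for label, kept in [("unfiltered", readings),
                    ("exact zeros removed", drop_exact_zeros(readings)),
                    ("near-zero removed", drop_near_zero(readings))]:
    print(f"{label:20} min={min(kept):6.2f} mean={mean(kept):6.2f}")
```

The exact-zero filter still reports a minimum of 0.12, while the threshold filter's minimum is 18.75 and its mean stays close to the true room temperature.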

Good enough

Elsewhere, I posted about quality control on a similar information feed, where I needed to temporarily store enough results to analyze them and discard metrics beyond one standard deviation. For that source, 0 might be a correct value, so a simple threshold would not suffice.
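The standard-deviation approach mentioned above might look roughly like the sketch below: buffer a window of samples, then keep only those within k standard deviations of the window's mean. This is not the code from that earlier post; the `within_k_sigma` name, the population standard deviation, and the default k = 1 are assumptions.

```python
# Sketch: buffer a window of readings, then discard those more than
# k population standard deviations from the window mean.
from statistics import mean, pstdev
from typing import List


def within_k_sigma(window: List[float], k: float = 1.0) -> List[float]:
    """Keep readings within k standard deviations of the window mean."""
    if len(window) < 2:
        return list(window)  # not enough data to estimate spread
    mu = mean(window)
    sigma = pstdev(window, mu)
    if sigma == 0:
        return list(window)  # all readings identical; nothing to discard
    return [x for x in window if abs(x - mu) <= k * sigma]


if __name__ == "__main__":
    # Hypothetical window: zeros are legitimate here, the 5.0 spike is not.
    print(within_k_sigma([0.0, 0.1, -0.1, 0.0, 5.0]))
```

Unlike a fixed floor, this keeps legitimate zero readings. The trade-off is that a one-sigma cutoff also trims some valid samples, so k may need tuning for a given feed.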

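```python
import time

# read_temp() comes from the sensor vendor's TMP102 example code (not shown).
# time.sleep(1.0)
counters = 0
while counters < 101:  # take 101 samples
    temperature = read_temp()
    print(round(temperature, 3))
    time.sleep(0.001)  # short pause between samples
    counters = counters + 1
```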

I decided that "below one degree Celsius" was a workable limit for readings that "should never occur" indoors. Other situations clearly need more thought and testing.

The resulting error correction

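```python
# read_temp() comes from the sensor vendor's TMP102 example code (not shown).
counters = 0
valid_temp = None  # last plausible reading seen so far
while counters < 101:
    temperature = read_temp()
    # discard near-zero glitches; below ~1 degree C "should never occur" indoors
    if temperature > 0.999:
        valid_temp = temperature
    counters = counters + 1
print(valid_temp)  # None if every sample was a glitch
```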

It takes almost no time, too, which matters because long delays could cause missed readings at the central collection node.
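If the sampling loop ever did need pauses, one way to keep it from holding up the collection node is to cap it by both sample count and wall-clock time. The sketch below is a hypothetical variation, not the author's script: `read_temp` is passed in as a callable so it runs without the sensor, the 101-sample count and 0.999 threshold mirror the loop above, and the 0.5-second budget is an assumption.

```python
# Hypothetical variation: sample until max_samples readings are taken or a
# time budget expires, keeping the last plausible (above-threshold) value.
import time
from typing import Callable, Optional


def sample_valid(read_temp: Callable[[], float],
                 max_samples: int = 101,
                 threshold: float = 0.999,
                 budget_s: float = 0.5) -> Optional[float]:
    """Return the last reading above threshold, or None if none were seen."""
    deadline = time.monotonic() + budget_s
    valid_temp = None
    for _ in range(max_samples):
        if time.monotonic() >= deadline:
            break  # stop early rather than delay the collection node
        temperature = read_temp()
        if temperature > threshold:
            valid_temp = temperature
    return valid_temp


if __name__ == "__main__":
    # Simulated sensor replaying values like those printed earlier.
    fake = iter([18.75, 0.0, 0.12, 18.94, 0.0] * 30)
    print(sample_valid(lambda: next(fake)))
```

Using `time.monotonic()` rather than wall-clock time keeps the budget correct even if the system clock is adjusted mid-run.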

Run Time Check

```
$ time ./sparkfun-tmp102-pi400-nonzero.py
18.875

real    0m0.127s
user    0m0.062s
sys     0m0.014s

$ time ./sparkfun-tmp102-pi400.py
0.0

real    0m0.128s
user    0m0.098s
sys     0m0.028s
```
