Fake it til you make it: Deepfakes and GANs

Machine-synthesized speech, images and video, in high quality and high volume, are upon us. Some are used for entertainment; others are created to deliberately spread disinformation. A central component is a class of machine learning models called generative adversarial networks (GANs). How far has the technology come, how do GANs work, and is there business potential in this toolset?

This blog series consists of three parts with increasing technical focus. In this first post, we will go through the concept of deepfakes and why you should be aware of them. I will also talk about some potential positive use cases of the technology.

In the second post, I will explain how GANs work at a high level, using the example of the art forger and the art expert.

In the third blog post, you will join me for a test run of a GAN that is able to generate “fake” handwritten digits.

Unless your company’s product or service consists in some form of digital imaging, this topic may seem to have little relevance to you. So why talk about it on the SAP Community Blog, you ask. Good question. I have three reasons:

- It’s fascinating and slightly scary, and we should be aware of the subject.

- The technology behind it is interesting, and we are eager to learn new stuff!

- The application of the technology may have business potential besides deepfakes.

If you agree with my reasoning, please read on.

You know the real-time filters on Snapchat, Instagram and other apps? The ones that use your phone camera and are able to track features of you or your surroundings and transform them into something else? Sure you do. If not, you should get out more often, but here is an example:

(Photo: Snapchat / me)

When you see this, you will immediately identify it as a fabricated clip with a random blog author's face in it. You are not easily fooled, because you are used to seeing creations like this on social media and elsewhere. Well, this video, and others like it, are made with the relatively limited hardware available on your phone, and in real time. Anyone can do it, and new filters come out almost daily. The limitations of your phone's CPU, RAM and network components define the result.

Imagine what you could do on a supercomputer with all the compute money can buy. You might expect the quality of the end result to scale up with the available compute. That's great news for Hollywood, as it makes special effects cheaper, more accessible and more believable. The bad news is that “cheap, accessible and believable” also makes the technology a convenient tool for someone with bad intentions. In many of these cases, a machine learning technique called generative adversarial networks (GANs for short) is being used. We will look at how GANs work in the two following blog posts.

Everything you see can be forged.

If you think about the means used to damage or discredit politicians in recent years, you will quickly realize that it could be tempting for someone to use deepfakes as part of a smear campaign. They may use the technology to spread disinformation to boost their own candidate or, even easier, to tear down a competitor.

Achieving the desired output is really just a question of balancing time, cost and quality (as in any other software project). In theory, everything you see on a screen or in print can be a forgery or “deepfake”.

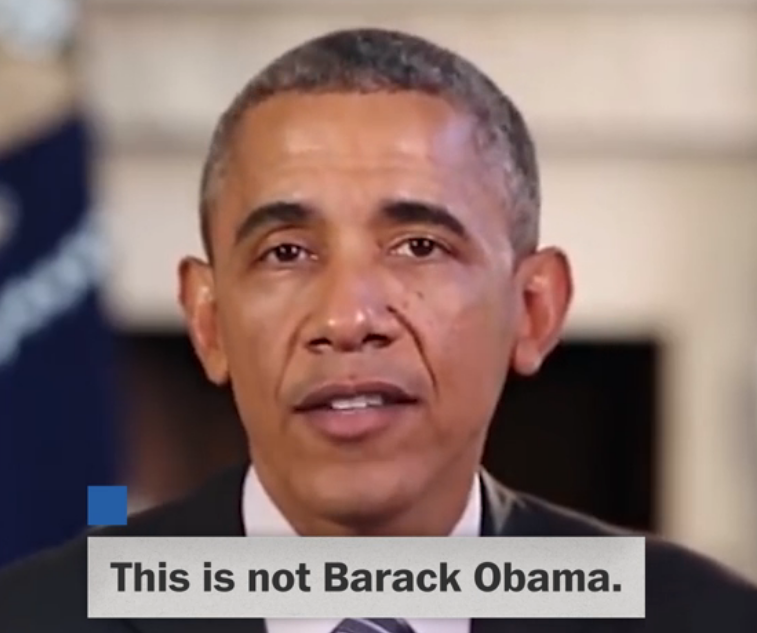

(Photo: from Washington Post video)

Faces, voices and surroundings can be faked, which leaves us having to question all the digital content we are presented with. Here’s a good video on the subject.

I will not dwell on the scare of deepfakes, as you will find enough articles about that if you google it. Instead, let’s look at the positive potential of GANs. There has to be some, right?

I’m a strong believer that GANs will play a significant role in both creating digital content and verifying its authenticity in the near future. There are already some examples, and here are some of my favorites:

CycleGAN: This one is my number one pick, and the one that may have gotten the most attention in the media. CycleGAN takes features from one type of image and applies them to a different image. If you feed it images of zebras, it will learn which features make us think that the animal is a zebra, and it can later apply those features to another animal, for example a horse. Or train it on Monet paintings and make a photo look like a Monet. And here’s another cool feature: it can be flipped around so that you can generate a photo from a Monet painting. The same goes for summer and winter:

(Photo: https://github.com/junyanz/CycleGAN)
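The "flipped around" property can be made a little more concrete. CycleGAN trains two mappings, one in each direction, and penalizes them when a round trip does not return the original image (the cycle-consistency term, measured with an L1 distance). The toy sketch below illustrates only that one term. The "images" are short lists of numbers, and `to_winter`, `to_summer` and `bad_to_summer` are hypothetical stand-ins for trained generators, not part of the CycleGAN code base. The real model uses neural networks and adds adversarial losses on top.

```python
from typing import Callable, List

Image = List[float]  # a flattened toy "image" of pixel intensities
Mapping = Callable[[Image], Image]


def l1_distance(a: Image, b: Image) -> float:
    """Mean absolute difference between two equally sized images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(x: Image, y: Image, g: Mapping, f: Mapping) -> float:
    """L1 error after a round trip in both directions.

    g maps domain X to domain Y (say, summer -> winter);
    f maps domain Y back to domain X (winter -> summer).
    """
    forward_cycle = l1_distance(f(g(x)), x)   # x -> g -> f should give x back
    backward_cycle = l1_distance(g(f(y)), y)  # y -> f -> g should give y back
    return forward_cycle + backward_cycle


# Hypothetical stand-ins for trained generators: plain pixel arithmetic.
def to_winter(img: Image) -> Image:
    return [p * 0.5 for p in img]


def to_summer(img: Image) -> Image:
    return [p * 2.0 for p in img]


def bad_to_summer(img: Image) -> Image:
    # Does not undo to_winter, so the round trip drifts away from the input.
    return [p * 2.0 + 0.25 for p in img]


summer_photo = [0.5, 1.0]
winter_photo = [0.25, 0.5]

consistent = cycle_consistency_loss(summer_photo, winter_photo, to_winter, to_summer)
inconsistent = cycle_consistency_loss(summer_photo, winter_photo, to_winter, bad_to_summer)
print(consistent, inconsistent)
```

A pair of mappings that undo each other scores zero, while the mismatched pair is penalized. During training, this pressure is what keeps the translated image tied to the content of the original instead of becoming an arbitrary picture in the target style.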

SalGAN: SalGAN detects the areas of an image that are most likely to be its defining parts, the core information of what the image is showing us. It identifies the parts of an image that will attract human attention and lead us to say “oh, this is a picture of someone playing baseball”.

(Photo: Pan et al)

Text to image synthesis: The system creates an image of what the text describes. So far, as you can see in the generated images on the right side, the results are not really believable. But with more data and training it could be the next big thing:

(Photo: Reed et al)

Other use cases include anomaly detection on medical images, automatic music generation, and super-resolution. The “GAN Zoo” holds a list of over 500 GAN papers; you can access it here.

We have just started to scratch the surface of what this area of machine learning can contribute to, so let’s stay tuned – because I expect a lot to happen here.

In the next blog post, we’ll go through a high-level overview of how generative adversarial networks work!

/Simen

Twitter: https://twitter.com/simenhuuse

LinkedIn: https://www.linkedin.com/in/simenhuuse/

Sources:

- Jun-Yan Zhu, CycleGAN on Github. URL: https://github.com/junyanz/CycleGAN

- Pan et al, “SalGAN: visual saliency prediction with adversarial networks”. URL: https://arxiv.org/pdf/1701.01081.pdf

- Reed et al, “Generative Adversarial Text to Image Synthesis”. URL: https://arxiv.org/pdf/1605.05396.pdf

- Washington Post on deepfakes. URL: https://wapo.st/2ImkS0v

- Minchul Shin on Github, “GANs Awesome Applications”. URL: https://github.com/nashory/gans-awesome-applications

- Diego Gomez Mosquera on Medium. URL: https://medium.com/ai-society/gans-from-scratch-1-a-deep-introduction-with-code-in-pytorch-and-tenso...
