- Unlocking the Power of SAP AI Core: Building a RAG...


Introduction: In the ever-evolving landscape of AI and language models, the year 2023 marked a significant focus on the capabilities of Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) architectures. As the year came to a close, I seized the opportunity during the holidays to delve into this cutting-edge field. This blog recounts my journey of building a sophisticated RAG pipeline, leveraging technologies like LlamaIndex, ChromaDB, HuggingFace’s Zephyr-7b (a fine-tuned version of mistralai/Mistral-7B-v0.1 ), and deploying the entire system on SAP Business Technology Platform (BTP).

How it All Began:

My exploration began with what I had learned about LLMs and Generative AI. LlamaIndex, a data framework that connects domain data to LLMs, caught my attention. After learning how to run data ingestion pipelines, generate embeddings, and store them in a vector database, I decided to extend this knowledge by building and deploying such a pipeline on SAP AI Core.

Challenges and Solutions:

While embarking on this endeavor, I encountered a hurdle: the vectorized SAP HANA DB was not expected until Q1 2024. Undeterred, I searched for an open-source vector database and discovered ChromaDB, a user-friendly solution with an active online community. The next puzzle piece was finding a free-to-use LLM, which I found on the Hugging Face platform.

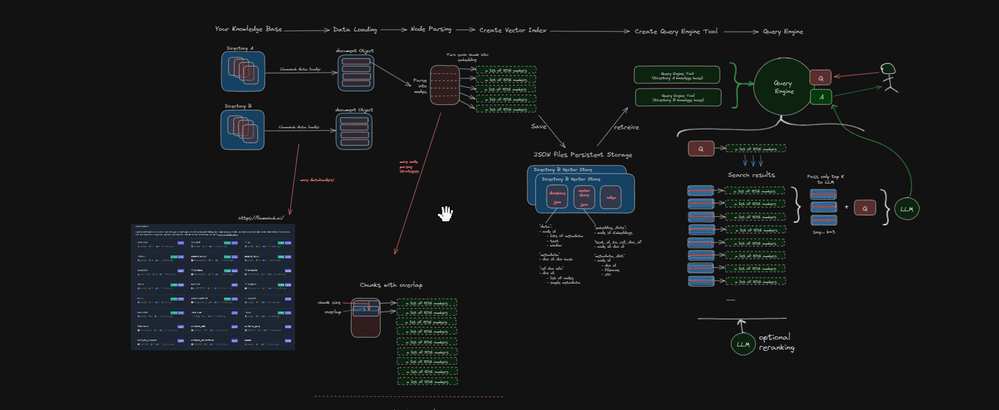

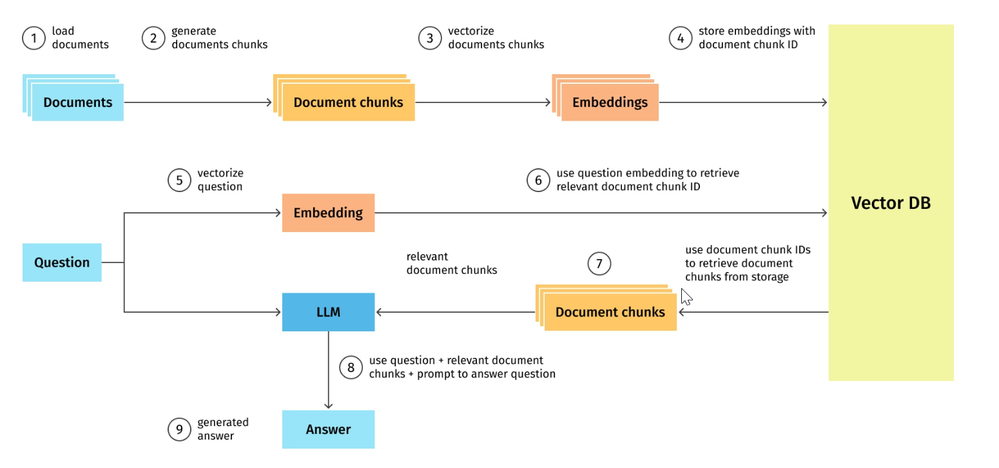

Consider a corporate use case in which an SFTP site contains diverse data types, such as text-based PDFs, image-based PDFs, images, and plain text files. The goal is to ingest this data into the RAG pipeline, create vector embeddings, store them in a vector database, and build an index for efficient search and retrieval. The process flow is visualized in the accompanying diagram.

Basic RAG implementation involves 4 steps:

If you are already familiar with RAG techniques, you can jump to Build & deployment steps for SAP BTP and AI Core

Step 1 : Loading documents. In our case we will be loading the documents from an SFTP site.

Step 2 : Parsing Documents into Text Chunks (Nodes). Split the documents into text chunks, which are called “Nodes” in LlamaIndex. Here each node is a single sentence with a window of neighbouring sentences, and the maximum input length of the embedding model is set to 512.

```python
sentence_node_parser = SentenceWindowNodeParser.from_defaults(
    window_size=3,
    window_metadata_key="window",
    original_text_metadata_key="original_text"
)

nodes = sentence_node_parser.get_nodes_from_documents(documents)
```
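To make the idea of a sentence window concrete, here is a minimal, dependency-free sketch. It is a conceptual illustration only, not LlamaIndex's actual implementation: the function name `build_sentence_windows`, the naive regex sentence splitter, and the dictionary layout are assumptions made for this example.

```python
import re


def build_sentence_windows(text: str, window_size: int = 3) -> list[dict]:
    """Split text into sentences and attach a window of neighbours to each one."""
    # Naive splitter (assumption): break after '.', '!' or '?' followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    nodes = []
    for i, sentence in enumerate(sentences):
        start = max(0, i - window_size)
        end = min(len(sentences), i + window_size + 1)
        nodes.append({
            "text": sentence,  # the single sentence that gets embedded
            "metadata": {
                "original_text": sentence,
                "window": " ".join(sentences[start:end]),  # context handed to the LLM later
            },
        })
    return nodes


nodes = build_sentence_windows("A one. B two. C three. D four. E five.", window_size=1)
print(nodes[2]["metadata"]["window"])
```

Each node keeps its own sentence for embedding, while the wider window travels along in the metadata under the same keys (`window`, `original_text`) that the parser above is configured with.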

Step 3 : Selection of embedding model and LLM

- The embedding model is used to create vector embeddings for each of the nodes. Here we are calling the sentence-transformers/all-mpnet-base-v2 model (built on microsoft/mpnet-base) from Hugging Face. At the time of writing, this model was among the top performers for overall embedding quality.

- LLM: User query and the relevant text chunks are fed into the LLM so that it can generate answers with relevant context.

```python
embed_model = HuggingFaceEmbedding(model_name="sentence-transformers/all-mpnet-base-v2", max_length=512)
```

Step 4. Create Index, retriever, and query engine

Index, retriever, and query engine are three basic components for asking questions about your data or documents:

- Index is a data structure that allows us to retrieve relevant information quickly for a user query from external documents. The Vector Store Index takes the text chunks/Nodes and then creates vector embeddings of the text of every node, ready to be queried by an LLM.

```python
ctx_sentence = ServiceContext.from_defaults(llm=llm, embed_model=embed_model, node_parser=sentence_node_parser)

storage_context = StorageContext.from_defaults(vector_store=vector_store)

sentence_index = VectorStoreIndex(nodes, service_context=ctx_sentence, storage_context=storage_context)
```

- For retrieval we will be using an advanced method, sentence window retrieval: to achieve an even more fine-grained retrieval, instead of using smaller child chunks, we can parse the documents into a single sentence per chunk.

- In this case, single sentences play the role of the “child” chunks, and the sentence “window” (3 sentences on either side of the original sentence, matching window_size=3 in the code) plays the role of the “parent” chunk. In other words, we use the single sentences during retrieval and pass the retrieved sentence together with its sentence window to the LLM. Details of this technique can be visualized better with this diagram.

- Create the sentence window node parser

```python
# create the sentence window node parser w/ default settings
node_parser = SentenceWindowNodeParser.from_defaults(
    window_size=3,
    window_metadata_key="window",
    original_text_metadata_key="original_text",
)

# `documents` and `ctx_sentence` are the objects created in the earlier steps
sentence_nodes = node_parser.get_nodes_from_documents(documents)

sentence_index = VectorStoreIndex(sentence_nodes, service_context=ctx_sentence)
```

- Create a query engine

When we create the query engine, we can replace the sentence with the sentence window using the MetadataReplacementPostProcessor, so that the window of sentences gets sent to the LLM.

```python
query_engine = sentence_index.as_query_engine(
    similarity_top_k=2,
    # the target key defaults to `window` to match the node_parser's default
    node_postprocessors=[
        MetadataReplacementPostProcessor(target_metadata_key="window")
    ],
)

window_response = query_engine.query(
    "Can you tell me about the key concepts for supervised finetuning"
)

print(window_response)
```
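The retrieve-then-replace behaviour described above can be sketched without any libraries. This is only a toy illustration under stated assumptions: `embed` is a bag-of-words stand-in for a real embedding model, and `retrieve_with_window` is a hypothetical helper, not part of LlamaIndex.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (assumption): a bag-of-words count vector."""
    return Counter(text.lower().replace(".", "").replace("?", "").split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve_with_window(nodes: list[dict], query: str, top_k: int = 2) -> list[str]:
    """Rank nodes by their single sentence, then return each hit's wider window."""
    q = embed(query)
    ranked = sorted(nodes, key=lambda n: cosine(embed(n["text"]), q), reverse=True)
    # Metadata replacement: swap the matched sentence for its stored window.
    return [n["metadata"]["window"] for n in ranked[:top_k]]


nodes = [
    {"text": "Cats sleep a lot.", "metadata": {"window": "Cats are pets. Cats sleep a lot. Dogs bark."}},
    {"text": "Dogs bark.", "metadata": {"window": "Cats sleep a lot. Dogs bark. Birds sing."}},
    {"text": "Birds sing.", "metadata": {"window": "Dogs bark. Birds sing."}},
]
print(retrieve_with_window(nodes, "Why do dogs bark?", top_k=1))
```

Matching happens on the short sentence, which keeps the similarity search precise, while the LLM receives the surrounding window so it has enough context to answer.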

Build & deployment steps for SAP BTP and AI Core

With this understanding, let's build the RAG pipeline using LlamaIndex and run it on SAP AI Core.

Pre requisites and setup:

1. I have set up an SFTP server on BTP Kyma runtime; you can use any other SFTP server as well.

2. Set up ChromaDB using its Docker image and deploy the image on Kyma runtime using the YAML file below. Other deployment options are also available.

```shell
docker pull chromadb/chroma

# tag the image that was just pulled (chromadb/chroma) under your own account
docker tag chromadb/chroma <your_username>/chroma:latest

docker push <your_username>/chroma:latest
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-chroma-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-chroma-app
  template:
    metadata:
      labels:
        app: my-chroma-app
    spec:
      containers:
        - name: my-chroma-app
          # the image pushed in the previous step
          image: <your_username>/chroma:latest
          ports:
            # the Chroma server listens on port 8000 by default
            - containerPort: 8000
```

Create a Load balancer service for this deployment

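```yaml
apiVersion: v1
kind: Service
metadata:
  name: your-service
spec:
  type: LoadBalancer
  ports:
    # expose the port the Python client connects to (chroma_port = 8000)
    - port: 8000
      targetPort: 8000
  selector:
    # must match the pod label of the Deployment above
    app: my-chroma-app
```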

Lastly, create a DNS entry on top of the service so that ChromaDB can be reached over the internet using its host and port.

3. I have subscribed to the SAP AI Core standard edition for advanced memory usage in running LLM.

4. Now, let’s explore the code for building the RAG pipeline, including data ingestion from SFTP and loading the Hugging Face zephyr-7b-beta model into SAP AI Core

- In VS Code, make a directory

```shell
mkdir zephyr-on-ai-core
```

- Next is the code to build a Docker image for the custom GPU setup required to load the transformer model. I have named the Dockerfile Dockerfile.customgpu

```dockerfile
FROM python:3.11.6 AS base

WORKDIR /serving

COPY requirements.txt requirements.txt

RUN apt update

RUN git clone https://github.com/huggingface/transformers

RUN pip3 install -r requirements.txt

# OCR and PDF-to-image tooling used during data ingestion
RUN apt-get update \
    && apt-get -y install tesseract-ocr

RUN apt-get install -y poppler-utils

FROM base AS final

ENV LC_ALL=C.UTF-8
ENV LANG=C.UTF-8

# ENV (rather than `RUN export`) so the values persist in the final image;
# variables exported inside a RUN step are lost when that step finishes
ENV LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/nvidia/lib:/usr/local/nvidia/lib64:/usr/local/cuda/lib64:/usr/local/cuda-10.0/targets/x86_64-linux/lib:/usr/local/cuda-10.2/targets/x86_64-linux/lib:/usr/local/cuda-11/targets/x86_64-linux/lib:/usr/local/cuda-11.6/targets/x86_64-linux/lib/stubs:/usr/local/cuda-11.6/compat:/usr/local/cuda-11.6/targets/x86_64-linux/lib
ENV PATH=$PATH:/usr/local/cuda-11/bin

# Required for huggingface
RUN mkdir -p /nonexistent/
RUN mkdir -p /transformerscache/
RUN chown -R 1000:1000 /nonexistent
RUN chmod -R 777 /nonexistent
RUN chmod -R 777 /transformerscache

# Create the data directory and set permissions
RUN mkdir -p /serving/data
RUN chown -R 1000:1000 /serving/data
RUN chmod -R 777 /serving/data

# Create the temp directory for page images and set permissions
RUN mkdir -p /serving/data/temp
RUN chown -R 1000:1000 /serving/data/temp
RUN chmod -R 777 /serving/data/temp

# Create the LlamaHub loader download directory and set permissions
RUN mkdir -p /usr/local/lib/python3.11/site-packages/llama_index/download/llamahub_modules
RUN chown -R 1000:1000 /usr/local/lib/python3.11/site-packages/llama_index/download/llamahub_modules
RUN chmod -R 777 /usr/local/lib/python3.11/site-packages/llama_index/download/llamahub_modules

ENV TRANSFORMERS_CACHE=/transformerscache

COPY /serving /serving

CMD ["uvicorn", "app:api", "--host", "0.0.0.0", "--port", "8080"]
```

- Create a requirements.txt to manage all the dependencies as below

```text
transformers==4.36.2
tokenizers>=0.13.3
--find-links https://download.pytorch.org/whl/torch_stable.html
torch
fastapi
uvicorn
accelerate
huggingface_hub
onnxruntime
requests
chromadb==0.4.9
llama-index
sentence-transformers==2.1.0
PyPDF2==1.26.0
pysftp
# pytesseract and its dependencies
pillow
pytesseract
PyMuPDF
# pdf2image and its dependencies
pdf2image
```

- Now let’s create a subfolder template and in this folder create a transformers.yaml with below code

```yaml
apiVersion: ai.sap.com/v1alpha1
kind: ServingTemplate
metadata:
  name: transformers
  annotations:
    scenarios.ai.sap.com/description: "zephyr"
    scenarios.ai.sap.com/name: "zephyr"
    executables.ai.sap.com/description: "zephyr"
    executables.ai.sap.com/name: "zephyr"
  labels:
    scenarios.ai.sap.com/id: "zephyr"
    ai.sap.com/version: "1.0"
spec:
  template:
    apiVersion: "serving.kserve.io/v1beta1"
    metadata:
      annotations: |
        autoscaling.knative.dev/metric: concurrency
        autoscaling.knative.dev/target: 1
        autoscaling.knative.dev/targetBurstCapacity: 0
      labels: |
        ai.sap.com/resourcePlan: infer.l
    spec: |
      predictor:
        imagePullSecrets:
          - name: dockerpk
        minReplicas: 1
        maxReplicas: 5
        containers:
        - name: kserve-container
          image: docker.io/purankhoeval/zephyr:01
          ports:
            - containerPort: 8080
              protocol: TCP
```

- Create another folder called serving; inside it we are going to create two files, app.py and worker_model.py

- In the app.py we are creating API definition using FastAPI and this API has entry points defined for each operation such as Data Ingestion and Query search

- In the worker_model.py we are implementing the handling functions of data ingestion, query search and results retrieval

- Data ingestion for RAG is a multistep process. It starts with reading the content from SFTP server directory, I have kept 3 files for this scenario, a remittance image, a text based pdf of 20 pages which is a subset of this document and one more 46 pages image based pdf which has remittance details. These documents will be loaded using LlamaIndex library functions and split into smaller chunks i.e. nodes in our case.

```python
# Code for worker_model.py
import json
import torch
import transformers
import traceback
import sys, os
import pysftp
import huggingface_hub
from pprint import pprint
from llama_index.node_parser import SentenceWindowNodeParser
from llama_index.embeddings import HuggingFaceEmbedding
from llama_index.schema import MetadataMode
from llama_index.postprocessor import MetadataReplacementPostProcessor
from llama_index.vector_stores import ChromaVectorStore, VectorStoreQuery
from llama_index import (
    VectorStoreIndex,
    SimpleDirectoryReader,
    StorageContext,
    ServiceContext,
    Document
)
from llama_index.llms import HuggingFaceInferenceAPI
import chromadb
from chromadb.utils import embedding_functions
from llama_index import download_loader
from pathlib import Path
import pytesseract
import pdf2image
from pdf2image import convert_from_path
import fitz  # PyMuPDF

transformers.utils.logging.set_verbosity_error()
transformers.utils.logging.disable_progress_bar()
os.environ["TRANSFORMERS_CACHE"] = "shared/IMR/llm2023/cache"

# Ingestion and query must use the same Chroma collection
CHROMA_COLLECTION_NAME = "multidocai"


def download_pdf_from_sftp(sftp_host, sftp_username, sftp_password, sftp_port, remote_path, local_path):
    cnopts = pysftp.CnOpts()
    cnopts.hostkeys = None  # Disable host key checking (not recommended for production)

    with pysftp.Connection(sftp_host, username=sftp_username, password=sftp_password, port=sftp_port, cnopts=cnopts) as sftp:
        # Create the local directory if it doesn't exist
        os.makedirs(os.path.dirname(local_path), exist_ok=True)
        remote_files = sftp.listdir(remote_path)
        # Download each file individually
        for remote_file in remote_files:
            remote_file_path = os.path.join(remote_path, remote_file)
            local_file_path = os.path.join(local_path, remote_file)
            sftp.get(remote_file_path, local_file_path)


# SFTP details
sftp_host = '<sftp_host>'
sftp_port = '<port>'  # replace with the integer port of your SFTP server
sftp_username = '<user>'
sftp_password = '<password>'
remote_pdf_path = '/upload/'
local_pdf_path = './data/'

# Download the files from SFTP
download_pdf_from_sftp(sftp_host, sftp_username, sftp_password, sftp_port, remote_pdf_path, local_pdf_path)

ImageReader = download_loader("ImageReader")
# OCR the images into plain text
loader = ImageReader(text_type="plain_text")

image_paths = []
documents = []


def is_text_based_pdf(pdf_path):
    try:
        # Open the PDF file
        pdf_document = fitz.open(pdf_path)
        # Iterate through each page and check for text
        for page_number in range(pdf_document.page_count):
            page = pdf_document[page_number]
            text = page.get_text()
            # If text is found on any page, it's likely a text-based PDF
            if text.strip():
                return True
        # No text found on any page, it might be an image-based PDF
        return False
    except Exception as e:
        # Handle exceptions (e.g., if the PDF is encrypted or malformed)
        print(f"Error checking PDF: {e}")
        return False


def process_pdf_file(pdf_path):
    # Check if the PDF is text-based or image-based
    if is_text_based_pdf(pdf_path):
        directory_reader = SimpleDirectoryReader(input_files=[pdf_path])
        # The reader returns one Document per PDF page; keep all of them
        # (the original version only kept the first page)
        for page_doc in directory_reader.load_data():
            documents.append(Document(doc_id=page_doc.id_, text=page_doc.text, metadata=page_doc.metadata))
    else:
        print("The PDF is image-based.")
        # Convert the PDF to images
        images = convert_from_path(pdf_path)
        # Save each page image to a file and OCR it with the ImageReader
        for i, image in enumerate(images):
            image_path = Path(f"./data/temp/page_{i}.png")
            image.save(image_path)
            image_paths.append(image_path)
            doc = loader.load_data(file=image_path)
            documents.extend(doc)


# Process files in the directory
def process_files_in_directory(directory_path):
    # Iterate through files in the directory
    for filename in os.listdir(directory_path):
        file_path = os.path.join(directory_path, filename)
        # Check file extension
        _, file_extension = os.path.splitext(filename)
        # Call the appropriate function based on the file type
        if file_extension.lower() in ['.jpg', '.jpeg', '.png']:
            documentsimg = loader.load_data(file_path)
            documents.extend(documentsimg)
        elif file_extension.lower() == '.pdf':
            process_pdf_file(file_path)


class Model:
    # NOTE: generator is never assigned in setup(), so predict() only works
    # once a callable has been stored here
    generator = None

    @staticmethod
    def setup():
        """model setup"""
        print("START LOADING SETUP ZEPHYR 7B", file=sys.stderr)
        device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
        model_name = "HuggingFaceH4/zephyr-7b-beta"
        HUB_TOKEN = "<hub_token>"
        huggingface_hub.login(token=HUB_TOKEN)
        llm = HuggingFaceInferenceAPI(
            model_name="HuggingFaceH4/zephyr-7b-beta", token=HUB_TOKEN
        )
        print("SETUP DONE", file=sys.stderr)

    @staticmethod
    def predict(prompt, args):
        """model prediction"""
        return Model.generator(prompt, args)

    @staticmethod
    def query(question):
        print("Question:", question)
        # Set up ChromaDB client and collection
        chroma_host = "<chroma host on kyma>"
        chroma_port = 8000
        chroma_client = chromadb.HttpClient(host=chroma_host, port=chroma_port)
        print('HEARTBEAT:', chroma_client.heartbeat())
        chroma_collection = chroma_client.get_collection(name=CHROMA_COLLECTION_NAME)

        HUB_TOKEN = "<your token>"
        huggingface_hub.login(token=HUB_TOKEN)
        llm = HuggingFaceInferenceAPI(
            model_name="HuggingFaceH4/zephyr-7b-beta", token=HUB_TOKEN
        )
        embed_model = HuggingFaceEmbedding(model_name="sentence-transformers/all-mpnet-base-v2", max_length=512)

        # set up ChromaVectorStore and load in data
        vector_store = ChromaVectorStore(chroma_collection=chroma_collection)
        ctx_sentence = ServiceContext.from_defaults(llm=llm, embed_model=embed_model)
        retrieved_sentence_index = VectorStoreIndex.from_vector_store(vector_store=vector_store, service_context=ctx_sentence)

        sentence_query_engine = retrieved_sentence_index.as_query_engine(
            similarity_top_k=5,
            verbose=True,
            # the target key defaults to `window` to match the node_parser's default
            node_postprocessors=[
                MetadataReplacementPostProcessor(target_metadata_key="window")
            ],
        )

        try:
            sentence_response = sentence_query_engine.query(question)
            # Check if the result is empty
            if not sentence_response:
                result_message = {"success": False, "message": "No results found."}
            else:
                # Extract relevant information from sentence_response
                extracted_info = {"response": sentence_response.response}
                result_message = {"success": True, "results": extracted_info}
            # Print the JSON representation
            print(json.dumps(result_message))
            # Return the result_message
            return result_message
        except Exception as e:
            error_message = {"success": False, "message": f"Error during query execution: {str(e)}"}
            print(json.dumps(error_message))
            traceback.print_exc()
            sys.exit(1)

    @staticmethod
    def DataIngestion():
        print("Data Ingestion Started")
        directory_path = "./data/"
        process_files_in_directory(directory_path)

        sentence_node_parser = SentenceWindowNodeParser.from_defaults(
            window_size=3,
            window_metadata_key="window",
            original_text_metadata_key="original_text"
        )
        nodes = sentence_node_parser.get_nodes_from_documents(documents)

        HUB_TOKEN = "<your token>"
        huggingface_hub.login(token=HUB_TOKEN)
        llm = HuggingFaceInferenceAPI(
            model_name="HuggingFaceH4/zephyr-7b-beta", token=HUB_TOKEN
        )

        chroma_host = "<chroma host on kyma>"
        chroma_port = 8000
        chroma_client = chromadb.HttpClient(host=chroma_host, port=chroma_port)
        # chroma_client.delete_collection(name=CHROMA_COLLECTION_NAME)
        chroma_collection = chroma_client.get_or_create_collection(name=CHROMA_COLLECTION_NAME)

        embed_model = HuggingFaceEmbedding(model_name="sentence-transformers/all-mpnet-base-v2", max_length=512)
        vector_store = ChromaVectorStore(chroma_collection=chroma_collection)
        ctx_sentence = ServiceContext.from_defaults(llm=llm, embed_model=embed_model, node_parser=sentence_node_parser)
        storage_context = StorageContext.from_defaults(vector_store=vector_store)
        # Building the index embeds every node and writes it to ChromaDB
        sentence_index = VectorStoreIndex(nodes, service_context=ctx_sentence, storage_context=storage_context)


if __name__ == "__main__":
    # for local testing
    Model.setup()
    print(Model.predict("Hello, who are you?", {}))
```
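The file-type routing that worker_model.py performs during ingestion can be summarised in a small, dependency-free sketch. The function `choose_loader` and its return labels are hypothetical names used for illustration; the `pdf_has_text` flag stands in for the result of `is_text_based_pdf`.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def choose_loader(filename: str, pdf_has_text: bool = False) -> str:
    """Return a label for the ingestion path a file would take."""
    suffix = Path(filename).suffix.lower()
    if suffix in IMAGE_SUFFIXES:
        return "ocr_image"  # OCR the image directly
    if suffix == ".pdf":
        # Text PDFs are read as-is; scanned PDFs are rendered to images, then OCR'd.
        return "pdf_text" if pdf_has_text else "pdf_to_images_then_ocr"
    return "skipped"  # other file types are not handled by the code above


for name, has_text in [("remit.PNG", False), ("llama.pdf", True), ("scan.pdf", False), ("notes.txt", False)]:
    print(name, "->", choose_loader(name, has_text))
```

As the last case shows, plain text files fall through both branches in the code as written, so supporting them would need an additional branch.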

- We are ready to create a docker image. In the below code replace <your_username> with the actual docker hub username

```shell
docker build -t <your_username>/zephyr:01 -f Dockerfile.customgpu .

docker push <your_username>/zephyr:01
```

- Now push the entire project to GitHub. We will create an AI application from the serving template in SAP AI Core using the GitHub repository and a Docker registry secret. Detailed steps to set up the repository and create an AI application can be found in this article and deployment related tutorial

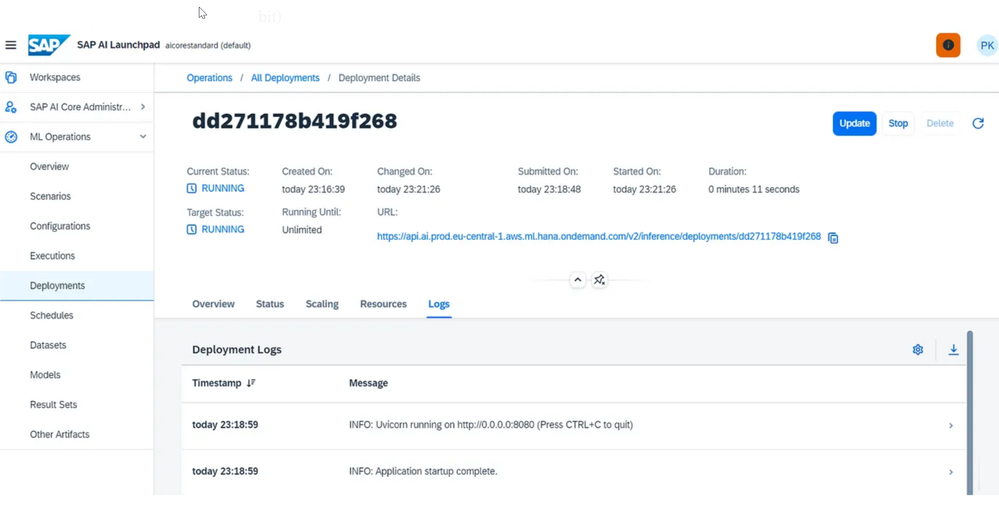

- Once you have deployed successfully, SAP AI Core will generate an inference API URL to run the LLM predictions (URL generation takes a while)

- You can test this inference endpoint using Postman; just remember to set the bearer token for OAuth authentication using the client ID and client secret of the AI Core service.
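As an alternative to Postman, the two requests involved can be sketched with Python's standard library. This is a sketch under assumptions: the helper names are hypothetical, the token path `/oauth/token`, the `ai-resource-group: default` header, and the `/v2/query` route mirror what server.js uses later in this post, and the example URLs are placeholders. The functions only build the requests; nothing is sent until you pass them to `urllib.request.urlopen`.

```python
import base64
import json
import urllib.parse
import urllib.request


def build_token_request(token_url: str, client_id: str, client_secret: str) -> urllib.request.Request:
    """Client-credentials token request using HTTP Basic authentication."""
    credentials = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    body = urllib.parse.urlencode({"grant_type": "client_credentials"}).encode()
    return urllib.request.Request(
        f"{token_url}/oauth/token",
        data=body,
        headers={
            "Authorization": f"Basic {credentials}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


def build_query_request(deployment_url: str, token: str, prompt: str) -> urllib.request.Request:
    """Inference request against the deployment, authorised with the bearer token."""
    payload = json.dumps({"prompt": prompt, "args": {}}).encode()
    return urllib.request.Request(
        f"{deployment_url}/v2/query",
        data=payload,
        headers={
            "Authorization": f"Bearer {token}",
            "ai-resource-group": "default",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values; take the real ones from your AI Core service key.
token_req = build_token_request("https://auth.example", "id", "secret")
print(token_req.full_url, token_req.get_method())
```

The access token is read from the `access_token` field of the token response and then passed to `build_query_request`.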

5. Let’s move on to the last phase of this post to create a Next JS UI project which can call this AI inference endpoint to generate results for us. While client side is handled through Next JS, the server side requests are going to be handled by Node JS

- Back to the VS code project zephyr-on-ai-core, inside this parent folder let’s create a Next JS app using the terminal command

```shell
npx create-next-app ragui

cd ragui

npm run dev
```

- This will launch your Next.js app, and you can view it in your browser at http://localhost:3000.

- Inside ragui there should be a folder app and inside that a file page.tsx; modify this file with the following code. It creates a basic chat app container where the user can enter a query and trigger the response retrieval by clicking the Send Message button. An additional Start Data Ingestion button triggers the data ingestion process: it runs the logic that reads the files located on the SFTP server and, for each of these files, creates nodes using the LlamaIndex sentence window node parser.

```tsx
"use client"; // This is a client component

import React, { useState, useRef, useEffect } from 'react';

interface Message {
  text: string;
  user: string;
}

interface RenderMessageTextProps {
  text: string;
}

const ChatApp = () => {
  const [messages, setMessages] = useState<Message[]>([]);
  const [newMessage, setNewMessage] = useState<string>('');
  const [dataIngestionStatus, setDataIngestionStatus] = useState('');
  const messagesContainerRef = useRef<HTMLDivElement>(null);

  const renderMessageText = ({ text }: RenderMessageTextProps) => {
    const formattedText = text.replace(/\n/g, '<br>');
    return <span dangerouslySetInnerHTML={{ __html: formattedText }} />;
  };

  const handleStartDataIngestion = async () => {
    try {
      const messageData = {};
      const response = await fetch('/api/startDataIngestion', {
        method: 'POST',
        headers: {
          'Content-Type': 'application/json',
        },
        body: JSON.stringify(messageData),
      });

      if (response.ok) {
        setDataIngestionStatus('Data ingestion successful');
      } else {
        setDataIngestionStatus('Data ingestion failed');
      }
    } catch (error) {
      console.error('Error starting data ingestion:', error);
      setDataIngestionStatus('Internal server error');
    }
  };

  const handlesendMessage = async () => {
    try {
      const userMessage = {
        text: newMessage,
        user: 'You',
      };
      setMessages((prevMessages) => [...prevMessages, userMessage]);

      const messageData = {
        message: newMessage,
      };
      console.log('Sending message:', newMessage);

      const response = await fetch('/api/runQuery', {
        method: 'POST',
        headers: {
          'Content-Type': 'application/json',
        },
        body: JSON.stringify(messageData),
      });

      if (response.ok) {
        const responseData = await response.json();
        const responseText = responseData.response?.response || '';
        setMessages((prevMessages) => [
          ...prevMessages,
          { text: responseText, user: 'AriMa' },
        ]);
      } else {
        setDataIngestionStatus('Failed to send message');
      }
    } catch (error) {
      console.error('Error sending message:', error);
      setDataIngestionStatus('Internal server error');
    }
  };

  // Function to scroll to the bottom of the messages container
  const scrollToBottom = () => {
    if (messagesContainerRef.current) {
      messagesContainerRef.current.scrollTop = messagesContainerRef.current.scrollHeight;
    }
  };

  // Scroll to the bottom on initial render and whenever messages change
  useEffect(() => {
    scrollToBottom();
  }, [messages]);

  return (
    <div className="flex flex-col items-center justify-between h-screen p-8 bg-gray-800 text-white">
      <div className="flex items-center justify-between w-full mb-8">
        <button
          onClick={handleStartDataIngestion}
          className="px-4 py-2 text-white bg-green-500 rounded-md focus:outline-none"
        >
          Start Data Ingestion
        </button>
      </div>
      <div className="flex flex-col items-center justify-end flex-1 w-full mb-8 overflow-y-auto p-4">
        <div ref={messagesContainerRef} className="flex flex-col">
          {messages.map((message, index) => (
            <div key={index} className="mb-2">
              <span className="text-gray-400">{message.user}:</span> {message.text}
            </div>
          ))}
        </div>
      </div>
      <div className="flex items-center justify-between w-full">
        <input
          type="text"
          value={newMessage}
          onChange={(e) => setNewMessage(e.target.value)}
          placeholder="Type a message..."
          className="flex-1 px-4 py-2 mr-2 text-black bg-white rounded-md focus:outline-none"
        />
        <button
          onClick={handlesendMessage}
          className="px-4 py-2 text-white bg-black rounded-md focus:outline-none"
        >
          Send Message
        </button>
      </div>
    </div>
  );
};

export default ChatApp;
```

Inside the app folder, create another folder api; in it, create a folder startDataIngestion containing a file route.ts. The code below for this API route handles the data ingestion process

```ts
// app/api/startDataIngestion/route.ts
import axios from 'axios';
import { NextResponse } from 'next/server';

export const POST = async (request: Request) => {
  try {
    const apiUrl = '< url >/api/DataIngestion';
    await axios.post(apiUrl);

    // You need to handle conversation storage or update here (use a database, state management, etc.)
    return NextResponse.json({ success: true, message: 'Data Ingestion Completed' });
  } catch (error) {
    console.error('Error with API request:', error);
    // Return a 500 so the UI's `response.ok` check reports the failure
    return NextResponse.json({ success: false, message: 'Internal server error' }, { status: 500 });
  }
}
```

Create another folder under api named runQuery, with a route.ts file inside it containing the code below. This code calls the Node JS API, which in turn runs the SAP AI Core inference API we deployed earlier with the query submitted from the UI

```ts
// app/api/runQuery/route.ts
import axios from 'axios';
import { NextResponse } from 'next/server';

interface Message {
  role: string;
  text: string;
}

export const POST = async (request: Request) => {
  try {
    const userInput = await request.json();
    const systemPrompt = "Answer with high precision";
    const fullPrompt = `<|system|>\n${systemPrompt}</s>\n<|user|>\n${userInput.message}</s>\n<|assistant|>`;
    // const fullPrompt = userInput.message;
    console.log(fullPrompt);

    const requestBody = {
      prompt: fullPrompt,
      args: {}
    };

    const apiUrl = '<url>/api/getResponse';
    const axiosRes = await axios.post(apiUrl, requestBody);
    const data = axiosRes.data;
    const generatedText = data;
    console.log('generated text:', generatedText);

    const newMessage: Message = {
      role: 'user',
      text: userInput.message, // the message string, not the whole request object
    };

    const newResponse: Message = {
      role: 'arima',
      text: generatedText,
    };

    // You need to handle conversation storage or update here (use a database, state management, etc.)
    return NextResponse.json({ success: true, response: generatedText });
  } catch (error) {
    console.error('Error with API request:', error);
    // Return a 500 so the UI's `response.ok` check reports the failure
    return NextResponse.json({ success: false, message: 'Internal server error' }, { status: 500 });
  }
}
```
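The prompt assembled in the route above follows Zephyr's chat layout of system, user, and assistant turns. For readers testing the endpoint from Python, the same string can be built as follows; the function name `build_zephyr_prompt` is a hypothetical helper, and the default system prompt is the one used in the route.

```python
def build_zephyr_prompt(user_message: str, system_prompt: str = "Answer with high precision") -> str:
    """Assemble the same prompt string as the runQuery route."""
    return (
        f"<|system|>\n{system_prompt}</s>\n"
        f"<|user|>\n{user_message}</s>\n"
        f"<|assistant|>"
    )


print(build_zephyr_prompt("What is supervised finetuning?"))
```

The trailing `<|assistant|>` tag is left open on purpose: it marks where the model's answer should begin.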

Kudos if you made it this far. Now onto the last part: implementing the server-side API handling.

Create a folder serverapi under the parent folder zephyr-on-ai-core and inside this a file server.js with below code

```javascript
const express = require('express');
const axios = require('axios');
const cors = require('cors');
const request = require('request-promise');

const app = express();
const port = process.env.PORT || 3006;

app.use(cors());

// Parse JSON in the request body
app.use(express.json());

app.post('/api/getResponse', async (req, res) => {
  try {
    // Get OAuth token (replace with your actual credentials)
    const fs = require('fs');

    // Specify the path to your service key file
    const serviceKeyPath = './config.json';

    // Read the content of the file
    const serviceKeyContent = fs.readFileSync(serviceKeyPath, 'utf8');
    const serviceKey = JSON.parse(serviceKeyContent);

    // Extract client ID and client secret
    const clientId = serviceKey.clientid;
    const clientSecret = serviceKey.clientsecret;

    const token = await getOAuthToken(clientId, clientSecret);

    // Make AI API call
    const response = await axios.post(
      'https://<url>/v2/inference/deployments/d87531155571d6e8/v2/query',
      req.body,
      {
        headers: {
          Authorization: `Bearer ${token}`,
          'ai-resource-group': 'default',
          'Content-Type': 'application/json',
        },
      }
    );

    // res.json(response.data);
    // res.json(response.results);
    const responseBody = response.data.results.response;
    res.status(200).json({ success: true, response: responseBody });
  } catch (error) {
    console.error('Error with API request:', error);
    res.status(500).json({ error: 'Internal Server Error' });
  }
});

app.post('/api/DataIngestion', async (req, res) => {
  try {
    // Get OAuth token (replace with your actual credentials)
    const fs = require('fs');

    // Specify the path to your service key file
    const serviceKeyPath = './config.json';

    // Read the content of the file
    const serviceKeyContent = fs.readFileSync(serviceKeyPath, 'utf8');
    const serviceKey = JSON.parse(serviceKeyContent);

    // Extract client ID and client secret
    const clientId = serviceKey.clientid;
    const clientSecret = serviceKey.clientsecret;

    const token = await getOAuthToken(clientId, clientSecret);

    // Make AI API call
    const response = await axios.post(
      'https://<url>/v2/inference/deployments/d87531155571d6e8/v2/DataIngestion',
      req.body,
      {
        headers: {
          Authorization: `Bearer ${token}`,
          'ai-resource-group': 'default',
          'Content-Type': 'application/json',
        },
      }
    );

    res.status(200).json({ success: 'Data Ingestion completed' });
  } catch (error) {
    console.error('Error with API request:', error);
    res.status(500).json({ error: 'Internal Server Error' });
  }
});

app.listen(port, () => {
  console.log(`Server is running on port ${port}`);
});

async function getOAuthToken(clientId, clientSecret) {
  const tokenUrl = '<token_url>/oauth/token';

  try {
    const credentials = Buffer.from(`${clientId}:${clientSecret}`).toString('base64');

    // Make a POST request to the token endpoint using request-promise
    const response = await request.post({
      uri: tokenUrl,
      form: {
        grant_type: 'client_credentials',
      },
      headers: {
        'Content-Type': 'application/x-www-form-urlencoded',
        'Authorization': `Basic ${credentials}`,
      },
      json: true, // Automatically parses the response body as JSON
    });

    // Check if the request returned a body
    if (response) {
      // Access token is available in the response data
      const accessToken = response.access_token;
      return accessToken;
    } else {
      // Print the error details if the request was not successful
      console.error(`Error: ${response.status} - ${response.error_description}`);
      return null;
    }
  } catch (error) {
    // Handle exceptions, e.g., network errors
    console.error(`Error: ${error.message}`);
    return null;
  }
}
```
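Because the answer passes through three layers before it reaches the chat window, it helps to trace how the JSON is wrapped and unwrapped along the way. The sketch below models each layer as a plain function. The function names are hypothetical, and it assumes the AI Core deployment returns the result of `Model.query` unchanged, since app.py is not shown in this post.

```python
def worker_result(answer: str) -> dict:
    """Shape returned by Model.query in worker_model.py."""
    return {"success": True, "results": {"response": answer}}


def express_layer(worker_json: dict) -> dict:
    """server.js: reads response.data.results.response and re-wraps it."""
    return {"success": True, "response": worker_json["results"]["response"]}


def next_route_layer(express_json: dict) -> dict:
    """runQuery route: forwards the whole Express body under `response`."""
    return {"success": True, "response": express_json}


def ui_extract(next_json: dict) -> str:
    """page.tsx: responseData.response?.response || ''."""
    return (next_json.get("response") or {}).get("response", "") or ""


final_text = ui_extract(next_route_layer(express_layer(worker_result("Supervised finetuning adapts a model."))))
print(final_text)
```

This explains why the UI reads `response.response`: the Next.js route nests the Express payload one level deeper instead of forwarding only the text.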

The package.json file for this Node JS server is as follows

```json
{
  "name": "server",
  "version": "1.0.0",
  "description": "",
  "main": "server.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "",
  "license": "ISC",
  "dependencies": {
    "axios": "^1.6.2",
    "cors": "^2.8.5",
    "express": "^4.18.2",
    "request-promise": "^4.2.6"
  }
}
```

Finally, the Cloud Foundry manifest file (manifest.yml, since its content is YAML) is as below

```yaml
---
applications:
  - name: aicoreapirag
    path: .
    memory: 128M
    disk_quota: 250M
```

Let’s push this node js api to SAP BTP cloud foundry runtime with below command

```shell
cd serverapi

cf login

cf push
```

Once the node js server side api is deployed successfully, we need to take the API URL from the BTP cockpit and use that URL in our frontend app code to call the server side api.

Now we are all set to push the Next JS app to cloud foundry with the below commands

```shell
cd ragui

npm run build

cf push
```

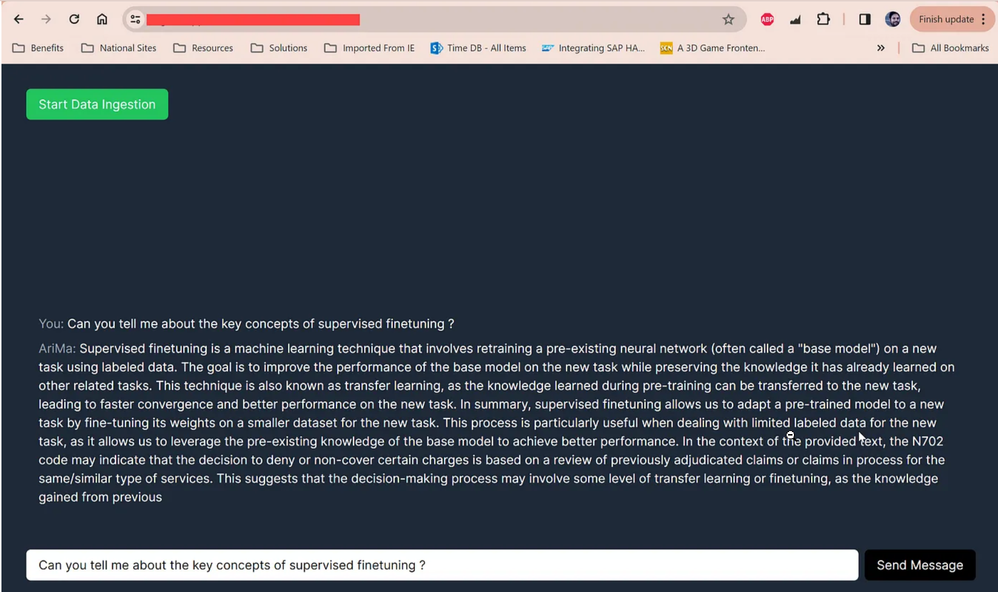

Let’s launch the chat app and ask it a query from the llama text pdf

Query 1: Can you tell me about the key concepts of supervised finetuning ?

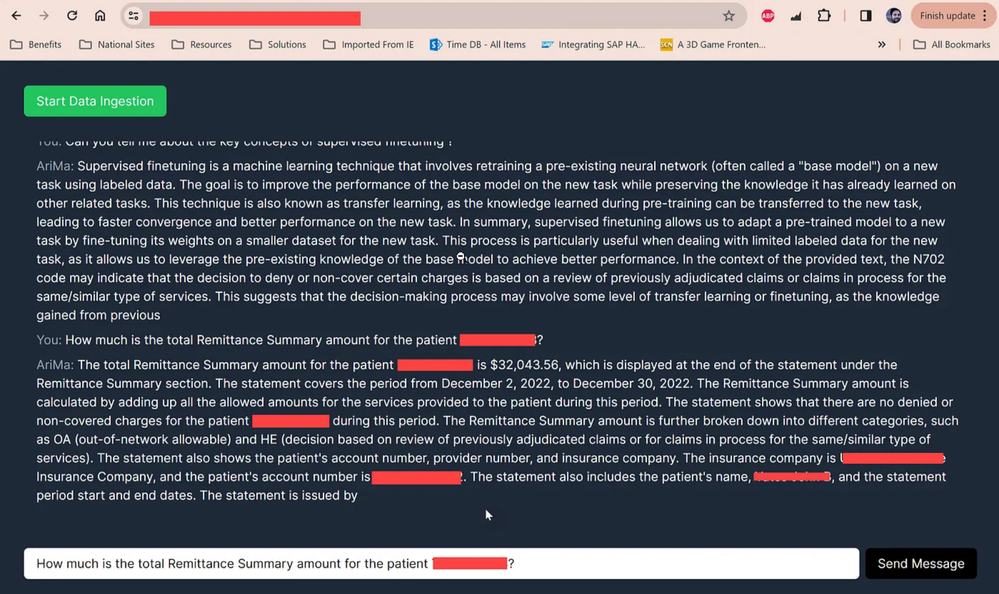

Next query is from image based pdf which contained itemized remittance summary for patients

Query 2 : How much is the total Remittance Summary amount for the patient <patient name>?

It’s a very basic chat UI, but it works and serves the purpose of relaying queries to the LLM running on SAP AI Core and ingesting data into the vector database. You can also test the “Start Data Ingestion” button; it works as well.

Thank you for reading this article. Try the code yourself and let me know if you have any feedback.

Advanced RAG references :

- https://towardsdatascience.com/advanced-rag-01-small-to-big-retrieval-172181b396d4

- https://pub.towardsai.net/advanced-rag-techniques-an-illustrated-overview-04d193d8fec6

I published this article originally on Medium during the transition of SAP Community blogs

- SAP Managed Tags:

- SAP BTP, Kyma runtime,

- SAP AI Core,

- SAP AI Launchpad
